回归预测 | MATLAB实现GWO-ELM灰狼算法优化极限学习机多输入单输出

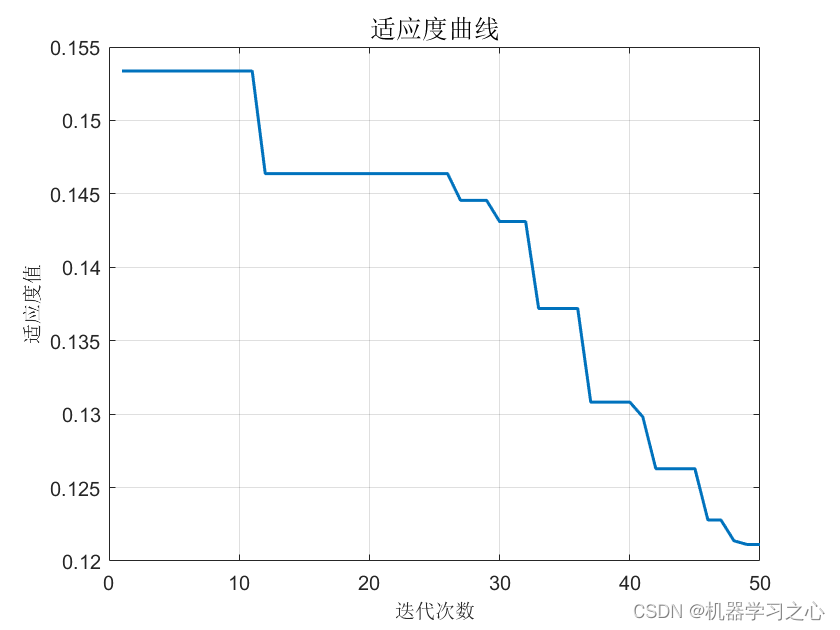

效果一览

基本介绍

MATLAB实现GWO-ELM灰狼算法优化极限学习机多输入单输出

优化参数为权值和阈值,自带数据为excel数据,多输入,单输出,回归预测。

灰狼优化算法(GWO)模拟了自然界灰狼的领导和狩猎层级,在狼群中存在四种角色,狼1负责领导是最具有智慧的在狩猎当中可以敏锐的知道猎物的位置,狼2可以认为是军师比较具有智慧比较能知道猎物的位置,狼3负责协助前两个层级的狼,最后是狼4负责跟从。

程序设计

- 完整程序和数据下载方式1(资源处直接下载):MATLAB实现GWO-ELM灰狼算法优化极限学习机多输入单输出

- 完整程序和数据下载方式2(订阅《智能学习》专栏,同时获取《智能学习》专栏收录程序6份,数据订阅后私信我获取):MATLAB实现GWO-ELM灰狼算法优化极限学习机多输入单输出

% Grey Wolf Optimizer

function [Best_pos,Best_score,curve]=GWO(SearchAgents_no,Max_iter,lb,ub,dim,fobj)

% initialize alpha, beta, and delta_pos

Best_pos=zeros(1,dim);

Best_score=inf; %change this to -inf for maximization problems

Beta_pos=zeros(1,dim);

Beta_score=inf; %change this to -inf for maximization problems

Delta_pos=zeros(1,dim);

Delta_score=inf; %change this to -inf for maximization problems

%Initialize the positions of search agents

Positions=initialization(SearchAgents_no,dim,ub,lb);

curve=zeros(1,Max_iter);

l=0;% Loop counter

% Main loop

while l<Max_iter

for i=1:size(Positions,1)

% Return back the search agents that go beyond the boundaries of the search space

Flag4ub=Positions(i,:)>ub;

Flag4lb=Positions(i,:)<lb;

Positions(i,:)=(Positions(i,:).*(~(Flag4ub+Flag4lb)))+ub.*Flag4ub+lb.*Flag4lb;

% Calculate objective function for each search agent

fitness=fobj(Positions(i,:));

% Update Alpha, Beta, and Delta

if fitness<Best_score

Best_score=fitness; % Update alpha

Best_pos=Positions(i,:);

end

if fitness>Best_score && fitness<Beta_score

Beta_score=fitness; % Update beta

Beta_pos=Positions(i,:);

end

if fitness>Best_score && fitness>Beta_score && fitness<Delta_score

Delta_score=fitness; % Update delta

Delta_pos=Positions(i,:);

end

end

a=2-l*((2)/Max_iter); % a decreases linearly fron 2 to 0

% Update the Position of search agents including omegas

for i=1:size(Positions,1)

for j=1:size(Positions,2)

r1=rand(); % r1 is a random number in [0,1]

r2=rand(); % r2 is a random number in [0,1]

A1=2*a*r1-a; % Equation (3.3)

C1=2*r2; % Equation (3.4)

D_alpha=abs(C1*Best_pos(j)-Positions(i,j)); % Equation (3.5)-part 1

X1=Best_pos(j)-A1*D_alpha; % Equation (3.6)-part 1

r1=rand();

r2=rand();

A2=2*a*r1-a; % Equation (3.3)

C2=2*r2; % Equation (3.4)

D_beta=abs(C2*Beta_pos(j)-Positions(i,j)); % Equation (3.5)-part 2

X2=Beta_pos(j)-A2*D_beta; % Equation (3.6)-part 2

r1=rand();

r2=rand();

A3=2*a*r1-a; % Equation (3.3)

C3=2*r2; % Equation (3.4)

D_delta=abs(C3*Delta_pos(j)-Positions(i,j)); % Equation (3.5)-part 3

X3=Delta_pos(j)-A3*D_delta; % Equation (3.5)-part 3

Positions(i,j)=(X1+X2+X3)/3;% Equation (3.7)

end

end

l=l+1;

curve(l)=Best_score;

end

学习总结

极限学习机,为人工智能机器学习领域中的一种人工神神经网络模型,是一种求解单隐层前馈神经网路的学习演算法。极限学习机是用于分类、回归、聚类、稀疏逼近、压缩和特征学习的前馈神经网络,具有单层或多层隐层节点,其中隐层节点的参数(不仅仅是将输入连接到隐层节点的权重)不需要被调整。这些隐层节点可以随机分配并且不必再更新(即它们是随机投影但具有非线性变换),或者可以从其祖先继承下来而不被更改。在大多数情况下,隐层节点的输出权重通常是一步学习的,这本质上相当于学习一个线性模型。

参考资料

[1] G.-B. Huang, Q.-Y. Zhu, and C.-K. Siew, “Extreme learning machine: A new learning scheme of feedforward neural networks,” in Proc. Int. Joint Conf. Neural Networks, July 2004, vol. 2, pp. 985–990.

[2] https://blog.csdn.net/kjm13182345320/article/details/127361354