Introduction to ResNet34

Definition

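The residual network (ResNet) is a convolutional neural network proposed by four researchers at Microsoft Research. It won the image classification and object detection tracks of the 2015 ImageNet Large Scale Visual Recognition Challenge (ILSVRC). ResNet is easy to optimize and can gain accuracy from considerably increased depth. Its residual blocks use skip connections (shortcuts), which mitigate the vanishing-gradient problem that appears as deep neural networks get deeper.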
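To see why a shortcut helps gradients, a block can be written as y = x + F(x). By the chain rule, the derivative of its output with respect to its input is 1 + F'(x), so the identity path always contributes a term that does not shrink. The toy calculation below is only a scalar sketch under that assumption: the per-layer derivative `f_prime` and both helper names are illustrative and do not come from the code in this article. Real networks have matrix-valued Jacobians, nonlinearities, and batch normalization, so the numbers are qualitative only.

```python
# Toy scalar model (an assumption for illustration, not a real network):
# every layer F has the same small local derivative f_prime.
#   plain stack:    x_{k+1} = F(x_k)        -> d x_n / d x_0 = f_prime ** n
#   residual stack: x_{k+1} = x_k + F(x_k)  -> d x_n / d x_0 = (1 + f_prime) ** n


def plain_gradient(f_prime: float, depth: int) -> float:
    """Gradient through `depth` stacked layers without shortcuts."""
    grad = 1.0
    for _ in range(depth):
        grad *= f_prime
    return grad


def residual_gradient(f_prime: float, depth: int) -> float:
    """Gradient through `depth` stacked layers that each add their input back."""
    grad = 1.0
    for _ in range(depth):
        grad *= 1.0 + f_prime
    return grad


for depth in (4, 16, 34):
    print(depth, plain_gradient(0.1, depth), residual_gradient(0.1, depth))
```

In this toy setting the plain stack's gradient collapses toward zero as depth grows, while the residual stack's gradient never falls below 1 because of the identity term.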

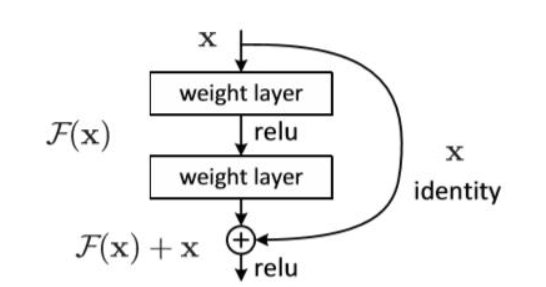

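Apart from the initial convolution and pooling layers and the final pooling and fully connected layers, a deep residual network (ResNet) consists of many structurally similar units. What these repeated units have in common is a shortcut that connects directly across layers; each such unit is called a Residual Block. The structure of a Residual Block is shown below (the curve labeled "x identity" in the figure is the shortcut):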

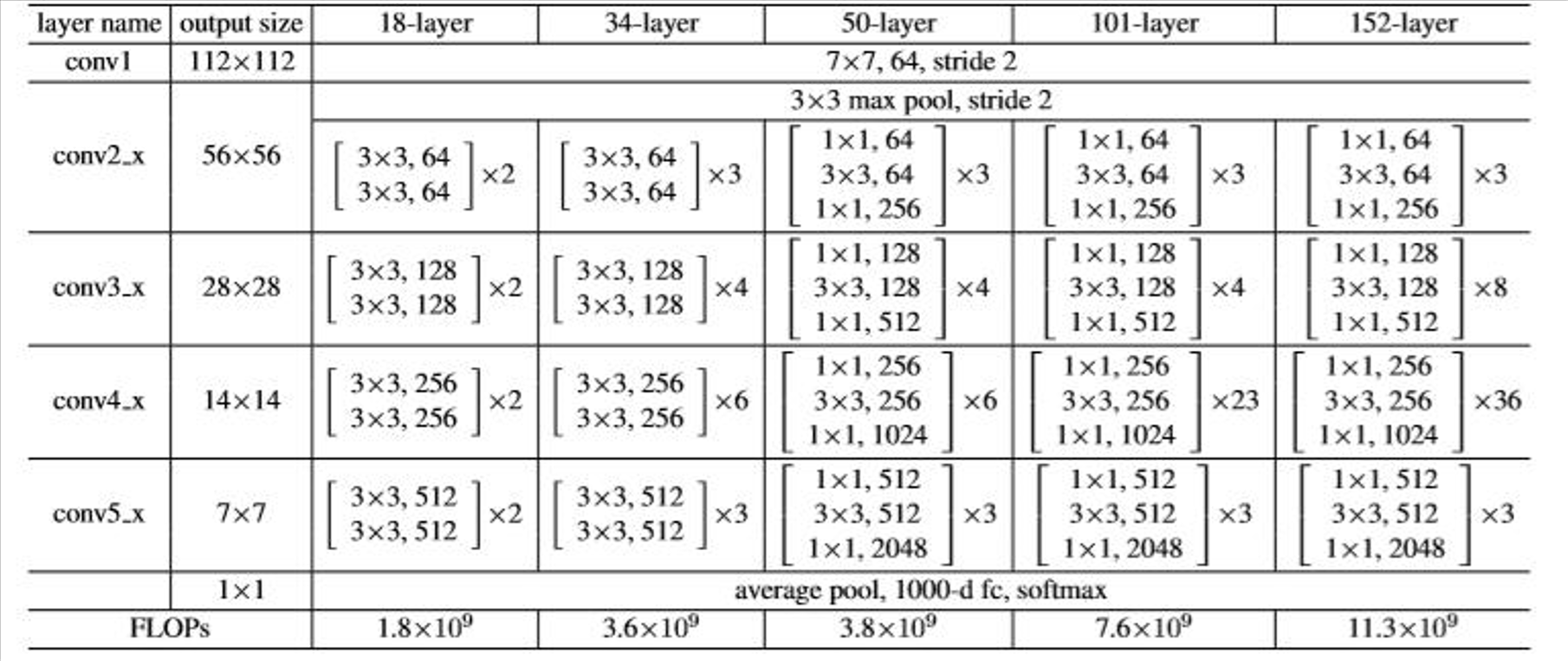

Network architecture diagram
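The "34" in the name counts weighted layers. The short sketch below reproduces that count from the stage layout used in this article's resnet.py listing (3, 4, 6 and 3 basic blocks with two 3x3 convolutions each). The names `STAGES` and `count_weighted_layers` are hypothetical helpers introduced here for illustration. The count assumes the usual convention of excluding the 1x1 convolutions on the shortcuts.

```python
# (output channels, number of residual blocks) per stage, as in resnet.py
STAGES = [(64, 3), (128, 4), (256, 6), (512, 3)]
CONVS_PER_BLOCK = 2  # each basic block stacks two 3x3 convolutions


def count_weighted_layers(stages, convs_per_block=CONVS_PER_BLOCK):
    """Count the stem conv, the convs inside residual blocks, and the FC layer."""
    stem = 1  # initial 7x7 convolution
    head = 1  # final fully connected layer
    body = sum(blocks * convs_per_block for _, blocks in stages)
    return stem + body + head


print(count_weighted_layers(STAGES))  # 34
```

With two blocks per stage, the same formula gives 18. This is why the block counts in the code correspond to ResNet-34 even though the class there is named `ResNet18`.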

The CIFAR10 dataset

- This dataset was already introduced in another article, so it is not covered again here; the focus is on the code implementation.

Code implementation

resnet.py

    import torch
    from torch import nn
    from torch.nn import functional as F


    class ResBlk(nn.Module):
        """Basic residual block: two 3x3 convolutions plus a shortcut."""

        def __init__(self, ch_in, ch_out, stride=1):
            super(ResBlk, self).__init__()

            self.conv1 = nn.Conv2d(ch_in, ch_out, kernel_size=3, stride=stride, padding=1)
            self.bn1 = nn.BatchNorm2d(ch_out)
            self.conv2 = nn.Conv2d(ch_out, ch_out, kernel_size=3, stride=1, padding=1)
            self.bn2 = nn.BatchNorm2d(ch_out)

            # Identity shortcut by default
            self.extra = nn.Sequential()
            if stride != 1 or ch_out != ch_in:
                # [b, ch_in, h, w] -> [b, ch_out, h / stride, w / stride]
                # The 1x1 conv uses the same stride as conv1 so that both
                # branches have the same shape when they are added.
                self.extra = nn.Sequential(
                    nn.Conv2d(ch_in, ch_out, kernel_size=1, stride=stride),
                    nn.BatchNorm2d(ch_out),
                )

        def forward(self, x):
            # x: [b, ch_in, h, w]
            out = F.relu(self.bn1(self.conv1(x)))
            out = self.bn2(self.conv2(out))
            # shortcut
            out = self.extra(x) + out
            # The standard ResNet block applies ReLU after the addition;
            # it is left disabled here, as in the original code.
            # out = F.relu(out)
            return out


    class ResNet34(nn.Module):
        """ResNet-34 layout: four stages with 3, 4, 6 and 3 residual blocks."""

        def __init__(self, block=ResBlk):
            super(ResNet34, self).__init__()

            # Stem: [b, 3, 32, 32] -> [b, 64, 16, 16]
            self.conv1 = nn.Sequential(
                nn.Conv2d(3, 64, kernel_size=7, stride=2, padding=3),
                nn.BatchNorm2d(64),
                nn.ReLU(),
                nn.MaxPool2d(kernel_size=3, stride=1, padding=1)
            )
            # followed by 4 stages
            # [b, 64, 16, 16] -> [b, 64, 16, 16]
            self.blk1 = nn.Sequential(
                block(64, 64, 1),
                block(64, 64, 1),
                block(64, 64, 1)
            )
            # [b, 64, 16, 16] -> [b, 128, 8, 8]
            self.blk2 = nn.Sequential(
                block(64, 128, 2),
                block(128, 128, 1),
                block(128, 128, 1),
                block(128, 128, 1),
            )
            # [b, 128, 8, 8] -> [b, 256, 4, 4]
            self.blk3 = nn.Sequential(
                block(128, 256, 2),
                block(256, 256, 1),
                block(256, 256, 1),
                block(256, 256, 1),
                block(256, 256, 1),
                block(256, 256, 1),
            )
            # [b, 256, 4, 4] -> [b, 512, 2, 2]
            self.blk4 = nn.Sequential(
                block(256, 512, 2),
                block(512, 512, 1),
                block(512, 512, 1),
            )
            # [b, 512, 2, 2] -> [b, 512, 1, 1]
            self.avg_pool = nn.AvgPool2d(kernel_size=2)
            self.outlayer = nn.Linear(512, 10)

        def forward(self, x):
            # conv1 already ends with ReLU and max pooling,
            # so no extra activation is needed here.
            x = self.conv1(x)
            x = self.blk1(x)
            x = self.blk2(x)
            x = self.blk3(x)
            x = self.blk4(x)
            x = self.avg_pool(x)
            # [b, 512, 1, 1] -> [b, 512]
            x = x.view(x.size(0), -1)
            x = self.outlayer(x)
            return x


    # The class was originally named ResNet18 although its layout is ResNet-34;
    # this alias keeps existing imports such as the one in CNN.py working.
    ResNet18 = ResNet34


    def main():
        # blk = ResBlk(64, 128, 1)
        # tmp = torch.randn(2, 64, 32, 32)
        # out = blk(tmp)
        # # torch.Size([2, 128, 32, 32])
        # print('blk:', out.shape)

        model = ResNet34(ResBlk)
        tmp = torch.randn(2, 3, 32, 32)
        out = model(tmp)
        # Expected: torch.Size([2, 10])
        print('resnet:', out.shape)


    if __name__ == '__main__':
        main()
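The spatial sizes in the network above can be checked by hand with the usual output-size formula for convolution and pooling layers, floor((size + 2 * padding - kernel) / stride) + 1. The sketch below applies it to the layer settings in resnet.py for a 32x32 CIFAR10 image. `conv_out`, `STAGES` and `trace_shapes` are hypothetical helper names introduced only for this illustration, and the code needs nothing beyond the standard library.

```python
def conv_out(size, kernel, stride, padding):
    """Side length after a convolution or pooling layer (floor rounding)."""
    return (size + 2 * padding - kernel) // stride + 1


# (output channels, stride of the first block) for the four stages in resnet.py
STAGES = [(64, 1), (128, 2), (256, 2), (512, 2)]


def trace_shapes(size=32):
    """Return (layer name, channels, side length) for a square input image."""
    shapes = [("input", 3, size)]
    size = conv_out(size, kernel=7, stride=2, padding=3)
    shapes.append(("stem conv 7x7", 64, size))
    size = conv_out(size, kernel=3, stride=1, padding=1)
    shapes.append(("max pool 3x3", 64, size))
    for index, (channels, stride) in enumerate(STAGES, start=1):
        # Only the first 3x3 conv of a stage can change the resolution;
        # the remaining convs use stride 1 and padding 1 and preserve it.
        size = conv_out(size, kernel=3, stride=stride, padding=1)
        shapes.append((f"blk{index}", channels, size))
    # AvgPool2d(kernel_size=2) uses a stride equal to the kernel size by default
    size = conv_out(size, kernel=2, stride=2, padding=0)
    shapes.append(("avg pool 2x2", 512, size))
    return shapes


for name, channels, side in trace_shapes(32):
    print(f"{name:14s} [{channels}, {side}, {side}]")
```

For a 32x32 input the trace ends at 512 channels of size 1x1, which is what `nn.Linear(512, 10)` expects after flattening. A 64x64 input would end at 2x2, giving 2048 flattened features, so the fixed `AvgPool2d(kernel_size=2)` ties this model to 32x32 images.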

CNN.py

    import torch
    from torch.utils.data import DataLoader
    from torchvision import datasets
    from torch import nn, optim
    from torchvision import transforms
    # from lenet5 import Lenet5
    # Note: the class is called ResNet18 in resnet.py, but its layout is ResNet-34.
    from resnet import ResNet18, ResBlk
    import matplotlib.pyplot as plt


    def main():
        batchsz = 32
        # Load the CIFAR10 dataset
        cifar_train = datasets.CIFAR10('cifar', True, transform=transforms.Compose([
            transforms.Resize([32, 32]),
            transforms.ToTensor()
        ]), download=True)
        cifar_train = DataLoader(cifar_train, batch_size=batchsz, shuffle=True)

        cifar_test = datasets.CIFAR10('cifar', False, transform=transforms.Compose([
            transforms.Resize([32, 32]),
            transforms.ToTensor()
        ]), download=True)
        # Shuffling is not needed for evaluation
        cifar_test = DataLoader(cifar_test, batch_size=batchsz, shuffle=False)

        # Sanity check: fetch one batch
        # (iter(...).next() only worked in older versions; use the built-in next())
        x, label = next(iter(cifar_train))
        print('x:', x.shape, 'label:', label.shape)

        # Fall back to the CPU when no GPU is available
        device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')
        # model = Lenet5().to(device)
        model = ResNet18(ResBlk).to(device)
        criteon = nn.CrossEntropyLoss().to(device)
        optimizer = optim.Adam(model.parameters(), lr=1e-3)
        print(model)

        train_result = []
        test_result = []
        for epoch in range(100):
            model.train()
            for batchidx, (x, label) in enumerate(cifar_train):
                # print(batchidx)
                x, label = x.to(device), label.to(device)
                # logits: [b, 10]
                # label: [b]
                # loss: tensor scalar
                logits = model(x)
                loss = criteon(logits, label)

                # backprop
                optimizer.zero_grad()
                loss.backward()
                optimizer.step()

            # End of one epoch; note that this is the loss of the last batch only
            print(epoch, "loss = ", loss.item())
            train_result.append(loss.item())

            model.eval()
            with torch.no_grad():
                # test
                total_correct = 0
                total_num = 0
                for x, label in cifar_test:
                    x, label = x.to(device), label.to(device)
                    logits = model(x)
                    # Index of the largest logit is the predicted class
                    pred = logits.argmax(dim=1)
                    # [b] vs [b] -> scalar tensor
                    total_correct += torch.eq(pred, label).float().sum().item()
                    total_num += x.size(0)

                acc = total_correct / total_num
                test_result.append(acc)
                print(epoch, acc)

        # print(train_result)
        # print(test_result)
        plt.plot(train_result, label="train_loss")
        plt.plot(test_result, label="test_acc")
        plt.legend()
        plt.savefig("picture", dpi=300)
        # plt.show()

        # with open("result.txt", 'a') as file:
        #     for line1, line2 in zip(train_result, test_result):
        #         file.write(str(line1) + '\n')
        #         file.write(str(line2) + '\n')


    if __name__ == '__main__':
        main()
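The evaluation step in CNN.py takes the index of the largest logit as the predicted class and divides the number of matches by the number of samples. The sketch below shows the same computation on plain Python lists so it can be run without PyTorch. The function name `accuracy` and the sample values are made up for illustration.

```python
def accuracy(logits, labels):
    """Fraction of rows whose largest logit sits at the labeled index."""
    correct = 0
    for row, label in zip(logits, labels):
        # Plain-Python counterpart of logits.argmax(dim=1) for one sample
        pred = max(range(len(row)), key=row.__getitem__)
        correct += int(pred == label)
    return correct / len(labels)


# Three hypothetical samples with two classes each
sample_logits = [[0.1, 0.9], [0.8, 0.2], [0.3, 0.7]]
sample_labels = [1, 0, 0]
print(accuracy(sample_logits, sample_labels))
```

Here the first two predictions match their labels and the third does not, so two of three samples are counted as correct.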

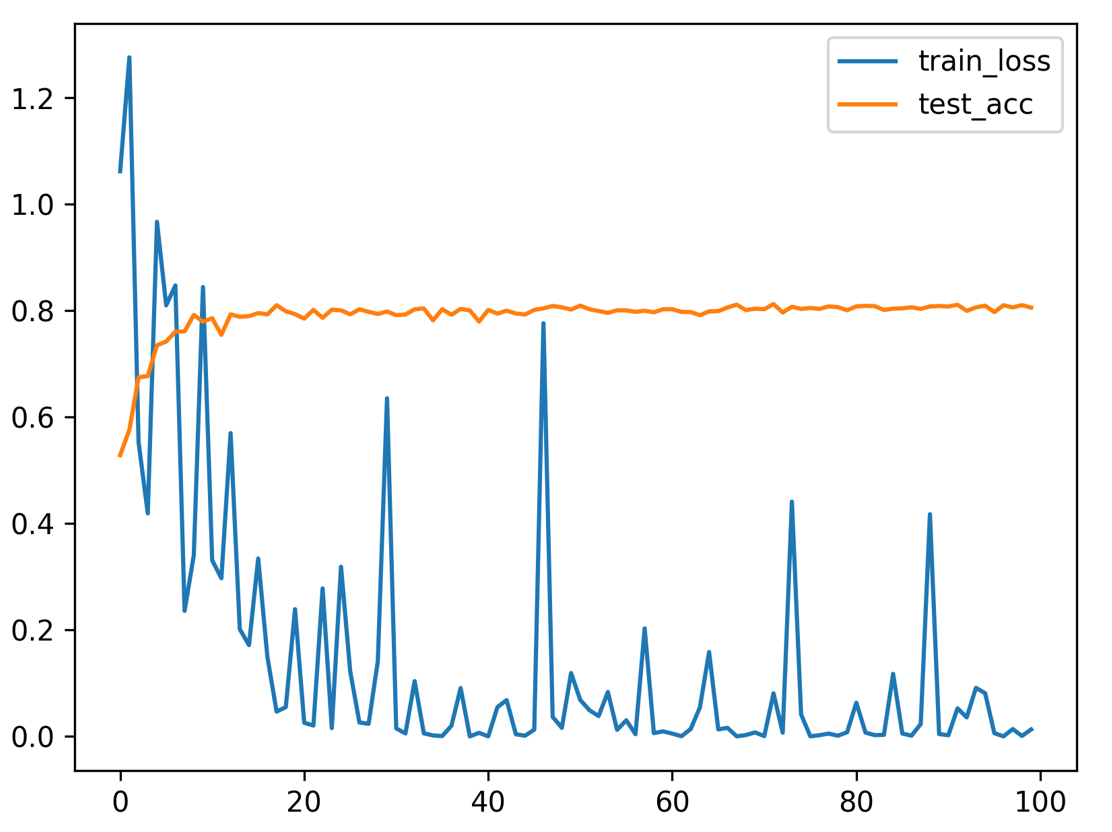

Results

Training ran for 100 epochs. Compared with the LeNet-5 network, ResNet achieves a much higher accuracy, reaching roughly 80% on the test set.