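In the previous article, we used common machine learning models for a simple hands-on task: classifying infant crying sounds. This article goes a step further. The main goal is to build a more general voiceprint (sound) classification model based on common deep learning models.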

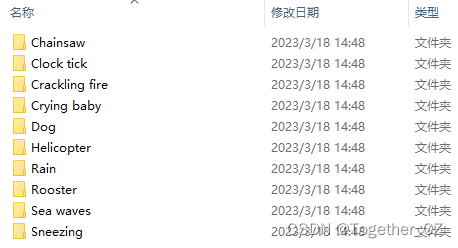

First, a brief look at the dataset. It comes from a public dataset and contains 10 different types of sounds:

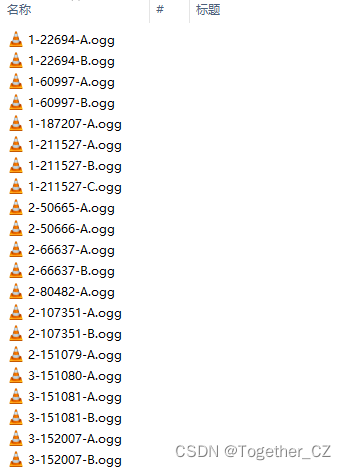

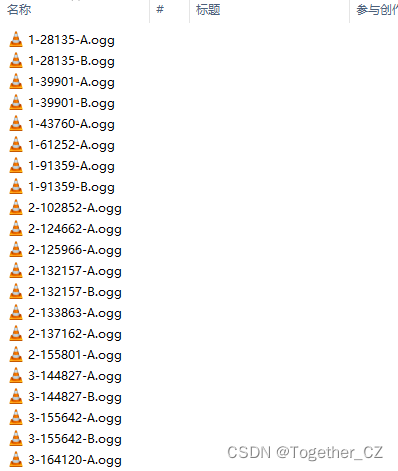

Here are samples from a few randomly chosen classes.

Chainsaw:

Crying baby:

Sea waves:

Feature extraction is similar to the previous article:

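```python
import json
import os

import librosa
import numpy as np

import feature  # helper module from the previous article providing mfcc() and logmel()

# dataDir (one sub-directory per class) and save_path are set earlier
features = []
labels = []

# Map each class directory name to an integer label
label_list = os.listdir(dataDir)
map_to_int = {name: i for i, name in enumerate(label_list)}

for one_label in label_list:
    oneDir = os.path.join(dataDir, one_label)
    for one_file in os.listdir(oneDir):
        one_path = os.path.join(oneDir, one_file)
        # Load the clip resampled to 44.1 kHz
        y, fs = librosa.load(one_path, sr=44100)
        # MFCC and log-mel features, concatenated into one feature vector
        mfcc_feature = feature.mfcc(y, fs, 3)
        logmel_feature = feature.logmel(y, fs, 3)
        one_vec = np.hstack([mfcc_feature, logmel_feature]).tolist()
        features.append(one_vec)
        labels.append(map_to_int[one_label])

print("features_shape: ", np.array(features).shape)
print("labels_shape: ", np.array(labels).shape)

# Save features and integer labels as JSON
result = {"features": features, "labels": labels}
with open(save_path, "w") as f:
    json.dump(result, f)
```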
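Both models below are compiled with `categorical_crossentropy`, which expects one-hot targets, while the script above stores integer labels. Below is a minimal sketch of loading the JSON back and one-hot encoding the labels with NumPy. It assumes the `"features"`/`"labels"` layout written above; the helper name `load_dataset` and the synthetic demo file are hypothetical. Any reshaping the models need (for example a channel axis for `Conv2D`) depends on what the `feature` module returns, so it is not shown.

```python
import json
import os
import tempfile

import numpy as np


def load_dataset(path, nclass):
    """Read the JSON written by the extraction script and one-hot encode the labels.

    Hypothetical helper: assumes the file holds the "features" and "labels"
    keys produced above, with integer labels in range(nclass).
    """
    with open(path) as f:
        result = json.load(f)
    x = np.asarray(result["features"], dtype=np.float32)
    y_int = np.asarray(result["labels"], dtype=np.int64)
    # Row i of the identity matrix is the one-hot vector for class i
    y_onehot = np.eye(nclass, dtype=np.float32)[y_int]
    return x, y_int, y_onehot


# Demo on a tiny synthetic file: 3 samples, 4 features each, 10 classes
demo = {"features": [[0.1, 0.2, 0.3, 0.4]] * 3, "labels": [0, 2, 9]}
with tempfile.TemporaryDirectory() as tmp:
    demo_path = os.path.join(tmp, "demo.json")
    with open(demo_path, "w") as f:
        json.dump(demo, f)
    x, y_int, y_onehot = load_dataset(demo_path, nclass=10)

print(x.shape, y_onehot.shape)  # (3, 4) (3, 10)
```

Indexing an identity matrix with the label array is a compact NumPy idiom for one-hot encoding. Keras' `to_categorical` utility does the same job.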

With the data parsed and the features extracted, we can build the models. First, the CNN:

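```python
from tensorflow.keras import optimizers
from tensorflow.keras.layers import (Activation, BatchNormalization, Conv2D, Dense,
                                     Dropout, GlobalAvgPool2D, Input, MaxPool2D)
from tensorflow.keras.models import Model

# input_shape = (height, width, channels) of one sample; nclass = number of classes
input_ = Input(shape=input_shape)

# Conv1: 3x3 conv -> BN -> ReLU -> 2x2 max pooling (halves height and width)
x = Conv2D(64, kernel_size=(3, 3), strides=(1, 1), padding='same')(input_)
x = BatchNormalization()(x)
x = Activation('relu')(x)
x = MaxPool2D(pool_size=(2, 2), strides=(2, 2))(x)

# Conv2
x = Conv2D(128, kernel_size=(3, 3), strides=(1, 1), padding='same')(x)
x = BatchNormalization()(x)
x = Activation('relu')(x)
x = MaxPool2D(pool_size=(2, 2), strides=(2, 2))(x)

# Conv3
x = Conv2D(256, kernel_size=(3, 3), strides=(1, 1), padding='same')(x)
x = BatchNormalization()(x)
x = Activation('relu')(x)
x = MaxPool2D(pool_size=(2, 2), strides=(2, 2))(x)

# Conv4
x = Conv2D(512, kernel_size=(3, 3), strides=(1, 1), padding='same')(x)
x = BatchNormalization()(x)
x = Activation('relu')(x)
x = MaxPool2D(pool_size=(2, 2), strides=(2, 2))(x)

# GAP: average each of the 512 channels over the spatial dimensions
x = GlobalAvgPool2D()(x)

# Dense_1
x = Dense(1024, activation='relu')(x)
x = Dropout(0.5)(x)

# Dense_2: softmax over the classes
output_ = Dense(nclass, activation='softmax')(x)

model = Model(inputs=input_, outputs=output_)
model.summary()

# SGD with Nesterov momentum (current tf.keras: SGD(learning_rate=...), not sgd(lr=...))
sgd = optimizers.SGD(learning_rate=0.01, momentum=0.9, nesterov=True)
model.compile(loss='categorical_crossentropy', optimizer=sgd, metrics=['accuracy'])
```

The results are as follows: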
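For the CNN above, you can sanity-check the architecture by tracing output shapes and parameter counts by hand. The plain-Python sketch below follows Keras layer conventions. The helper `cnn_summary` is hypothetical. The demo input shape `(64, 64, 1)` is an assumed value for illustration, not the article's actual setting, and `nclass=10` matches the 10-class dataset.

```python
def cnn_summary(input_shape, nclass, filters=(64, 128, 256, 512), k=3, dense_units=1024):
    """Trace output shapes and parameter counts of the 4-block CNN (Keras conventions).

    Conv2D(padding='same', stride 1) keeps height and width; MaxPool2D(2, 2) with the
    default 'valid' padding maps H -> H // 2; BatchNormalization stores 4 values per
    channel (gamma, beta, moving mean, moving variance). Activation/Dropout add no parameters.
    """
    h, w, c = input_shape
    rows = []
    for i, f in enumerate(filters, start=1):
        conv_params = (k * k * c + 1) * f  # kernel weights plus one bias per filter
        bn_params = 4 * f
        if h < 2 or w < 2:
            raise ValueError("input too small for another 2x2 pooling step")
        h, w, c = h // 2, w // 2, f  # shape after the 2x2 max pooling
        rows.append((f"Conv{i}", (h, w, c), conv_params + bn_params))
    rows.append(("GAP", (c,), 0))
    rows.append(("Dense_1", (dense_units,), (c + 1) * dense_units))
    rows.append(("Dense_2", (nclass,), (dense_units + 1) * nclass))
    total = sum(p for _, _, p in rows)
    return rows, total


# Demo with an assumed input shape of 64x64 with 1 channel and 10 classes
rows, total = cnn_summary((64, 64, 1), nclass=10)
for name, shape, params in rows:
    print(name, shape, params)
print("total params:", total)  # total params: 2089226
```

`GlobalAvgPool2D` collapses each channel to a single value, so the parameter count depends only on the number of input channels. It does not depend on the input height and width. Changing the feature-map size leaves the dense head unchanged.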

Next, the LSTM:

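```python
from tensorflow.keras import optimizers
from tensorflow.keras.layers import LSTM, Activation, Dense, Dropout, Flatten, Input
from tensorflow.keras.models import Model

# input_shape = (timesteps, features_per_step); nclass = number of classes
inputs = Input(shape=input_shape)

# return_sequences=True keeps one 128-dim output per time step
X = LSTM(128, return_sequences=True)(inputs)
X = Activation('relu')(X)
X = Dropout(0.25)(X)

# Dense layers act on the last axis, i.e. independently at each time step
X = Dense(128)(X)
X = Activation('relu')(X)
X = Dropout(0.35)(X)
X = Dense(256)(X)
X = Activation('relu')(X)
X = Dropout(0.45)(X)

# Flatten (timesteps, 256) into one vector before the classifier
X = Flatten()(X)
outputs = Dense(nclass, activation='softmax')(X)

model = Model(inputs=[inputs], outputs=outputs)
model.summary()

# Same optimizer settings as the CNN
sgd = optimizers.SGD(learning_rate=0.01, momentum=0.9, nesterov=True)
model.compile(loss='categorical_crossentropy', optimizer=sgd, metrics=['accuracy'])
```

The results are as follows: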
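The same kind of bookkeeping helps with the LSTM model. Below is a plain-Python sketch under Keras conventions. A Keras `LSTM(units)` layer has four gates, each with input weights, recurrent weights and a bias. The helper `lstm_summary` is hypothetical. The demo input of 100 time steps with 40 features per step is an assumed value, not taken from the article.

```python
def lstm_summary(input_shape, nclass, units=128, dense1=128, dense2=256):
    """Trace shapes and parameter counts of the LSTM model (Keras conventions).

    LSTM: 4 * units * (input_dim + units + 1) parameters. With return_sequences=True
    it emits one vector per time step, so the following Dense layers act on the last
    axis of a (timesteps, features) tensor. Activation/Dropout add no parameters.
    """
    t, f = input_shape
    rows = [("LSTM", (t, units), 4 * units * (f + units + 1))]
    rows.append(("Dense_128", (t, dense1), (units + 1) * dense1))
    rows.append(("Dense_256", (t, dense2), (dense1 + 1) * dense2))
    rows.append(("Flatten", (t * dense2,), 0))
    rows.append(("Output", (nclass,), (t * dense2 + 1) * nclass))
    total = sum(p for _, _, p in rows)
    return rows, total


# Demo with an assumed input of 100 time steps x 40 features and 10 classes
lstm_rows, lstm_total = lstm_summary((100, 40), nclass=10)
for name, shape, params in lstm_rows:
    print(name, shape, params)
print("total params:", lstm_total)  # total params: 392074
```

Because of `Flatten`, the output layer's weight count grows linearly with the number of time steps. Doubling the clip length roughly doubles that layer. The CNN behaves differently, because its `GlobalAvgPool2D` layer makes the head independent of the input size.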

Next, I plan to try combining CNN and LSTM and to incorporate Attention, to see how these models perform on different datasets.