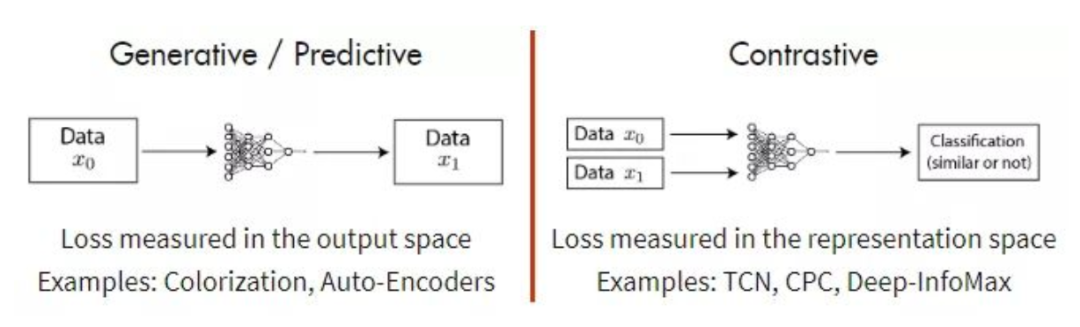

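Contrastive self-supervised learning is a promising approach that builds representations by learning to encode what makes two things similar or different. Many articles already introduce contrastive learning. Unlike generative self-supervised methods such as the AutoEncoder, which obtain an intermediate representation by reconstructing the input signal, contrastive methods define a metric in feature space and learn to discriminate between different kinds of inputs. They do not need to reconstruct the signal, yet they still make full use of the feature differences among unlabeled data.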
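The difference between the two families can be written down roughly as follows. This is a sketch under assumed notation that does not come from the original post: $f$ is the encoder, $g$ a decoder, $x^+$ a positive (similar) sample, $x^-_k$ the negative samples, $\operatorname{sim}$ a similarity measure such as cosine similarity, and $\tau$ a temperature. The contrastive term is the commonly used InfoNCE-style form; individual papers in the list below use their own variants.

$$
\mathcal{L}_{\text{reconstruct}} = \lVert x - g(f(x)) \rVert^2
\qquad
\mathcal{L}_{\text{contrast}} = -\log \frac{\exp\big(\operatorname{sim}(f(x), f(x^+))/\tau\big)}{\exp\big(\operatorname{sim}(f(x), f(x^+))/\tau\big) + \sum_{k} \exp\big(\operatorname{sim}(f(x), f(x^-_k))/\tau\big)}
$$

The first loss is measured in input space and requires a decoder; the second is measured only in feature space, which is why no reconstruction is needed.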

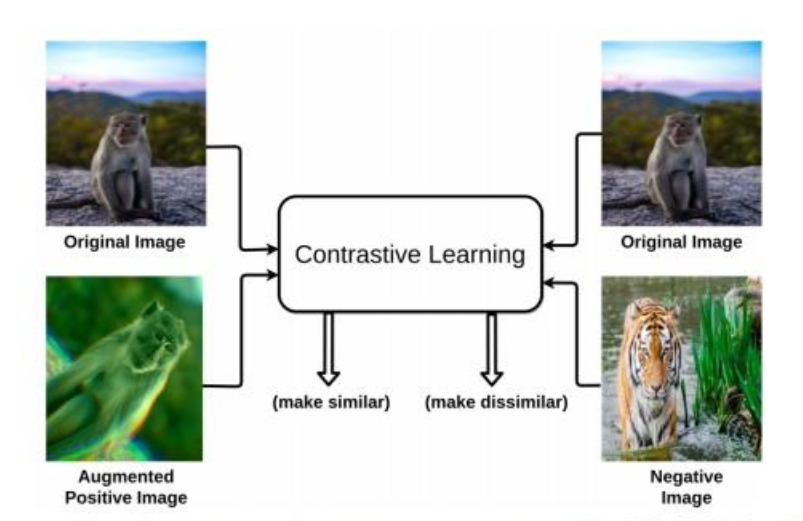

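Contrastive learning trains by simultaneously maximizing the agreement between differently transformed views of the same image (for example cropping, flipping, or color transformation) and minimizing the agreement between transformed views of different images. Put simply, the model should still recognize an image as the same image after any of these transformations, so the similarity between the transformed versions is maximized (they all come from one image). Conversely, for different images (even if they happen to look alike after transformation), the similarity is minimized. Through this contrastive training, the encoder learns higher-level, general-purpose features of the image (image-level representations) rather than a pixel-level generative model (pixel-level generation).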
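The objective described above can be sketched in a few lines of plain Python. This is a minimal illustration, not the implementation of any paper listed below: it assumes an NT-Xent-style loss with cosine similarity, and the names `nt_xent_loss`, `views_a`, `views_b`, and the default `temperature=0.5` are chosen here for illustration. The inputs stand in for encoder outputs, where `views_a[i]` and `views_b[i]` are embeddings of two transformed views of image `i`.

```python
import math


def normalize(v):
    """Scale a vector to unit length so that a dot product equals cosine similarity."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def nt_xent_loss(views_a, views_b, temperature=0.5):
    """Average contrastive loss over all 2N views (lists of equal-length vectors)."""
    n = len(views_a)
    z = [normalize(v) for v in views_a + views_b]  # 2N unit vectors
    total = 0.0
    for i in range(2 * n):
        positive = (i + n) % (2 * n)  # the other view of the same image
        # Scaled similarity to every other view; the view itself is excluded.
        logits = {
            j: sum(p * q for p, q in zip(z[i], z[j])) / temperature
            for j in range(2 * n)
            if j != i
        }
        log_denominator = math.log(sum(math.exp(s) for s in logits.values()))
        # Low when the positive pair is more similar than every negative pair.
        total += log_denominator - logits[positive]
    return total / (2 * n)


# Toy embeddings: four orthogonal vectors stand in for four different images.
identity = [[1.0 if r == c else 0.0 for c in range(4)] for r in range(4)]
shifted = identity[1:] + identity[:1]  # pairs each image with the wrong partner

aligned = nt_xent_loss(identity, identity)    # both views of an image agree
mismatched = nt_xent_loss(identity, shifted)  # "positives" are actually different images
print(aligned < mismatched)  # True
```

When the two views of each image agree, the loss is low; when each view is paired with a different image, it rises. A real training loop would compute the same quantity on encoder outputs with an autodiff framework and backpropagate through the encoder.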

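This resource collects must-read papers on contrastive unsupervised learning from the last few years, especially 2020, for anyone who wants to study the topic.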

2020

•Contrastive Representation Learning: A Framework and Review, Phuc H. Le-Khac

•Supervised Contrastive Learning, Prannay Khosla, 2020, [pytorch*]

•A Simple Framework for Contrastive Learning of Visual Representations, Ting Chen, 2020, [pytorch, tensorflow*]

•Improved Baselines with Momentum Contrastive Learning, Xinlei Chen, 2020, [tensorflow]

•Contrastive Representation Distillation, Yonglong Tian, ICLR-2020 [pytorch*]

•COBRA: Contrastive Bi-Modal Representation Algorithm, Vishaal Udandarao, 2020

•What makes for good views for contrastive learning, Yonglong Tian, 2020

•Prototypical Contrastive Learning of Unsupervised Representations, Junnan Li, 2020

•Contrastive Multi-View Representation Learning on Graphs, Kaveh Hassani, 2020

•DeCLUTR: Deep Contrastive Learning for Unsupervised Textual Representations, John M. Giorgi, 2020

•On Mutual Information in Contrastive Learning for Visual Representations, Mike Wu, 2020

•Semi-Supervised Contrastive Learning with Generalized Contrastive Loss and Its Application to Speaker Recognition, Nakamasa Inoue, 2020

2019

•Momentum Contrast for Unsupervised Visual Representation Learning, Kaiming He, 2019, [pytorch]

•Data-Efficient Image Recognition with Contrastive Predictive Coding, Olivier J. Hénaff, 2019

•Contrastive Multiview Coding, Yonglong Tian, 2019, [pytorch*]

•Learning deep representations by mutual information estimation and maximization, R Devon Hjelm, ICLR-2019, [pytorch]

•Contrastive Adaptation Network for Unsupervised Domain Adaptation, Guoliang Kang, CVPR-2019

2018

•Representation learning with contrastive predictive coding, Aaron van den Oord, 2018, [pytorch]

•Unsupervised Feature Learning via Non-Parametric Instance-level Discrimination, Zhirong Wu, CVPR-2018, [pytorch*]

•Adversarial Contrastive Estimation, Avishek Joey Bose, ACL-2018

2017

•Time-Contrastive Networks: Self-Supervised Learning from Video, Pierre Sermanet, CVPR-2017

•Contrastive Learning for Image Captioning, Bo Dai, NeurIPS-2017, [lua*]

Before 2017

•Noise-contrastive estimation for answer selection with deep neural networks, Jinfeng Rao, 2016, [torch]

•Improved Deep Metric Learning with Multi-class N-pair Loss Objective, Kihyuk Sohn, NeurIPS-2016, [pytorch]

•Learning word embeddings efficiently with noise-contrastive estimation, Andriy Mnih, NeurIPS-2013

•Noise-contrastive estimation: A new estimation principle for unnormalized statistical models, Michael Gutmann, AISTATS 2010, [pytorch]

•Dimensionality Reduction by Learning an Invariant Mapping, Raia Hadsell, 2006
