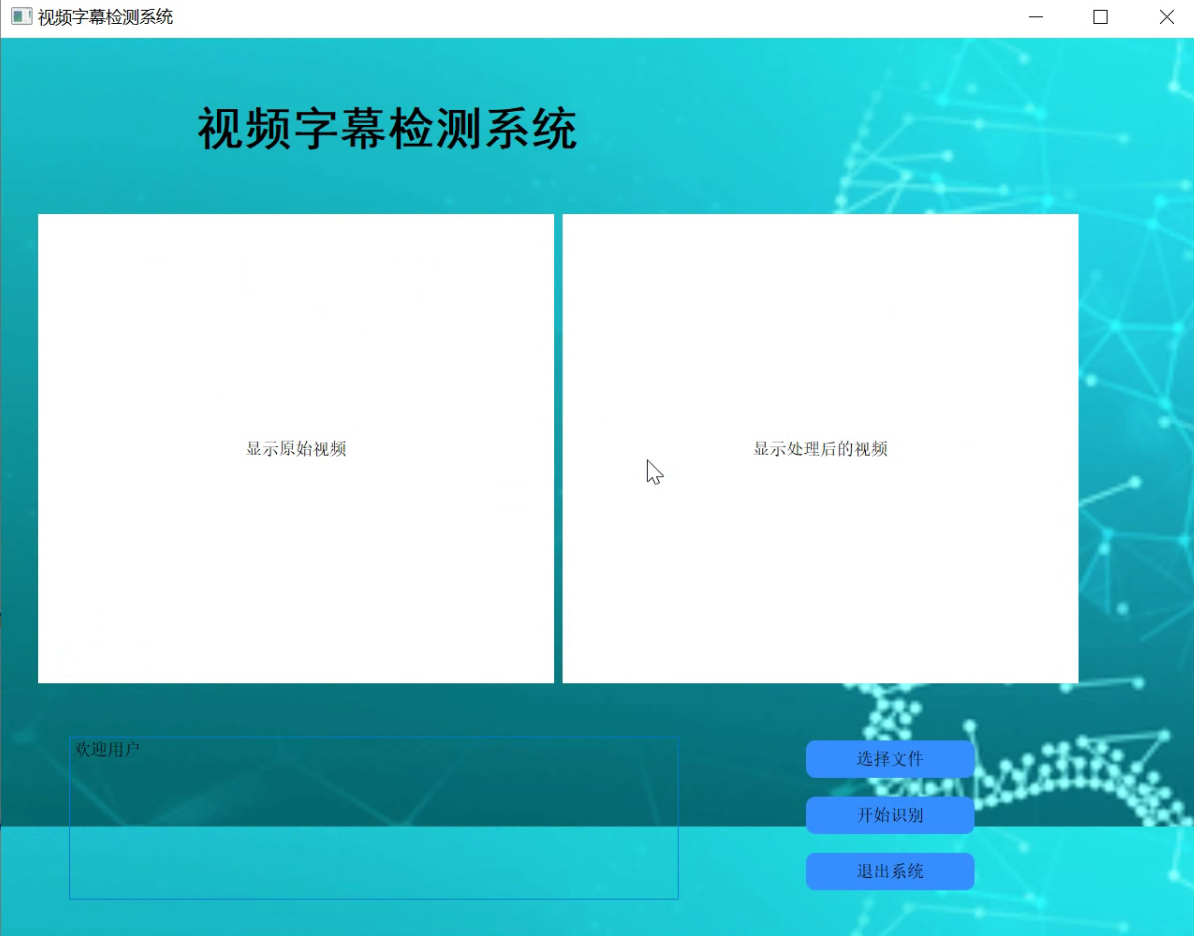

1. Project showcase


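2. Project background

With the rapid development of computer, multimedia, and network technologies, digital video is used ever more widely. The need to find required information quickly and accurately in this massive volume of video data has become increasingly urgent, which has made content-based video retrieval (CBVR) a focus of attention. This article takes video subtitles as a retrieval cue: it first extracts the subtitles from a video and saves them as plain text, then uses that text to retrieve videos, offering a feasible way to implement video retrieval. Video subtitle recognition mainly consists of subtitle detection, subtitle localization, subtitle extraction, subtitle enhancement, and subtitle recognition. This article studies subtitle detection, localization, extraction, and recognition, and it divides subtitle detection into two parts: subtitle-frame detection and subtitle detection within a frame image.

The article first introduces the background and current state of research on video subtitle extraction, then summarizes traditional subtitle extraction methods and analyzes the strengths and weaknesses of existing methods. Building on those methods and combining theory with practice, it proposes a new subtitle detection algorithm that improves the efficiency and accuracy of subtitle-frame detection. By analyzing the color changes of the image background and the subtitle region, it proposes a block-based method for subtitle detection (detecting subtitles within a frame image, as distinct from subtitle-frame detection) and localization, improving the efficiency of both. By analyzing the characteristics of the RGB and LAB color spaces, it proposes a subtitle extraction method based on the LAB color model, which reduces the color loss caused by binarization in the RGB color space and thus improves the binarization result. By comparing the characters in video subtitles with ordinary characters, it proposes a feature-compensation-based recognition method for video subtitles, which achieved good recognition results.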

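3. Approach

1. Split the video into images frame by frame.

2. Crop each image from the previous step so that only the subtitle region remains, then convert it to grayscale.

3. Feed the result into the CRNN+CTC network.

4. Write the output to a txt file.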

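Extracting frames from the video

    import os

    import cv2


    def tailor_video():
        # Name of the video file to process, without the extension
        sourceFileName = 'material'
        # Append the extension here
        video_path = os.path.join("G:/material/", sourceFileName + '.mp4')
        times = 0
        # Sampling frequency: save one frame out of every 10
        frameFrequency = 10
        # Write the images to a per-video folder under G:/material/video/
        outPutDirName = 'G:/material/video/' + sourceFileName + '/'
        if not os.path.exists(outPutDirName):
            # Create the output directory if it does not exist
            os.makedirs(outPutDirName)
        camera = cv2.VideoCapture(video_path)
        while True:
            times += 1
            res, image = camera.read()
            if not res:
                print('not res , not image')
                break
            if times % frameFrequency == 0:
                # Saved images are named by frame number, e.g. 10.jpg
                cv2.imwrite(outPutDirName + str(times) + '.jpg', image)
                print(outPutDirName + str(times) + '.jpg')
        # Release the video handle once all frames have been read
        camera.release()
        print('Frame extraction finished')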
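
Step 2 of the approach (keep only the subtitle region, then convert to grayscale) has no code in this post. The sketch below is one possible way to do it with plain NumPy, not the project's actual implementation. The function names and the `band_ratio` parameter are hypothetical, and it assumes the subtitles sit in the bottom 20% of the frame and that the image is in BGR channel order, as returned by `cv2.imread`.

```python
import numpy as np


def crop_subtitle_band(frame, band_ratio=0.2):
    """Keep only the bottom `band_ratio` of the frame (assumed subtitle area)."""
    height = frame.shape[0]
    top = int(height * (1.0 - band_ratio))
    return frame[top:, :]


def to_gray(frame_bgr):
    """Convert a BGR uint8 image to grayscale with the usual luma weights."""
    b = frame_bgr[..., 0].astype(np.float32)
    g = frame_bgr[..., 1].astype(np.float32)
    r = frame_bgr[..., 2].astype(np.float32)
    gray = 0.114 * b + 0.587 * g + 0.299 * r
    return np.clip(np.rint(gray), 0, 255).astype(np.uint8)


# Synthetic 100x160 BGR frame standing in for an image loaded from disk:
# black everywhere except a white band where the subtitle would be.
frame = np.zeros((100, 160, 3), dtype=np.uint8)
frame[80:, :] = (255, 255, 255)

band = to_gray(crop_subtitle_band(frame, band_ratio=0.2))
print(band.shape, band.dtype)
```

With OpenCV available, `cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)` does the same conversion. The crop ratio would need tuning for each video.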

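4. Building the CRNN+CTC text recognition network

First, a CNN extracts convolutional features from the image.

Then an LSTM extracts sequence features from those convolutional features.

Finally, CTC is introduced to solve the problem that characters cannot be aligned during training.

In general, recognizing the text in an image takes the following steps:

1. Locate the pictures, tables, and text regions in the document and separate the text paragraphs (layout analysis).

2. Recognize each line of text (recognition).

3. Use NLP algorithms to correct the recognition results (post-processing).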

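The whole CRNN network can be divided into three parts:

Assume the input image has size (32, 100, 3); all image sizes mentioned here are in (Height, Width, Channel) form.

Convolutional Layers

The convolutional layers here are an ordinary CNN used to extract convolutional feature maps from the input image, that is, to turn an image of size (32, 100, 3) into a convolutional feature matrix of size (1, 25, 512). For network details, see the implementation code given in this article.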

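Recurrent Layers

The recurrent layers here are a deep bidirectional LSTM network that extracts text sequence features on top of the convolutional features.

Of the deep bidirectional variants, CRNN uses the stacked one.

Because the feature map output by the CNN has size (1, 25, 512), the maximum time length for the RNN is T = 25 (that is, there are 25 time-step inputs).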
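
To make the link between the feature map and the RNN time steps concrete, here is a minimal sketch of the map-to-sequence step. It assumes a feature map of size (1, 25, 512) in (Height, Width, Channel) order and uses random values in place of real CNN output. The function name `map_to_sequence` is hypothetical.

```python
import numpy as np

# Assumed CNN output in (Height, Width, Channel) order; random stand-in values
feature_map = np.random.rand(1, 25, 512).astype(np.float32)


def map_to_sequence(fmap):
    """Turn a (1, W, C) feature map into a length-W sequence of C-dim vectors."""
    if fmap.shape[0] != 1:
        raise ValueError("expected a feature map of height 1")
    return fmap[0]  # shape (W, C): one C-dim vector per image column


sequence = map_to_sequence(feature_map)
T, D = sequence.shape
print(T, D)
```

Each of the T rows corresponds to one vertical slice of the input image, read left to right, and is what the bidirectional LSTM consumes at one time step.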

Transcription Layers

A softmax is applied to the RNN output to produce the character output.
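
As an illustration of how per-time-step scores can become a string, the sketch below uses greedy (best-path) decoding, a common choice with CTC-trained models: take the highest-scoring label at each step, merge consecutive repeats, and drop the blank label. The alphabet, the blank index, and the scores are made up for the example, and this is not necessarily how the project's recognizer decodes.

```python
BLANK = 0  # index reserved for the CTC blank label (an assumption of this sketch)
CHARSET = ["-", "a", "b", "c"]  # hypothetical alphabet; index 0 is the blank


def greedy_decode(scores):
    """Decode a list of per-time-step score lists into a string."""
    # Softmax is monotonic, so the argmax of raw scores and of probabilities agree
    best_path = [max(range(len(step)), key=step.__getitem__) for step in scores]
    decoded = []
    previous = None
    for label in best_path:
        # Emit a character only when it is not blank and not a repeat
        if label != previous and label != BLANK:
            decoded.append(CHARSET[label])
        previous = label
    return "".join(decoded)


scores = [
    [0.10, 0.80, 0.05, 0.05],  # a
    [0.10, 0.70, 0.10, 0.10],  # a again (merged with the previous step)
    [0.90, 0.05, 0.03, 0.02],  # blank (separates the two a's)
    [0.10, 0.60, 0.20, 0.10],  # a
    [0.10, 0.10, 0.70, 0.10],  # b
]
print(greedy_decode(scores))  # aab
```

The blank between the second and third steps is what lets the decoder output a doubled letter.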

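For the recurrent layers, if the usual softmax cross-entropy loss were used, every output column would have to correspond to one character. Each training image would then need the position of every character annotated, and those positions would have to be mapped through the CNN receptive field onto the columns of the feature map to obtain a label for each output column before training could start (Figure 9).

In practice, annotating such aligned samples is very hard (besides the characters themselves, the position of each character must be labeled), and the workload is very large. In addition, because every sample has a different number of characters, font style, and font size, the output columns do not necessarily correspond one-to-one to the characters.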
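
CTC avoids per-column labels by treating every frame-level path that collapses to the target text as a valid alignment. The toy sketch below enumerates those paths for a three-step output and the target "ab". The blank symbol `-` and the function names are assumptions of the example, and a real CTC loss sums path probabilities with dynamic programming rather than by brute force.

```python
from itertools import product

BLANK = "-"


def collapse(path):
    """Apply the CTC mapping: merge repeated symbols, then drop blanks."""
    out = []
    previous = None
    for symbol in path:
        if symbol != previous and symbol != BLANK:
            out.append(symbol)
        previous = symbol
    return "".join(out)


def alignments(target, alphabet, length):
    """List every path of the given length that collapses to `target`."""
    symbols = [BLANK] + list(alphabet)
    return ["".join(p) for p in product(symbols, repeat=length) if collapse(p) == target]


paths = alignments("ab", "ab", 3)
print(paths)
```

Five different three-step paths map to "ab", so the training label only needs the text itself, not the position of each character.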

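The overall CRNN flow is as follows: the CNN first extracts a feature map from the text image, and each column of that map is then fed into the LSTM as one step of the time sequence, with D = 512 features per step.

For a detailed tutorial, see:

一文读懂CRNN+CTC文字识别 - 知乎 (zhihu.com)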

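5. Code implementation

    import cv2
    from math import atan2, cos, degrees, fabs, radians, sin

    import numpy as np

    from detect.ctpn_predict import get_det_boxes
    from recognize.crnn_recognizer import PytorchOcr

    recognizer = PytorchOcr()


    def dis(image):
        # Show an image and wait for a key press (debugging helper)
        cv2.imshow('image', image)
        cv2.waitKey(0)


    def sort_box(box):
        """
        Sort the boxes from top to bottom by the sum of their y coordinates.
        """
        box = sorted(box, key=lambda x: sum([x[1], x[3], x[5], x[7]]))
        return box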

    def dumpRotateImage(img, degree, pt1, pt2, pt3, pt4):
        height, width = img.shape[:2]
        # Size of the canvas that fully contains the rotated image
        heightNew = int(width * fabs(sin(radians(degree))) + height * fabs(cos(radians(degree))))
        widthNew = int(height * fabs(sin(radians(degree))) + width * fabs(cos(radians(degree))))
        matRotation = cv2.getRotationMatrix2D((width // 2, height // 2), degree, 1)
        # Shift the result so that it is centered on the enlarged canvas
        matRotation[0, 2] += (widthNew - width) // 2
        matRotation[1, 2] += (heightNew - height) // 2
        imgRotation = cv2.warpAffine(img, matRotation, (widthNew, heightNew), borderValue=(255, 255, 255))
        pt1 = list(pt1)
        pt3 = list(pt3)
        # Map the top-left and bottom-right corners into the rotated image
        [[pt1[0]], [pt1[1]]] = np.dot(matRotation, np.array([[pt1[0]], [pt1[1]], [1]]))
        [[pt3[0]], [pt3[1]]] = np.dot(matRotation, np.array([[pt3[0]], [pt3[1]], [1]]))
        ydim, xdim = imgRotation.shape[:2]
        # Crop the text region, clamped to the image bounds
        imgOut = imgRotation[max(1, int(pt1[1])): min(ydim - 1, int(pt3[1])),
                             max(1, int(pt1[0])): min(xdim - 1, int(pt3[0]))]
        return imgOut
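
The `heightNew` and `widthNew` expressions in `dumpRotateImage` compute the bounding box of the rotated image. The standalone sketch below isolates that arithmetic so it can be checked on simple angles. The helper name `rotated_canvas_size` is hypothetical and is not part of the project code.

```python
from math import cos, fabs, radians, sin


def rotated_canvas_size(width, height, degree):
    """Return (new_width, new_height) of the box enclosing the rotated image."""
    s = fabs(sin(radians(degree)))
    c = fabs(cos(radians(degree)))
    new_width = int(height * s + width * c)
    new_height = int(width * s + height * c)
    return new_width, new_height


print(rotated_canvas_size(200, 50, 0))
print(rotated_canvas_size(200, 50, 90))
```

At 0 degrees the size is unchanged, and at 90 degrees width and height swap. Subtitle lines are usually close to horizontal, so the canvas grows only slightly.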

    def charRec(img, text_recs, adjust=False):
        """
        Load the OCR model and recognize the characters in each text box.
        """
        results = {}
        xDim, yDim = img.shape[1], img.shape[0]

        for index, rec in enumerate(text_recs):
            # Optional padding: 10% of the box width and 20% of its height
            xlength = int((rec[6] - rec[0]) * 0.1)
            ylength = int((rec[7] - rec[1]) * 0.2)
            if adjust:
                pt1 = (max(1, rec[0] - xlength), max(1, rec[1] - ylength))
                pt2 = (rec[2], rec[3])
                pt3 = (min(rec[6] + xlength, xDim - 2), min(yDim - 2, rec[7] + ylength))
                pt4 = (rec[4], rec[5])
            else:
                pt1 = (max(1, rec[0]), max(1, rec[1]))
                pt2 = (rec[2], rec[3])
                pt3 = (min(rec[6], xDim - 2), min(yDim - 2, rec[7]))
                pt4 = (rec[4], rec[5])

            degree = degrees(atan2(pt2[1] - pt1[1], pt2[0] - pt1[0]))  # tilt angle of the text box

            partImg = dumpRotateImage(img, degree, pt1, pt2, pt3, pt4)
            # dis(partImg)
            # Skip abnormal crops: empty ones, or ones taller than they are wide
            if partImg.shape[0] < 1 or partImg.shape[1] < 1 or partImg.shape[0] > partImg.shape[1]:
                continue
            text = recognizer.recognize(partImg)
            if len(text) > 0:
                results[index] = [rec]
                results[index].append(text)  # recognized text

        return results
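
Both `sort_box` and `charRec` index each box as eight numbers. Judging from how `charRec` builds `pt1` to `pt4`, the layout appears to be top-left, top-right, bottom-left, bottom-right. The sketch below uses two made-up boxes to show the top-to-bottom ordering and the tilt-angle computation. The helper names are hypothetical.

```python
from math import atan2, degrees

# Hypothetical boxes: [x1, y1, x2, y2, x3, y3, x4, y4]
# read as top-left, top-right, bottom-left, bottom-right
boxes = [
    [10, 200, 110, 200, 10, 230, 110, 230],  # lower line, level
    [10, 50, 110, 60, 10, 80, 110, 90],      # upper line, slightly tilted
]


def sort_top_to_bottom(box_list):
    """Order boxes by the sum of their four y coordinates, as sort_box does."""
    return sorted(box_list, key=lambda b: b[1] + b[3] + b[5] + b[7])


def tilt_angle(box):
    """Angle in degrees of the top edge, from the top-left to the top-right corner."""
    return degrees(atan2(box[3] - box[1], box[2] - box[0]))


ordered = sort_top_to_bottom(boxes)
angles = [round(tilt_angle(b), 2) for b in ordered]
print(angles)
```

The tilted box comes first because it sits higher in the image, and its top edge rises 10 pixels over 100, which gives an angle of about 5.71 degrees.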

    def ocr(image):
        # Detect the text boxes
        text_recs, img_framed, image = get_det_boxes(image)
        # Sort them from top to bottom, then recognize each one
        text_recs = sort_box(text_recs)
        result = charRec(image, text_recs)
        return result, img_framed
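
The last step of the approach, writing the recognized text to a txt file, is not shown in the code above. The sketch below is one way it might look, not the project's implementation. It assumes each frame's result has the `index -> [box, text]` shape returned by `charRec`, and it skips consecutive duplicate lines, since the same subtitle usually appears in several sampled frames. The function names, the output file name, and the sample data are all made up.

```python
import os
import tempfile


def frame_text(result):
    """Join the recognized strings of one frame; `result` maps index -> [box, text]."""
    return " ".join(result[key][1] for key in sorted(result))


def write_subtitles(frame_results, txt_path):
    """Write one line per subtitle, skipping empty and consecutive duplicate lines."""
    lines = []
    for frame_no, result in frame_results:
        text = frame_text(result)
        if text and (not lines or lines[-1][1] != text):
            lines.append((frame_no, text))
    with open(txt_path, "w", encoding="utf-8") as fh:
        for frame_no, text in lines:
            fh.write(f"{frame_no}\t{text}\n")
    return lines


# Hand-made results standing in for the output of ocr() on frames 10, 20 and 30
fake_results = [
    (10, {0: [[0] * 8, "hello"]}),
    (20, {0: [[0] * 8, "hello"]}),
    (30, {0: [[0] * 8, "world"]}),
]
out_path = os.path.join(tempfile.gettempdir(), "subtitles_demo.txt")
written = write_subtitles(fake_results, out_path)
print(written)
```

Keeping the frame number next to each line makes it possible to map a subtitle back to an approximate timestamp later.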

6. Project showcase

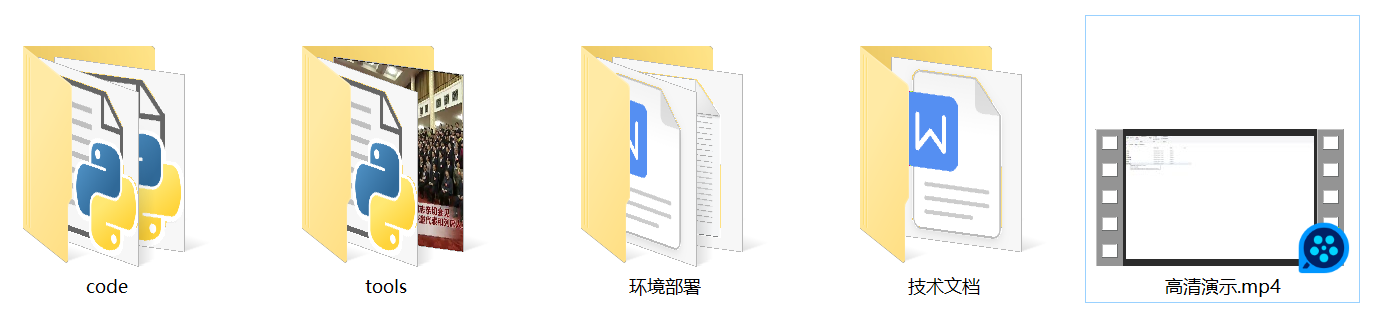

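7. Full source code & environment setup tutorial:

Search for this post's title on 面包多 (Mianbaoduo) via Baidu to download the source code.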
