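Assuming you've all read the earlier parts, built your own dataset, and trained your own model, let's now take a look at how the model performs. There are no benchmark numbers here; we're just eyeballing the results, so don't argue with me on that, folks.

Let's start with two result images. This is a photo of the Eastern and Western All-Star teams from some year. The East has Rose, my favorite, and the West has KOBE, everyone's favorite (btw, I own a basketball autographed by Kobe himself, from the 2001 Finals no less; I hear it's worth quite a bit). Seeing this picture, you can probably tell I'm getting on in years, hahaha.

As you can see, the detection results are decent, though not perfect. I put no real effort into training time, data augmentation, or the loss function, so I can live with results like this.

OK, on to the code.

4. Inference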

    import os

    import cv2
    import numpy
    import torch
    import torchvision.transforms as transform

    import config

    device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')


    class Inference:
        def __init__(self, model_path):
            # map_location lets a model saved on a GPU load on a CPU-only machine.
            # On PyTorch 2.6+ a fully pickled model may also need weights_only=False.
            self.net = torch.load(model_path, map_location=device).to(device)
            self.transform = transform.Compose([transform.ToTensor(), transform.Normalize([0.5], [0.5])])
            self.resize_image_size = config.resize_image_size
            self.net.eval()
            self.conf_treshold = 0.5
            self.iou_threshold = 0.5

        def test(self, img):
            ori_size = img.shape
            resize_img = cv2.resize(img, (self.resize_image_size[1], self.resize_image_size[0]))
            img_tensor = self.transform(resize_img).unsqueeze(0).to(device)
            with torch.no_grad():  # no gradients are needed at inference time
                predict_tensor_32, predict_tensor_16, predict_tensor_8 = self.net(img_tensor)
            # print(predict_tensor_32.shape,predict_tensor_16.shape,predict_tensor_8.shape)
            # [-1,6], 6=[cls_id,conf,xmin,ymin,xmax,ymax]; coordinates are still those of the resized image
            predict_boxes = self.get_boxes(predict_tensor_32, predict_tensor_16, predict_tensor_8)
            # print(predict_boxes)
            if predict_boxes.size(0) == 0:
                return []
            after_nms_boxes = self.NMS(predict_boxes, self.iou_threshold)
            # print(after_nms_boxes)
            change_coor_boxes = self.change_coordinate(after_nms_boxes, ori_size, config.resize_image_size)
            return change_coor_boxes

        def change_coordinate(self, after_nms_boxes, ori_size, resize_image_size):
            boxes = []
            h_ratio = ori_size[0] / resize_image_size[0]
            w_ratio = ori_size[1] / resize_image_size[1]
            for i in after_nms_boxes:
                box = i.int().cpu().numpy().tolist()
                box[2] = int(box[2] * w_ratio)
                box[4] = int(box[4] * w_ratio)
                box[3] = int(box[3] * h_ratio)
                box[5] = int(box[5] * h_ratio)
                boxes.append([box[0], box[2], box[3], box[4], box[5]])  # [cls_id,xmin,ymin,xmax,ymax]
            return boxes

        def get_boxes(self, predict_tensor_32, predict_tensor_16, predict_tensor_8):
            """
            suppose input image size = [640,640]
            :param predict_tensor_32: shape=[batch,21,20,20], feature map with stride = 32
            :param predict_tensor_16: shape=[batch,21,40,40], feature map with stride = 16
            :param predict_tensor_8: shape=[batch,21,80,80], feature map with stride = 8
            :return: [-1,6] boxes from all three feature maps
            """
            boxes_32 = self.get_featuremap_box(predict_tensor_32, 32, self.conf_treshold)
            boxes_16 = self.get_featuremap_box(predict_tensor_16, 16, self.conf_treshold)
            boxes_8 = self.get_featuremap_box(predict_tensor_8, 8, self.conf_treshold)
            boxes = torch.cat((boxes_32, boxes_16, boxes_8), dim=0).cpu()
            boxes[boxes <= 0] = 0  # clamp negative coordinates to the image border
            return boxes

        def NMS(self, lists, treshold):
            values, index = (-lists[:, 1]).sort(0)  # sort by confidence, highest first
            boxes = lists[index].float()
            fal_box = torch.tensor([]).view(-1, 6)
            while boxes.shape[0] > 1:  # more than one box left
                max_score_box = boxes[0].view(-1, 6)
                rest_boxes = boxes[1:].view(-1, 6)
                fal_box = torch.cat((fal_box, max_score_box), dim=0)
                # keep only the boxes whose IoU with the top box is below the threshold
                boxes = rest_boxes[self.IOU(max_score_box[:, 2:], rest_boxes[:, 2:]) < treshold]
            if boxes.shape[0] > 0:  # exactly one box left
                fal_box = torch.cat((fal_box, boxes[0].view(-1, 6)), dim=0)
            return fal_box

        def IOU(self, max_score_box, rest_boxes):
            # both inputs hold the columns [xmin,ymin,xmax,ymax]
            max_score_area = (max_score_box[:, 2] - max_score_box[:, 0]) * (
                    max_score_box[:, 3] - max_score_box[:, 1])  # area of the top-scoring box
            rest_area = (rest_boxes[:, 2] - rest_boxes[:, 0]) * (rest_boxes[:, 3] - rest_boxes[:, 1])  # areas of the rest
            x1 = torch.max(max_score_box[:, 0], rest_boxes[:, 0])
            y1 = torch.max(max_score_box[:, 1], rest_boxes[:, 1])
            x2 = torch.min(max_score_box[:, 2], rest_boxes[:, 2])
            y2 = torch.min(max_score_box[:, 3], rest_boxes[:, 3])
            w = torch.max(torch.tensor([0.]), x2 - x1)
            h = torch.max(torch.tensor([0.]), y2 - y1)
            intersection = w * h  # intersection
            union = rest_area + max_score_area - intersection  # union
            iou = intersection / union
            return iou

        def get_featuremap_box(self, featuremap, stride, threshold, anchor_num=3):
            """
            :param featuremap: e.g. [batch,21,20,20]
            :param anchor_num: number of anchors
            :return: [-1,6] boxes as [cls_id,conf,xmin,ymin,xmax,ymax]
            """
            featuremap = featuremap.view(featuremap.size(0), anchor_num, -1, featuremap.size(2),
                                         featuremap.size(3))  # [batch,3,7,20,20]
            featuremap = featuremap.permute(0, 3, 4, 1, 2)  # [batch,20,20,3,7]
            batch, h, w, anchor, channel = featuremap.size()
            featuremap = featuremap[0]  # drop the batch dimension: [20,20,3,7]
            # Confidence. The original note says "sigmoid first", but this line is a no-op as written;
            # if your network outputs raw logits, apply torch.sigmoid here.
            featuremap[:, :, :, 0] = featuremap[:, :, :, 0]
            obj_mask = torch.gt(featuremap[:, :, :, 0], threshold)  # [20,20,3] boolean mask
            featuremap_coordinate = torch.nonzero(
                obj_mask).float()  # [-1,3]: one row per kept box, columns are [y_index,x_index,anchor_index]
            # confidence and offset and w h
            confidence = featuremap[:, :, :, 0][obj_mask].view(-1, 1).float()
            offset_x = featuremap[:, :, :, 1][obj_mask].view(-1, 1).float()
            offset_y = featuremap[:, :, :, 2][obj_mask].view(-1, 1).float()
            w = torch.exp(featuremap[:, :, :, 3][obj_mask].view(-1, 1).float())
            h = torch.exp(featuremap[:, :, :, 4][obj_mask].view(-1, 1).float())
            # class (cls)
            if featuremap_coordinate.size(0) == 0:
                # no boxes on this feature map
                cls = torch.tensor([]).view(featuremap_coordinate.size(0), 0).to(device)
                return torch.tensor([]).view(-1, 6).to(device)
            else:
                cls = torch.tensor([]).view(featuremap_coordinate.size(0), -1).to(device)
                for i in range(int(channel - 5)):
                    cl = featuremap[:, :, :, 5 + i][obj_mask].view(-1, 1).float()
                    cls = torch.cat((cls, cl), 1)
                _, cls_id = torch.max(cls, dim=1)
                cls_id = cls_id.view(-1, 1).float()
            # convert w/h to pixels (in the resized image)
            selected_anchor = torch.tensor(config.ANCHORS_GROUP[stride])[
                featuremap_coordinate[:, 2].int().cpu().numpy().tolist()]
            after_resize_wh = selected_anchor.to(device).float() * torch.cat((w, h), dim=1)  # [-1,2] [w,h]
            # convert coordinates to pixels (in the resized image)
            xmin = (featuremap_coordinate[:, 1].view(-1, 1) + offset_x) * stride - (after_resize_wh[:, 0].view(-1, 1) / 2)
            ymin = (featuremap_coordinate[:, 0].view(-1, 1) + offset_y) * stride - (after_resize_wh[:, 1].view(-1, 1) / 2)
            xmax = xmin + after_resize_wh[:, 0].view(-1, 1)
            ymax = ymin + after_resize_wh[:, 1].view(-1, 1)
            finall_box = torch.cat((cls_id, confidence, xmin, ymin, xmax, ymax), dim=1)
            return finall_box


    def draw_box(img, boxes, img_name):
        for i in boxes:
            cv2.rectangle(img, (i[1], i[2]), (i[3], i[4]), color=(0, 0, 255), thickness=3)
        cv2.imwrite('./result/' + img_name, img)
        # cv2.imshow('demo', cv2.resize(img,(640,640)))
        # cv2.waitKey()
        return img


    if __name__ == '__main__':
        model_path = './weights/yolov3_net.pth'
        img_folder = './sample'
        os.makedirs('./result', exist_ok=True)  # cv2.imwrite fails silently if the folder is missing
        Model = Inference(model_path)
        for i in os.listdir(img_folder):
            img_path = os.path.join(img_folder, i)
            img = cv2.imread(img_path)
            if img is None:  # skip files OpenCV cannot read
                continue
            boxes = Model.test(img)
            img = draw_box(img, boxes, i)

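Don't back off just because it's long. If you understood the training part, inference won't be particularly hard: inference is simply the inverse of the data preparation done for training. Let's go through it step by step.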

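4.1 Initialization function

    def __init__(self, model_path):
        """
        :param model_path: path to the trained model
        """
        # load the trained model; map_location lets a model saved on a GPU load on a CPU-only machine
        self.net = torch.load(model_path, map_location=device).to(device)
        self.transform = transform.Compose([transform.ToTensor(), transform.Normalize([0.5], [0.5])])  # preprocessing pipeline
        self.resize_image_size = config.resize_image_size  # size the image is resized to
        self.net.eval()  # switch the model to inference mode
        self.conf_treshold = 0.5  # confidence threshold
        self.iou_threshold = 0.5  # IoU threshold

Someone complained earlier that I don't write code comments. Well, today they're here.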
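To make the preprocessing concrete: `ToTensor()` scales 8-bit pixel values to [0, 1], and `Normalize([0.5], [0.5])` then computes (x - 0.5) / 0.5, so the network sees values in [-1, 1]. Below is a minimal plain-Python sketch of that arithmetic. The helper name `normalize_pixel` is made up for illustration; the project itself uses torchvision for this step.

```python
def normalize_pixel(value, mean=0.5, std=0.5):
    """Map one 8-bit pixel value (0..255) to the range the network sees."""
    scaled = value / 255.0        # what ToTensor() does for uint8 input
    return (scaled - mean) / std  # what Normalize([0.5], [0.5]) does


for v in (0, 128, 255):
    print(v, round(normalize_pixel(v), 3))
```

Black (0) maps to -1 and white (255) maps to 1, so whatever normalization was used in training has to be repeated exactly at inference time.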

4.2 Inference function

    def test(self, img):
        """
        :param img: image read by OpenCV, in BGR channel order
        :return: coordinates of the detected objects
        """
        ori_size = img.shape  # height / width / channels of the original image
        resize_img = cv2.resize(img, (self.resize_image_size[1], self.resize_image_size[0]))  # resize the image
        img_tensor = self.transform(resize_img).unsqueeze(0).to(device)  # preprocess, then add a batch dimension
        with torch.no_grad():  # no gradients are needed at inference time
            predict_tensor_32, predict_tensor_16, predict_tensor_8 = self.net(img_tensor)  # run the loaded model
        # print(predict_tensor_32.shape,predict_tensor_16.shape,predict_tensor_8.shape)
        # call the custom get_boxes function to decode the boxes from the 3 feature maps
        # [-1,6], 6=[cls_id,conf,xmin,ymin,xmax,ymax]; coordinates are still those of the resized image
        predict_boxes = self.get_boxes(predict_tensor_32, predict_tensor_16, predict_tensor_8)
        # print(predict_boxes)
        if predict_boxes.size(0) == 0:
            return []
        after_nms_boxes = self.NMS(predict_boxes, self.iou_threshold)  # run NMS on the result
        # print(after_nms_boxes)
        # map the coordinates back to the original image
        change_coor_boxes = self.change_coordinate(after_nms_boxes, ori_size, config.resize_image_size)
        return change_coor_boxes

4.2.1 get_boxes function

    def get_boxes(self, predict_tensor_32, predict_tensor_16, predict_tensor_8):
        """
        suppose input image size = [640,640]
        :param predict_tensor_32: shape=[batch,21,20,20], feature map with stride = 32
        :param predict_tensor_16: shape=[batch,21,40,40], feature map with stride = 16
        :param predict_tensor_8: shape=[batch,21,80,80], feature map with stride = 8
        :return: [-1,6] boxes from all three feature maps
        """
        boxes_32 = self.get_featuremap_box(predict_tensor_32, 32, self.conf_treshold)
        boxes_16 = self.get_featuremap_box(predict_tensor_16, 16, self.conf_treshold)
        boxes_8 = self.get_featuremap_box(predict_tensor_8, 8, self.conf_treshold)
        boxes = torch.cat((boxes_32, boxes_16, boxes_8), dim=0).cpu()
        boxes[boxes <= 0] = 0  # clamp negative coordinates to the image border
        return boxes

This function really just calls another custom function, get_featuremap_box, so that is the one we need to look at. If data preparation for training is the encoding process, this is the decoding process.

    def get_featuremap_box(self, featuremap, stride, threshold, anchor_num=3):
        """
        :param featuremap: a single feature map, e.g. with shape [batch,21,20,20]
        :param stride: downsampling factor
        :param threshold: confidence threshold
        :param anchor_num: number of anchors
        :return: [-1,6] boxes as [cls_id,conf,xmin,ymin,xmax,ymax]
        """
        featuremap = featuremap.view(featuremap.size(0), anchor_num, -1, featuremap.size(2),
                                     featuremap.size(3))  # reshape to [batch,3,7,20,20]
        featuremap = featuremap.permute(0, 3, 4, 1, 2)  # swap dimensions: [batch,20,20,3,7]
        batch, h, w, anchor, channel = featuremap.size()  # size of each dimension
        featuremap = featuremap[0]  # drop the batch dimension: [20,20,3,7]
        # confidence (a no-op as written; apply torch.sigmoid here if the network outputs raw logits)
        featuremap[:, :, :, 0] = featuremap[:, :, :, 0]
        obj_mask = torch.gt(featuremap[:, :, :, 0], threshold)  # [20,20,3] boolean mask: confidence vs. threshold
        featuremap_coordinate = torch.nonzero(
            obj_mask).float()  # [-1,3]: one row per kept box, columns are [y_index,x_index,anchor_index]
        # confidence and offset and w h
        confidence = featuremap[:, :, :, 0][obj_mask].view(-1, 1).float()  # confidence
        offset_x = featuremap[:, :, :, 1][obj_mask].view(-1, 1).float()  # x offset inside the cell
        offset_y = featuremap[:, :, :, 2][obj_mask].view(-1, 1).float()  # y offset inside the cell
        w = torch.exp(featuremap[:, :, :, 3][obj_mask].view(-1, 1).float())  # width, as a multiple of the anchor width
        h = torch.exp(featuremap[:, :, :, 4][obj_mask].view(-1, 1).float())  # height, as a multiple of the anchor height
        # class (cls)
        if featuremap_coordinate.size(0) == 0:
            # no boxes on this feature map
            cls = torch.tensor([]).view(featuremap_coordinate.size(0), 0).to(device)
            return torch.tensor([]).view(-1, 6).to(device)
        else:
            cls = torch.tensor([]).view(featuremap_coordinate.size(0), -1).to(device)
            for i in range(int(channel - 5)):
                cl = featuremap[:, :, :, 5 + i][obj_mask].view(-1, 1).float()
                cls = torch.cat((cls, cl), 1)
            _, cls_id = torch.max(cls, dim=1)
            cls_id = cls_id.view(-1, 1).float()  # class id
        # convert w/h to pixels (in the resized image)
        selected_anchor = torch.tensor(config.ANCHORS_GROUP[stride])[
            featuremap_coordinate[:, 2].int().cpu().numpy().tolist()]
        after_resize_wh = selected_anchor.to(device).float() * torch.cat((w, h), dim=1)  # [-1,2] [w,h]
        # convert coordinates to pixels (in the resized image)
        xmin = (featuremap_coordinate[:, 1].view(-1, 1) + offset_x) * stride - (after_resize_wh[:, 0].view(-1, 1) / 2)
        ymin = (featuremap_coordinate[:, 0].view(-1, 1) + offset_y) * stride - (after_resize_wh[:, 1].view(-1, 1) / 2)
        xmax = xmin + after_resize_wh[:, 0].view(-1, 1)
        ymax = ymin + after_resize_wh[:, 1].view(-1, 1)
        finall_box = torch.cat((cls_id, confidence, xmin, ymin, xmax, ymax), dim=1)
        return finall_box

This function is the cream of the crop. Once you understand it, you probably won't have many questions left about YOLO.
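As a worked example of the decoding above, here is a plain-Python sketch for a single prediction. The anchor size (116, 90), the cell indices, and the raw outputs are made-up numbers chosen for illustration, and `decode_box` is a hypothetical helper, not part of the project code.

```python
import math


def decode_box(row, col, offset_x, offset_y, tw, th, anchor_wh, stride):
    """Turn one grid-cell prediction into [xmin, ymin, xmax, ymax] in resized-image pixels."""
    anchor_w, anchor_h = anchor_wh
    box_w = anchor_w * math.exp(tw)       # width  = anchor width  * exp(tw)
    box_h = anchor_h * math.exp(th)       # height = anchor height * exp(th)
    center_x = (col + offset_x) * stride  # cell column plus offset, scaled back to pixels
    center_y = (row + offset_y) * stride  # cell row plus offset, scaled back to pixels
    xmin = center_x - box_w / 2
    ymin = center_y - box_h / 2
    return [xmin, ymin, xmin + box_w, ymin + box_h]


# A prediction in cell (row=10, col=5) of the stride-32 feature map,
# centered in the cell (offsets 0.5) and exactly anchor-sized (tw = th = 0).
print(decode_box(10, 5, 0.5, 0.5, 0.0, 0.0, (116, 90), 32))
```

The center lands at ((5 + 0.5) * 32, (10 + 0.5) * 32) = (176, 336), and an anchor-sized 116 x 90 box around it gives [118.0, 291.0, 234.0, 381.0].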

4.2.2 NMS函数

def NMS(self, lists, treshold):

values, index = (-lists[:, 1]).sort(0)

boxes = lists[index].float()

fal_box = torch.tensor([]).view(-1, 6)

while boxes.shape[0] > 1: # 多于一个框

max_score_box = boxes[0].view(-1, 6)

rest_boxes = boxes[1:].view(-1, 6)

fal_box = torch.cat((fal_box, max_score_box), dim=0)

boxes = rest_boxes[self.IOU(max_score_box[:, 2:], rest_boxes[:, 2:]) < treshold] # 小于阈值的保留

if boxes.shape[0] > 0: # 只有一个框的情况

fal_box = torch.cat((fal_box, boxes[0].view(-1, 6)), dim=0)

return fal_box

def IOU(self, max_score_box, rest_boxes):

# max_score_box = [xmin,ymin,xmax,ymax,score]

max_score_area = (max_score_box[:, 2] - max_score_box[:, 0]) * (

max_score_box[:, 3] - max_score_box[:, 1]) # 最大值框的面积

rest_area = (rest_boxes[:, 2] - rest_boxes[:, 0]) * (rest_boxes[:, 3] - rest_boxes[:, 1]) # 剩下的框的面积

x1 = torch.max(max_score_box[:, 0], rest_boxes[:, 0])

y1 = torch.max(max_score_box[:, 1], rest_boxes[:, 1])

x2 = torch.min(max_score_box[:, 2], rest_boxes[:, 2])

y2 = torch.min(max_score_box[:, 3], rest_boxes[:, 3])

w = torch.max(torch.tensor([0.]), x2 - x1)

h = torch.max(torch.tensor([0.]), y2 - y1)

intersection = w * h # 交集

union = rest_area + max_score_area - intersection # 并集

iou = intersection / union

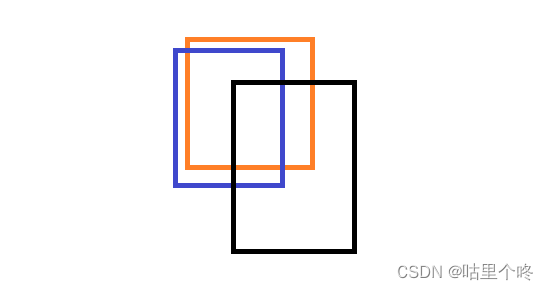

return iouNMS就是为了去掉多余的框,去掉的条件就是,iou大于iou阈值的框,好了,你要问了,啥叫IOU,上图

假设红框是置信度最高的框,蓝色和黑色是另外预测出来的两个框,但是置信度没他高,iou就是红框和蓝框交集/红框和蓝框的并集,黑框同理。代码都在上面了,可以理一理。
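To see the numbers behind this, here is a small plain-Python sketch of IoU and greedy NMS on three hand-made boxes. The box values are invented for illustration, and `iou` and `nms` are simplified stand-ins for the torch-based `IOU` and `NMS` methods, not the project's code.

```python
def iou(box_a, box_b):
    """IoU of two boxes given as (xmin, ymin, xmax, ymax)."""
    inter_w = max(0.0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    inter_h = max(0.0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    intersection = inter_w * inter_h
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - intersection
    return intersection / union if union > 0 else 0.0


def nms(boxes, iou_threshold):
    """Greedy NMS. Each box is (conf, xmin, ymin, xmax, ymax)."""
    remaining = sorted(boxes, key=lambda b: b[0], reverse=True)  # highest confidence first
    kept = []
    while remaining:
        best = remaining.pop(0)
        kept.append(best)
        # drop every box that overlaps the kept box too much
        remaining = [b for b in remaining if iou(best[1:], b[1:]) < iou_threshold]
    return kept


top = (0.9, 0, 0, 100, 100)           # highest confidence
overlapping = (0.8, 10, 10, 110, 110)  # overlaps `top` heavily
separate = (0.7, 200, 200, 300, 300)   # does not touch `top`

print(round(iou(top[1:], overlapping[1:]), 3))
print(nms([overlapping, separate, top], iou_threshold=0.5))
```

Here `top` and `overlapping` share a 90 x 90 region, so their IoU is 8100 / 11900, which is above 0.5, and `overlapping` is suppressed. `separate` has an IoU of 0 with `top` and survives.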

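4.3 Drawing the boxes

    def draw_box(img, boxes, img_name):
        for i in boxes:  # each box is [cls_id,xmin,ymin,xmax,ymax]
            cv2.rectangle(img, (i[1], i[2]), (i[3], i[4]), color=(0, 0, 255), thickness=3)
        cv2.imwrite('./result/' + img_name, img)  # the ./result folder must already exist
        # cv2.imshow('demo', cv2.resize(img,(640,640)))
        # cv2.waitKey()
        return img

This is just a visualization function: it draws the boxes onto the image.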
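The boxes that `draw_box` receives are already in original-image pixels, because `change_coordinate` rescaled them from the resized image. That rescaling is just a per-axis ratio. Here is a plain-Python sketch, assuming a made-up 1280 x 720 photo and a 640 x 640 network input; `rescale_box` is a hypothetical helper for illustration.

```python
def rescale_box(box, ori_size, resize_size):
    """Map (xmin, ymin, xmax, ymax) from the resized image back to the original.

    ori_size and resize_size are both (height, width).
    """
    h_ratio = ori_size[0] / resize_size[0]
    w_ratio = ori_size[1] / resize_size[1]
    xmin, ymin, xmax, ymax = box
    return [int(xmin * w_ratio), int(ymin * h_ratio),
            int(xmax * w_ratio), int(ymax * h_ratio)]


# x values are scaled by 1280 / 640 = 2.0, y values by 720 / 640 = 1.125
print(rescale_box((118, 291, 234, 381), (720, 1280), (640, 640)))
```

Because the image is resized without preserving its aspect ratio, x and y get different ratios, which is why the two axes are handled separately.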

OK, that wraps up the whole project. Folks, if you're interested in YOLO, go ahead and give it a try.

5. Project code

guligedong_yolo.7z (CSDN download): https://download.csdn.net/download/guligedong/56893586


That's all. Salute!!!!

As per tradition, here comes Miemie (咩咩).