TensorRT Python Test Program

Converting an ONNX model to a TensorRT model

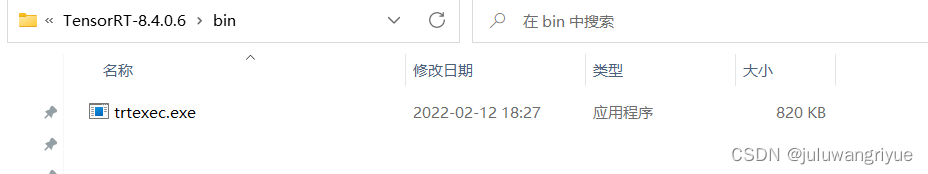

The bin directory under the TensorRT installation path contains a trtexec.exe executable.

Run the following command:

```
trtexec --onnx=xxx.onnx --saveEngine=xxx.engine --fp16
```
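Since the goal is a Python test program, the same conversion can also be driven from Python. The sketch below is a minimal example, assuming trtexec is reachable as `trtexec` (otherwise pass the full path to trtexec.exe as `exe`); `build_trtexec_cmd` and `convert` are hypothetical helper names, and only the `--onnx`, `--saveEngine` and `--fp16` flags from the command above are used.

```python
import subprocess
from typing import List


def build_trtexec_cmd(onnx_path: str, engine_path: str,
                      fp16: bool = True, exe: str = "trtexec") -> List[str]:
    """Assemble the trtexec argument list (hypothetical helper)."""
    cmd = [exe, f"--onnx={onnx_path}", f"--saveEngine={engine_path}"]
    if fp16:
        cmd.append("--fp16")  # allow FP16 kernels in addition to FP32
    return cmd


def convert(onnx_path: str, engine_path: str, exe: str = "trtexec") -> int:
    """Run trtexec and return its exit code (expected to be 0 on success)."""
    result = subprocess.run(build_trtexec_cmd(onnx_path, engine_path, exe=exe))
    return result.returncode


if __name__ == "__main__":
    # Only prints the command; call convert() to actually run trtexec.
    print(build_trtexec_cmd("xxx.onnx", "xxx.engine"))
```

Passing the arguments as a list (rather than one shell string) avoids quoting problems with paths that contain spaces.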

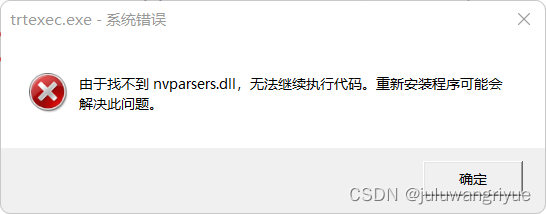

Running the command above directly fails with an error.

Solution 1: set an environment variable

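Add the lib folder one level up from bin (the lib directory of the TensorRT installation) to the PATH environment variable.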
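If trtexec is launched from a Python script, Solution 1 can be applied to that child process only, without changing the system-wide settings. A minimal sketch, assuming Windows resolves the TensorRT DLLs through PATH; `env_with_trt_lib` is a hypothetical helper and `C:\TensorRT-8.4.0` is a hypothetical install path.

```python
import os
from typing import Dict, Optional


def env_with_trt_lib(trt_root: str,
                     base: Optional[Dict[str, str]] = None) -> Dict[str, str]:
    """Return a copy of the environment with <trt_root>/lib prepended to PATH.

    The original environment (os.environ or `base`) is not modified.
    """
    env = dict(os.environ if base is None else base)
    lib_dir = os.path.join(trt_root, "lib")
    old = env.get("PATH", "")
    env["PATH"] = lib_dir + (os.pathsep + old if old else "")
    return env


if __name__ == "__main__":
    # Hypothetical install location; adjust to your own TensorRT directory.
    env = env_with_trt_lib(r"C:\TensorRT-8.4.0")
    print(env["PATH"].split(os.pathsep)[0])
```

The returned dict can be passed as `env=` to `subprocess.run` when starting trtexec.exe.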

Solution 2: copy the dependent libraries into the current folder

Copy the dynamic libraries (DLLs) from the lib folder one level up into the current folder (bin).
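Solution 2 can also be scripted. A minimal sketch, assuming the installation has the `lib` and `bin` folders side by side under one root directory; `copy_trt_dlls` is a hypothetical helper, and it copies every `*.dll` rather than a hand-picked list.

```python
import glob
import os
import shutil
from typing import List


def copy_trt_dlls(trt_root: str) -> List[str]:
    """Copy every *.dll from <trt_root>/lib into <trt_root>/bin.

    Returns the sorted names of the copied files. Files with the same
    name already present in bin are overwritten.
    """
    lib_dir = os.path.join(trt_root, "lib")
    bin_dir = os.path.join(trt_root, "bin")
    copied = []
    for src in sorted(glob.glob(os.path.join(lib_dir, "*.dll"))):
        shutil.copy2(src, bin_dir)  # keeps file timestamps/metadata
        copied.append(os.path.basename(src))
    return copied
```

For example, `copy_trt_dlls(r"C:\TensorRT-8.4.0")` with a hypothetical install path.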

I used Solution 2.

Run it again:

```
>trtexec.exe --onnx=AnkleSeg.onnx --saveEngine=AnkleSeg.engine --fp16
&&&& RUNNING TensorRT.trtexec [TensorRT v8400] # trtexec.exe --onnx=AnkleSeg.onnx --saveEngine=AnkleSeg.engine --fp16
[06/14/2022-09:32:12] [I] === Model Options ===
[06/14/2022-09:32:12] [I] Format: ONNX
[06/14/2022-09:32:12] [I] Model: AnkleSeg.onnx
[06/14/2022-09:32:12] [I] Output:
[06/14/2022-09:32:12] [I] === Build Options ===
[06/14/2022-09:32:12] [I] Max batch: explicit batch
[06/14/2022-09:32:12] [I] Memory Pools: workspace: default, dlaSRAM: default, dlaLocalDRAM: default, dlaGlobalDRAM: default
[06/14/2022-09:32:12] [I] minTiming: 1
[06/14/2022-09:32:12] [I] avgTiming: 8
[06/14/2022-09:32:12] [I] Precision: FP32+FP16
[06/14/2022-09:32:12] [I] LayerPrecisions:
[06/14/2022-09:32:12] [I] Calibration:
[06/14/2022-09:32:12] [I] Refit: Disabled
[06/14/2022-09:32:12] [I] Sparsity: Disabled
[06/14/2022-09:32:12] [I] Safe mode: Disabled
[06/14/2022-09:32:12] [I] DirectIO mode: Disabled
[06/14/2022-09:32:12] [I] Restricted mode: Disabled
[06/14/2022-09:32:12] [I] Save engine: AnkleSeg.engine
[06/14/2022-09:32:12] [I] Load engine:
[06/14/2022-09:32:12] [I] Profiling verbosity: 0
[06/14/2022-09:32:12] [I] Tactic sources: Using default tactic sources
[06/14/2022-09:32:12] [I] timingCacheMode: local
[06/14/2022-09:32:12] [I] timingCacheFile:
[06/14/2022-09:32:12] [I] Input(s)s format: fp32:CHW
[06/14/2022-09:32:12] [I] Output(s)s format: fp32:CHW
[06/14/2022-09:32:12] [I] Input build shapes: model
[06/14/2022-09:32:12] [I] Input calibration shapes: model
[06/14/2022-09:32:12] [I] === System Options ===
[06/14/2022-09:32:12] [I] Device: 0
[06/14/2022-09:32:12] [I] DLACore:
[06/14/2022-09:32:12] [I] Plugins:
[06/14/2022-09:32:12] [I] === Inference Options ===
[06/14/2022-09:32:12] [I] Batch: Explicit
[06/14/2022-09:32:12] [I] Input inference shapes: model
[06/14/2022-09:32:12] [I] Iterations: 10
[06/14/2022-09:32:12] [I] Duration: 3s (+ 200ms warm up)
[06/14/2022-09:32:12] [I] Sleep time: 0ms
[06/14/2022-09:32:12] [I] Idle time: 0ms
[06/14/2022-09:32:12] [I] Streams: 1
[06/14/2022-09:32:12] [I] ExposeDMA: Disabled
[06/14/2022-09:32:12] [I] Data transfers: Enabled
[06/14/2022-09:32:12] [I] Spin-wait: Disabled
[06/14/2022-09:32:12] [I] Multithreading: Disabled
[06/14/2022-09:32:12] [I] CUDA Graph: Disabled
[06/14/2022-09:32:12] [I] Separate profiling: Disabled
[06/14/2022-09:32:12] [I] Time Deserialize: Disabled
[06/14/2022-09:32:12] [I] Time Refit: Disabled
[06/14/2022-09:32:12] [I] Skip inference: Disabled
[06/14/2022-09:32:12] [I] Inputs:
[06/14/2022-09:32:12] [I] === Reporting Options ===
[06/14/2022-09:32:12] [I] Verbose: Disabled
[06/14/2022-09:32:12] [I] Averages: 10 inferences
[06/14/2022-09:32:12] [I] Percentile: 99
[06/14/2022-09:32:12] [I] Dump refittable layers:Disabled
[06/14/2022-09:32:12] [I] Dump output: Disabled
[06/14/2022-09:32:12] [I] Profile: Disabled
[06/14/2022-09:32:12] [I] Export timing to JSON file:
[06/14/2022-09:32:12] [I] Export output to JSON file:
[06/14/2022-09:32:12] [I] Export profile to JSON file:
[06/14/2022-09:32:12] [I]
[06/14/2022-09:32:12] [I] === Device Information ===
[06/14/2022-09:32:12] [I] Selected Device: GeForce GTX 1650 Ti
[06/14/2022-09:32:12] [I] Compute Capability: 7.5
[06/14/2022-09:32:12] [I] SMs: 16
[06/14/2022-09:32:12] [I] Compute Clock Rate: 1.485 GHz
[06/14/2022-09:32:12] [I] Device Global Memory: 4096 MiB
[06/14/2022-09:32:12] [I] Shared Memory per SM: 64 KiB
[06/14/2022-09:32:12] [I] Memory Bus Width: 128 bits (ECC disabled)
[06/14/2022-09:32:12] [I] Memory Clock Rate: 6.001 GHz
[06/14/2022-09:32:12] [I]
[06/14/2022-09:32:12] [I] TensorRT version: 8.4.0
[06/14/2022-09:32:13] [I] [TRT] [MemUsageChange] Init CUDA: CPU +401, GPU +0, now: CPU 11356, GPU 905 (MiB)
[06/14/2022-09:32:14] [I] [TRT] [MemUsageSnapshot] Begin constructing builder kernel library: CPU 11559 MiB, GPU 905 MiB
[06/14/2022-09:32:15] [I] [TRT] [MemUsageSnapshot] End constructing builder kernel library: CPU 11795 MiB, GPU 979 MiB
[06/14/2022-09:32:15] [I] Start parsing network model
[06/14/2022-09:32:15] [I] [TRT] ----------------------------------------------------------------
[06/14/2022-09:32:15] [I] [TRT] Input filename: AnkleSeg.onnx
[06/14/2022-09:32:15] [I] [TRT] ONNX IR version: 0.0.7
[06/14/2022-09:32:15] [I] [TRT] Opset version: 12
[06/14/2022-09:32:15] [I] [TRT] Producer name: pytorch
[06/14/2022-09:32:15] [I] [TRT] Producer version: 1.10
[06/14/2022-09:32:15] [I] [TRT] Domain:
[06/14/2022-09:32:15] [I] [TRT] Model version: 0
[06/14/2022-09:32:15] [I] [TRT] Doc string:
[06/14/2022-09:32:15] [I] [TRT] ----------------------------------------------------------------
[06/14/2022-09:32:15] [I] Finish parsing network model
[06/14/2022-09:32:15] [W] Dynamic dimensions required for input: input, but no shapes were provided. Automatically overriding shape to: 1x1x1x1x1
[06/14/2022-09:32:15] [E] Error[4]: [graphShapeAnalyzer.cpp::nvinfer1::builder::`anonymous-namespace'::ShapeNodeRemover::processCheck::593] Error Code 4: Internal Error (Concat_32: dimensions not compatible for concatenation)
[06/14/2022-09:32:15] [E] Error[2]: [builder.cpp::nvinfer1::builder::Builder::buildSerializedNetwork::619] Error Code 2: Internal Error (Assertion engine != nullptr failed. )
[06/14/2022-09:32:15] [E] Engine could not be created from network
[06/14/2022-09:32:15] [E] Building engine failed
[06/14/2022-09:32:15] [E] Failed to create engine from model.
[06/14/2022-09:32:15] [E] Engine set up failed
&&&& FAILED TensorRT.trtexec [TensorRT v8400] # trtexec.exe --onnx=AnkleSeg.onnx --saveEngine=AnkleSeg.engine --fp16
```

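The output above shows that the engine build failed. The warning explains why: the input named input has dynamic dimensions, but no input shapes were provided, so trtexec overrode the shape to 1x1x1x1x1, and the build then failed at Concat_32 because the dimensions were not compatible for concatenation.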
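When trtexec is run from a test script, this diagnosis can be automated by scanning its output. A minimal sketch, assuming the log wording matches the TensorRT 8.4 messages shown above (the warning text and the final `&&&& PASSED`/`&&&& FAILED` status line); `find_dynamic_inputs` and `trtexec_status` are hypothetical helper names.

```python
import re
from typing import Dict, Optional

# Matches the warning from the log above, capturing the input name and the
# shape trtexec substituted for the missing dynamic dimensions.
_DYN_RE = re.compile(
    r"Dynamic dimensions required for input: ([^,\s]+), but no shapes were"
    r" provided\. Automatically overriding shape to: ([0-9x]+)"
)


def find_dynamic_inputs(log: str) -> Dict[str, str]:
    """Map each input name whose shape trtexec overrode to the shape it used."""
    return {name: shape for name, shape in _DYN_RE.findall(log)}


def trtexec_status(log: str) -> Optional[str]:
    """Return 'PASSED' or 'FAILED' from the last '&&&&' status line, else None."""
    status = None
    for line in log.splitlines():
        m = re.match(r"&&&& (PASSED|FAILED) ", line)
        if m:
            status = m.group(1)
    return status
```

The log text can come from `subprocess.run(..., capture_output=True, text=True).stdout`.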

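So there are two approaches to try below: use an ONNX model with fixed dimensions, or keep the dynamic-dimension model and specify the input shapes explicitly.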
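For the second approach, trtexec accepts explicit shape ranges through its `--minShapes`, `--optShapes` and `--maxShapes` flags, each in the form `name:d1xd2x...`. The sketch below only formats those flags; the input name `input` comes from the log, the model appears to be 5-D (the override was 1x1x1x1x1), but the concrete sizes used here are hypothetical and must be replaced with the real ones. For the first approach, if the model was exported from PyTorch (the log reports producer pytorch 1.10), a fixed-shape model can typically be obtained by re-exporting without the `dynamic_axes` argument of `torch.onnx.export`.

```python
from typing import Dict, List, Sequence

Shape = Sequence[int]


def shape_arg(name: str, shape: Shape) -> str:
    """Format one trtexec shape spec, e.g. ('input', (1, 1, 64)) -> 'input:1x1x64'."""
    return f"{name}:" + "x".join(str(d) for d in shape)


def dynamic_shape_flags(profiles: Dict[str, Dict[str, Shape]]) -> List[str]:
    """Build --minShapes/--optShapes/--maxShapes flags for trtexec.

    profiles maps input name -> {"min": shape, "opt": shape, "max": shape};
    several inputs are joined with commas in each flag.
    """
    flags = []
    for key, flag in (("min", "--minShapes"), ("opt", "--optShapes"),
                      ("max", "--maxShapes")):
        specs = ",".join(shape_arg(name, p[key]) for name, p in profiles.items())
        flags.append(f"{flag}={specs}")
    return flags


if __name__ == "__main__":
    # Hypothetical 5-D shapes (N, C, D, H, W); replace with the real model's sizes.
    flags = dynamic_shape_flags({"input": {"min": (1, 1, 32, 64, 64),
                                           "opt": (1, 1, 64, 128, 128),
                                           "max": (1, 1, 64, 128, 128)}})
    print(" ".join(["trtexec.exe", "--onnx=AnkleSeg.onnx",
                    "--saveEngine=AnkleSeg.engine", "--fp16"] + flags))
```

Setting min, opt and max to the same shape produces an engine that accepts only that one input size.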