AI: "DEEP LEARNING’S DIMINISHING RETURNS" (Translation and Commentary)

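Overview: Deep learning's diminishing returns. MIT's Neil Thompson and several collaborators top the list with a thoughtful feature on the computational and energy costs of training deep-learning systems. They analyzed improvements in image classifiers and found that "to halve the error rate, you can expect to need more than 500 times the computational resources." They write: "Faced with skyrocketing costs, researchers will either have to come up with more efficient ways to solve these problems, or they will abandon working on these problems and progress will languish." Their article is not entirely discouraging, however: they close with some promising ideas about the way forward.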


Article link: Deep Learning’s Diminishing Returns - IEEE Spectrum

Published: September 24, 2021

DEEP LEARNING’S DIMINISHING RETURNS

The cost of improvement is becoming unsustainable


The First Artificial Neural Network

DEEP LEARNING IS NOW being used to translate between languages, predict how proteins fold, analyze medical scans, and play games as complex as Go, to name just a few applications of a technique that is now becoming pervasive. Success in those and other realms has brought this machine-learning technique from obscurity in the early 2000s to dominance today.

Although deep learning's rise to fame is relatively recent, its origins are not. In 1958, back when mainframe computers filled rooms and ran on vacuum tubes, knowledge of the interconnections between neurons in the brain inspired Frank Rosenblatt at Cornell to design the first artificial neural network, which he presciently described as a "pattern-recognizing device." But Rosenblatt's ambitions outpaced the capabilities of his era, and he knew it. Even his inaugural paper was forced to acknowledge the voracious appetite of neural networks for computational power, bemoaning that "as the number of connections in the network increases...the burden on a conventional digital computer soon becomes excessive."


Fortunately for such artificial neural networks (later rechristened "deep learning" when they included extra layers of neurons), decades of Moore's Law and other improvements in computer hardware yielded a roughly 10-million-fold increase in the number of computations that a computer could do in a second. So when researchers returned to deep learning in the late 2000s, they wielded tools equal to the challenge.


The Rise of Deep Learning

These more-powerful computers made it possible to construct networks with vastly more connections and neurons and hence greater ability to model complex phenomena. Researchers used that ability to break record after record as they applied deep learning to new tasks.

While deep learning's rise may have been meteoric, its future may be bumpy. Like Rosenblatt before them, today's deep-learning researchers are nearing the frontier of what their tools can achieve. To understand why this will reshape machine learning, you must first understand why deep learning has been so successful and what it costs to keep it that way.


Deep learning is a modern incarnation of the long-running trend in artificial intelligence that has been moving from streamlined systems based on expert knowledge toward flexible statistical models. Early AI systems were rule based, applying logic and expert knowledge to derive results. Later systems incorporated learning to set their adjustable parameters, but these were usually few in number.

Today's neural networks also learn parameter values, but those parameters are part of such flexible computer models that, if they are big enough, they become universal function approximators, meaning they can fit any type of data. This unlimited flexibility is the reason why deep learning can be applied to so many different domains.

The flexibility of neural networks comes from taking the many inputs to the model and having the network combine them in myriad ways. This means the outputs won't be the result of applying simple formulas but instead immensely complicated ones.

For example, when the cutting-edge image-recognition system Noisy Student converts the pixel values of an image into probabilities for what the object in that image is, it does so using a network with 480 million parameters. The training to ascertain the values of such a large number of parameters is even more remarkable because it was done with only 1.2 million labeled images, which may understandably confuse those of us who remember from high school algebra that we are supposed to have more equations than unknowns. Breaking that rule turns out to be the key.

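To make the parameter-to-data ratio concrete, the Python sketch below counts the weights and biases of a fully connected network and then divides the Noisy Student figures quoted above (480 million parameters, 1.2 million labeled images). The helper names and the toy layer sizes are hypothetical illustrations; they are not Noisy Student's actual architecture, which is not a plain fully connected network.

```python
# Sketch: count the trainable parameters of a fully connected network and
# compare a parameter count with the number of labeled training examples.
# The toy layer sizes are hypothetical; Noisy Student is not a plain
# fully connected network.


def dense_param_count(layer_sizes):
    """Weights plus biases for a stack of fully connected layers."""
    total = 0
    for fan_in, fan_out in zip(layer_sizes, layer_sizes[1:]):
        total += fan_in * fan_out  # weight matrix between consecutive layers
        total += fan_out           # one bias per output unit
    return total


def params_per_example(num_params, num_examples):
    """Unknowns per labeled example: above 1 means more unknowns than equations."""
    return num_params / num_examples


toy = dense_param_count([784, 512, 512, 10])  # hypothetical toy network
print(toy)  # 669706

# Figures quoted in the article for Noisy Student.
print(params_per_example(480_000_000, 1_200_000))  # 400.0
```

By this rough count, each labeled image has to constrain about 400 parameters, which is the "more unknowns than equations" situation the text describes.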

Deep-learning models are overparameterized, which is to say they have more parameters than there are data points available for training. Classically, this would lead to overfitting, where the model learns not only general trends but also the random vagaries of the data it was trained on. Deep learning avoids this trap by initializing the parameters randomly and then iteratively adjusting sets of them to better fit the data using a method called stochastic gradient descent. Surprisingly, this procedure has been proven to ensure that the learned model generalizes well.

The success of flexible deep-learning models can be seen in machine translation. For decades, software has been used to translate text from one language to another. Early approaches to this problem used rules designed by grammar experts. But as more textual data became available in specific languages, statistical approaches (ones that go by such esoteric names as maximum entropy, hidden Markov models, and conditional random fields) could be applied.

Initially, the approaches that worked best for each language differed based on data availability and grammatical properties. For example, rule-based approaches to translating languages such as Urdu, Arabic, and Malay outperformed statistical ones, at first. Today, all these approaches have been outpaced by deep learning, which has proven itself superior almost everywhere it's applied.

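The overparameterized setting described above can be illustrated with a minimal Python sketch, assuming a linear model with 6 parameters fitted to only 3 examples by stochastic gradient descent from a random initialization. The data, learning rate, and step count are made-up values chosen for illustration. The sketch only shows that SGD finds parameters that fit every training example even though there are more unknowns than equations; it does not demonstrate generalization.

```python
import random

# Minimal sketch (illustrative data): SGD on an overparameterized linear model.
# 3 training examples, 6 parameters -> more unknowns than equations.


def predict(w, x):
    """Linear model: dot product of weights and inputs."""
    return sum(wi * xi for wi, xi in zip(w, x))


def sgd_fit(xs, ys, num_params, lr=0.05, steps=5000, seed=0):
    """Fit w by stochastic gradient descent on squared error, one example per step."""
    rng = random.Random(seed)
    # Random initialization, as described in the text.
    w = [rng.uniform(-0.1, 0.1) for _ in range(num_params)]
    for _ in range(steps):
        i = rng.randrange(len(xs))        # pick one training example at random
        err = predict(w, xs[i]) - ys[i]
        # The gradient of 0.5 * err**2 with respect to w is err * x.
        w = [wi - lr * err * xij for wi, xij in zip(w, xs[i])]
    return w


# Hypothetical training set: 3 examples with 6 features each.
xs = [
    [1.0, 0.5, -0.3, 0.8, 0.0, 0.2],
    [0.2, -1.0, 0.4, 0.1, 0.9, -0.5],
    [-0.7, 0.3, 1.0, -0.2, 0.4, 0.6],
]
ys = [1.0, -0.5, 0.3]

w = sgd_fit(xs, ys, num_params=6)
train_mse = sum((predict(w, x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)
print(f"training MSE: {train_mse:.2e}")  # essentially zero: every example is fitted
```

Because there are more parameters than examples, infinitely many weight vectors fit the data exactly; which one SGD lands on depends on the random starting point.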

Deep Learning's Dilemma: High Computational Cost

So the good news is that deep learning provides enormous flexibility. The bad news is that this flexibility comes at an enormous computational cost. This unfortunate reality has two parts.

The first part is true of all statistical models: To improve performance by a factor of $k$, at least $k^2$ times as many data points must be used to train the model. The second part of the computational cost comes explicitly from overparameterization. Once accounted for, this yields a total computational cost for improvement of at least $k^4$. That little 4 in the exponent is very expensive: A 10-fold improvement, for example, would require at least a 10,000-fold increase in computation.

To make the flexibility-computation trade-off more vivid, consider a scenario where you are trying to predict whether a patient's X-ray reveals cancer. Suppose further that the true answer can be found if you measure 100 details in the X-ray (often called variables or features). The challenge is that we don't know ahead of time which variables are important, and there could be a very large pool of candidate variables to consider.

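The two scaling bounds can be written as a small Python sketch: for a $k$-fold improvement, at least $k^2$ times the data and, once overparameterization is accounted for, at least $k^4$ times the computation. The function names are hypothetical; the exponents are the lower bounds quoted in the text.

```python
# Sketch of the scaling argument: a k-fold performance improvement needs at
# least k**2 times the data (all statistical models) and, with
# overparameterization, at least k**4 times the computation.


def data_multiplier(k):
    """Lower bound on how many times more training data is needed."""
    return k ** 2


def compute_multiplier(k, exponent=4):
    """Lower bound on how many times more computation is needed.

    exponent=4 is the theoretical floor quoted in the text.
    """
    return k ** exponent


for k in (2, 10):
    print(k, data_multiplier(k), compute_multiplier(k))
# 2 4 16
# 10 100 10000
```

The second row reproduces the text's example: a 10-fold improvement costs at least 10,000 times the computation.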

Expert Systems: Selecting the Features

The expert-system approach to this problem would be to have people who are knowledgeable in radiology and oncology specify the variables they think are important, allowing the system to examine only those. The flexible-system approach is to test as many of the variables as possible and let the system figure out on its own which are important, requiring more data and incurring much higher computational costs in the process.

Models for which experts have established the relevant variables are able to learn quickly what values work best for those variables, doing so with limited amounts of computation, which is why they were so popular early on. But their ability to learn stalls if an expert hasn't correctly specified all the variables that should be included in the model. In contrast, flexible models like deep learning are less efficient, taking vastly more computation to match the performance of expert models. But, with enough computation (and data), flexible models can outperform ones for which experts have attempted to specify the relevant variables.

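The trade-off between expert-chosen and flexible models can be sketched in Python, assuming noise-free synthetic data in which the target depends linearly on three of six candidate variables, and an "expert" who specified only two of the three relevant ones. Both models are fitted by ordinary least squares; all names and numbers are illustrative assumptions, not anything from the study. The expert model is left with an error floor, while the model that tests every candidate variable fits the target exactly, at the price of estimating more parameters.

```python
import random

# Sketch: "expert" model (hand-picked variables) vs. "flexible" model (all candidates).
# ASSUMPTIONS: noise-free synthetic data, linear ground truth using x0, x1, x2,
# and an expert who forgot x2. Fitted by ordinary least squares.


def solve(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    m = [row[:] + [bi] for row, bi in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in reversed(range(n)):  # back-substitution
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def least_squares_mse(rows, ys, features):
    """Fit OLS on the chosen feature columns via the normal equations; return training MSE."""
    xs = [[row[f] for f in features] for row in rows]
    k = len(features)
    xtx = [[sum(x[i] * x[j] for x in xs) for j in range(k)] for i in range(k)]
    xty = [sum(x[i] * y for x, y in zip(xs, ys)) for i in range(k)]
    w = solve(xtx, xty)
    residuals = [sum(wi * xi for wi, xi in zip(w, x)) - y for x, y in zip(xs, ys)]
    return sum(r * r for r in residuals) / len(ys)


rng = random.Random(0)
rows = [[rng.uniform(-1, 1) for _ in range(6)] for _ in range(200)]
ys = [2 * r[0] - r[1] + 0.5 * r[2] for r in rows]  # x3..x5 are irrelevant

expert_mse = least_squares_mse(rows, ys, features=[0, 1])            # missed x2
flexible_mse = least_squares_mse(rows, ys, features=list(range(6)))  # tests everything
print(f"expert: {expert_mse:.4f}  flexible: {flexible_mse:.2e}")
```

Adding more rows does not rescue the expert model: its error stays near the variance contributed by the missing variable (roughly 0.25 × 1/3 for inputs uniform on [-1, 1]), because no setting of its two weights can stand in for x2.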

Quantifying Deep Learning's Computing Requirements and Costs

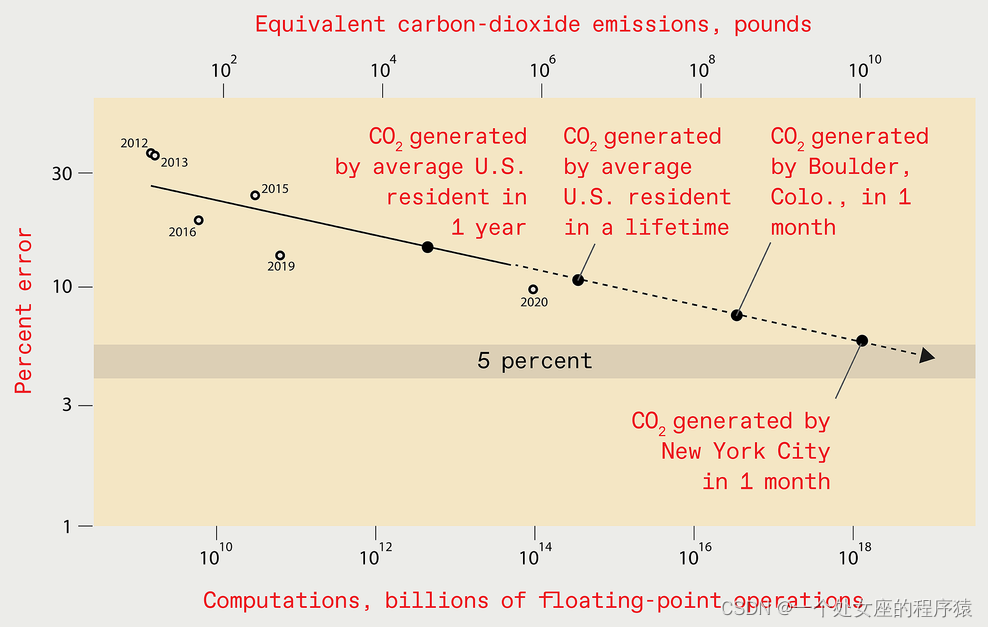

Extrapolating the gains of recent years might suggest that by 2025 the error level in the best deep-learning systems designed for recognizing objects in the ImageNet data set should be reduced to just 5 percent [top]. But the computing resources and energy required to train such a future system would be enormous, leading to the emission of as much carbon dioxide as New York City generates in one month [bottom]. Source: N.C. Thompson, K. Greenewald, K. Lee, G.F. Manso


Clearly, you can get improved performance from deep learning if you use more computing power to build bigger models and train them with more data. But how expensive will this computational burden become? Will costs become sufficiently high that they hinder progress?

To answer these questions in a concrete way, we recently gathered data from more than 1,000 research papers on deep learning, spanning the areas of image classification, object detection, question answering, named-entity recognition, and machine translation. Here, we will only discuss image classification in detail, but the lessons apply broadly.


Over the years, reducing image-classification errors has come with an enormous expansion in computational burden. For example, in 2012 AlexNet, the model that first showed the power of training deep-learning systems on graphics processing units (GPUs), was trained for five to six days using two GPUs. By 2018, another model, NASNet-A, had cut the error rate of AlexNet in half, but it used more than 1,000 times as much computing to achieve this.

Our analysis of this phenomenon also allowed us to compare what's actually happened with theoretical expectations. Theory tells us that computing needs to scale with at least the fourth power of the improvement in performance. In practice, the actual requirements have scaled with at least the ninth power.

This ninth power means that to halve the error rate (a twofold improvement), you can expect to need more than 500 times the computational resources, since $2^9 = 512$. That's a devastatingly high price. There may be a silver lining here, however. The gap between what's happened in practice and what theory predicts might mean that there are still undiscovered algorithmic improvements that could greatly improve the efficiency of deep learning.

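As a quick check on the arithmetic, the Python sketch below converts between an improvement factor, a scaling exponent, and a compute multiplier. Halving the error rate is treated as a twofold improvement ($k = 2$), which is the reading the text uses; the function names are hypothetical.

```python
import math

# Sketch: relate an improvement factor k, a scaling exponent, and the
# resulting compute multiplier (compute ~ k ** exponent).


def compute_for_improvement(k, exponent):
    """Compute multiplier needed for a k-fold improvement."""
    return k ** exponent


def implied_exponent(compute_multiplier, k=2):
    """Exponent implied when a k-fold improvement costs compute_multiplier."""
    return math.log(compute_multiplier) / math.log(k)


print(compute_for_improvement(2, 4))      # theoretical floor: 16
print(compute_for_improvement(2, 9))      # observed scaling: 512
print(round(implied_exponent(1_000), 2))  # AlexNet -> NASNet-A: 9.97
```

The last line shows that paying about 1,000 times the compute for one halving of the error rate corresponds to an exponent close to 10, consistent with the "at least the ninth power" estimate.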

To Halve the Error Rate, You Can Expect to Need More Than 500 Times the Computational Resources

As we noted, Moore's Law and other hardware advances have provided massive increases in chip performance. Does this mean that the escalation in computing requirements doesn't matter? Unfortunately, no. Of the 1,000-fold difference in the computing used by AlexNet and NASNet-A, only a six-fold improvement came from better hardware; the rest came from using more processors or running them longer, incurring higher costs.

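The decomposition in that last sentence is multiplicative: total compute is roughly per-chip speed times the number of chips times the training time. A minimal Python sketch, using only the two figures quoted in the text, recovers how much of the growth came from more processors or longer runs.

```python
# Sketch: split the ~1,000x compute growth from AlexNet to NASNet-A into a
# hardware factor and a "more processors or longer runs" factor.
# Model: total compute = per-chip speed x number of chips x training time.

total_compute_ratio = 1_000   # quoted in the text (a lower bound: "more than 1,000")
hardware_speedup = 6          # quoted in the text

# Whatever faster chips don't explain must come from extra processor-time.
processor_time_ratio = total_compute_ratio / hardware_speedup
print(f"about {processor_time_ratio:.0f}x more processor-time")  # about 167x more processor-time
```

Since processor-time is what gets billed, the roughly 167-fold factor is the part that shows up directly as higher cost.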

Having estimated the computational cost-performance curve for image recognition, we can use it to estimate how much computation would be needed to reach even more impressive performance benchmarks in the future. For example, achieving a 5 percent error rate would require $10^{19}$ billion floating-point operations.

Important work by scholars at the University of Massachusetts Amherst allows us to understand the economic cost and carbon emissions implied by this computational burden. The answers are grim: Training such a model would cost US $100 billion and would produce as much carbon emissions as New York City does in a month. And if we estimate the computational burden of a 1 percent error rate, the results are considerably worse.

Is extrapolating out so many orders of magnitude a reasonable thing to do? Yes and no. Certainly, it is important to understand that the predictions aren't precise, although with such eye-watering results, they don't need to be precise to convey the overall message of unsustainability. Extrapolating this way would be unreasonable if we assumed that researchers would follow this trajectory all the way to such an extreme outcome. We don't. Faced with skyrocketing costs, researchers will either have to come up with more efficient ways to solve these problems, or they will abandon working on these problems and progress will languish.

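To give a sense of scale for $10^{19}$ billion floating-point operations (that is, $10^{28}$), the Python sketch below converts the figure into wall-clock time. The sustained throughput of one exaFLOP per second is a hypothetical round number chosen for illustration, not a figure from the article or from any particular machine.

```python
# Sketch: express the projected 10**19 billion FLOPs in wall-clock time.
# ASSUMPTION: a hypothetical sustained throughput of 10**18 FLOP/s (1 exaFLOP/s);
# this is an illustrative round number, not a figure from the article.

BILLION = 10 ** 9
SECONDS_PER_YEAR = 365.25 * 24 * 3600

total_flop = 10 ** 19 * BILLION             # 10**28 floating-point operations
hypothetical_flop_per_s = 10 ** 18          # assumed sustained throughput

seconds = total_flop / hypothetical_flop_per_s
years = seconds / SECONDS_PER_YEAR
print(f"{total_flop:.0e} FLOP at 1 exaFLOP/s takes about {years:.0f} years")
```

Under this assumption the run would take roughly 317 years; spreading it over a thousand such hypothetical machines would still take about four months.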

On the other hand, extrapolating our results is not only reasonable but also important, because it conveys the magnitude of the challenge ahead. The leading edge of this problem is already becoming apparent. When Google subsidiary DeepMind trained its system to play Go, it was estimated to have cost $35 million. When DeepMind's researchers designed a system to play the StarCraft II video game, they purposefully didn't try multiple ways of architecting an important component, because the training cost would have been too high.

At OpenAI, an important machine-learning think tank, researchers recently designed and trained a much-lauded deep-learning language system called GPT-3 at the cost of more than $4 million. Even though they made a mistake when they implemented the system, they didn't fix it, explaining simply in a supplement to their scholarly publication that "due to the cost of training, it wasn't feasible to retrain the model."


Even businesses outside the tech industry are now starting to shy away from the computational expense of deep learning. A large European supermarket chain recently abandoned a deep-learning-based system that markedly improved its ability to predict which products would be purchased. The company executives dropped that attempt because they judged that the cost of training and running the system would be too high.

Faced with rising economic and environmental costs, the deep-learning community will need to find ways to increase performance without causing computing demands to go through the roof. If they don't, progress will stagnate. But don't despair yet: Plenty is being done to address this challenge.


How to Tackle the High Cost of Deep Learning

One strategy is to use processors designed specifically to be efficient for deep-learning calculations. This approach was widely used over the last decade, as CPUs gave way to GPUs and, in some cases, field-programmable gate arrays and application-specific ICs (including Google's Tensor Processing Unit). Fundamentally, all of these approaches sacrifice the generality of the computing platform for the efficiency of increased specialization. But such specialization faces diminishing returns. So longer-term gains will require adopting wholly different hardware frameworks, perhaps hardware that is based on analog, neuromorphic, optical, or quantum systems. Thus far, however, these wholly different hardware frameworks have yet to have much impact.


Another approach to reducing the computational burden focuses on generating neural networks that, when implemented, are smaller. This tactic lowers the cost each time you use them, but it often increases the training cost (what we've described so far in this article). Which of these costs matters most depends on the situation. For a widely used model, running costs are the biggest component of the total sum invested. For other models, such as those that frequently need to be retrained, training costs may dominate. In either case, the total cost must be larger than just the training on its own. So if the training costs are too high, as we've shown, then the total costs will be, too.

And that's the challenge with the various tactics that have been used to make implementation smaller: They don't reduce training costs enough. For example, one allows for training a large network but penalizes complexity during training. Another involves training a large network and then "pruning" away unimportant connections. Yet another finds as efficient an architecture as possible by optimizing across many models, something called neural-architecture search. While each of these techniques can offer significant benefits for implementation, the effects on training are muted, certainly not enough to address the concerns we see in our data. And in many cases they make the training costs higher.

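Two of the size-reducing tactics named above (penalizing complexity during training and pruning away unimportant connections afterward) can be sketched in a few lines of Python. These are generic textbook versions, an L1 penalty and magnitude pruning, chosen as assumptions for illustration; the article does not say which specific variants it had in mind.

```python
# Sketch of two generic size-reduction tactics on a flat list of weights.
# ASSUMPTION: an L1 penalty and magnitude pruning stand in for the
# "penalize complexity" and "prune" tactics; real systems vary.


def l1_penalized_loss(data_loss, weights, lam):
    """Penalize complexity during training: data loss plus lam * sum(|w|)."""
    return data_loss + lam * sum(abs(w) for w in weights)


def magnitude_prune(weights, fraction):
    """After training, zero out the given fraction of smallest-magnitude weights."""
    n_prune = int(len(weights) * fraction)
    # Indices sorted from smallest to largest absolute value.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = set(order[:n_prune])
    return [0.0 if i in pruned else w for i, w in enumerate(weights)]


trained = [0.1, -2.0, 0.05, 3.0, -0.5]          # hypothetical trained weights
print(magnitude_prune(trained, 0.4))            # [0.0, -2.0, 0.0, 3.0, -0.5]
print(l1_penalized_loss(1.0, trained, lam=0.01))
```

Note that `magnitude_prune` only runs after a full-size network has been trained, which is why, as the text argues, pruning can cut the cost of each use without cutting the training bill.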

Exploring Ways to Evade the Computational Limits of Deep Learning

One up-and-coming technique that could reduce training costs goes by the name meta-learning. The idea is that the system learns on a variety of data and then can be applied in many areas. For example, rather than building separate systems to recognize dogs in images, cats in images, and cars in images, a single system could be trained on all of them and used multiple times.

Unfortunately, recent work by Andrei Barbu of MIT has revealed how hard meta-learning can be. He and his coauthors showed that even small differences between the original data and where you want to use it can severely degrade performance. They demonstrated that current image-recognition systems depend heavily on things like whether the object is photographed at a particular angle or in a particular pose. So even the simple task of recognizing the same objects in different poses causes the accuracy of the system to be nearly halved.

Benjamin Recht of the University of California, Berkeley, and others made this point even more starkly, showing that even with novel data sets purposely constructed to mimic the original training data, performance drops by more than 10 percent. If even small changes in data cause large performance drops, the data needed for a comprehensive meta-learning system might be enormous. So the great promise of meta-learning remains far from being realized.


Another possible strategy to evade the computational limits of deep learning would be to move to other, perhaps as-yet-undiscovered or underappreciated types of machine learning. As we described, machine-learning systems constructed around the insight of experts can be much more computationally efficient, but their performance can't reach the same heights as deep-learning systems if those experts cannot distinguish all the contributing factors. Neuro-symbolic methods and other techniques are being developed to combine the power of expert knowledge and reasoning with the flexibility often found in neural networks.

Like the situation that Rosenblatt faced at the dawn of neural networks, deep learning is today becoming constrained by the available computational tools. Faced with computational scaling that would be economically and environmentally ruinous, we must either adapt how we do deep learning or face a future of much slower progress. Clearly, adaptation is preferable. A clever breakthrough might find a way to make deep learning more efficient or computer hardware more powerful, which would allow us to continue to use these extraordinarily flexible models. If not, the pendulum will likely swing back toward relying more on experts to identify what needs to be learned.
