People tend to put most of their effort into hyperparameter tuning and training when working with TensorFlow and deep learning. A practical problem for TF, though, is how to integrate models into production: saving trained models, restoring them when necessary, and making predictions in response to incoming requests. Fortunately, Google AI helps!

While a model is being trained, TensorFlow offers two different ways to save it. Most people and blog posts use checkpoints, which are meant for training: loading a checkpoint lets training continue where it stopped. The drawback is that you have to define the architecture in code again before restoring the checkpoint. The other format, SavedModel, is better suited to serving (the release version of a product). Applications send prediction requests to a server where the SavedModel is deployed, and the server sends responses back.

To get there, we only need to follow three steps: save the model properly, deploy it to Google AI, and transform the data into the required format before sending a request. I will go through them one by one.

1. Save the model as a SavedModel:

In a typical TensorFlow training job, the architecture is defined first, then the model is trained, and finally it is saved. Let's jump straight to the saving code. The function used here is 'simple_save', and its four arguments are the session, the export folder, the input tensors with their names, and the output tensors with their names.

    tf.saved_model.simple_save(sess, 'simple_save/model',
                               inputs={"x": tf_x},
                               outputs={"pred": pred})

After that, the saved model appears in the target directory:

    simple_save/model/
        saved_model.pb
        variables/
            variables.index
            variables.data-00000-of-00001
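
Before uploading anything, it can help to confirm that the export folder really has the layout listed above. The following is a minimal sketch using only the standard library; the function name `check_saved_model_dir` is made up for this post, and the check only looks at file names, not at whether the model itself loads.

```python
import os


def check_saved_model_dir(export_dir):
    """Return a list of problems found in a SavedModel export directory."""
    problems = []
    # The serialized graph lives in saved_model.pb at the top level.
    if not os.path.isfile(os.path.join(export_dir, "saved_model.pb")):
        problems.append("missing saved_model.pb")
    variables_dir = os.path.join(export_dir, "variables")
    if not os.path.isdir(variables_dir):
        problems.append("missing variables/ folder")
        return problems
    names = os.listdir(variables_dir)
    if "variables.index" not in names:
        problems.append("missing variables/variables.index")
    # Weights are stored in one or more shards named variables.data-XXXXX-of-YYYYY.
    if not any(n.startswith("variables.data-") for n in names):
        problems.append("missing variables/variables.data-* shard")
    return problems
```

Calling `check_saved_model_dir('simple_save/model')` should return an empty list when the export from the step above succeeded.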

2. Deploy SavedModel onto Google AI:

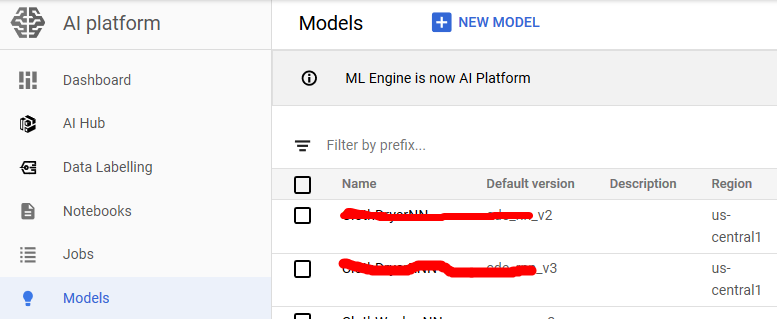

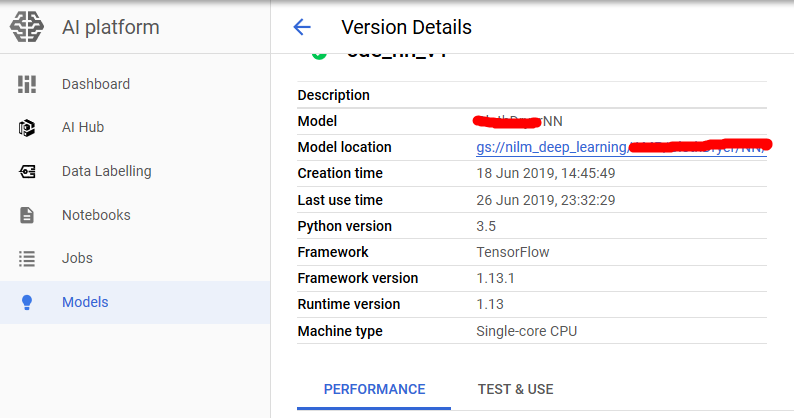

On Google Cloud, files are stored in Cloud Storage buckets, so the TensorFlow model (the .pb file and the variables folder) first needs to be uploaded to a bucket. Then create a Google AI model and a version. A model can have multiple versions, which is like solving one task in different ways. You can even use a different deep learning architecture for each version and switch between them when requesting predictions. Each version is bound to the bucket location that stores its SavedModel.
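
The screenshots show the console route, but the binding between a version and its bucket location can also be written down as data. The sketch below only builds the pieces of a version-creation request and makes no network call. The function name and all argument values are hypothetical, and the field names (`name`, `deploymentUri`, `runtimeVersion`) are what I understand the AI Platform version resource to use, so check them against the current API reference before relying on them.

```python
def build_version_request(project, model, version, bucket, model_dir, runtime_version):
    """Build the parent path and body for a version-creation request."""
    # A version always lives under one model of one project.
    parent = "projects/{}/models/{}".format(project, model)
    body = {
        "name": version,
        # Cloud Storage folder that contains saved_model.pb and variables/.
        "deploymentUri": "gs://{}/{}".format(bucket, model_dir.strip("/")),
        # Assumed field: the runtime the model was trained with, e.g. "1.15".
        "runtimeVersion": runtime_version,
    }
    return parent, body


# Hypothetical names, for illustration only.
parent, body = build_version_request(
    "my-project", "my_model", "v1", "my-bucket", "simple_save/model", "1.15")
```

Keeping one such request per architecture makes it easy to see which version points at which folder in the bucket.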

3. Doing online predictions:

Because we request predictions from inside the application and need an immediate response, we choose online prediction. The official request code is shown below; it sends an HTTP request and receives an HTTP response. The input and output data are both in JSON format. We can convert our input data into a list and call this function.
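
As a rough illustration of the "convert our input data into a list" step, here is a small sketch that wraps each input row in a dict keyed by the input name. It assumes the model was saved with a single input called "x", as in the 'simple_save' call earlier; the helper name `to_instances` is my own and not part of any Google library.

```python
import json


def to_instances(rows, input_name="x"):
    """Wrap each input row in a dict keyed by the model's input name."""
    instances = []
    for row in rows:
        # NumPy arrays are not JSON serializable; convert them to lists.
        if hasattr(row, "tolist"):
            row = row.tolist()
        instances.append({input_name: row})
    # Fail early if anything still cannot be encoded as JSON.
    json.dumps(instances)
    return instances


# Two example rows with two features each (made-up values).
instances = to_instances([[1.0, 2.0], [3.0, 4.0]])
```

The resulting list is what gets passed as the `instances` argument of the request function.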

    import googleapiclient.discovery


    def predict_json(project, model, instances, version=None):
        """Send json data to a deployed model for prediction.

        Args:
            project (str): project where the AI Platform Model is deployed.
            model (str): model name.
            instances ([Mapping[str: Any]]): Keys should be the names of Tensors
                your deployed model expects as inputs. Values should be datatypes
                convertible to Tensors, or (potentially nested) lists of datatypes
                convertible to tensors.
            version: str, version of the model to target.
        Returns:
            Mapping[str: any]: dictionary of prediction results defined by the
                model.
        """
        # Create the AI Platform service object.
        # To authenticate set the environment variable
        # GOOGLE_APPLICATION_CREDENTIALS=<path_to_service_account_file>
        service = googleapiclient.discovery.build('ml', 'v1')
        name = 'projects/{}/models/{}'.format(project, model)

        if version is not None:
            name += '/versions/{}'.format(version)

        response = service.projects().predict(
            name=name,
            body={'instances': instances}
        ).execute()

        if 'error' in response:
            raise RuntimeError(response['error'])

        return response['predictions']

The response is also in JSON format, so I wrote a small function to convert it into a NumPy array:

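    import numpy as np


    def from_json_to_array(dict_list):
        # Collect the 'pred' output of every prediction in the response.
        value_list = []
        for dict_instance in dict_list:
            instance = dict_instance.get('pred')
            value_list.append(instance)
        # Stack the per-instance outputs into a single array.
        value_array = np.asarray(value_list)
        return value_array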
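
To make the shape of the response concrete, here is a small sketch that runs on a hand-written sample instead of a real server reply. The sample values are invented, and `extract_predictions` is a hypothetical variant of the function above: it uses plain lists and raises an error when the 'pred' key is missing, instead of silently storing None.

```python
def extract_predictions(predictions, output_name="pred"):
    """Collect one output per instance, failing loudly on a missing key."""
    values = []
    for i, item in enumerate(predictions):
        if output_name not in item:
            raise KeyError("prediction {} has no '{}' key".format(i, output_name))
        values.append(item[output_name])
    return values


# Hypothetical response for two instances; real values depend on the model.
sample = [{"pred": [0.1, 0.9]}, {"pred": [0.8, 0.2]}]
rows = extract_predictions(sample)
```

The key has to match the output name given to 'simple_save', which is "pred" in this post. Passing `rows` to `np.asarray` gives the same array as the function above.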

That's it! Now go get your hands dirty!

References:

https://www.tensorflow.org/guide/saved_model

https://cloud.google.com/blog/products/ai-machine-learning/simplifying-ml-predictions-with-google-cloud-functions

https://cloud.google.com/ml-engine/docs/tensorflow/online-predict