Adding an object tracker plugin and four sinkType outputs (including RTSP input and output) to deepstream-test3

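The official DeepStream samples do not include a simple single-pipeline example that accepts several kinds of input and offers four sinkType outputs. After consulting some references, I made a few simple changes on top of deepstream-test3. The main additions are the object-tracking plugin (nvtracker) and output to a file and over RTSP.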
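For quick reference, the C sketch below returns the chain of element factories that main() in the listing links after nvdsosd for each sinkType value. The function names `sink_chain_for` and `sink_needs_encoder` are illustrative. The `integrated` argument is an assumption that stands in for `cudaDeviceProp.integrated` (non-zero on Jetson). The sketch only returns strings and does not build a GStreamer pipeline.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Illustrative helper: element chain linked after nvdsosd for each sinkType
 * used in main(). `integrated` stands in for cudaDeviceProp.integrated
 * (non-zero on Jetson). Returns NULL for an unsupported sinkType. */
static const char *
sink_chain_for (int sink_type, int integrated)
{
  switch (sink_type) {
    case 1:                    /* H.264 encode, write to a file */
      return "nvvideoconvert ! capsfilter ! nvv4l2h264enc ! filesink";
    case 2:                    /* discard frames */
      return "fakesink";
    case 3:                    /* on-screen window; Jetson needs nvegltransform */
      return integrated ? "nvegltransform ! nveglglessink" : "nveglglessink";
    case 4:                    /* RTP over UDP to 127.0.0.1, re-served via RTSP */
      return "nvvideoconvert ! capsfilter ! nvv4l2h264enc ! h264parse ! "
          "rtph264pay ! udpsink";
    default:
      return NULL;
  }
}

/* Returns 1 if the chain for sink_type contains the hardware H.264 encoder. */
static int
sink_needs_encoder (int sink_type)
{
  const char *chain = sink_chain_for (sink_type, 0);

  assert (chain != NULL && "unsupported sinkType");
  return strstr (chain, "nvv4l2h264enc") != NULL;
}
```

For example, `sink_chain_for (3, 1)` yields `nvegltransform ! nveglglessink`, which is the Jetson variant of the on-screen output.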

Runtime environment

- GPU server: use the official DeepStream Docker image. Create and enter a container, then build and run the program inside it.

- Jetson device: once the DeepStream development environment is installed on the Jetson device, build and run the program directly.

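Build and run

- Extract the archive:

  tar -zxf deepstream-test3-zyh.tar.gz
  cd deepstream-test3-zyh/

- Build:

  make clean
  make -j4

- Run, with an RTSP stream or a local video file as input:

  ./deepstream-test3-app rtsp://xxxx

  or

  ./deepstream-test3-app file:/home/xxx/test.mp4

  You can pass several URIs in one call. nvstreammux batches them and nvmultistreamtiler composites them into one output frame. The program loads property_config.txt (nvinfer settings) and tracker_config.txt (nvtracker settings) from the current working directory. The output type is fixed by the sinkType constant in main(): 1 is an H.264 file, 2 is fakesink, 3 is an on-screen window, and 4 is RTSP. With the default value 4, the result is served at rtsp://localhost:8554/ds-test.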

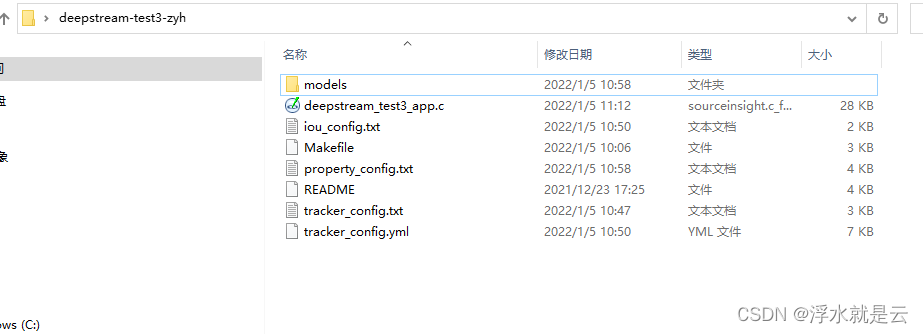
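The program passes each command-line argument unchanged to the `uri` property of uridecodebin, so it does not accept a bare file path. The following C sketch shows one way to turn an absolute path into a `file://` URI before calling the program. Arguments that already carry a scheme (`rtsp://...`, `file:...`) are left untouched. The helper `to_source_uri` is hypothetical and does not percent-encode characters such as spaces. GLib's `g_filename_to_uri()` handles that properly.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

/* Hypothetical helper: writes a URI for `arg` into `out`.
 * - strings containing "://" or starting with "file:" are copied as-is
 * - absolute paths ("/...") get a "file://" prefix
 * Returns 0 on success, -1 for relative paths or if `out` is too small.
 * No percent-encoding is performed. */
static int
to_source_uri (const char *arg, char *out, size_t out_len)
{
  int n;

  assert (arg != NULL && out != NULL);
  if (strstr (arg, "://") != NULL || strncmp (arg, "file:", 5) == 0)
    n = snprintf (out, out_len, "%s", arg);
  else if (arg[0] == '/')
    n = snprintf (out, out_len, "file://%s", arg);
  else
    return -1;                  /* relative path: resolve it with realpath() first */
  return (n < 0 || (size_t) n >= out_len) ? -1 : 0;
}
```

For instance, `/home/xxx/test.mp4` becomes `file:///home/xxx/test.mp4`, which is the form the DeepStream samples use.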

Code
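With N input URIs, main() in the listing below sizes the nvmultistreamtiler grid as rows = floor(sqrt(N)) and columns = ceil(N / rows). Here is a minimal C sketch of the same arithmetic. It uses integers only, so no libm is needed. The name `tiler_grid` is illustrative.

```c
#include <assert.h>
#include <stddef.h>

/* Illustrative helper: same grid as main() in the listing,
 * rows = floor(sqrt(n)), columns = ceil(n / rows), using integers only. */
static void
tiler_grid (unsigned n, unsigned *rows, unsigned *cols)
{
  unsigned r = 0;

  assert (n > 0 && rows != NULL && cols != NULL);
  while ((r + 1) * (r + 1) <= n)        /* integer floor(sqrt(n)) */
    r++;
  *rows = r;
  *cols = (n + r - 1) / r;              /* integer ceil(n / r) */
}
```

Three sources therefore give a 1 x 3 strip, and five sources give a 2 x 3 grid with one empty tile. Both are scaled into the TILED_OUTPUT_WIDTH x TILED_OUTPUT_HEIGHT (1280 x 720) output.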

- deepstream_test3_app.c

/*
 * Copyright (c) 2018-2020, NVIDIA CORPORATION. All rights reserved.
 *
 * Permission is hereby granted, free of charge, to any person obtaining a
 * copy of this software and associated documentation files (the "Software"),
 * to deal in the Software without restriction, including without limitation
 * the rights to use, copy, modify, merge, publish, distribute, sublicense,
 * and/or sell copies of the Software, and to permit persons to whom the
 * Software is furnished to do so, subject to the following conditions:
 *
 * The above copyright notice and this permission notice shall be included in
 * all copies or substantial portions of the Software.
 *
 * THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR
 * IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,
 * FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL
 * THE AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER
 * LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING
 * FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER
 * DEALINGS IN THE SOFTWARE.
 */

#include <gst/gst.h>
#include <glib.h>
#include <stdio.h>
#include <stdlib.h>             /* realpath () */
#include <limits.h>             /* PATH_MAX */
#include <math.h>
#include <string.h>
#include <sys/time.h>
#include <cuda_runtime_api.h>
#include "gstnvdsmeta.h"
//#include "gstnvstreammeta.h"
#ifndef PLATFORM_TEGRA
#include "gst-nvmessage.h"
#endif
#include "nvdsmeta.h"
#include <gst/rtsp-server/rtsp-server.h>
#include <nvll_osd_struct.h>

#define MAX_DISPLAY_LEN 64

#define PGIE_CLASS_ID_VEHICLE 0
#define PGIE_CLASS_ID_PERSON 2

/* By default, OSD process-mode is set to CPU_MODE. To change mode, set as:
 * 1: GPU mode (for Tesla only)
 * 2: HW mode (For Jetson only)
 */
#define OSD_PROCESS_MODE 0

/* By default, OSD will not display text. To display text, change this to 1 */
#define OSD_DISPLAY_TEXT 0

/* The muxer output resolution must be set if the input streams will be of
 * different resolution. The muxer will scale all the input frames to this
 * resolution. */
#define MUXER_OUTPUT_WIDTH 1920
#define MUXER_OUTPUT_HEIGHT 1080

/* Muxer batch formation timeout, for e.g. 40 millisec. Should ideally be set
 * based on the fastest source's framerate. */
#define MUXER_BATCH_TIMEOUT_USEC 40000

#define TILED_OUTPUT_WIDTH 1280
#define TILED_OUTPUT_HEIGHT 720

/* NVIDIA Decoder source pad memory feature. This feature signifies that source
 * pads having this capability will push GstBuffers containing cuda buffers. */
#define GST_CAPS_FEATURES_NVMM "memory:NVMM"

gchar pgie_classes_str[4][32] = { "Vehicle", "TwoWheeler", "Person",
  "RoadSign"
};

#define FPS_PRINT_INTERVAL 300
//static struct timeval start_time = { };
//static guint probe_counter = 0;

/* tiler_src_pad_buffer_probe () walks the batch metadata attached to each
 * buffer and prints per-frame object counts. Despite its name, main ()
 * attaches it to the src pad of nvinfer (pgie). */
static GstPadProbeReturn
tiler_src_pad_buffer_probe (GstPad * pad, GstPadProbeInfo * info,
    gpointer u_data)
{
  GstBuffer *buf = (GstBuffer *) info->data;
  NvDsObjectMeta *obj_meta = NULL;
  NvDsMetaList *l_frame = NULL;
  NvDsMetaList *l_obj = NULL;
  NvDsBatchMeta *batch_meta = gst_buffer_get_nvds_batch_meta (buf);

  if (!batch_meta)
    return GST_PAD_PROBE_OK;

  for (l_frame = batch_meta->frame_meta_list; l_frame != NULL;
      l_frame = l_frame->next) {
    NvDsFrameMeta *frame_meta = (NvDsFrameMeta *) (l_frame->data);
    /* Reset the counters for every frame in the batch; otherwise counts from
     * earlier frames (other sources) are added to later ones. */
    guint num_rects = 0;
    guint vehicle_count = 0;
    guint person_count = 0;

    for (l_obj = frame_meta->obj_meta_list; l_obj != NULL;
        l_obj = l_obj->next) {
      obj_meta = (NvDsObjectMeta *) (l_obj->data);
      if (obj_meta->class_id == PGIE_CLASS_ID_VEHICLE) {
        vehicle_count++;
        num_rects++;
      }
      if (obj_meta->class_id == PGIE_CLASS_ID_PERSON) {
        person_count++;
        num_rects++;
      }
    }
    g_print ("Frame Number = %d Number of objects = %u "
        "Vehicle Count = %u Person Count = %u\n",
        frame_meta->frame_num, num_rects, vehicle_count, person_count);
#if 0
    /* Disabled: draw the counts on the frame through nvdsosd. */
    {
      int offset = 0;
      NvDsDisplayMeta *display_meta =
          nvds_acquire_display_meta_from_pool (batch_meta);
      NvOSD_TextParams *txt_params = &display_meta->text_params[0];

      display_meta->num_labels = 1;
      txt_params->display_text = g_malloc0 (MAX_DISPLAY_LEN);
      offset = snprintf (txt_params->display_text, MAX_DISPLAY_LEN,
          "Person = %u ", person_count);
      snprintf (txt_params->display_text + offset, MAX_DISPLAY_LEN - offset,
          "Vehicle = %u ", vehicle_count);
      /* Now set the offsets where the string should appear */
      txt_params->x_offset = 10;
      txt_params->y_offset = 12;
      /* Font, font-color and font-size */
      txt_params->font_params.font_name = "Serif";
      txt_params->font_params.font_size = 10;
      txt_params->font_params.font_color.red = 1.0;
      txt_params->font_params.font_color.green = 1.0;
      txt_params->font_params.font_color.blue = 1.0;
      txt_params->font_params.font_color.alpha = 1.0;
      /* Text background color */
      txt_params->set_bg_clr = 1;
      txt_params->text_bg_clr.red = 0.0;
      txt_params->text_bg_clr.green = 0.0;
      txt_params->text_bg_clr.blue = 0.0;
      txt_params->text_bg_clr.alpha = 1.0;
      nvds_add_display_meta_to_frame (frame_meta, display_meta);
    }
#endif
  }
  return GST_PAD_PROBE_OK;
}

static gboolean
bus_call (GstBus * bus, GstMessage * msg, gpointer data)
{
  GMainLoop *loop = (GMainLoop *) data;
  switch (GST_MESSAGE_TYPE (msg)) {
    case GST_MESSAGE_EOS:
      g_print ("End of stream\n");
      g_main_loop_quit (loop);
      break;
    case GST_MESSAGE_WARNING:
    {
      gchar *debug;
      GError *error;
      gst_message_parse_warning (msg, &error, &debug);
      g_printerr ("WARNING from element %s: %s\n",
          GST_OBJECT_NAME (msg->src), error->message);
      g_free (debug);
      g_printerr ("Warning: %s\n", error->message);
      g_error_free (error);
      break;
    }
    case GST_MESSAGE_ERROR:
    {
      gchar *debug;
      GError *error;
      gst_message_parse_error (msg, &error, &debug);
      g_printerr ("ERROR from element %s: %s\n",
          GST_OBJECT_NAME (msg->src), error->message);
      if (debug)
        g_printerr ("Error details: %s\n", debug);
      g_free (debug);
      g_error_free (error);
      g_main_loop_quit (loop);
      break;
    }
#ifndef PLATFORM_TEGRA
    case GST_MESSAGE_ELEMENT:
    {
      if (gst_nvmessage_is_stream_eos (msg)) {
        guint stream_id;
        if (gst_nvmessage_parse_stream_eos (msg, &stream_id)) {
          g_print ("Got EOS from stream %d\n", stream_id);
        }
      }
      break;
    }
#endif
    default:
      break;
  }
  return TRUE;
}

static void
cb_newpad (GstElement * decodebin, GstPad * decoder_src_pad, gpointer data)
{
  g_print ("In cb_newpad\n");
  GstCaps *caps = gst_pad_get_current_caps (decoder_src_pad);
  /* Current caps may not be set yet when the pad appears; fall back to a
   * caps query in that case. */
  if (!caps)
    caps = gst_pad_query_caps (decoder_src_pad, NULL);
  const GstStructure *str = gst_caps_get_structure (caps, 0);
  const gchar *name = gst_structure_get_name (str);
  GstElement *source_bin = (GstElement *) data;
  GstCapsFeatures *features = gst_caps_get_features (caps, 0);

  /* Need to check if the pad created by the decodebin is for video and not
   * audio. */
  if (!strncmp (name, "video", 5)) {
    /* Link the decodebin pad only if decodebin has picked nvidia
     * decoder plugin nvdec_*. We do this by checking if the pad caps contain
     * NVMM memory features. */
    if (gst_caps_features_contains (features, GST_CAPS_FEATURES_NVMM)) {
      /* Get the source bin ghost pad */
      GstPad *bin_ghost_pad = gst_element_get_static_pad (source_bin, "src");
      if (!gst_ghost_pad_set_target (GST_GHOST_PAD (bin_ghost_pad),
              decoder_src_pad)) {
        g_printerr ("Failed to link decoder src pad to source bin ghost pad\n");
      }
      gst_object_unref (bin_ghost_pad);
    } else {
      g_printerr ("Error: Decodebin did not pick nvidia decoder plugin.\n");
    }
  }
  /* Release the caps reference obtained above. */
  gst_caps_unref (caps);
}

static void
decodebin_child_added (GstChildProxy * child_proxy, GObject * object,
    gchar * name, gpointer user_data)
{
  g_print ("Decodebin child added: %s\n", name);
  if (g_strrstr (name, "decodebin") == name) {
    g_signal_connect (G_OBJECT (object), "child-added",
        G_CALLBACK (decodebin_child_added), user_data);
  }
}

static GstElement *
create_source_bin (guint index, gchar * uri)
{
  GstElement *bin = NULL, *uri_decode_bin = NULL;
  gchar bin_name[16] = { };

  g_snprintf (bin_name, 15, "source-bin-%02u", index);
  /* Create a source GstBin to abstract this bin's content from the rest of the
   * pipeline */
  bin = gst_bin_new (bin_name);

  /* Source element for reading from the uri.
   * We will use decodebin and let it figure out the container format of the
   * stream and the codec and plug the appropriate demux and decode plugins. */
  uri_decode_bin = gst_element_factory_make ("uridecodebin", "uri-decode-bin");

  if (!bin || !uri_decode_bin) {
    g_printerr ("One element in source bin could not be created.\n");
    return NULL;
  }

  /* We set the input uri to the source element */
  g_object_set (G_OBJECT (uri_decode_bin), "uri", uri, NULL);

  /* Connect to the "pad-added" signal of the decodebin which generates a
   * callback once a new pad for raw data has been created by the decodebin */
  g_signal_connect (G_OBJECT (uri_decode_bin), "pad-added",
      G_CALLBACK (cb_newpad), bin);
  g_signal_connect (G_OBJECT (uri_decode_bin), "child-added",
      G_CALLBACK (decodebin_child_added), bin);

  gst_bin_add (GST_BIN (bin), uri_decode_bin);

  /* We need to create a ghost pad for the source bin which will act as a proxy
   * for the video decoder src pad. The ghost pad will not have a target right
   * now. Once the decode bin creates the video decoder and generates the
   * cb_newpad callback, we will set the ghost pad target to the video decoder
   * src pad. */
  if (!gst_element_add_pad (bin, gst_ghost_pad_new_no_target ("src",
              GST_PAD_SRC))) {
    g_printerr ("Failed to add ghost pad in source bin\n");
    return NULL;
  }

  return bin;
}

/* Keys recognised in the [tracker] group of the tracker config file */
#define CONFIG_GROUP_TRACKER "tracker"
#define CONFIG_GROUP_TRACKER_WIDTH "tracker-width"
#define CONFIG_GROUP_TRACKER_HEIGHT "tracker-height"
#define CONFIG_GROUP_TRACKER_LL_CONFIG_FILE "ll-config-file"
#define CONFIG_GROUP_TRACKER_LL_LIB_FILE "ll-lib-file"
#define CONFIG_GROUP_TRACKER_ENABLE_BATCH_PROCESS "enable-batch-process"
#define CONFIG_GROUP_TRACKER_ENABLE_PAST_FRAME "enable-past-frame"
#define CONFIG_GROUP_TRACKER_TRACKING_SURFACE_TYPE "tracking-surface-type"
#define CONFIG_GROUP_TRACKER_DISPLAY_TRACKING_ID "display-tracking-id"
#define CONFIG_GPU_ID "gpu-id"

/* Print the GError and jump to the `done:` cleanup label of the caller. */
#define CHECK_ERROR(error) \
  do { \
    if (error) { \
      g_printerr ("Error while parsing config file: %s\n", (error)->message); \
      goto done; \
    } \
  } while (0)

/* Resolve file_path relative to the directory of cfg_file_path.
 * Takes ownership of file_path; the returned string is owned by the caller
 * (free it with g_free ()). Returns NULL if cfg_file_path cannot be resolved. */
static gchar *
get_absolute_file_path (gchar * cfg_file_path, gchar * file_path)
{
  gchar abs_cfg_path[PATH_MAX + 1];
  gchar *abs_file_path;
  gchar *delim;

  if (file_path && file_path[0] == '/') {
    return file_path;
  }

  if (!realpath (cfg_file_path, abs_cfg_path)) {
    g_free (file_path);
    return NULL;
  }

  /* Return absolute path of config file if file_path is NULL. */
  if (!file_path) {
    abs_file_path = g_strdup (abs_cfg_path);
    return abs_file_path;
  }

  delim = g_strrstr (abs_cfg_path, "/");
  *(delim + 1) = '\0';

  abs_file_path = g_strconcat (abs_cfg_path, file_path, NULL);
  g_free (file_path);

  return abs_file_path;
}

static gboolean
set_tracker_properties (GstElement * nvtracker, char *config_file_name)
{
  gboolean ret = FALSE;
  GError *error = NULL;
  gchar **keys = NULL;
  gchar **key = NULL;
  GKeyFile *key_file = g_key_file_new ();

  if (!g_key_file_load_from_file (key_file, config_file_name, G_KEY_FILE_NONE,
          &error)) {
    g_printerr ("Failed to load config file: %s\n", error->message);
    goto done;
  }

  keys = g_key_file_get_keys (key_file, CONFIG_GROUP_TRACKER, NULL, &error);
  CHECK_ERROR (error);

  for (key = keys; *key; key++) {
    if (!g_strcmp0 (*key, CONFIG_GROUP_TRACKER_WIDTH)) {
      gint width = g_key_file_get_integer (key_file, CONFIG_GROUP_TRACKER,
          CONFIG_GROUP_TRACKER_WIDTH, &error);
      CHECK_ERROR (error);
      g_object_set (G_OBJECT (nvtracker), "tracker-width", width, NULL);
    } else if (!g_strcmp0 (*key, CONFIG_GROUP_TRACKER_HEIGHT)) {
      gint height = g_key_file_get_integer (key_file, CONFIG_GROUP_TRACKER,
          CONFIG_GROUP_TRACKER_HEIGHT, &error);
      CHECK_ERROR (error);
      g_object_set (G_OBJECT (nvtracker), "tracker-height", height, NULL);
    } else if (!g_strcmp0 (*key, CONFIG_GPU_ID)) {
      guint gpu_id = g_key_file_get_integer (key_file, CONFIG_GROUP_TRACKER,
          CONFIG_GPU_ID, &error);
      CHECK_ERROR (error);
      g_object_set (G_OBJECT (nvtracker), "gpu_id", gpu_id, NULL);
    } else if (!g_strcmp0 (*key, CONFIG_GROUP_TRACKER_LL_CONFIG_FILE)) {
      char *ll_config_file = get_absolute_file_path (config_file_name,
          g_key_file_get_string (key_file, CONFIG_GROUP_TRACKER,
              CONFIG_GROUP_TRACKER_LL_CONFIG_FILE, &error));
      CHECK_ERROR (error);
      g_object_set (G_OBJECT (nvtracker), "ll-config-file", ll_config_file,
          NULL);
    } else if (!g_strcmp0 (*key, CONFIG_GROUP_TRACKER_LL_LIB_FILE)) {
      char *ll_lib_file = get_absolute_file_path (config_file_name,
          g_key_file_get_string (key_file, CONFIG_GROUP_TRACKER,
              CONFIG_GROUP_TRACKER_LL_LIB_FILE, &error));
      CHECK_ERROR (error);
      g_object_set (G_OBJECT (nvtracker), "ll-lib-file", ll_lib_file, NULL);
    } else if (!g_strcmp0 (*key, CONFIG_GROUP_TRACKER_ENABLE_BATCH_PROCESS)) {
      gboolean enable_batch_process =
          g_key_file_get_integer (key_file, CONFIG_GROUP_TRACKER,
          CONFIG_GROUP_TRACKER_ENABLE_BATCH_PROCESS, &error);
      CHECK_ERROR (error);
      g_object_set (G_OBJECT (nvtracker), "enable_batch_process",
          enable_batch_process, NULL);
    }
    //zyh: keys added on top of the original sample
    else if (!g_strcmp0 (*key, CONFIG_GROUP_TRACKER_ENABLE_PAST_FRAME)) {
      gboolean enable_past_frame =
          g_key_file_get_integer (key_file, CONFIG_GROUP_TRACKER,
          CONFIG_GROUP_TRACKER_ENABLE_PAST_FRAME, &error);
      CHECK_ERROR (error);
      g_object_set (G_OBJECT (nvtracker),
          CONFIG_GROUP_TRACKER_ENABLE_PAST_FRAME, enable_past_frame, NULL);
    } else if (!g_strcmp0 (*key, CONFIG_GROUP_TRACKER_TRACKING_SURFACE_TYPE)) {
      gint tracking_surface_type =
          g_key_file_get_integer (key_file, CONFIG_GROUP_TRACKER,
          CONFIG_GROUP_TRACKER_TRACKING_SURFACE_TYPE, &error);
      CHECK_ERROR (error);
      g_object_set (G_OBJECT (nvtracker),
          CONFIG_GROUP_TRACKER_TRACKING_SURFACE_TYPE, tracking_surface_type,
          NULL);
    } else if (!g_strcmp0 (*key, CONFIG_GROUP_TRACKER_DISPLAY_TRACKING_ID)) {
      gboolean display_tracking_id =
          g_key_file_get_integer (key_file, CONFIG_GROUP_TRACKER,
          CONFIG_GROUP_TRACKER_DISPLAY_TRACKING_ID, &error);
      CHECK_ERROR (error);
      g_object_set (G_OBJECT (nvtracker),
          CONFIG_GROUP_TRACKER_DISPLAY_TRACKING_ID, display_tracking_id, NULL);
    }
    //
    else {
      g_printerr ("Unknown key '%s' for group [%s]\n", *key,
          CONFIG_GROUP_TRACKER);
    }
  }

  ret = TRUE;
done:
  if (error) {
    g_error_free (error);
  }
  if (keys) {
    g_strfreev (keys);
  }
  g_key_file_free (key_file);
  if (!ret) {
    g_printerr ("%s failed\n", __func__);
  }
  return ret;
}

/* Start an RTSP server on rtsp_port_num whose /ds-test mount re-serves the
 * RTP stream that udpsink sends to udpsink_port_num.
 * Returns TRUE on success, FALSE otherwise.
 * Note: `server` is passed by value, so the caller's pointer is not updated
 * and the caller cannot tear this server down later. */
static GMutex server_cnt_lock;

gboolean
start_rtsp_streaming (GstRTSPServer * server, guint rtsp_port_num,
    guint udpsink_port_num, int enctype, guint64 udp_buffer_size)
{
  GstRTSPMountPoints *mounts;
  GstRTSPMediaFactory *factory;
  char udpsrc_pipeline[512];
  char port_num_Str[64] = { 0 };
  const char *encoder_name;

  if (enctype == 1) {
    encoder_name = "H264";
  } else if (enctype == 2) {
    encoder_name = "H265";
  } else {
    g_printerr ("%s failed: unsupported enctype %d\n", __func__, enctype);
    return FALSE;
  }

  if (udp_buffer_size == 0)
    udp_buffer_size = 512 * 1024;

  /* guint64 needs G_GUINT64_FORMAT; %lu is wrong on 32-bit targets. */
  g_snprintf (udpsrc_pipeline, sizeof (udpsrc_pipeline),
      "( udpsrc name=pay0 port=%u buffer-size=%" G_GUINT64_FORMAT
      " caps=\"application/x-rtp, media=video, "
      "clock-rate=90000, encoding-name=%s, payload=96 \" )",
      udpsink_port_num, udp_buffer_size, encoder_name);
  g_snprintf (port_num_Str, sizeof (port_num_Str), "%u", rtsp_port_num);

  g_mutex_lock (&server_cnt_lock);
  server = gst_rtsp_server_new ();
  g_object_set (server, "service", port_num_Str, NULL);
  mounts = gst_rtsp_server_get_mount_points (server);
  factory = gst_rtsp_media_factory_new ();
  gst_rtsp_media_factory_set_launch (factory, udpsrc_pipeline);
  gst_rtsp_mount_points_add_factory (mounts, "/ds-test", factory);
  g_object_unref (mounts);
  /* gst_rtsp_server_attach () returns 0 on failure, e.g. port in use. */
  if (gst_rtsp_server_attach (server, NULL) == 0) {
    g_mutex_unlock (&server_cnt_lock);
    g_printerr ("%s failed: could not attach RTSP server on port %u\n",
        __func__, rtsp_port_num);
    g_object_unref (server);
    return FALSE;
  }
  g_mutex_unlock (&server_cnt_lock);

  g_print ("\n *** DeepStream: Launched RTSP Streaming at "
      "rtsp://localhost:%u/ds-test ***\n\n", rtsp_port_num);
  return TRUE;
}

static GstRTSPFilterResult
client_filter (GstRTSPServer * server, GstRTSPClient * client,
    gpointer user_data)
{
  return GST_RTSP_FILTER_REMOVE;
}

/* Remove the /ds-test mount, disconnect all clients and clean up sessions.
 * Not called from main () in this program. */
void
destroy_rtsp_sink_bin (GstRTSPServer * server)
{
  GstRTSPMountPoints *mounts;
  GstRTSPSessionPool *pool;

  mounts = gst_rtsp_server_get_mount_points (server);
  gst_rtsp_mount_points_remove_factory (mounts, "/ds-test");
  g_object_unref (mounts);
  gst_rtsp_server_client_filter (server, client_filter, NULL);
  pool = gst_rtsp_server_get_session_pool (server);
  gst_rtsp_session_pool_cleanup (pool);
  g_object_unref (pool);
}

int
main (int argc, char *argv[])
{
  GMainLoop *loop = NULL;
  GstElement *pipeline = NULL, *streammux = NULL, *sink = NULL, *pgie = NULL,
      *queue1, *queue2, *queue3, *queue4, *queue5, *nvvidconv = NULL,
      *nvosd = NULL, *tiler = NULL;
  GstElement *transform = NULL;
  GstBus *bus = NULL;
  guint bus_watch_id;
  GstPad *pgie_src_pad = NULL;
  guint i, num_sources;
  guint tiler_rows, tiler_columns;
  guint pgie_batch_size;
  //zyh
  GstElement *tracker = NULL;
  GstElement *nvvidconv1 = NULL;
  GstElement *capfilt = NULL;
  GstCaps *caps = NULL;
  GstElement *nvh264enc = NULL;
  GstElement *parser = NULL;
  GstElement *rtppay = NULL;
  /* Output type: 1 = H.264 file, 2 = fakesink, 3 = on-screen window (EGL),
   * 4 = RTSP (udpsink + RTSP server). Edit and rebuild to switch. */
  const int sinkType = 4;
  /* sinkType 1 writes the encoder output as-is, i.e. a raw H.264 elementary
   * stream; add h264parse and a muxer such as qtmux before filesink if a real
   * MP4 container is required. */
  char *outFilename = (char *) "output.h264";
  //int inputUriVideoWidth = pipeline_msg->inputUriVideoWidth;
  //int inputUriVideoHeight = pipeline_msg->inputUriVideoHeight;
  int rtspPort = 8554;
  int udpPort = 5400;
  GstRTSPServer *rtspServer1 = NULL;
  char *property_config_file = (char *) "property_config.txt";
  char *tracker_config_file = (char *) "tracker_config.txt";
  int current_device = -1;
  struct cudaDeviceProp prop;

  cudaGetDevice (&current_device);
  cudaGetDeviceProperties (&prop, current_device);

  /* Check input arguments */
  if (argc < 2) {
    g_printerr ("Usage: %s <uri1> [uri2] ... [uriN] \n", argv[0]);
    return -1;
  }
  num_sources = argc - 1;

  /* Standard GStreamer initialization */
  gst_init (&argc, &argv);
  loop = g_main_loop_new (NULL, FALSE);

  /* Create gstreamer elements */
  /* Create Pipeline element that will form a connection of other elements */
  pipeline = gst_pipeline_new ("dstest3-pipeline");

  /* Create nvstreammux instance to form batches from one or more sources. */
  streammux = gst_element_factory_make ("nvstreammux", "stream-muxer");

  if (!pipeline || !streammux) {
    g_printerr ("One element could not be created. Exiting.\n");
    return -1;
  }
  gst_bin_add (GST_BIN (pipeline), streammux);

  for (i = 0; i < num_sources; i++) {
    GstPad *sinkpad, *srcpad;
    gchar pad_name[16] = { };
    GstElement *source_bin = create_source_bin (i, argv[i + 1]);

    if (!source_bin) {
      g_printerr ("Failed to create source bin. Exiting.\n");
      return -1;
    }
    gst_bin_add (GST_BIN (pipeline), source_bin);

    g_snprintf (pad_name, 15, "sink_%u", i);
    sinkpad = gst_element_get_request_pad (streammux, pad_name);
    if (!sinkpad) {
      g_printerr ("Streammux request sink pad failed. Exiting.\n");
      return -1;
    }

    srcpad = gst_element_get_static_pad (source_bin, "src");
    if (!srcpad) {
      g_printerr ("Failed to get src pad of source bin. Exiting.\n");
      return -1;
    }

    if (gst_pad_link (srcpad, sinkpad) != GST_PAD_LINK_OK) {
      g_printerr ("Failed to link source bin to stream muxer. Exiting.\n");
      return -1;
    }

    gst_object_unref (srcpad);
    gst_object_unref (sinkpad);
  }

  /* Use nvinfer to run primary inference on the batched frames. */
  pgie = gst_element_factory_make ("nvinfer", "primary-nvinference-engine");

  //zyh: object tracker
  tracker = gst_element_factory_make ("nvtracker", "nvtracker");

  /* Add queue elements between every two elements */
  queue1 = gst_element_factory_make ("queue", "queue1");
  queue2 = gst_element_factory_make ("queue", "queue2");
  queue3 = gst_element_factory_make ("queue", "queue3");
  queue4 = gst_element_factory_make ("queue", "queue4");
  queue5 = gst_element_factory_make ("queue", "queue5");

  /* Use nvtiler to composite the batched frames into a 2D tiled array based
   * on the source of the frames. */
  tiler = gst_element_factory_make ("nvmultistreamtiler", "nvtiler");

  /* Use convertor to convert from NV12 to RGBA as required by nvosd */
  nvvidconv = gst_element_factory_make ("nvvideoconvert", "nvvideo-converter");

  /* Create OSD to draw on the converted RGBA buffer */
  nvosd = gst_element_factory_make ("nvdsosd", "nv-onscreendisplay");

  //zyh: converter, caps filter and hardware H.264 encoder (sinkType 1 and 4)
  if (sinkType == 1 || sinkType == 4) {
    nvvidconv1 = gst_element_factory_make ("nvvideoconvert", "nvvid-converter1");
    capfilt = gst_element_factory_make ("capsfilter", "nvvideo-caps");
    nvh264enc = gst_element_factory_make ("nvv4l2h264enc", "nvvideo-h264enc");
    if (!nvvidconv1 || !capfilt || !nvh264enc) {
      g_printerr ("Encoder elements could not be created. Exiting.\n");
      return -1;
    }
  }

  /* Finally render the osd output */
  if (sinkType == 1) {
    sink = gst_element_factory_make ("filesink", "nvvideo-renderer");
  } else if (sinkType == 2) {
    sink = gst_element_factory_make ("fakesink", "fake-renderer");
  } else if (sinkType == 3) {
    /* On Jetson (integrated GPU), nveglglessink needs nvegltransform. */
    if (prop.integrated) {
      transform = gst_element_factory_make ("nvegltransform", "nvegl-transform");
      if (!transform) {
        g_printerr ("One tegra element could not be created. Exiting.\n");
        return -1;
      }
    }
    sink = gst_element_factory_make ("nveglglessink", "nvvideo-renderer");
  } else if (sinkType == 4) {
    parser = gst_element_factory_make ("h264parse", "h264-parser");
    rtppay = gst_element_factory_make ("rtph264pay", "rtp-payer");
    sink = gst_element_factory_make ("udpsink", "udp-sink");
    if (!parser || !rtppay) {
      g_printerr ("RTSP output elements could not be created. Exiting.\n");
      return -1;
    }
  }
  //sink = gst_element_factory_make ("nveglglessink", "nvvideo-renderer");

  if (!pgie || !tracker || !queue1 || !queue2 || !queue3 || !queue4 || !queue5
      || !tiler || !nvvidconv || !nvosd || !sink) {
    g_printerr ("One element could not be created. Exiting.\n");
    return -1;
  }

  g_object_set (G_OBJECT (streammux), "batch-size", num_sources, NULL);
  g_object_set (G_OBJECT (streammux), "width", MUXER_OUTPUT_WIDTH, "height",
      MUXER_OUTPUT_HEIGHT,
      "batched-push-timeout", MUXER_BATCH_TIMEOUT_USEC, NULL);

  /* Configure the nvinfer element using the nvinfer config file. */
  g_object_set (G_OBJECT (pgie), "config-file-path", property_config_file, NULL);
  //g_object_set (G_OBJECT (pgie), "config-file-path", "dstest3_pgie_config.txt", "unique-id", 1, NULL);

  /* Override the batch-size set in the config file with the number of sources. */
  g_object_get (G_OBJECT (pgie), "batch-size", &pgie_batch_size, NULL);
  if (pgie_batch_size != num_sources) {
    g_printerr
        ("WARNING: Overriding infer-config batch-size (%u) with number of sources (%u)\n",
        pgie_batch_size, num_sources);
    g_object_set (G_OBJECT (pgie), "batch-size", num_sources, NULL);
  }

  //zyh: configure nvtracker from the tracker config file
  if (!set_tracker_properties (tracker, tracker_config_file)) {
    g_printerr ("Failed to set tracker properties. Exiting.\n");
    return -1;
  }

  tiler_rows = (guint) sqrt (num_sources);
  tiler_columns = (guint) ceil (1.0 * num_sources / tiler_rows);
  /* we set the tiler properties here */
  g_object_set (G_OBJECT (tiler), "rows", tiler_rows, "columns", tiler_columns,
      "width", TILED_OUTPUT_WIDTH, "height", TILED_OUTPUT_HEIGHT, NULL);

  // g_object_set (G_OBJECT (nvosd), "process-mode", OSD_PROCESS_MODE, "display-text", OSD_DISPLAY_TEXT, NULL);
  /* display-text is forced to 1 here (instead of OSD_DISPLAY_TEXT) so that
   * labels are drawn on the output. */
  g_object_set (G_OBJECT (nvosd), "process-mode", OSD_PROCESS_MODE,
      "display-text", 1, NULL);

  //zyh: the hardware encoder takes I420 frames in NVMM (GPU) memory
  if (capfilt) {
    caps = gst_caps_from_string ("video/x-raw(memory:NVMM), format=I420");
    g_object_set (G_OBJECT (capfilt), "caps", caps, NULL);
    gst_caps_unref (caps);
  }

  /* we add a message handler */
  bus = gst_pipeline_get_bus (GST_PIPELINE (pipeline));
  bus_watch_id = gst_bus_add_watch (bus, bus_call, loop);
  gst_object_unref (bus);

  /* Set up the pipeline */
  /* we add all elements into the pipeline; the sink is added now and linked
   * further below, depending on sinkType. zyh: the queues are optional. */
  gst_bin_add_many (GST_BIN (pipeline), queue1, pgie, queue2, tracker, queue3,
      tiler, queue4, nvvidconv, queue5, nvosd, sink, NULL);
  /* we link the elements together, with a queue between each pair:
   * nvstreammux -> nvinfer -> nvtracker -> nvtiler -> nvvidconv -> nvosd */
  if (!gst_element_link_many (streammux, queue1, pgie, queue2, tracker, queue3,
          tiler, queue4, nvvidconv, queue5, nvosd, NULL)) {
    g_printerr ("Elements could not be linked. Exiting.\n");
    return -1;
  }

  g_object_set (G_OBJECT (sink), "qos", 0, NULL);

  //zyh: link nvosd to the selected output
  if (sinkType == 1) {
    g_object_set (G_OBJECT (sink), "location", outFilename, NULL);
    gst_bin_add_many (GST_BIN (pipeline), nvvidconv1, capfilt, nvh264enc, NULL);
    if (!gst_element_link_many (nvosd, nvvidconv1, capfilt, nvh264enc, sink,
            NULL)) {
      g_printerr ("OSD and sink elements link failure.\n");
      return -1;
    }
  } else if (sinkType == 2) {
    g_object_set (G_OBJECT (sink), "sync", FALSE, "async", FALSE, NULL);
    if (!gst_element_link (nvosd, sink)) {
      g_printerr ("OSD and sink elements link failure.\n");
      return -1;
    }
  } else if (sinkType == 3) {
    if (transform) {
      gst_bin_add (GST_BIN (pipeline), transform);
      if (!gst_element_link_many (nvosd, transform, sink, NULL)) {
        g_printerr ("OSD and sink elements link failure.\n");
        return -1;
      }
    } else {
      if (!gst_element_link (nvosd, sink)) {
        g_printerr ("OSD and sink elements link failure.\n");
        return -1;
      }
    }
  } else if (sinkType == 4) {
    g_object_set (G_OBJECT (nvh264enc), "bitrate", 4000000, NULL);
    g_object_set (G_OBJECT (nvh264enc), "profile", 0, NULL);
    g_object_set (G_OBJECT (nvh264enc), "iframeinterval", 30, NULL);
    g_object_set (G_OBJECT (nvh264enc), "insert-sps-pps", 1, NULL);
    /* preset-level and bufapi-version are Jetson properties of
     * nvv4l2h264enc; guard them so a dGPU build does not warn about
     * unknown properties. */
    if (prop.integrated) {
      g_object_set (G_OBJECT (nvh264enc), "preset-level", 1, NULL);
      g_object_set (G_OBJECT (nvh264enc), "bufapi-version", 1, NULL);
    }
    g_object_set (G_OBJECT (sink), "host", "127.0.0.1", "port", udpPort,
        "async", FALSE, "sync", FALSE, NULL);
    gst_bin_add_many (GST_BIN (pipeline), nvvidconv1, capfilt, nvh264enc,
        parser, rtppay, NULL);
    if (!gst_element_link_many (nvosd, nvvidconv1, capfilt, nvh264enc, parser,
            rtppay, sink, NULL)) {
      g_printerr ("OSD and RTSP sink elements link failure.\n");
      return -1;
    }
    if (!start_rtsp_streaming (rtspServer1, rtspPort, udpPort, 1, 512 * 1024)) {
      g_printerr ("%s: start_rtsp_streaming function failed\n", __func__);
      return -1;
    }
  }

  /* Print per-frame object counts from a buffer probe. The probe sits on the
   * src pad of nvinfer, so it sees detections but not tracker IDs; attach it
   * to the tracker's src pad instead if object_id is needed. */
  pgie_src_pad = gst_element_get_static_pad (pgie, "src");
  if (!pgie_src_pad) {
    g_print ("Unable to get src pad\n");
  } else {
    gst_pad_add_probe (pgie_src_pad, GST_PAD_PROBE_TYPE_BUFFER,
        tiler_src_pad_buffer_probe, NULL, NULL);
    gst_object_unref (pgie_src_pad);
  }

  /* Set the pipeline to "playing" state */
  g_print ("Now playing:");
  for (i = 0; i < num_sources; i++) {
    g_print (" %s,", argv[i + 1]);
  }
  g_print ("\n");
  gst_element_set_state (pipeline, GST_STATE_PLAYING);

  /* Wait till pipeline encounters an error or EOS */
  g_print ("Running...\n");
  g_main_loop_run (loop);

  /* Out of the main loop, clean up nicely */
  g_print ("Returned, stopping playback\n");
  gst_element_set_state (pipeline, GST_STATE_NULL);
  g_print ("Deleting pipeline\n");
  gst_object_unref (GST_OBJECT (pipeline));
  g_source_remove (bus_watch_id);
  g_main_loop_unref (loop);
  return 0;
}

- Makefile:

################################################################################
# Copyright (c) 2019-2020, NVIDIA CORPORATION. All rights reserved.
#
# Permission is hereby granted, free of charge, to any person obtaining a
# copy of this software and associated documentation files (the "Software"),
# to deal in the Software without restriction, including without limitation
# the rights to use, copy, modify, merge, publish, distribute, sublicense,
# and/or sell copies of the Software, and to permit persons to whom the
# Software is furnished to do so, subject to the following conditions:
#
# The above copyright notice and this permission notice shall be included in
# all copies or substantial portions of the Software.
#
# THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR
# IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,
# FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL
# THE AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER
# LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING
# FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER
# DEALINGS IN THE SOFTWARE.
################################################################################

#CUDA_VER?=
#ifeq ($(CUDA_VER),)
#  $(error "CUDA_VER is not set")
#endif

APP:= deepstream-test3-app

TARGET_DEVICE = $(shell gcc -dumpmachine | cut -f1 -d -)

#NVDS_VERSION:=5.1

LIB_INSTALL_DIR?=/opt/nvidia/deepstream/deepstream/lib/
APP_INSTALL_DIR?=/opt/nvidia/deepstream/deepstream/bin/

ifeq ($(TARGET_DEVICE),aarch64)
CFLAGS:= -DPLATFORM_TEGRA
endif

SRCS:= $(wildcard *.c)

INCS:= $(wildcard *.h)

PKGS:= gstreamer-1.0

OBJS:= $(SRCS:.c=.o)

# The last line of this list must not end with a backslash, otherwise the
# next assignment is swallowed into CFLAGS.
CFLAGS+= -I /opt/nvidia/deepstream/deepstream/sources/includes \
         -I /opt/nvidia/deepstream/deepstream/sources/apps/apps-common/includes \
         -I /usr/local/cuda/include \
         -I /usr/include/gstreamer-1.0/ \
         -I /usr/include/glib-2.0/ \
         -I /usr/lib/aarch64-linux-gnu/glib-2.0/include/

CFLAGS+= $(shell pkg-config --cflags $(PKGS))

LIBS:= $(shell pkg-config --libs $(PKGS))

LIBS+= -L/usr/local/cuda/lib64/ -lcudart -lnvdsgst_helper -lm -lgstrtspserver-1.0 \
       -L$(LIB_INSTALL_DIR) -lnvdsgst_meta -lnvds_meta \
       -lcuda -Wl,-rpath,$(LIB_INSTALL_DIR)

all: $(APP)

%.o: %.c $(INCS) Makefile
	$(CC) -c -o $@ $(CFLAGS) $<

$(APP): $(OBJS) Makefile
	$(CC) -o $(APP) $(OBJS) $(LIBS)

install: $(APP)
	cp -rv $(APP) $(APP_INSTALL_DIR)

clean:
	rm -rf $(OBJS) $(APP)
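The Makefile adds -DPLATFORM_TEGRA when `gcc -dumpmachine` reports an aarch64 target. The listing uses that macro to skip gst-nvmessage.h on Jetson. The same rule can be checked from C with the compiler's predefined architecture macros. The sketch below is illustrative only; the names `target_platform` and `uses_nvmessage` are not part of the project. Like the Makefile, it assumes every aarch64 build is a Jetson build, which does not hold for ARM servers with discrete GPUs.

```c
#include <assert.h>
#include <string.h>

/* Illustrative: classify the compile target with the same rule as the
 * Makefile (aarch64 => Jetson/Tegra build). */
static const char *
target_platform (void)
{
#if defined(__aarch64__)
  return "tegra";               /* the Makefile would add -DPLATFORM_TEGRA */
#elif defined(__x86_64__)
  return "dgpu";                /* x86_64 host, e.g. the DeepStream docker image */
#else
  return "unknown";
#endif
}

/* Returns 1 if the listing's #ifndef PLATFORM_TEGRA blocks (gst-nvmessage.h,
 * per-stream EOS handling in bus_call) would be compiled in. */
static int
uses_nvmessage (void)
{
  const char *p = target_platform ();

  assert (p != NULL);
  return strcmp (p, "tegra") != 0;
}
```

On an x86_64 GPU server this reports `dgpu`, and the per-stream EOS branch in bus_call is compiled in.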