1. Training code

Deploying a PyTorch model directly is cumbersome, so in practice it is usually more convenient to export it to ONNX and MNN.

Code for training the model:

import torch
import torch.nn as nn
import torch.nn.functional as F
import torch.onnx
import torch.optim as optim
import torchvision
import torchvision.transforms as transforms
from torch.optim import lr_scheduler


class FashionMNIST(nn.Module):
    def __init__(self, num_classes=10):
        super().__init__()
        self.conv1 = nn.Conv2d(in_channels=1, out_channels=16, kernel_size=3, stride=1, padding=1)
        self.conv2 = nn.Conv2d(in_channels=16, out_channels=32, kernel_size=3, stride=1, padding=1)
        self.conv3 = nn.Conv2d(in_channels=32, out_channels=64, kernel_size=3, stride=1, padding=1)
        self.pool = nn.MaxPool2d(kernel_size=2)
        self.drop = nn.Dropout(0.2)
        # Three 2x2 poolings shrink the 28x28 input to 3x3 (28 -> 14 -> 7 -> 3).
        self.fc1 = nn.Linear(in_features=64 * 3 * 3, out_features=128)
        self.fc2 = nn.Linear(in_features=128, out_features=num_classes)

    def forward(self, x):
        x = self.pool(F.relu(self.conv1(x)))
        x = self.pool(F.relu(self.conv2(x)))
        x = self.pool(F.relu(self.conv3(x)))
        x = self.drop(x)
        # Flatten once; the original view() followed by nn.Flatten() was redundant.
        x = torch.flatten(x, 1)
        x = self.fc1(x)
        x = self.fc2(x)
        return x


if __name__ == '__main__':
    # transforms
    transform = transforms.Compose([transforms.ToTensor(),
                                    transforms.Normalize((0.5,), (0.5,))])

    # datasets
    trainset = torchvision.datasets.FashionMNIST('data', download=True, train=True, transform=transform)
    testset = torchvision.datasets.FashionMNIST('data', download=True, train=False, transform=transform)
    trainloader = torch.utils.data.DataLoader(trainset, batch_size=1,
                                              shuffle=True, num_workers=2)
    testloader = torch.utils.data.DataLoader(testset, batch_size=1,
                                             shuffle=False, num_workers=2)
    classes = ('T-shirt/top', 'Trouser', 'Pullover', 'Dress', 'Coat',
               'Sandal', 'Shirt', 'Sneaker', 'Bag', 'Ankle Boot')
    print('trainloader--------', trainloader)

    model = FashionMNIST().to(device='cpu')
    optimizer = optim.SGD(model.parameters(), lr=0.001, momentum=0.9)
    criterion = nn.CrossEntropyLoss()
    exp_lr_scheduler = lr_scheduler.StepLR(optimizer, step_size=7, gamma=0.1)

    for epoch in range(1):
        for index, data in enumerate(trainloader):
            inputs, labels = data
            inputs = inputs.to('cpu')
            labels = labels.to('cpu')
            optimizer.zero_grad()
            outputs = model(inputs)
            loss = criterion(outputs, labels)
            loss.backward()
            optimizer.step()
            if index % 1000 == 0:
                print(index, loss.item())
        # Advance the learning-rate schedule once per epoch.
        exp_lr_scheduler.step()

    torch.save(model, 'FashionMNIST.pth')

    # Switch to inference mode (disables dropout) before exporting.
    model.eval()
    x = torch.randn(1, 1, 28, 28)
    # No dynamic_axes: the exported graph keeps a fixed 1x1x28x28 input shape.
    torch.onnx.export(model, x, "FashionMNIST.onnx", export_params=True, opset_version=10,
                      do_constant_folding=True, input_names=['input'], output_names=['output'])
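The `64 * 3 * 3` input size of `fc1` follows from the layer settings above. As a rough check, the sketch below traces the spatial size through the three conv + pool stages using the standard output-size formulas. The helper names `conv2d_out` and `maxpool2d_out` are made up for this illustration and are not part of PyTorch.

```python
def conv2d_out(size, kernel_size, stride=1, padding=0):
    """Output size of a convolution along one spatial dimension."""
    return (size + 2 * padding - kernel_size) // stride + 1


def maxpool2d_out(size, kernel_size):
    """Output size of max pooling with stride == kernel_size and no padding."""
    return (size - kernel_size) // kernel_size + 1


size = 28  # FashionMNIST images are 28x28
trace = [size]
for _ in range(3):  # three conv + pool stages, as in the model above
    size = conv2d_out(size, kernel_size=3, stride=1, padding=1)  # 3x3 conv with padding 1 keeps the size
    size = maxpool2d_out(size, kernel_size=2)                    # 2x2 pooling halves it, rounding down
    trace.append(size)

flat_features = 64 * size * size  # conv3 has 64 output channels
print(trace, flat_features)
```

If the input resolution or any kernel/padding setting changes, rerunning this kind of trace gives the new value to use for `in_features`.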

2. Converting to an MNN model

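The training script saves an ONNX model when it finishes. A PyTorch model cannot be converted to MNN directly; it has to be exported to ONNX first and then converted to MNN.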

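Note that when saving the ONNX model with torch.onnx.export, it is best not to pass dynamic_axes. By default the export fixes the input shape, and a dynamic input shape makes the MNN model more awkward to call later.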

Then convert the ONNX model to an MNN model:

mnnconvert -f ONNX --modelFile FashionMNIST.onnx --MNNModel FashionMNIST.mnn

3. Verifying the ONNX and MNN models

import MNN
import numpy as np
import onnxruntime

if __name__ == '__main__':
    # All-ones dummy input in NCHW layout.
    x = np.ones([1, 1, 28, 28]).astype(np.float32)

    # ONNX Runtime
    session = onnxruntime.InferenceSession("FashionMNIST.onnx")
    inputs = {session.get_inputs()[0].name: x}
    outs = session.run(None, inputs)
    print('onnx result is:', outs)

    # MNN
    interpreter = MNN.Interpreter("FashionMNIST.mnn")
    print("mnn load")
    mnn_session = interpreter.createSession()
    input_tensor = interpreter.getSessionInput(mnn_session)
    print(input_tensor.getShape())
    # The model comes from ONNX, so the data is NCHW; MNN calls this layout
    # Tensor_DimensionType_Caffe. Pass the full 4-D array so it matches the shape.
    tmp_input = MNN.Tensor((1, 1, 28, 28), MNN.Halide_Type_Float,
                           x, MNN.Tensor_DimensionType_Caffe)
    print(tmp_input.getShape())
    print(input_tensor.copyFrom(tmp_input))
    input_tensor.printTensorData()

    interpreter.runSession(mnn_session)
    output_tensor = interpreter.getSessionOutput(mnn_session, 'output')
    output_tensor.printTensorData()
    output_data = np.array(output_tensor.getData())
    print('mnn result is:', output_data)

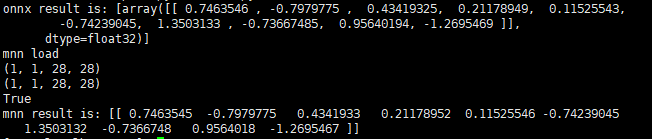

运行结果:
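Comparing two printed arrays by eye is easy to get wrong, since different runtimes rarely agree to the last floating-point digit. A tolerance-based check is one way to make the comparison explicit. The sketch below is only an illustration: `outputs_match` is a hypothetical helper, the tolerances are arbitrary starting points, and the sample arrays are placeholders rather than real model outputs.

```python
import numpy as np


def outputs_match(reference, candidate, rtol=1e-3, atol=1e-5):
    """Return (match, max_abs_diff) for two model outputs of the same size."""
    reference = np.asarray(reference, dtype=np.float32).ravel()
    candidate = np.asarray(candidate, dtype=np.float32).ravel()
    if reference.shape != candidate.shape:
        return False, float("inf")
    max_abs_diff = float(np.max(np.abs(reference - candidate)))
    match = bool(np.allclose(candidate, reference, rtol=rtol, atol=atol))
    return match, max_abs_diff


# Placeholder values for illustration only, not real ONNX or MNN outputs.
onnx_like = np.array([[0.10, -1.20, 3.40]], dtype=np.float32)
mnn_like = onnx_like + 1e-6

ok, diff = outputs_match(onnx_like, mnn_like)
print(ok, diff)
```

In the verification script above, this could be called as `outputs_match(outs[0], output_data)`.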

4. Calling the MNN model from C++

#include <iostream>
#include <memory>
#include <MNN/Interpreter.hpp>

int main()
{
    // Create the interpreter from the converted model file.
    auto net = std::shared_ptr<MNN::Interpreter>(MNN::Interpreter::createFromFile("FashionMNIST.mnn"));
    std::cout << "Interpreter created" << std::endl;

    MNN::ScheduleConfig config;
    config.numThread = 8;
    config.type = MNN_FORWARD_CPU;
    auto session = net->createSession(config);  // create the session
    std::cout << "session created" << std::endl;

    auto inTensor = net->getSessionInput(session, NULL);

    // Host-side input in NCHW layout (MNN's CAFFE dimension type), filled with ones.
    std::unique_ptr<MNN::Tensor> hostInput(
        MNN::Tensor::create<float>(inTensor->shape(), NULL, MNN::Tensor::CAFFE));
    for (int i = 0; i < hostInput->elementSize(); i++) {
        hostInput->host<float>()[i] = 1.f;
    }
    inTensor->copyFromHostTensor(hostInput.get());

    // Run inference.
    net->runSession(session);

    // Copy the output back to a host tensor and print it.
    auto output = net->getSessionOutput(session, NULL);
    MNN::Tensor feat_tensor(output, output->getDimensionType());
    output->copyToHostTensor(&feat_tensor);
    feat_tensor.print();

    return 0;
}

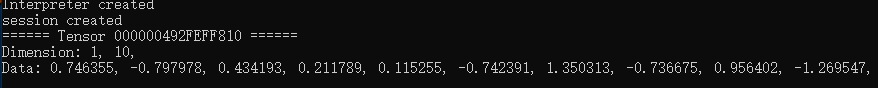

运行结果:

结果和python调用一致
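One caveat about the all-ones test input used in these checks: it cannot reveal a wrong tensor layout, because every ordering of a constant array looks the same in memory. The sketch below illustrates this with NumPy, using a made-up 3-channel 2x2 tensor (the real model has a single channel) so that the difference between channel-first (NCHW) and channel-last (NHWC) ordering is visible.

```python
import numpy as np

# Hypothetical 2x2 image with 3 channels, stored channel-first (NCHW).
nchw = np.arange(1 * 3 * 2 * 2, dtype=np.float32).reshape(1, 3, 2, 2)
# The same image rearranged to channel-last (NHWC) memory order.
nhwc = np.ascontiguousarray(nchw.transpose(0, 2, 3, 1))

# Same set of values, but a different order in memory.
same_values = np.array_equal(np.sort(nchw.ravel()), np.sort(nhwc.ravel()))
same_memory_order = np.array_equal(nchw.ravel(), nhwc.ravel())

# With a constant tensor the two layouts are indistinguishable.
ones = np.ones((1, 3, 2, 2), dtype=np.float32)
ones_nhwc = np.ascontiguousarray(ones.transpose(0, 2, 3, 1))
constant_hides_layout = np.array_equal(ones.ravel(), ones_nhwc.ravel())

print(same_values, same_memory_order, constant_hides_layout)
```

For a stricter check, it may be worth repeating the comparison with a non-constant input, such as a random array or a real test image.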

5. Calling the ONNX model from C++

#include <onnxruntime_cxx_api.h>
#include <algorithm>
#include <array>
#include <iostream>

static constexpr int width_ = 28;
static constexpr int height_ = 28;
static constexpr int channel = 1;

// Input buffer and its NCHW shape.
std::array<float, width_ * height_ * channel> input_image_{};
std::array<int64_t, 4> input_shape_{1, channel, height_, width_};

int main() {
    Ort::Env env{ORT_LOGGING_LEVEL_WARNING, "test"};

    Ort::SessionOptions session_option;
    session_option.SetIntraOpNumThreads(1);
    session_option.SetGraphOptimizationLevel(ORT_ENABLE_BASIC);
    // The wide-string path is what the Windows build expects;
    // on Linux/macOS pass a plain char string instead.
    Ort::Session session_(env, L"FashionMNIST.onnx", session_option);
    std::cout << "session created successfully" << std::endl;

    // Fill the input with ones, then wrap the buffer in a tensor (no copy is made).
    std::fill(input_image_.begin(), input_image_.end(), 1.f);
    auto memory_info = Ort::MemoryInfo::CreateCpu(OrtDeviceAllocator, OrtMemTypeCPU);
    Ort::Value input_tensor_ = Ort::Value::CreateTensor<float>(
        memory_info, input_image_.data(), input_image_.size(),
        input_shape_.data(), input_shape_.size());

    const char* input_names[] = {"input"};
    const char* output_names[] = {"output"};

    // Run inference; an empty Ort::Value lets ONNX Runtime allocate the output.
    Ort::Value output_tensor_{nullptr};
    session_.Run(Ort::RunOptions{nullptr}, input_names, &input_tensor_, 1,
                 output_names, &output_tensor_, 1);

    // Print the 10 class scores.
    for (int i = 0; i < 10; i++) {
        std::cout << output_tensor_.GetTensorMutableData<float>()[i] << std::endl;
    }
    return 0;
}

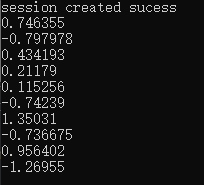

运行结果:

结果一致
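All of the callers above print the ten raw scores (logits) produced by the final linear layer. To turn them into a prediction, a common approach is to take the index of the largest score and look it up in the class list from the training script. The sketch below assumes that approach; `softmax` and `decode` are hypothetical helpers, and `example_logits` is invented for illustration rather than taken from a real run.

```python
import numpy as np

# Class names in label order, as listed in the training script.
CLASSES = ('T-shirt/top', 'Trouser', 'Pullover', 'Dress', 'Coat',
           'Sandal', 'Shirt', 'Sneaker', 'Bag', 'Ankle Boot')


def softmax(logits):
    """Convert raw scores to probabilities in a numerically stable way."""
    logits = np.asarray(logits, dtype=np.float64)
    exp = np.exp(logits - logits.max())  # subtract the max to avoid overflow
    return exp / exp.sum()


def decode(logits):
    """Return (class name, probability) for the highest-scoring class."""
    probs = softmax(np.ravel(logits))
    index = int(np.argmax(probs))
    return CLASSES[index], float(probs[index])


# Invented scores for illustration only, not a real model output.
example_logits = [0.1, -2.0, 0.3, 0.0, 0.2, -1.0, 0.5, -0.5, 4.0, -3.0]
label, confidence = decode(example_logits)
print(label, confidence)
```

The softmax step is only needed if a probability is wanted; the predicted class is the same with or without it.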