Day 4 of following Chuanchuan's web-scraping check-in challenge, and I've already learned a lot!!!


I. Introduction

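In my previous two articles I covered the basics and the advanced usage of requests in detail, with plenty of examples. Building on those two, this article is devoted entirely to hands-on practice.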

Previous articles: requests Basics, requests Advanced

Environment: Jupyter

If you don't know how to use Jupyter, see my article: Jupyter Installation and Usage Tutorial

II. Hands-on Examples

1) Fetch the Baidu homepage and print it

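```python
# -*- coding: utf-8 -*-
import requests

url = 'http://www.baidu.com'

r = requests.get(url)

# Use the encoding detected from the response body, otherwise Chinese text may come out garbled
r.encoding = r.apparent_encoding

print(r.text)
```

Output: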
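The `r.encoding = r.apparent_encoding` line matters for this reason. When a server sends a `text/*` Content-Type with no charset, requests falls back to ISO-8859-1. Decoding UTF-8 bytes that way garbles Chinese text. `apparent_encoding` instead guesses the charset from the body itself. The sketch below reproduces the effect with plain bytes and no network. It assumes the page is UTF-8 encoded, and the sample string is only an illustration.

```python
# Simulate what r.text returns under two different encodings (no network needed).
page_text = '百度一下,你就知道'       # hypothetical sample page text
raw = page_text.encode('utf-8')     # the bytes the server actually sends (r.content)

# What r.text yields if r.encoding stays at the ISO-8859-1 fallback
garbled = raw.decode('iso-8859-1')

# What r.text yields once r.encoding = r.apparent_encoding has detected UTF-8
correct = raw.decode('utf-8')

print(garbled)
print(correct)
```

ISO-8859-1 maps every byte to some character, so the wrong decoding never raises an error. It just quietly produces mojibake, which is why the problem is easy to miss.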

2) Fetch a photo of a handsome guy and save it locally

Link to this photo: just click it

Now let's download this image:

Code:

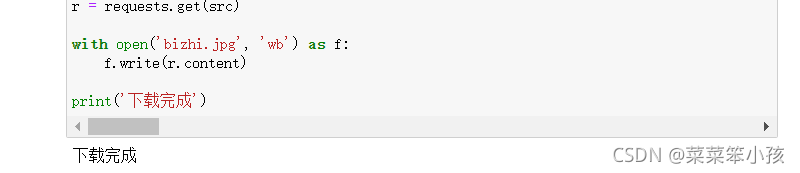

```python
import requests

# Note: this is a Bing image-search *detail page* URL, not the image file itself,
# so requests.get() here returns HTML rather than JPEG bytes. For a real download,
# use the direct image address (the URL-decoded value of the `mediaurl` parameter).
src = 'https://cn.bing.com/images/search?view=detailV2&ccid=yj6ElAFe&id=D93F105743FB238DEB0F368C30CD9881AEB3B8E8&thid=OIP.yj6ElAFeZl8v6dYUhuMgqAHaHa&mediaurl=https%3A%2F%2Ftse1-mm.cn.bing.net%2Fth%2Fid%2FR-C.ca3e8494015e665f2fe9d61486e320a8%3Frik%3D6LizroGYzTCMNg%26riu%3Dhttp%253a%252f%252fp4.music.126.net%252f9Fpqj1WM0H7fjlRQc3-TSw%253d%253d%252f109951165325278290.jpg%26ehk%3Dr9puRRQ%252fYEoDToUqJ%252bOt%252fBhB69sKQ8Zl0cwQXKrOWng%253d%26risl%3D%26pid%3DImgRaw%26r%3D0&riu=http%253a%252f%252fp2.music.126.net%252fPFVNR3tU9DCiIY71NdUDcQ%253d%253d%252f109951165334518246.jpg&ehk=o08VEDcuKybQIPsOGrNpQ2glID%252fIiEV7cw%252bFo%252fzopiM%253d&risl=1&pid=ImgRaw&r=0&exph=1024&expw=1024&q=%E5%BC%A0%E6%9D%B0&simid=608056275652971603&form=IRPRST&ck=61FF572F08E45A84E73B7ECCF670E32A&selectedindex=3&ajaxhist=0&ajaxserp=0&vt=0&sim=11'

r = requests.get(src)
r.raise_for_status()  # stop on an HTTP error instead of saving an error page

# Write the raw bytes (r.content) in binary mode
with open('bizhi.jpg', 'wb') as f:
    f.write(r.content)

print('Download complete')
```

Output:
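The URL above points to Bing's detail page. One way to get the real image address is to read the `mediaurl` query parameter, which Bing stores percent-encoded. Below is a minimal sketch using only the standard library. The helper name `extract_media_url` is hypothetical, and the sketch assumes the detail-page URL keeps this parameter name.

```python
from urllib.parse import parse_qs, urlsplit


def extract_media_url(detail_url):
    """Return the direct image URL embedded in a Bing detail-page URL, or None."""
    query = urlsplit(detail_url).query
    # parse_qs splits on '&' first and then percent-decodes each value once,
    # so an encoded '&' (%26) inside mediaurl stays part of that value
    values = parse_qs(query).get('mediaurl')
    return values[0] if values else None


example = ('https://cn.bing.com/images/search?view=detailV2'
           '&mediaurl=https%3A%2F%2Fexample.com%2Fa.jpg%3Fx%3D1%26y%3D2&q=test')
print(extract_media_url(example))  # https://example.com/a.jpg?x=1&y=2
```

In the download code, you could then pass `extract_media_url(src)` to `requests.get()` in place of `src`.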

4) Fetch a video of a pretty girl and save it locally

For example, suppose I've got this video link: Beauty Transformation (美女变身)

Screenshot:

Code:

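```python
import requests

# Note: this Douyin CDN link looks time-limited (the path appears to embed a signature
# and an expiry timestamp), so it has most likely expired; grab a fresh link before running.
src = 'https://v26-web.douyinvod.com/cbf8c5256aa1445d0db1cc23cb324a96/61951eb0/video/tos/cn/tos-cn-ve-15-alinc2/ffaec236e9b84baa8de831b1335db83c/?a=6383&br=1360&bt=1360&cd=0%7C0%7C0&ch=5&cr=3&cs=0&cv=1&dr=0&ds=3&er=&ft=OyFYlOZZI0rC17XzGTh9D8Fxuhsd5.RcHqY&l=20211117222423010135163078021E6247&lr=all&mime_type=video_mp4&net=0&pl=0&qs=0&rc=amZ5eGk6Zm10OTMzNGkzM0ApNTtmOzw4O2Q1NzVoZmQ4M2ctX2s1cjQwYzBgLS1kLWFzczJeX15eLy1iMS4uYGFgYGE6Yw%3D%3D&vl=&vr='

r = requests.get(src)
r.raise_for_status()

# A video is binary data too, so write r.content in 'wb' mode
with open('movie.mp4', 'wb') as f:
    f.write(r.content)

print('Download complete')
```

Output: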

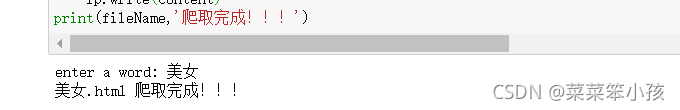
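`r.content` loads the whole video into memory before writing it. For larger files, requests can stream the body with `stream=True` and `iter_content()`. Below is a sketch of that approach. The helper names `write_chunks` and `download_stream` are hypothetical, and the chunk size and timeout are arbitrary choices. The requests import sits inside `download_stream` only so the file-writing helper can be tried without network access.

```python
def write_chunks(chunks, path):
    """Write an iterable of byte chunks to path and return the number of bytes written."""
    total = 0
    with open(path, 'wb') as f:
        for chunk in chunks:
            if chunk:  # skip empty keep-alive chunks
                f.write(chunk)
                total += len(chunk)
    return total


def download_stream(url, path, chunk_size=1024 * 1024):
    """Stream url to path in chunk_size pieces instead of holding it all in memory."""
    import requests  # imported here so write_chunks works even without requests

    with requests.get(url, stream=True, timeout=30) as r:
        r.raise_for_status()
        return write_chunks(r.iter_content(chunk_size=chunk_size), path)
```

Usage with the link above: `download_stream(src, 'movie.mp4')`. Only about one chunk is held in memory at a time.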

5) Scrape Sogou keyword search results

Code:

import requests

#指定url

url='https://www.sogou.com/web'

kw=input('enter a word: ')

header={

'User-Agent':'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/92.0.4515.131 Safari/537.36'

}

param={

'query':kw

}

#发起请求,做好伪装

response=requests.get(url=url,params=param,headers=header)

#获取相应数据

content=response.text

fileName=kw+'.html'

#将数据保存在本地

with open(fileName,'w',encoding='utf-8') as fp:

fp.write(content)

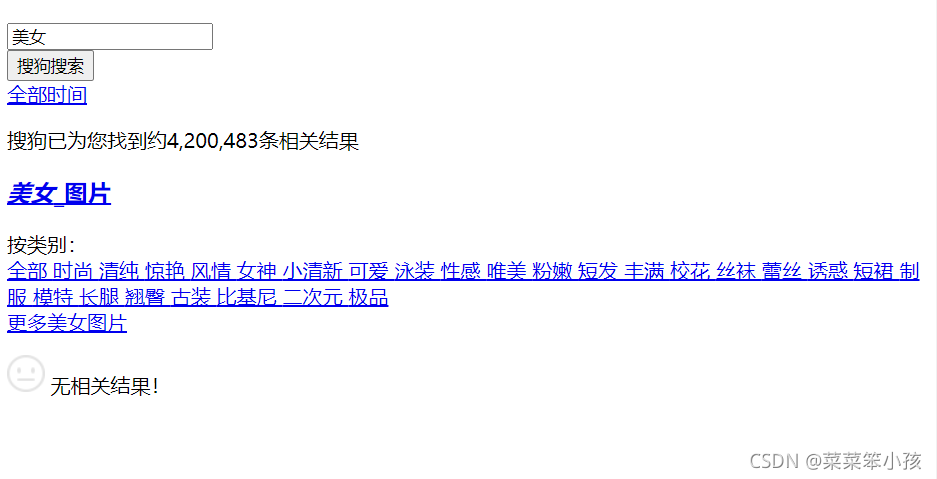

print(fileName,'爬取完成!!!')运行结果: 输入 美女 回车

URL details:

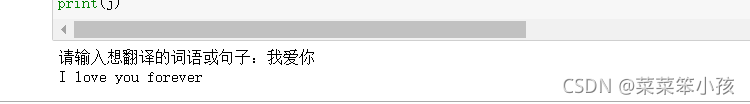
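The final URL is built from the `params` dict: requests percent-encodes each key and value as UTF-8 and appends them after a `?`. For a simple dict like this one, the standard library's `urlencode` should produce the same query string. That makes it a handy way to preview the URL without sending a request. Here is a minimal sketch, with 美女 as the example keyword:

```python
from urllib.parse import urlencode

url = 'https://www.sogou.com/web'
param = {'query': '美女'}

# Percent-encode the keyword as UTF-8 and join it onto the base URL
full_url = url + '?' + urlencode(param)
print(full_url)  # https://www.sogou.com/web?query=%E7%BE%8E%E5%A5%B3
```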

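6) Scrape Baidu Translate

Analyze the page to find the API endpoint:

From this we get the endpoint (https://fanyi.baidu.com/sug) and the request method (POST):

Code: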

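```python
import json

import requests

url = 'https://fanyi.baidu.com/sug'

word = input('Enter the word or sentence to translate: ')

# Form data sent in the POST body
data = {
    'kw': word
}

headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/47.0.2626.106 Safari/537.36'
}

response = requests.post(url=url, data=data, headers=headers)

# The endpoint returns JSON; parse it into a Python dict
dic_obj = response.json()
# print(dic_obj)

filename = word + '.json'

# ensure_ascii=False keeps Chinese characters readable in the saved file
with open(filename, 'w', encoding='utf-8') as fp:
    json.dump(dic_obj, fp=fp, ensure_ascii=False)

# 'data' is a list of suggestions, each with 'k' (the word) and 'v' (its meaning).
# Loop over it rather than indexing [1], which fails when fewer than two come back.
for item in dic_obj.get('data', []):
    print(item.get('k'), item.get('v'))
```

Test result: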

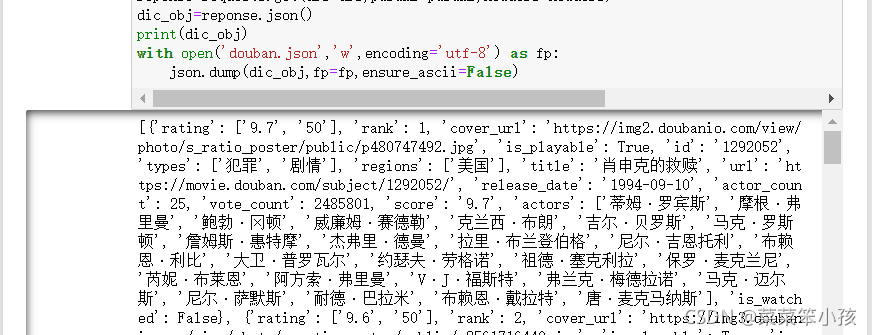
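Both this example and the Douban one pass `ensure_ascii=False` to `json.dump`. By default, Python's json module escapes every non-ASCII character as `\uXXXX`. The result is valid JSON but unreadable when you open the file. Here is a small comparison; the sample dict is made up for illustration.

```python
import json

sample = {'k': 'dog', 'v': '狗'}  # hypothetical sample shaped like one suggestion

escaped = json.dumps(sample)                      # default: ensure_ascii=True
readable = json.dumps(sample, ensure_ascii=False)

print(escaped)   # the Chinese character appears as a \u escape sequence
print(readable)  # {"k": "dog", "v": "狗"}
```

Both strings load back to the same dict, so the only difference is how the saved file looks to a human. When writing with `ensure_ascii=False`, open the file with `encoding='utf-8'` as the examples do.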

7) Scrape the Douban movie chart

Target URL:

https://movie.douban.com/chart

Code:

```python
import json

import requests

url = 'https://movie.douban.com/j/chart/top_list'

params = {
    'type': '11',             # genre ID for the chart
    'interval_id': '100:90',  # rating-percentile range selected on the chart page
    'action': '',
    'start': '0',             # offset of the first movie to return
    'limit': '20',            # number of movies to return per request
}

headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/47.0.2626.106 Safari/537.36'
}

response = requests.get(url=url, params=params, headers=headers)

# Parse the JSON response
dic_obj = response.json()
print(dic_obj)

with open('douban.json', 'w', encoding='utf-8') as fp:
    json.dump(dic_obj, fp=fp, ensure_ascii=False)
```

Output (also saved as JSON):
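The `start` and `limit` parameters act as an offset and a page size, so later pages of the chart can be fetched by raising `start` in steps of `limit`. The sketch below assumes the endpoint returns an empty list once `start` runs past the end, which is not verified here. `page_params` and `collect_pages` are hypothetical helpers. The page-fetching function is passed in, so the loop can be tried without the network.

```python
def page_params(page, page_size=20):
    """Build the query parameters for one page of the chart (pages count from 0)."""
    return {
        'type': '11',
        'interval_id': '100:90',
        'action': '',
        'start': str(page * page_size),
        'limit': str(page_size),
    }


def collect_pages(fetch_page, page_size=20, max_pages=5):
    """Call fetch_page(params) page by page until it returns an empty list."""
    movies = []
    for page in range(max_pages):
        batch = fetch_page(page_params(page, page_size))
        if not batch:
            break
        movies.extend(batch)
    return movies
```

With the variables from the example above, `fetch_page` could be `lambda p: requests.get(url, params=p, headers=headers).json()`. The `max_pages` cap keeps an unexpected response from looping forever.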

8) Scrape images of girls in JK uniforms

```python
import html
import os
import re
import time

import requests

header = {
    'User-Agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_13_3) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/65.0.3325.162 Safari/537.36'
}

url = 'https://cn.bing.com/images/async?q=jk%E5%88%B6%E6%9C%8D%E5%A5%B3%E7%94%9F%E5%A4%B4%E5%83%8F&first=118&count=35&relp=35&cw=1177&ch=705&tsc=ImageBasicHover&datsrc=I&layout=RowBased&mmasync=1&SFX=4'

response = requests.get(url=url, headers=header)
c = response.text

# Capture the src of the thumbnail inside each result's image container;
# re.S lets '.' match newlines so one match can span several lines of HTML
pattern = re.compile(
    r'<div class="imgpt".*?<div class="img_cont hoff">.*?src="(.*?)".*?</div>', re.S
)

items = re.findall(pattern, c)

os.makedirs('photo', exist_ok=True)

for i, a in enumerate(items):
    a = html.unescape(a)  # attribute values may contain entities such as &amp;
    print('Downloading image: ' + a)
    img = requests.get(a, headers=header)
    filename = os.path.join('photo', str(i) + '.jpg')
    with open(filename, 'wb') as f:
        f.write(img.content)
    print('Saved as ' + filename)
    time.sleep(2)  # pause between downloads to go easy on the server
```

Output:

Here are the downloaded images:
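To see what the regular expression captures, you can run it on a small hand-written HTML fragment. The fragment below is hypothetical and only imitates the structure the pattern expects. Bing's real markup may differ and can change at any time. The example also shows why `html.unescape` is useful: inside an HTML attribute, `&` is typically written as `&amp;`.

```python
import html
import re

pattern = re.compile(
    r'<div class="imgpt".*?<div class="img_cont hoff">.*?src="(.*?)".*?</div>', re.S
)

# Hypothetical fragment mimicking two search results
sample = '''
<div class="imgpt"><a href="#">
  <div class="img_cont hoff"><img class="mimg" src="https://example.com/th?id=1&amp;w=200"></div>
</a></div>
<div class="imgpt"><a href="#">
  <div class="img_cont hoff"><img class="mimg" src="https://example.com/th?id=2&amp;w=200"></div>
</a></div>
'''

# findall returns only the captured group; unescape turns &amp; back into &
urls = [html.unescape(u) for u in pattern.findall(sample)]
print(urls)
```

The lazy `.*?` quantifiers matter here. With greedy `.*`, the first match would run on to the last `src="` in the page, and several results would collapse into one.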

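Summary:

Checking in every day and doing the hands-on practice myself has taught me a lot. Many thanks to Chuanchuan for the opportunity!

If you don't understand the request headers or URLs in this article, or don't know how to find them, please go through the basics article first!

If anything in this article is wrong, please point it out. Thanks!!!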