Content-Aware Unsupervised Deep Homography Estimation

内容感知的非监督深度单应矩阵估计

code

Abstract

RANSAC → learn an outlier mask (Only select reliable regions for homography estimation )

learned deep features → calculate loss

formulate a novel triplet loss → achieve the unsupervised training

supervised solution

Something about dataset generation

Require Ground Truth Homography → produce image pairs(input image → GT homography → generate output image)

Weakness: far from real cases (real depth disparities 实际深度差异) 对真实图像的泛化能力较差 depth variations of parallax

To solve this problem: unsupervised solution is promoted.

unsupervised solution

Nguyen et al. : Unsupervised deep homography: A fast and robust homography estimation model.

minimizes the photometric loss on real image pairs

Two main problems:

- the loss calculated with respect to image intensity is less effective than that in the feature space

- the loss is calculated uniformly in

the entire image ignoring the RANSAC-like process

Result: cannot exclude the moving or non-planar objects to contribute the final loss

Limit: work on aerial images that are far away from the camera to minimize the influence of depth variations of parallax

Contributions

A new architecture with content-awareness learning 内容感知

Object: image pairs with a small baseline

Optimize a homography: specially learns

( intermediate results)

1. a deep feature for alignment → loss calculation

2. a content-aware mask → reject outlier regions & loss calculation

DeTone et al.: Deep Image Homography Estimation using photometric loss to caculate loss.

Formulate a novel triplet loss

An image pair dataset: contains 5 categories of scenes and human-labeled GT point correspondences

Algorithm

STN is used to achieve the warping operation.

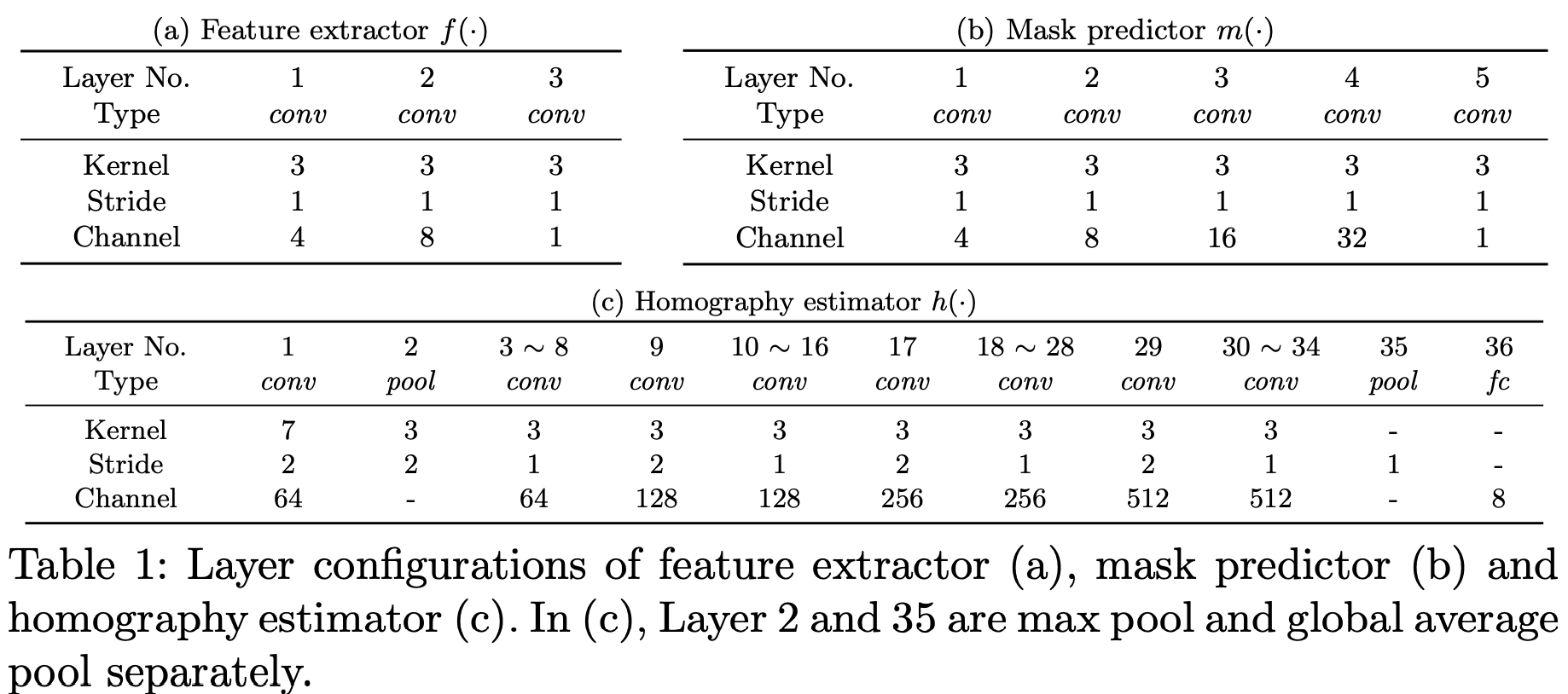

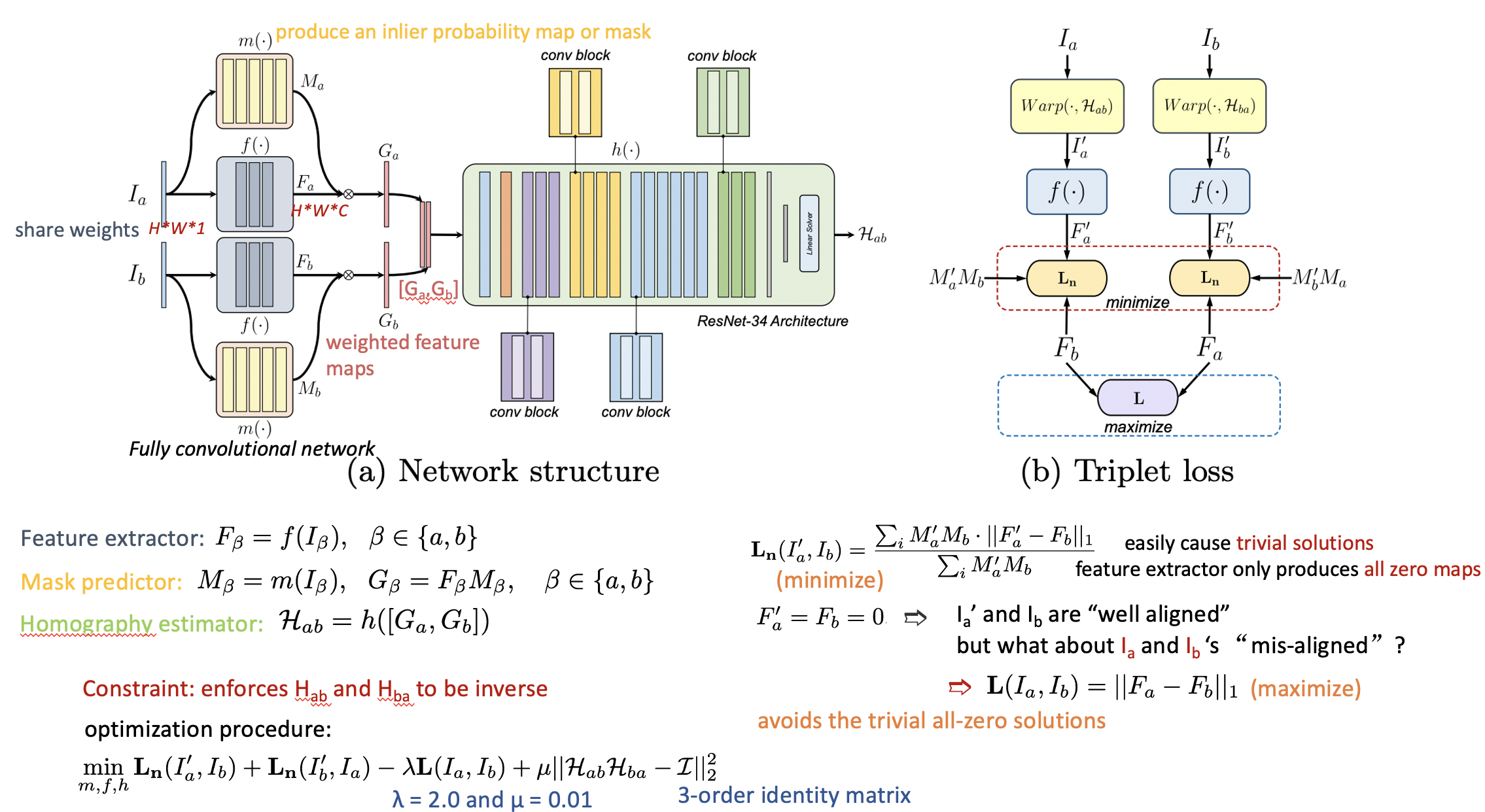

Network Structure & Triplet Loss

Training

Two-stage strategy:

- disabling the attention map role of the mask G β = F β , β ∈ a , b G_β=F_β, β∈a, b Gβ=Fβ,β∈a,b

- 60k iterations later, finetune the network by involving

the attention map role of the mask as M β = m ( I β ) , G β = F β M β , β ∈ a , b M_β=m(I_β), G_β=F_βM_β, β∈a, b Mβ=m(Iβ),Gβ=FβMβ,β∈a,b

Advantages: Reduces the error by 4.40% in average comparing with train totally from scratch.

Dataset

80k image pairs including regular (RE), low-texture (LT), low-light(LL), small-foregrounds (SF), and large-foregrounds (LF) scenes.

Test data: 4.2k image pairs are randomly chosen from all categories

Implementation Details

trained with 120k iterations by an Adam optimizer( l r = 1.0 × 1 0 − 4 , β 1 = 0.9 , β 2 = 0.999 , ε = 1.0 × 1 0 − 8 l_r=1.0×10^{−4}, β_1=0.9, β_2=0.999, ε=1.0×10^{−8} lr=1.0×10−4,β1=0.9,β2=0.999,ε=1.0×10−8 )

Batch size: 64

For every 12k iterations, the learning rate l r l_r lr is reduced

by 20%

To augment the training data and avoid black boundaries appearing in the warped image, we randomly crop patches of size 315 × 560 from the original image to form I a I_a Ia and I b I_b Ib.