整个程序使用OpenCV提供的算法进行求解。

//代码执行所需要的头文件

#include<iostream>

#include<opencv2/core/core.hpp>

#include<opencv2/features2d/features2d.hpp>

#include<opencv2/highgui/highgui.hpp>

#include<opencv2/calib3d/calib3d.hpp>

using namespace std;

using namespace cv;

//函数声明

//特征点匹配的函数声明

void find_feature_matches(const Mat &img_1,const Mat &img_2,std::vector<KeyPoint>&keypoints_1,

std::vector<KeyPoint> &keypoints_2,std::vector<DMatch> &matches);

//估计两张图像间的运动,即求R,t的函数声明

void pose_estimation_2d2d(std::vector<KeyPoint> keypoints_1, std::vector<KeyPoint> keypoints_2,

std::vector<DMatch> matches,Mat &R, Mat &t);

//像素坐标转相机归一化坐标

Point2d pixel2cam(const Point2d &p,const Mat &K);

//主函数

int main(int argc,char **argv)

{

//判断输出的是不是三个参数,如果不是则执行if下面的语句

if(argc!=3)

{

cout<<"usage:pose_estimation_2d2d img1 img2"<<endl;

return 1;

}

//--读取图像

//其中 CV_LOAD_IMAGE_COLOR 是以RGB格式读取图像;其他介绍在代码之后,知识点1

Mat img_1=imread(argv[1], CV_LOAD_IMAGE_COLOR);

Mat img_2=imread(argv[2], CV_LOAD_IMAGE_COLOR);

assert(img_1.data&&img_2.data &&"Can not load images!");

vector<KeyPoint> keypoints_1,keypoints_2;

vector<DMatch> matches;

find_feature_matches(img_1,img_2,keypoints_1,keypoints_2,matches); //调用函数

cout<<"一共找到了"<<matches.size()<<" 组匹配点"<<endl;

//--估计两张图像间的运动

Mat R,t; //定义Mat类的对象

pose_estimation_2d2d(keypoints_1,keypoints_2,matches,R,t);

//--验证E=t^R*scale

//将平移t转换为3*3的反对称矩阵即为t^,详细见知识点2

Mat t_x=

(Mat_<double>(3,3)<<0,-t.at<double>(2,0),t.at<double>(1,0),

t.at<double>(2,0),0,-t.at<double>(0,0),

-t.at<double>(1,0),t.at<double>(0,0),0);

cout<<"t^R="<<endl<<t_x*R<<endl;

//--验证对极约束

//K为相机的内参

Mat K=(Mat_<double>(3,3)<<520.9,0,325.1,0,521.0,249.7,0,0,1);

for(DMatch m:matches)

{

Point2d pt1=pixel2cam(keypoints_1[m.queryIdx].pt,K); //queryIdx相当于keypoints_1的索引

Mat y1=(Mat_<double>(3,1)<<pt1.x,pt1.y,1);

Point2d pt2=pixel2cam(keypoints_2[m.trainIdx].pt,K); //trainIdx相当于keypoints_1的索引

Mat y2=(Mat_<double>(3,1)<<pt2.x,pt2.y,1);

Mat d=y2.t()*t_x*R*y1;

cout<<"epipolar constraint="<<d<<endl;

}

return 0;

}

//特征点匹配函数

void find_feature_matches(const Mat &img_1,const Mat &img_2,std::vector<KeyPoint>&keypoints_1,

std::vector<KeyPoint> &keypoints_2,std::vector<DMatch> &matches)

{

//初始化

Mat descriptors_1,descriptors_2;

Ptr<FeatureDetector> detector=ORB::create(); //特征点检测

Ptr<DescriptorExtractor> descriptor=ORB::create(); //描述子提取器

Ptr<DescriptorMatcher> matcher=DescriptorMatcher::create("BruteForce-Hamming");

//--第一步:检测Oriented FAST角点位置

detector->detect(img_1,keypoints_1);

detector->detect(img_2,keypoints_2);

//-- 第二步:根据角点位置计算BRIEF描述子

descriptor->compute(img_1,keypoints_1,descriptors_1);

descriptor->compute(img_2,keypoints_2,descriptors_2);

//--第三步:对两幅图像中的BRIEF描述子进行匹配,使用Hamming距离

vector<DMatch>match;

matcher->match(descriptors_1,descriptors_2,match);

//第四步:匹配点对筛选

double min_dist=10000,max_dist=0;

//找出所有匹配之间的最小距离和最大距离,即最相似的和最不相似的两组点之间的距离

for(int i=0;i<descriptors_1.rows;i++)

{

double dist=match[i].distance;

if(dist<min_dist)

min_dist=dist;

if(dist>max_dist)

max_dist=dist;

}

printf("--Max dist:%f\n",max_dist);

printf("--Min dist:%f\n",min_dist);

//当描述子之间的距离大于两倍的最小距离时,即认为匹配有误,但有时候最小距离会非常小,设置一个经验值30作为下限

for(int i=0;i<descriptors_1.rows;i++)

{

if(match[i].distance<=max(2*min_dist,30.0))

{

matches.push_back(match[i]);

}

}

}

//像素坐标转相机归一化坐标:见知识点3

Point2d pixel2cam(const Point2d &p,const Mat &K)

{

return Point2d

(

(p.x - K.at<double>(0,2))/K.at<double>(0,0),

(p.y - K.at<double>(1,2))/K.at<double>(1,1)

);

}

//估计两张图像间的运动

void pose_estimation_2d2d(std::vector<KeyPoint> keypoints_1, std::vector<KeyPoint> keypoints_2,

std::vector<DMatch> matches,Mat &R, Mat &t)

{

//相机内参,TUM Freiburg2

Mat K=(Mat_<double>(3,3)<<520.9,0,325.1,0,521.0,249.7,0,0,1);

//--把匹配点转换为vector<Point2f>的形式

vector<Point2f>points1;

vector<Point2f>points2;

for(int i=0;i<(int)matches.size();i++)

{

points1.push_back(keypoints_1[matches[i].queryIdx].pt);

points2.push_back(keypoints_2[matches[i].trainIdx].pt);

}

//--计算基础矩阵

Mat fundamental_matrix;

fundamental_matrix=findFundamentalMat(points1,points2,CV_FM_8POINT);//points1,points2是匹配点对的像素坐标,并且能够一一对应

cout<<"fundamental_matrix is"<<endl<<fundamental_matrix<<endl;

//计算本质矩阵

Point2d principal_point(325.1,249.7); //相机光心,TUM dataset标定值

double focal_length=521; //相机焦距,TUM dataset标定值

Mat essential_matrix;

essential_matrix=findEssentialMat(points1,points2,focal_length,principal_point);

cout<<"essential_matrix is"<<endl<<essential_matrix<<endl;

//--计算单应矩阵

Mat homography_matrix;

homography_matrix=findHomography(points1,points2,RANSAC,3);

cout<<"homography_matrix is"<<endl<<homography_matrix<<endl;

//从本质矩阵中恢复旋转和平移信息

recoverPose(essential_matrix,points1,points2,R,t,focal_length,principal_point);

cout<<"R is"<<endl<<R<<endl;

cout<<"t is"<<endl<<t<<endl;

}

代码中涉及到的知识点

1.知识点1

LOAD_IMAGE_UNCHANGED(<0): 以原始图像的读取(包括alpha通道)

LOAD_IMAGE_GRAYSCALE(=0):以灰度图像读取

LOAD_IMAGE_COLOR(>0):以RGB格式读取

2.知识点2

由代码运行的结果可以知道相机运行过程中的平移t是

t

[-0.9350802885437915;

-0.03514646275858852;

0.3526890700495534]

下面这个代码的作用即是将t转换为反对称矩阵t^

t=

[0 , -0.3526890700495534 , -0.03514646275858852;

0.3526890700495534 , 0 , 0.9350802885437915;

0.03514646275858852 , -0.9350802885437915,0]

其中Max t_x=(Mat_(3,3)<<…)定义一个3*3的矩阵并进行初始化

Mat t_x=

(Mat_<double>(3,3)<<0,-t.at<double>(2,0),t.at<double>(1,0),

t.at<double>(2,0),0,-t.at<double>(0,0),

-t.at<double>(1,0),t.at<double>(0,0),0);

cout<<"t^R="<<endl<<t_x*R<<endl;

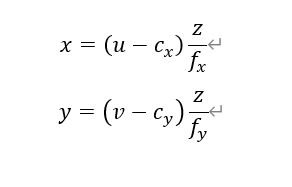

3.知识点3

//像素坐标转相机归一化坐标

Point2d pixel2cam(const Point2d &p,const Mat &K)

{

return Point2d

(

(p.x - K.at<double>(0,2))/K.at<double>(0,0),

(p.y - K.at<double>(1,2))/K.at<double>(1,1)

);

}

其中,K为相机的内参

公式: