More Linux tutorials are available on my blog:

https://blog.yangwn.top/index.php/archives.html

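I took a lot of wrong turns while doing a binary deployment myself, so this post walks through the process in detail. If it helps you, a like is appreciated. Thanks!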

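Kubernetes installation methods

Single-node miniature k8s: for feature previews and online learning

Preferred for production, difficult to install, recommended for beginners (the certificate validity period can be customized)

The k8s deployment tool: relatively simple, suitable for production, recommended for experienced users (the default certificate validity period is 1 year)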

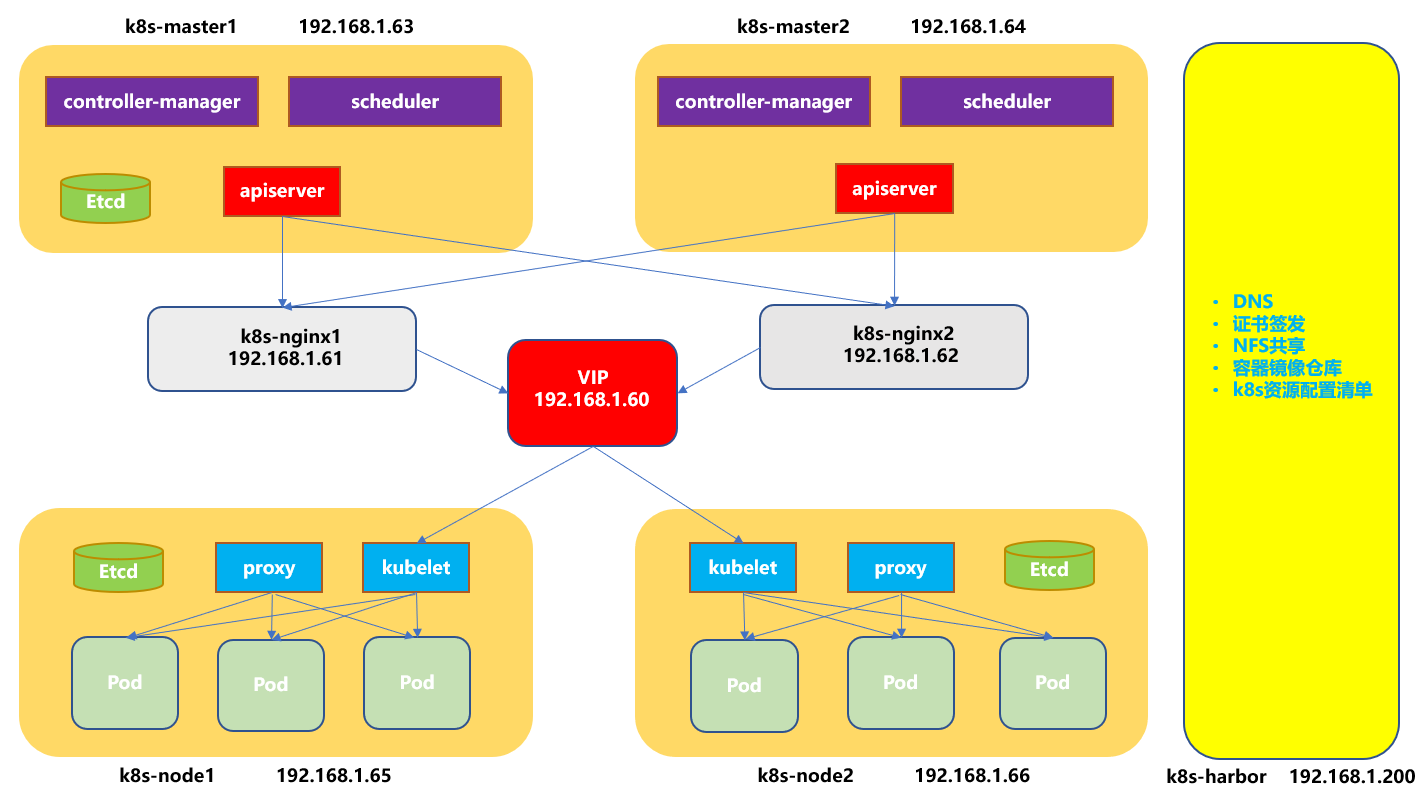

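- This post uses a binary installation to deploy a multi-master, highly available architecture

Applies to v1.15.0 and later (the version deployed here is v1.16.0)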

Kubernetes lab plan

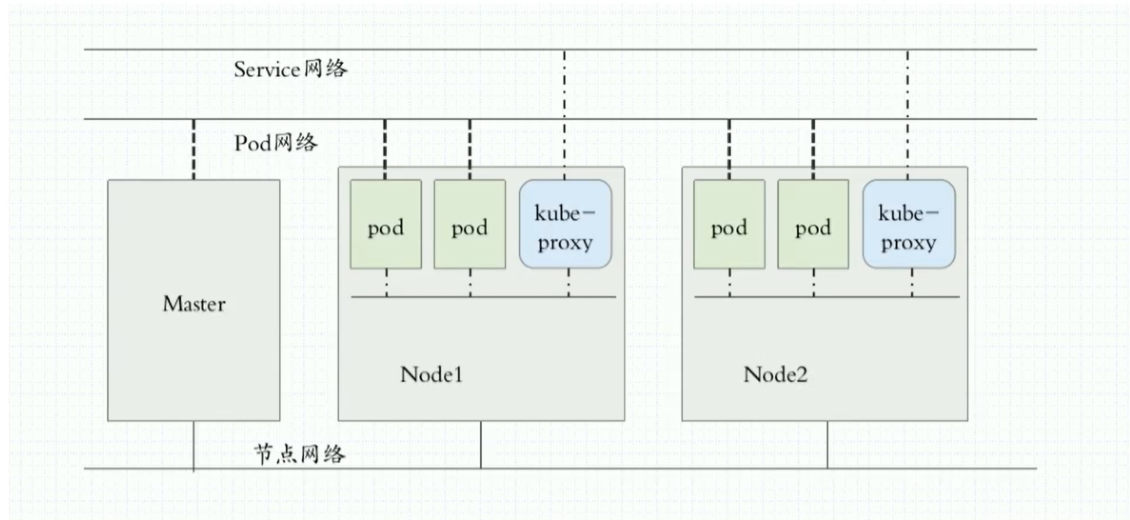

- Network plan diagram

- Lab environment diagram

- Lab plan in text form

#Service network

10.0.0.0/24

#Pod network

172.17.0.0/24

#Node network (host network)

192.168.1.0/24

#Virtual machine requirements

2 CPU cores, 2 GB RAM, 50 GB disk

#IP address and hostname plan

192.168.1.60 VIP #keepalived virtual IP

192.168.1.61 k8s-nginx1 #nginx, keepalived

192.168.1.62 k8s-nginx2 #nginx, keepalived

192.168.1.63 k8s-master1 #kube-apiserver, kube-controller-manager, kube-scheduler, kubectl, etcd

192.168.1.64 k8s-master2 #kube-apiserver, kube-controller-manager, kube-scheduler, kubectl

192.168.1.65 k8s-node1 #docker, kubelet, kube-proxy, etcd

192.168.1.66 k8s-node2 #docker, kubelet, kube-proxy, etcd

192.168.1.200 k8s-harbor #harbor, cfssl, NFS, DNS

#VMware virtual machine settings

Use NAT network mode

Subnet 192.168.1.0/24

Gateway 192.168.1.254

Preparation before deploying Kubernetes

- Basic system tuning

#Operating system version

cat /etc/centos-release

CentOS Linux release 7.9.2009 (Core)

#Disable the firewall and SELinux

systemctl disable firewalld.service

systemctl stop firewalld.service

sed -i 's/^SELINUX=.*/SELINUX=disabled/g' /etc/selinux/config

#Disabling SELinux only takes effect after a reboot

reboot

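#Prepare the yum repositories (both EPEL and Base are required)

wget -O /tmp/system.sh https://index.swireb.cn/shell/system.sh && sh /tmp/system.sh #configure the yum repositories with a script (it uses the Aliyun mirrors)

#Install common tools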

yum install -y wget net-tools telnet tree nmap sysstat lrzsz dos2unix bind-utils ntpdate

#Schedule periodic time synchronization

echo '# NTP' >>/var/spool/cron/root

echo '*/01 * * * * /usr/sbin/ntpdate ntp1.aliyun.com >/dev/null 2>&1' >>/var/spool/cron/root

systemctl enable --now crond

#Disable swap

swapoff -a

sed -ri 's/.*swap.*/#&/' /etc/fstab

- Kernel tuning

#This tuning mainly targets the node hosts

#Load the br_netfilter module

lsmod |grep br_netfilter

cat > /etc/rc.sysinit << 'EOF'

#!/bin/bash

for file in /etc/sysconfig/modules/*.modules ; do

[ -x $file ] && $file

done

EOF

cat > /etc/sysconfig/modules/br_netfilter.modules << EOF

modprobe br_netfilter

EOF

chmod 755 /etc/sysconfig/modules/br_netfilter.modules

#Load the module now so that the net.bridge.* keys exist when sysctl runs

modprobe br_netfilter

#Kernel parameters

cat << EOF | tee /etc/sysctl.d/k8s.conf

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.tcp_tw_recycle = 0

net.ipv6.conf.all.disable_ipv6 = 1

EOF

sysctl --system
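
After `sysctl --system` it can be worth confirming that the running kernel really carries the values from the file, since a `net.bridge.*` key is silently skipped when br_netfilter is not loaded. The following is a minimal sketch, not part of the original procedure; `sysctl_pairs` and `verify_sysctl` are hypothetical helper names.

```shell
# Hypothetical helper: print "key value" pairs from a sysctl-style file,
# skipping blank lines and comment lines.
sysctl_pairs() {
    awk -F'=' '
        /^[ \t]*$/    { next }   # blank line
        /^[ \t]*[#;]/ { next }   # comment line
        {
            key = $1; val = $2
            gsub(/[ \t]/, "", key)
            gsub(/[ \t]/, "", val)
            print key, val
        }' "$1"
}

# Hypothetical helper: compare every pair in the file with the live kernel
# value reported by "sysctl -n". Returns non-zero if any value differs.
verify_sysctl() {
    _rc=0
    _pairs=$(sysctl_pairs "$1")
    while read -r _key _want; do
        [ -n "$_key" ] || continue
        _got=$(sysctl -n "$_key" 2>/dev/null)
        if [ "$_got" != "$_want" ]; then
            echo "MISMATCH $_key: want=$_want got=${_got:-unset}"
            _rc=1
        fi
    done <<EOF
$_pairs
EOF
    return "$_rc"
}

# Usage on a node (not executed here):
#   verify_sysctl /etc/sysctl.d/k8s.conf && echo "all kernel parameters applied"
```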

Deploy the DNS service on k8s-harbor

#Install the DNS software

yum install -y bind

#Edit the main configuration file

vim /etc/named.conf

options {
    listen-on port 53 { 192.168.1.200; }; #change the listen address
    // listen-on-v6 port 53 { ::1; }; #do not listen on IPv6
    directory "/var/named";
    dump-file "/var/named/data/cache_dump.db";
    statistics-file "/var/named/data/named_stats.txt";
    memstatistics-file "/var/named/data/named_mem_stats.txt";
    recursing-file "/var/named/data/named.recursing";
    secroots-file "/var/named/data/named.secroots";
    allow-query { any; }; #allow every host to use this DNS server
    forwarders { 1.1.1.1; 192.168.1.254; }; #upstream DNS, pointing at the gateway
    recursion yes; #enable recursive queries
    dnssec-enable no;
    dnssec-validation no;
};

#Add the host.com zone

vim /etc/named.rfc1912.zones

zone "host.com" IN {
    type master;
    file "host.com.zone";
    allow-update { 192.168.1.200; };
};

#Configure the records for the host.com zone

vim /var/named/host.com.zone

$ORIGIN host.com.

$TTL 600 ; 10 minutes

@ IN SOA dns.host.com. dnsadmin.host.com. (

2021031001 ; serial (first revision on 2021-03-10)

10800 ; refresh (3 hours)

900 ; retry (15 minutes)

604800 ; expire (1 week)

86400 ; minimum (1 day)

)

NS dns.host.com.

$TTL 60 ; 1 minute

dns A 192.168.1.200

k8s-nginx1 A 192.168.1.61

k8s-nginx2 A 192.168.1.62

k8s-master1 A 192.168.1.63

k8s-master2 A 192.168.1.64

k8s-node1 A 192.168.1.65

k8s-node2 A 192.168.1.66

k8s-harbor A 192.168.1.200

harbor A 192.168.1.200

#Check the syntax of the main configuration file

named-checkconf

#Start the DNS service

systemctl enable --now named

#Test DNS resolution

dig -t A harbor.host.com @192.168.1.200 +short
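
Testing one name by hand is enough to prove the server answers, but every record in the zone can be checked the same way. Below is a small sketch under the assumption that the zone file uses the simple `name A address` layout shown above; `zone_a_records` and `check_zone` are hypothetical helper names.

```shell
# Hypothetical helper: print "name address" for every A record in a zone file
# that uses the plain "name A address" layout.
zone_a_records() {
    awk '$2 == "A" { print $1, $3 }' "$1"
}

# Hypothetical helper: resolve each record through the given DNS server and
# compare the answer with the address in the zone file.
# Arguments: zone file, domain, DNS server address.
check_zone() {
    zone_a_records "$1" | while read -r name ip; do
        got=$(dig -t A "${name}.$2" "@$3" +short)
        if [ "$got" = "$ip" ]; then
            echo "OK   ${name}.$2 -> $ip"
        else
            echo "FAIL ${name}.$2 expected $ip, got ${got:-nothing}"
        fi
    done
}

# Usage on k8s-harbor (not executed here):
#   check_zone /var/named/host.com.zone host.com 192.168.1.200
```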

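#Windows settings

Edit the VMware Network Adapter VMnet8 adapter

IP 192.168.1.253

DNS 192.168.1.200

Lower the interface metric (uncheck "Automatic metric") so that traffic prefers the VMware Network Adapter VMnet8 adapter

#Change the DNS address on every host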

sed -i 's|^DNS1=.*|DNS1=192.168.1.200|g' /etc/sysconfig/network-scripts/ifcfg-ens33

sed -i '$aDNS2=114.114.114.114' /etc/sysconfig/network-scripts/ifcfg-ens33

sed -i '$aDOMAIN=host.com' /etc/sysconfig/network-scripts/ifcfg-ens33

systemctl restart network

Install Docker on k8s-node1, k8s-node2 and k8s-harbor

#Prepare the Docker repository file

wget -O /etc/yum.repos.d/docker-ce.repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

yum clean all && yum makecache

#Install docker-ce

yum install -y yum-utils device-mapper-persistent-data lvm2 docker-ce-18.06.3.ce-3.el7

#Edit the Docker daemon configuration file

mkdir -p /data/docker /etc/docker

vi /etc/docker/daemon.json

{

"graph": "/data/docker",

"bip": "172.17.0.1/24",

"exec-opts": ["native.cgroupdriver=systemd"],

"insecure-registries": ["registry.access.redhat.com","quay.io","harbor.host.com"],

"registry-mirrors": ["http://hub-mirror.c.163.com", "https://docker.mirrors.ustc.edu.cn"]

}

systemctl daemon-reload && systemctl enable --now docker

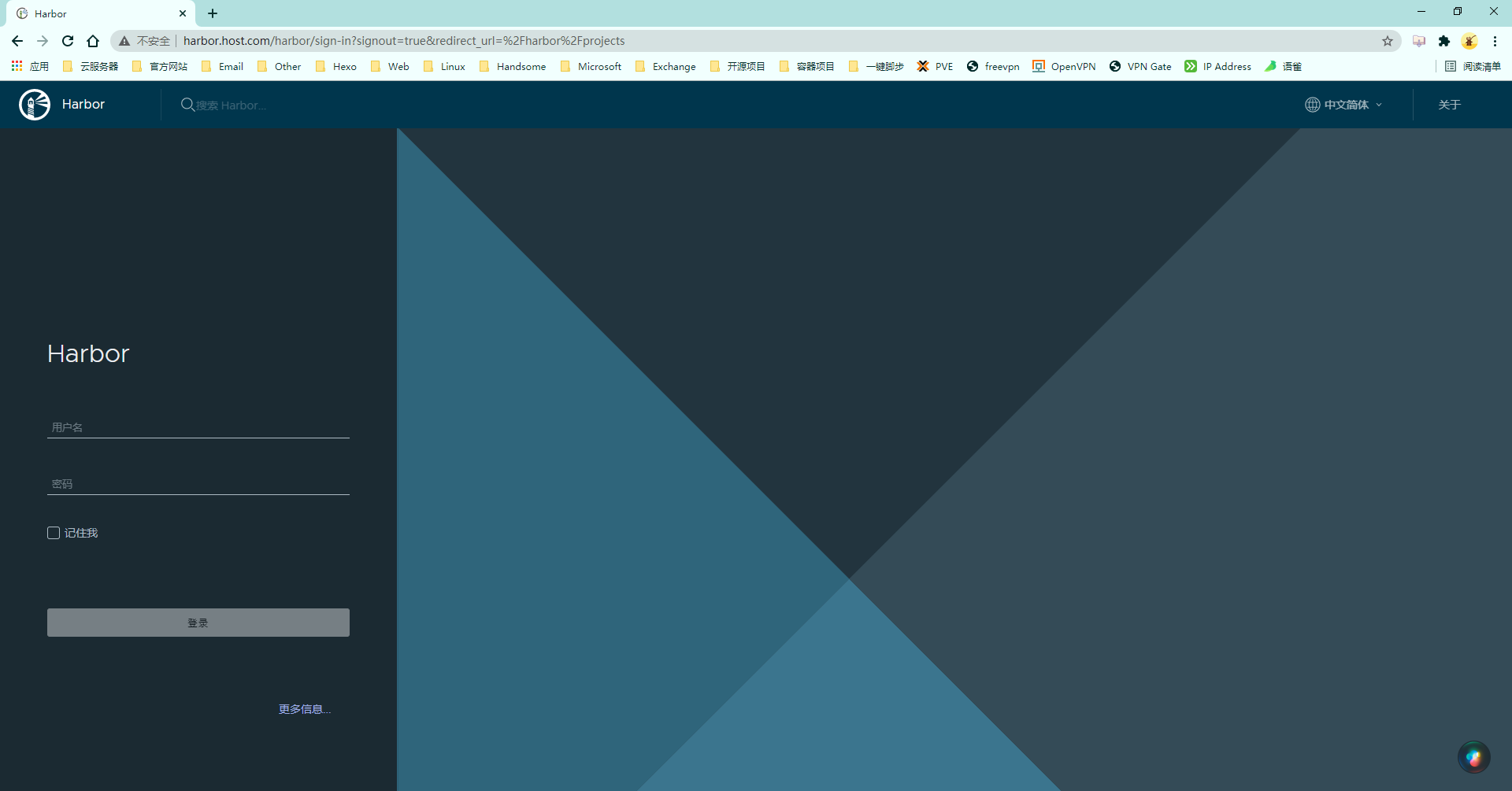

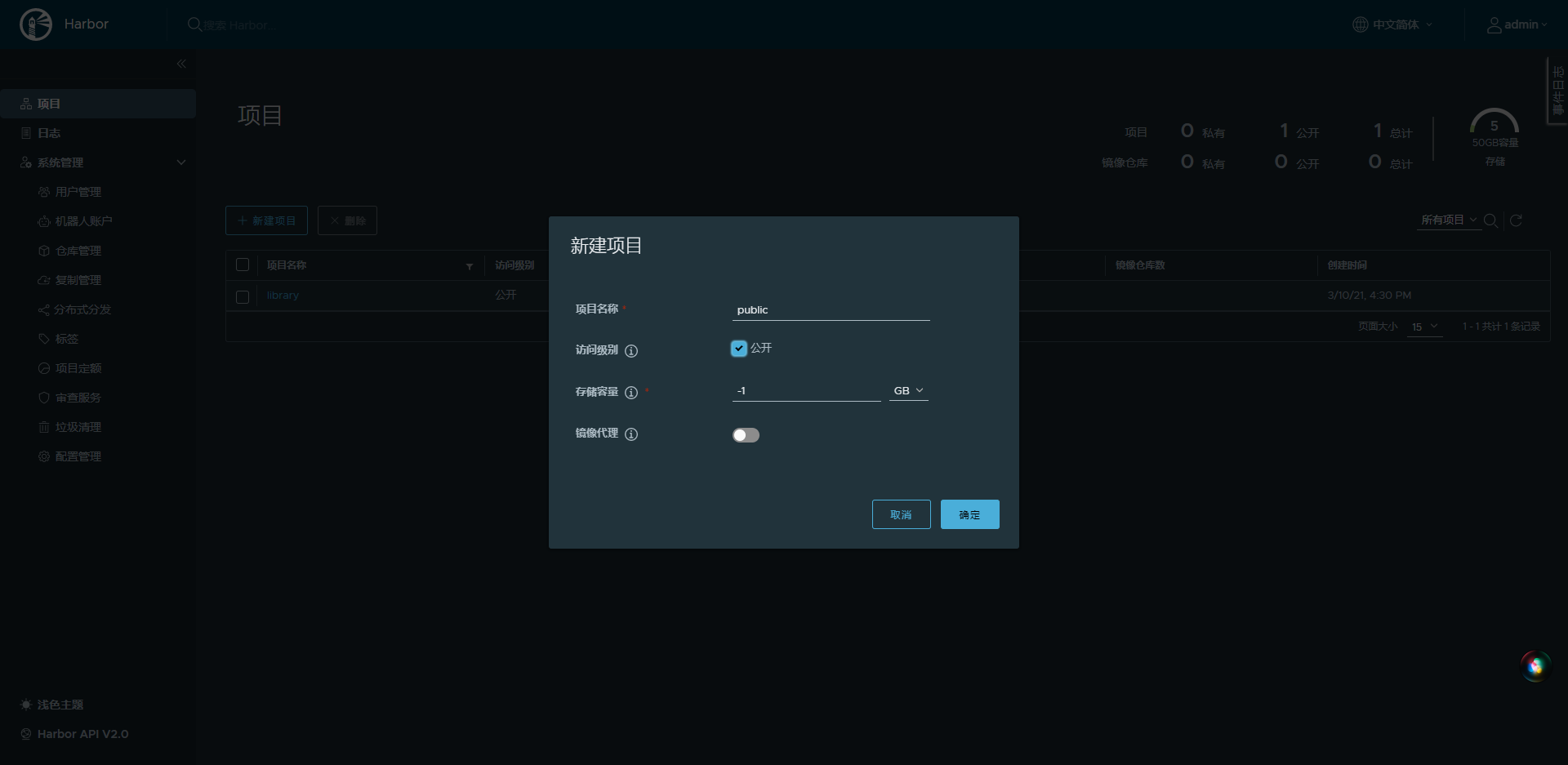

Deploy the private image registry on k8s-harbor

#Install docker-compose

yum install -y docker-compose

#Download the Harbor offline installer

wget https://github.com/goharbor/harbor/releases/download/v2.2.0/harbor-offline-installer-v2.2.0.tgz

tar xf harbor-offline-installer-v2.2.0.tgz -C /opt && rm harbor-offline-installer-v2.2.0.tgz && cd /opt/harbor

#Edit the installer configuration file

cp harbor.yml.tmpl harbor.yml #create the configuration file from the template

vim harbor.yml

hostname: harbor.host.com
http:
  port: 180
# https: #comment out the https section
#   port: 443
#   certificate: /your/certificate/path
#   private_key: /your/private/key/path
harbor_admin_password: 123456
data_volume: /data/harbor

#Install Harbor

./install.sh

#After a successful install the following containers are running with status Up

docker container ls -a

CONTAINER ID IMAGE

b1c99860720f goharbor/nginx-photon:v2.2.0

46833643b392 goharbor/harbor-jobservice:v2.2.0

1c2a5fafe485 goharbor/harbor-core:v2.2.0

5517c2499f39 goharbor/harbor-registryctl:v2.2.0

50983e4f3a71 goharbor/registry-photon:v2.2.0

616ecc111e65 goharbor/harbor-db:v2.2.0

3adfcc054c5f goharbor/redis-photon:v2.2.0

f847a26ca688 goharbor/harbor-log:v2.2.0

#Start Harbor at boot

chmod +x /etc/rc.d/rc.local

vim /etc/rc.d/rc.local

# start harbor (append the lines below)

cd /opt/harbor

docker-compose down -v

docker-compose up -d

#Install nginx and configure it as a reverse proxy for Harbor

yum install -y nginx

vim /etc/nginx/conf.d/harbor.conf

server {
    listen 80;
    server_name harbor.host.com;
    client_max_body_size 1000m; #allow uploads of up to 1000 MB
    location / {
        proxy_pass http://127.0.0.1:180;
    }
}

systemctl enable --now nginx

Test the Harbor image registry

- Open it in a browser

harbor.host.com

- Push and pull a test image

docker pull nginx:1.19

docker tag nginx:1.19 harbor.host.com/public/nginx:1.19

docker login -u admin harbor.host.com

docker push harbor.host.com/public/nginx:1.19

docker logout

docker pull harbor.host.com/public/nginx:1.19

Prepare the self-signed certificates on k8s-harbor

#Download and extract the cfssl tools

wget https://index.swireb.cn/software/k8s/cfssl.tar.gz

tar xf cfssl.tar.gz -C /opt && rm cfssl.tar.gz

#Directory layout

tree /opt/cfssl

├── bin
│   ├── cfssl
│   ├── cfssl-certinfo
│   └── cfssljson
├── cfssl-install.sh #installer script for the cfssl tools
├── etcd
│   ├── ca-config.json
│   ├── ca-csr.json
│   ├── cfssl-etcd.sh #generates the self-signed etcd certificates
│   └── server-csr.json #the "hosts" field must be customized
└── k8s
    ├── admin-csr.json
    ├── ca-config.json
    ├── ca-csr.json
    ├── cfssl-admin.sh #generates the self-signed admin certificate (run cfssl-k8s.sh first)
    ├── cfssl-k8s.sh #generates the self-signed apiserver and kube-proxy certificates
    ├── kube-proxy-csr.json
    └── server-csr.json #the "hosts" field must be customized (add every address the apiserver may be reached on)

#Install the cfssl tools

cd /opt/cfssl && ./cfssl-install.sh

cfssl version

- Issue the etcd certificates

#Add every IP address that may run etcd to the hosts list

vim /opt/cfssl/etcd/server-csr.json

{

"CN": "etcd",

"hosts": [

"192.168.1.63",

"192.168.1.65",

"192.168.1.66"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing"

}

]

}

#Issue the self-signed certificates for etcd

cd /opt/cfssl/etcd && ./cfssl-etcd.sh

ll *pem

-rw------- 1 root root 1679 Mar 12 16:15 ca-key.pem

-rw-r--r-- 1 root root 1265 Mar 12 16:15 ca.pem

-rw------- 1 root root 1679 Mar 12 16:15 server-key.pem

-rw-r--r-- 1 root root 1346 Mar 12 16:15 server.pem

- Issue the apiserver, kube-proxy and admin certificates

#Add every IP address that may be used by apiserver or kube-proxy to the hosts list

vim /opt/cfssl/k8s/server-csr.json

{

"CN": "kubernetes",

"hosts": [

"10.0.0.1",

"127.0.0.1",

"kubernetes",

"kubernetes.default",

"kubernetes.default.svc",

"kubernetes.default.svc.cluster",

"kubernetes.default.svc.cluster.local",

"192.168.1.60",

"192.168.1.61",

"192.168.1.62",

"192.168.1.63",

"192.168.1.64",

"192.168.1.65",

"192.168.1.66"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "k8s",

"OU": "System"

}

]

}

#Issue the self-signed certificates for apiserver and kube-proxy

cd /opt/cfssl/k8s && ./cfssl-k8s.sh

ll *pem

-rw------- 1 root root 1675 Mar 12 22:57 ca-key.pem

-rw-r--r-- 1 root root 1359 Mar 12 22:57 ca.pem

-rw------- 1 root root 1679 Mar 12 22:57 kube-proxy-key.pem

-rw-r--r-- 1 root root 1403 Mar 12 22:57 kube-proxy.pem

-rw------- 1 root root 1679 Mar 12 22:57 server-key.pem

-rw-r--r-- 1 root root 1684 Mar 12 22:57 server.pem

#Issue the admin certificate

cd /opt/cfssl/k8s && ./cfssl-admin.sh

ll admin*pem

-rw------- 1 root root 1675 Mar 19 02:21 admin-key.pem

-rw-r--r-- 1 root root 1399 Mar 19 02:21 admin.pem

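Deploy the etcd cluster on k8s-master1, k8s-node1 and k8s-node2

- GitHub project page

- Prepare the directory layout and certificates

#Create the directory layout

mkdir -p /opt/etcd/{bin,cfg,ssl}

#Send the certificates from k8s-harbor to the etcd nodes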

cd /opt/cfssl/etcd/ && for i in master1 node1 node2;do scp *pem k8s-${i}:/opt/etcd/ssl ;done

#The variables can be written to ~/.bashrc to make them permanent

#Variables on k8s-master1

NAME_1=etcd-1

NAME_2=etcd-2

NAME_3=etcd-3

HOST_1=192.168.1.63

HOST_2=192.168.1.65

HOST_3=192.168.1.66

CLUSTER=${NAME_1}=https://${HOST_1}:2380,${NAME_2}=https://${HOST_2}:2380,${NAME_3}=https://${HOST_3}:2380

TOKEN=etcd-cluster

CLUSTER_STATE=new

THIS_NAME=${NAME_1}

THIS_IP=${HOST_1}

#Variables on k8s-node1

NAME_1=etcd-1

NAME_2=etcd-2

NAME_3=etcd-3

HOST_1=192.168.1.63

HOST_2=192.168.1.65

HOST_3=192.168.1.66

CLUSTER=${NAME_1}=https://${HOST_1}:2380,${NAME_2}=https://${HOST_2}:2380,${NAME_3}=https://${HOST_3}:2380

TOKEN=etcd-cluster

CLUSTER_STATE=new

THIS_NAME=${NAME_2}

THIS_IP=${HOST_2}

#Variables on k8s-node2

NAME_1=etcd-1

NAME_2=etcd-2

NAME_3=etcd-3

HOST_1=192.168.1.63

HOST_2=192.168.1.65

HOST_3=192.168.1.66

CLUSTER=${NAME_1}=https://${HOST_1}:2380,${NAME_2}=https://${HOST_2}:2380,${NAME_3}=https://${HOST_3}:2380

TOKEN=etcd-cluster

CLUSTER_STATE=new

THIS_NAME=${NAME_3}

THIS_IP=${HOST_3}
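
The three variable blocks above differ only in THIS_NAME and THIS_IP, so they could also be derived from one membership table. This is a sketch of that idea rather than the author's method; `ETCD_MEMBERS`, `etcd_name_for_ip` and `etcd_initial_cluster` are hypothetical names, and the addresses are the ones from the plan.

```shell
# Membership table (name=ip), taken from the plan above.
ETCD_MEMBERS="etcd-1=192.168.1.63 etcd-2=192.168.1.65 etcd-3=192.168.1.66"

# Print the member name that belongs to the given IP.
# Returns non-zero if the IP is not an etcd member.
etcd_name_for_ip() {
    for m in $ETCD_MEMBERS; do
        if [ "${m#*=}" = "$1" ]; then
            echo "${m%%=*}"
            return 0
        fi
    done
    return 1
}

# Build the comma-separated value used for ETCD_INITIAL_CLUSTER.
etcd_initial_cluster() {
    out=''
    for m in $ETCD_MEMBERS; do
        out="${out:+$out,}${m%%=*}=https://${m#*=}:2380"
    done
    echo "$out"
}

# Usage on each etcd node (not executed here; assumes the first address
# reported by "hostname -I" is the node IP):
#   THIS_IP=$(hostname -I | awk '{print $1}')
#   THIS_NAME=$(etcd_name_for_ip "$THIS_IP")
#   CLUSTER=$(etcd_initial_cluster)
```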

- Deploying etcd 3.3

#Download and extract the software

wget https://github.com/etcd-io/etcd/releases/download/v3.3.13/etcd-v3.3.13-linux-amd64.tar.gz

tar xf etcd-v3.3.13-linux-amd64.tar.gz

mv etcd-v3.3.13-linux-amd64/{etcd,etcdctl} /opt/etcd/bin && rm -rf etcd-v3.3.13-linux-amd64*

#Service configuration file

cat << EOF | tee /opt/etcd/cfg/etcd.conf

#[Member]

ETCD_NAME="${THIS_NAME}"

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://${THIS_IP}:2380"

ETCD_LISTEN_CLIENT_URLS="https://${THIS_IP}:2379,http://127.0.0.1:2379"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://${THIS_IP}:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://${THIS_IP}:2379"

ETCD_INITIAL_CLUSTER="${CLUSTER}"

ETCD_INITIAL_CLUSTER_TOKEN="${TOKEN}"

ETCD_INITIAL_CLUSTER_STATE="${CLUSTER_STATE}"

#[Security]

ETCD_CERT_FILE="/opt/etcd/ssl/server.pem"

ETCD_KEY_FILE="/opt/etcd/ssl/server-key.pem"

ETCD_TRUSTED_CA_FILE="/opt/etcd/ssl/ca.pem"

ETCD_CLIENT_CERT_AUTH="true"

ETCD_PEER_CERT_FILE="/opt/etcd/ssl/server.pem"

ETCD_PEER_KEY_FILE="/opt/etcd/ssl/server-key.pem"

ETCD_PEER_TRUSTED_CA_FILE="/opt/etcd/ssl/ca.pem"

ETCD_PEER_CLIENT_CERT_AUTH="true"

EOF

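#Parameter summary

ETCD_DATA_DIR #data directory
ETCD_LISTEN_PEER_URLS #listen address for peer (cluster) traffic
ETCD_LISTEN_CLIENT_URLS #listen address for client traffic
ETCD_INITIAL_ADVERTISE_PEER_URLS #peer address advertised to the cluster
ETCD_ADVERTISE_CLIENT_URLS #client address advertised to clients
ETCD_INITIAL_CLUSTER #addresses of all cluster members
ETCD_INITIAL_CLUSTER_TOKEN #cluster token
ETCD_INITIAL_CLUSTER_STATE #state when joining (new for a new cluster, existing to join an existing one)

#systemd unit file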

cat << EOF | tee /usr/lib/systemd/system/etcd.service

[Unit]

Description=Etcd Server

After=network.target

After=network-online.target

Wants=network-online.target

[Service]

Type=notify

EnvironmentFile=/opt/etcd/cfg/etcd.conf

ExecStart=/opt/etcd/bin/etcd \\

--name=\${ETCD_NAME} \\

--data-dir=\${ETCD_DATA_DIR} \\

--listen-peer-urls=\${ETCD_LISTEN_PEER_URLS} \\

--listen-client-urls=\${ETCD_LISTEN_CLIENT_URLS} \\

--advertise-client-urls=\${ETCD_ADVERTISE_CLIENT_URLS} \\

--initial-advertise-peer-urls=\${ETCD_INITIAL_ADVERTISE_PEER_URLS} \\

--initial-cluster=\${ETCD_INITIAL_CLUSTER} \\

--initial-cluster-token=\${ETCD_INITIAL_CLUSTER_TOKEN} \\

--initial-cluster-state=\${ETCD_INITIAL_CLUSTER_STATE} \\

--cert-file=\${ETCD_CERT_FILE} \\

--key-file=\${ETCD_KEY_FILE} \\

--trusted-ca-file=\${ETCD_TRUSTED_CA_FILE} \\

--client-cert-auth=\${ETCD_CLIENT_CERT_AUTH} \\

--peer-cert-file=\${ETCD_PEER_CERT_FILE} \\

--peer-key-file=\${ETCD_PEER_KEY_FILE} \\

--peer-trusted-ca-file=\${ETCD_PEER_TRUSTED_CA_FILE} \\

--peer-client-cert-auth=\${ETCD_PEER_CLIENT_CERT_AUTH}

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

EOF

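#Start the etcd service (start at least two nodes at about the same time so the cluster can reach quorum)

systemctl daemon-reload && systemctl enable --now etcd.service

#Create a symlink for the etcdctl command

ln -s /opt/etcd/bin/etcdctl /usr/local/bin

#Verify the cluster state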

etcdctl \

--ca-file=/opt/etcd/ssl/ca.pem \

--cert-file=/opt/etcd/ssl/server.pem \

--key-file=/opt/etcd/ssl/server-key.pem \

--endpoints="https://${HOST_1}:2379,https://${HOST_2}:2379,https://${HOST_3}:2379" \

member list

etcdctl \

--ca-file=/opt/etcd/ssl/ca.pem \

--cert-file=/opt/etcd/ssl/server.pem \

--key-file=/opt/etcd/ssl/server-key.pem \

--endpoints="https://${HOST_1}:2379,https://${HOST_2}:2379,https://${HOST_3}:2379" \

cluster-health

- Deploying etcd 3.4

#Download and extract the software

wget https://github.com/etcd-io/etcd/releases/download/v3.4.15/etcd-v3.4.15-linux-amd64.tar.gz

tar xf etcd-v3.4.15-linux-amd64.tar.gz

mv etcd-v3.4.15-linux-amd64/{etcd,etcdctl} /opt/etcd/bin && rm -rf etcd-v3.4.15-linux-amd64*

#flannel talks to etcd through the v2 API, which etcd 3.4 disables by default, so ETCD_ENABLE_V2 is set below for flannel compatibility

#Service configuration file

cat << EOF | tee /opt/etcd/cfg/etcd.conf

#[Member]

ETCD_NAME="${THIS_NAME}"

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://${THIS_IP}:2380"

ETCD_LISTEN_CLIENT_URLS="https://${THIS_IP}:2379,http://127.0.0.1:2379"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://${THIS_IP}:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://${THIS_IP}:2379"

ETCD_INITIAL_CLUSTER="${CLUSTER}"

ETCD_INITIAL_CLUSTER_TOKEN="${TOKEN}"

ETCD_INITIAL_CLUSTER_STATE="${CLUSTER_STATE}"

ETCD_ENABLE_V2="true"

#[Security]

ETCD_CERT_FILE="/opt/etcd/ssl/server.pem"

ETCD_KEY_FILE="/opt/etcd/ssl/server-key.pem"

ETCD_TRUSTED_CA_FILE="/opt/etcd/ssl/ca.pem"

ETCD_CLIENT_CERT_AUTH="true"

ETCD_PEER_CERT_FILE="/opt/etcd/ssl/server.pem"

ETCD_PEER_KEY_FILE="/opt/etcd/ssl/server-key.pem"

ETCD_PEER_TRUSTED_CA_FILE="/opt/etcd/ssl/ca.pem"

ETCD_PEER_CLIENT_CERT_AUTH="true"

EOF

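#etcd 3.4 reads its settings from environment variables automatically, so parameters already present in the EnvironmentFile do not need to be repeated as ExecStart flags

#systemd unit file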

cat << EOF | tee /usr/lib/systemd/system/etcd.service

[Unit]

Description=Etcd Server

After=network.target

After=network-online.target

Wants=network-online.target

[Service]

Type=notify

EnvironmentFile=/opt/etcd/cfg/etcd.conf

ExecStart=/opt/etcd/bin/etcd

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

EOF

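#Start the etcd service (start at least two nodes at about the same time so the cluster can reach quorum)

systemctl daemon-reload && systemctl enable --now etcd.service

#Create a symlink for the etcdctl command

ln -s /opt/etcd/bin/etcdctl /usr/local/bin

#Verify the cluster state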

etcdctl \

--cacert=/opt/etcd/ssl/ca.pem \

--cert=/opt/etcd/ssl/server.pem \

--key=/opt/etcd/ssl/server-key.pem \

--endpoints="https://${HOST_1}:2379,https://${HOST_2}:2379,https://${HOST_3}:2379" \

member list

etcdctl \

--cacert=/opt/etcd/ssl/ca.pem \

--cert=/opt/etcd/ssl/server.pem \

--key=/opt/etcd/ssl/server-key.pem \

--endpoints="https://${HOST_1}:2379,https://${HOST_2}:2379,https://${HOST_3}:2379" \

--write-out=table \

endpoint health

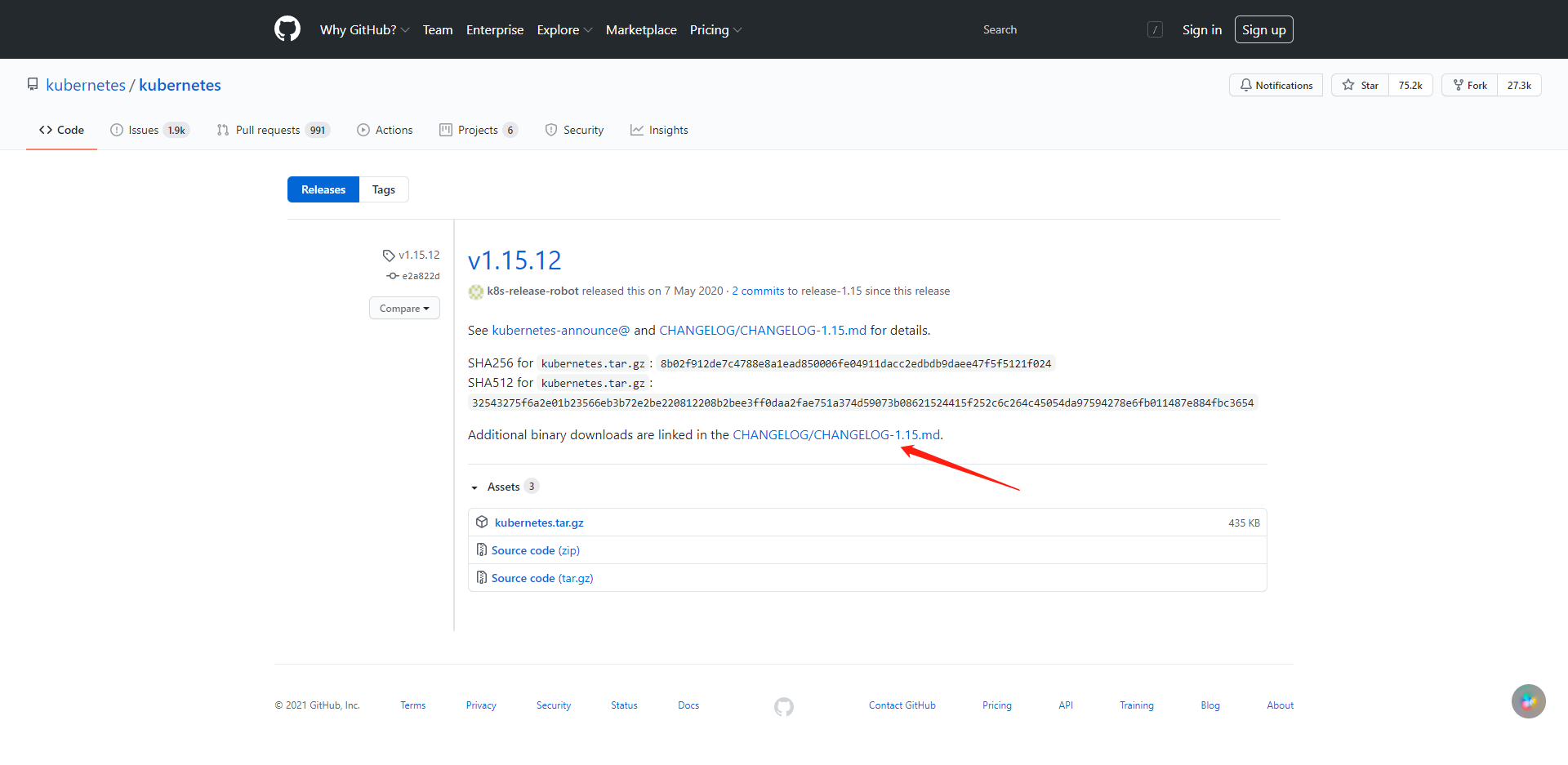

Download the Kubernetes server binaries

- Open the GitHub project page

- Open the tags tab and select the version to download

- Click CHANGELOG-${version}.md to open the release notes page

#v1.15

https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG/CHANGELOG-1.15.md#downloads-for-v1150

#v1.16

https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG/CHANGELOG-1.16.md#downloads-for-v1160

#v1.17

https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG/CHANGELOG-1.17.md#downloads-for-v1170

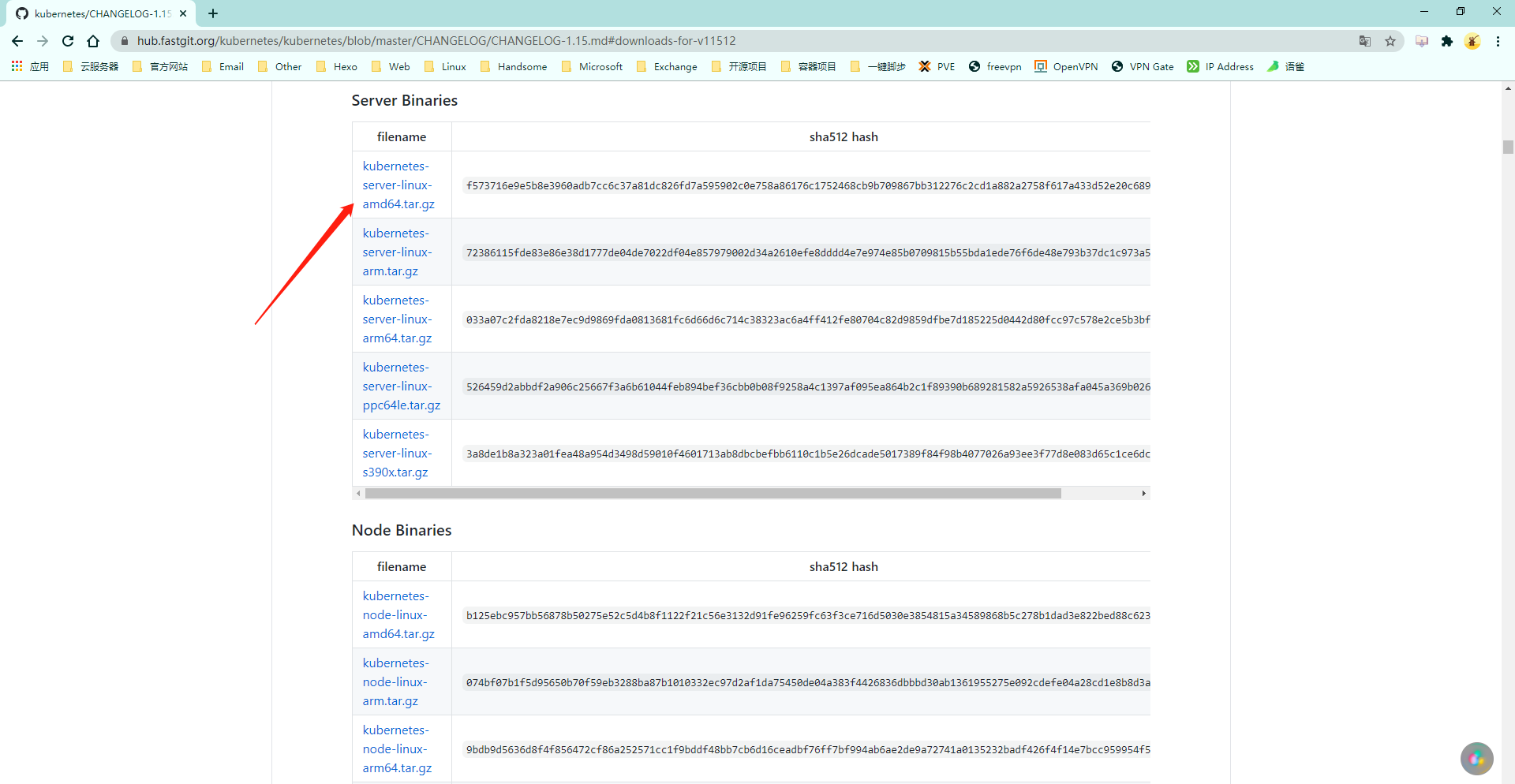

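- Download the Server Binaries

- Prepare the directory layout, certificates and Kubernetes binaries

#Run on every master and node host

#Create the directory layout

mkdir -p /opt/kubernetes/{bin,cfg,ssl,logs}

#Send the certificates from k8s-harbor to the master and node hosts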

cd /opt/cfssl/k8s/ && for i in master1 node1 node2;do scp *pem k8s-${i}:/opt/kubernetes/ssl ;done

#Download and extract the software

wget https://dl.k8s.io/v1.16.0/kubernetes-server-linux-amd64.tar.gz && tar xf kubernetes-server-linux-amd64.tar.gz

#Only the executables are needed

mv kubernetes/server/bin/* /opt/kubernetes/bin && rm -rf kubernetes*

rm /opt/kubernetes/bin/{*.tar,*_tag}

Deploy the master components on k8s-master1

- kube-apiserver service configuration file

cat << EOF | tee /opt/kubernetes/cfg/kube-apiserver.conf

KUBE_APISERVER_OPTS="--logtostderr=false \\

--v=2 \\

--log-dir=/opt/kubernetes/logs \\

--etcd-servers=https://192.168.1.63:2379,https://192.168.1.65:2379,https://192.168.1.66:2379 \\

--bind-address=192.168.1.63 \\

--secure-port=6443 \\

--advertise-address=192.168.1.63 \\

--allow-privileged=true \\

--service-cluster-ip-range=10.0.0.0/24 \\

--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,ResourceQuota,NodeRestriction \\

--authorization-mode=RBAC,Node \\

--enable-bootstrap-token-auth=true \\

--token-auth-file=/opt/kubernetes/cfg/token.csv \\

--service-node-port-range=30000-32767 \\

--kubelet-client-certificate=/opt/kubernetes/ssl/server.pem \\

--kubelet-client-key=/opt/kubernetes/ssl/server-key.pem \\

--tls-cert-file=/opt/kubernetes/ssl/server.pem \\

--tls-private-key-file=/opt/kubernetes/ssl/server-key.pem \\

--client-ca-file=/opt/kubernetes/ssl/ca.pem \\

--service-account-key-file=/opt/kubernetes/ssl/ca-key.pem \\

--etcd-cafile=/opt/etcd/ssl/ca.pem \\

--etcd-certfile=/opt/etcd/ssl/server.pem \\

--etcd-keyfile=/opt/etcd/ssl/server-key.pem \\

--audit-log-maxage=30 \\

--audit-log-maxbackup=3 \\

--audit-log-maxsize=100 \\

--audit-log-path=/opt/kubernetes/logs/k8s-audit.log"

EOF

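#Flag summary

https://v1-16.docs.kubernetes.io/zh/docs/reference/command-line-tools-reference/kube-apiserver/

--logtostderr #log to stderr (false here, so logs go to files under --log-dir)
--v #log verbosity
--log-dir #log directory
--etcd-servers #etcd cluster endpoints
--bind-address #listen address
--secure-port #HTTPS port
--advertise-address #address advertised to the cluster
--allow-privileged #allow privileged containers
--service-cluster-ip-range #Service virtual IP range
--enable-admission-plugins #admission control plugins
--authorization-mode #authorization modes, enabling RBAC and Node authorization
--enable-bootstrap-token-auth #enable the TLS bootstrap mechanism
--token-auth-file #bootstrap token file
--service-node-port-range #default port range for NodePort Services
--kubelet-client-xxx #client certificate the apiserver uses to access kubelet
--tls-xxx-file #apiserver HTTPS certificate
--etcd-xxxfile #certificates for connecting to the etcd cluster
--audit-log-xxx #audit log settings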

cat << EOF | tee /opt/kubernetes/cfg/token.csv

c47ffb939f5ca36231d9e3121a252940,kubelet-bootstrap,10001,"system:node-bootstrapper"

EOF

#Column 1: random token, generated for example with: head -c 16 /dev/urandom | od -An -t x | tr -d ' '

#Column 2: user name

#Column 3: UID

#Column 4: user group
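
Rather than reusing the sample token, a fresh one can be generated per cluster. The sketch below wraps the command from the comment above; `new_bootstrap_token` and `token_csv_line` are hypothetical helper names, and the user, UID and group are the ones used in this post. Whatever token is written here must also be the TOKEN value used later for bootstrap.kubeconfig.

```shell
# Generate a 32-character hex token from 16 random bytes.
new_bootstrap_token() {
    head -c 16 /dev/urandom | od -An -t x | tr -d ' \n'
}

# Print one token.csv line in the format: token,user,uid,"group"
token_csv_line() {
    printf '%s,kubelet-bootstrap,10001,"system:node-bootstrapper"\n' "$1"
}

# Usage on k8s-master1 (not executed here):
#   TOKEN=$(new_bootstrap_token)
#   token_csv_line "$TOKEN" > /opt/kubernetes/cfg/token.csv
```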

cat << EOF | tee /usr/lib/systemd/system/kube-apiserver.service

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=/opt/kubernetes/cfg/kube-apiserver.conf

ExecStart=/opt/kubernetes/bin/kube-apiserver \$KUBE_APISERVER_OPTS

Restart=on-failure

[Install]

WantedBy=multi-user.target

EOF

- kube-controller-manager service configuration file

cat << EOF | tee /opt/kubernetes/cfg/kube-controller-manager.conf

KUBE_CONTROLLER_MANAGER_OPTS="--logtostderr=false \\

--v=2 \\

--log-dir=/opt/kubernetes/logs \\

--leader-elect=true \\

--master=127.0.0.1:8080 \\

--address=127.0.0.1 \\

--allocate-node-cidrs=true \\

--cluster-cidr=10.244.0.0/16 \\

--service-cluster-ip-range=10.0.0.0/24 \\

--cluster-signing-cert-file=/opt/kubernetes/ssl/ca.pem \\

--cluster-signing-key-file=/opt/kubernetes/ssl/ca-key.pem \\

--root-ca-file=/opt/kubernetes/ssl/ca.pem \\

--service-account-private-key-file=/opt/kubernetes/ssl/ca-key.pem \\

--experimental-cluster-signing-duration=87600h0m0s"

EOF

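#Flag summary

https://v1-16.docs.kubernetes.io/zh/docs/reference/command-line-tools-reference/kube-controller-manager/

--master #connect to the apiserver through the local insecure port 8080
--leader-elect #elect a leader automatically when several instances run (HA)
--cluster-cidr #CIDR range of the Pods in the cluster (must match the CNI plugin's network range)
--service-cluster-ip-range #Service virtual IP range
--cluster-signing-cert-file #CA certificate used to issue kubelet certificates automatically; must be the same CA the apiserver uses
--cluster-signing-key-file #CA key used to issue kubelet certificates automatically; must be the same CA the apiserver uses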

cat << EOF | tee /usr/lib/systemd/system/kube-controller-manager.service

[Unit]

Description=Kubernetes Controller Manager

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=/opt/kubernetes/cfg/kube-controller-manager.conf

ExecStart=/opt/kubernetes/bin/kube-controller-manager \$KUBE_CONTROLLER_MANAGER_OPTS

Restart=on-failure

[Install]

WantedBy=multi-user.target

EOF

- kube-scheduler service configuration file

cat << EOF | tee /opt/kubernetes/cfg/kube-scheduler.conf

KUBE_SCHEDULER_OPTS="--logtostderr=false \\

--v=2 \\

--log-dir=/opt/kubernetes/logs \\

--leader-elect \\

--master=127.0.0.1:8080 \\

--address=127.0.0.1"

EOF

#Flag summary

https://v1-16.docs.kubernetes.io/zh/docs/reference/command-line-tools-reference/kube-scheduler/

--master #connect to the apiserver through the local insecure port 8080
--leader-elect #elect a leader automatically when several instances run (HA)

cat << EOF | tee /usr/lib/systemd/system/kube-scheduler.service

[Unit]

Description=Kubernetes Scheduler

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=/opt/kubernetes/cfg/kube-scheduler.conf

ExecStart=/opt/kubernetes/bin/kube-scheduler \$KUBE_SCHEDULER_OPTS

Restart=on-failure

[Install]

WantedBy=multi-user.target

EOF

- Start the services

systemctl daemon-reload

systemctl enable --now kube-apiserver

systemctl enable --now kube-scheduler

systemctl enable --now kube-controller-manager

- Verify the services

#Create a symlink for kubectl

ln -s /opt/kubernetes/bin/kubectl /usr/local/sbin/

#Check the result

kubectl get cs

NAME AGE

etcd-2 <unknown>

scheduler <unknown>

controller-manager <unknown>

etcd-1 <unknown>

etcd-0 <unknown>

Automatic certificate issuance with TLS bootstrapping

#Bind the kubelet-bootstrap user to the system cluster role

kubectl create clusterrolebinding kubelet-bootstrap \

--clusterrole=system:node-bootstrapper \

--user=kubelet-bootstrap

Generate the kubeconfig files on k8s-master1

- Set environment variables

#apiserver

KUBE_APISERVER="https://192.168.1.63:6443"

#The random token from token.csv

TOKEN="c47ffb939f5ca36231d9e3121a252940"

- Generate bootstrap.kubeconfig, used by the kubelet service on the nodes

kubectl config set-cluster kubernetes \

--certificate-authority=/opt/kubernetes/ssl/ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=bootstrap.kubeconfig

kubectl config set-credentials "kubelet-bootstrap" \

--token=${TOKEN} \

--kubeconfig=bootstrap.kubeconfig

kubectl config set-context default \

--cluster=kubernetes \

--user="kubelet-bootstrap" \

--kubeconfig=bootstrap.kubeconfig

kubectl config use-context default --kubeconfig=bootstrap.kubeconfig

- Generate kube-proxy.kubeconfig, used by the kube-proxy service on the nodes

kubectl config set-cluster kubernetes \

--certificate-authority=/opt/kubernetes/ssl/ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=kube-proxy.kubeconfig

kubectl config set-credentials kube-proxy \

--client-certificate=/opt/kubernetes/ssl/kube-proxy.pem \

--client-key=/opt/kubernetes/ssl/kube-proxy-key.pem \

--embed-certs=true \

--kubeconfig=kube-proxy.kubeconfig

kubectl config set-context default \

--cluster=kubernetes \

--user=kube-proxy \

--kubeconfig=kube-proxy.kubeconfig

kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig

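- Generate the config file used for remote administration

#The config file lets any host manage the cluster through the apiserver remotely (keep it strictly confidential)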

kubectl config set-cluster kubernetes \

--certificate-authority=/opt/kubernetes/ssl/ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=config

kubectl config set-credentials cluster-admin \

--certificate-authority=/opt/kubernetes/ssl/ca.pem \

--embed-certs=true \

--client-key=/opt/kubernetes/ssl/admin-key.pem \

--client-certificate=/opt/kubernetes/ssl/admin.pem \

--kubeconfig=config

kubectl config set-context default \

--cluster=kubernetes \

--user=cluster-admin \

--kubeconfig=config

kubectl config use-context default --kubeconfig=config

#Manage the cluster remotely from another host

kubectl get cs --kubeconfig=config

mkdir ~/.kube

mv config ~/.kube/

- Send the generated kubeconfig files to the other hosts

for i in node1 node2;do scp bootstrap.kubeconfig kube-proxy.kubeconfig k8s-${i}:/opt/kubernetes/cfg ;done
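
The three kubeconfig files above are all built with the same four `kubectl config` steps and differ only in the credentials. As an illustration of that pattern, here is a sketch of a wrapper; `write_kubeconfig` and the `KUBECTL` override are hypothetical, while the kubectl subcommands and flags are the ones already used in this section.

```shell
# Command used to run kubectl; override it (for example KUBECTL=echo) for a dry run.
KUBECTL=${KUBECTL:-kubectl}

# write_kubeconfig FILE SERVER USER [set-credentials options...]
# Runs the four steps used above: set-cluster, set-credentials,
# set-context and use-context, all against the same kubeconfig file.
write_kubeconfig() {
    file=$1; server=$2; user=$3
    shift 3
    "$KUBECTL" config set-cluster kubernetes \
        --certificate-authority=/opt/kubernetes/ssl/ca.pem \
        --embed-certs=true \
        --server="$server" \
        --kubeconfig="$file" &&
    "$KUBECTL" config set-credentials "$user" "$@" --kubeconfig="$file" &&
    "$KUBECTL" config set-context default \
        --cluster=kubernetes --user="$user" --kubeconfig="$file" &&
    "$KUBECTL" config use-context default --kubeconfig="$file"
}

# Usage on k8s-master1 (not executed here):
#   write_kubeconfig bootstrap.kubeconfig "$KUBE_APISERVER" kubelet-bootstrap --token="$TOKEN"
#   write_kubeconfig kube-proxy.kubeconfig "$KUBE_APISERVER" kube-proxy \
#       --client-certificate=/opt/kubernetes/ssl/kube-proxy.pem \
#       --client-key=/opt/kubernetes/ssl/kube-proxy-key.pem \
#       --embed-certs=true
```

Setting `KUBECTL=echo` before calling the function prints the four commands instead of running them, which is a cheap way to review them first.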

Deploy the node components on k8s-node1

- kubelet service configuration file

#Create the configuration file

cat << EOF | tee /opt/kubernetes/cfg/kubelet.conf

KUBELET_OPTS="--logtostderr=false \\

--v=2 \\

--log-dir=/opt/kubernetes/logs \\

--hostname-override=k8s-node1 \\

--network-plugin=cni \\

--kubeconfig=/opt/kubernetes/cfg/kubelet.kubeconfig \\

--bootstrap-kubeconfig=/opt/kubernetes/cfg/bootstrap.kubeconfig \\

--config=/opt/kubernetes/cfg/kubelet-config.yml \\

--cert-dir=/opt/kubernetes/ssl \\

--pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google-containers/pause-amd64:3.0"

EOF

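#Flag summary

https://v1-16.docs.kubernetes.io/zh/docs/reference/command-line-tools-reference/kubelet/

--hostname-override #hostname of this host (must be resolvable)
--network-plugin #enable the CNI network plugin
--kubeconfig #generated automatically; used afterwards to connect to the apiserver
--bootstrap-kubeconfig #used on first start to request a certificate from the apiserver
--config #configuration parameter file
--cert-dir #directory where the kubelet certificates are generated
--pod-infra-container-image #image of the container that manages the Pod network (the first image started for every Pod)

#Create the configuration parameter yml file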

cat << EOF | tee /opt/kubernetes/cfg/kubelet-config.yml

kind: KubeletConfiguration
apiVersion: kubelet.config.k8s.io/v1beta1
address: 0.0.0.0
port: 10250
readOnlyPort: 10255
cgroupDriver: systemd
clusterDNS:
- 10.0.0.2
clusterDomain: cluster.local
failSwapOn: false
authentication:
  anonymous:
    enabled: false
  webhook:
    cacheTTL: 2m0s
    enabled: true
  x509:
    clientCAFile: /opt/kubernetes/ssl/ca.pem
authorization:
  mode: Webhook
  webhook:
    cacheAuthorizedTTL: 5m0s
    cacheUnauthorizedTTL: 30s
evictionHard:
  imagefs.available: 15%
  memory.available: 100Mi
  nodefs.available: 10%
  nodefs.inodesFree: 5%
maxOpenFiles: 1000000
maxPods: 110

EOF

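#The cgroup driver must match the driver reported by docker info

#clusterDNS is the CoreDNS address

#failSwapOn: false lets kubelet start even when swap is enabled

#Create the systemd unit file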

cat << EOF | tee /usr/lib/systemd/system/kubelet.service

[Unit]

Description=Kubernetes Kubelet

After=docker.service

Requires=docker.service

[Service]

EnvironmentFile=/opt/kubernetes/cfg/kubelet.conf

ExecStart=/opt/kubernetes/bin/kubelet \$KUBELET_OPTS

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

EOF

- kube-proxy service configuration file

#Create the configuration file

cat << EOF | tee /opt/kubernetes/cfg/kube-proxy.conf

KUBE_PROXY_OPTS="--logtostderr=false \\

--v=2 \\

--log-dir=/opt/kubernetes/logs \\

--config=/opt/kubernetes/cfg/kube-proxy-config.yml"

EOF

#Flag reference

https://v1-16.docs.kubernetes.io/zh/docs/reference/command-line-tools-reference/kube-proxy/

#Create the configuration parameter yml file

cat << EOF | tee /opt/kubernetes/cfg/kube-proxy-config.yml

kind: KubeProxyConfiguration
apiVersion: kubeproxy.config.k8s.io/v1alpha1
address: 0.0.0.0
metricsBindAddress: 0.0.0.0:10249
clientConnection:
  kubeconfig: /opt/kubernetes/cfg/kube-proxy.kubeconfig
hostnameOverride: k8s-node1

clusterCIDR: 10.244.0.0/16

mode: ipvs
ipvs:
  scheduler: "rr"
iptables:
  masqueradeAll: true

EOF

#hostnameOverride: hostname of this host

#mode: proxy mode, ipvs here

#Create the systemd unit file

cat << EOF | tee /usr/lib/systemd/system/kube-proxy.service

[Unit]

Description=Kubernetes Proxy

After=network.target

[Service]

EnvironmentFile=/opt/kubernetes/cfg/kube-proxy.conf

ExecStart=/opt/kubernetes/bin/kube-proxy \$KUBE_PROXY_OPTS

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

EOF

- Start the services

systemctl daemon-reload

systemctl enable --now kubelet

systemctl enable --now kube-proxy

- Verify the services

tail -f /opt/kubernetes/logs/kubelet.INFO

No valid private key and/or certificate found, reusing existing private key or creating a new one

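- Send all configuration files to k8s-node2

#Other nodes can use these configuration files after changing only a few fields

scp /opt/kubernetes/cfg/* k8s-node2:/opt/kubernetes/cfg

#The systemd unit files can be shared by other nodes without changes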

scp /usr/lib/systemd/system/kube* k8s-node2:/usr/lib/systemd/system

Issue the certificate for k8s-node1 from k8s-master1

#List the certificate signing requests (the condition is Pending at this point)

kubectl get csr

NAME AGE REQUESTOR CONDITION

node-csr-hEydWXzvobAxPz4mQ9iCJgpB5HYrYd91qTarip9Dyrs 2m59s kubelet-bootstrap Pending

#Approve the certificate signing request

kubectl certificate approve node-csr-hEydWXzvobAxPz4mQ9iCJgpB5HYrYd91qTarip9Dyrs

#List the certificate signing requests again (the condition is now Approved,Issued)

kubectl get csr

NAME AGE REQUESTOR CONDITION

node-csr-hEydWXzvobAxPz4mQ9iCJgpB5HYrYd91qTarip9Dyrs 7m40s kubelet-bootstrap Approved,Issued
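
Copying the long request name by hand gets tedious once several nodes join. The sketch below filters the `kubectl get csr` table for rows whose last column is Pending; `pending_csr_names` is a hypothetical helper, and it assumes the four-column output shown above. Approving everything that is pending skips the manual review of each request, so it only suits a lab like this one.

```shell
# Read the table printed by "kubectl get csr" on stdin and print the names
# of the requests whose CONDITION column is exactly "Pending".
pending_csr_names() {
    awk 'NR > 1 && $NF == "Pending" { print $1 }'
}

# Usage on k8s-master1 (not executed here):
#   kubectl get csr | pending_csr_names | xargs -r kubectl certificate approve
```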

Deploy the node components on k8s-node2

- Edit the configuration files

vim /opt/kubernetes/cfg/kube-proxy-config.yml

hostnameOverride: k8s-node2

vim /opt/kubernetes/cfg/kubelet.conf

--hostname-override=k8s-node2 \
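
The two manual edits can also be applied with sed, which helps when more nodes are added later. This is a sketch assuming GNU sed (as shipped with CentOS 7) and the file names used in this post; `set_node_name` is a hypothetical helper.

```shell
# set_node_name CFG_DIR NODE_NAME
# Rewrites the hostname in kube-proxy-config.yml and kubelet.conf in place.
set_node_name() {
    sed -i "s|^hostnameOverride:.*|hostnameOverride: $2|" "$1/kube-proxy-config.yml" &&
    sed -i "s|--hostname-override=[^ ]*|--hostname-override=$2|" "$1/kubelet.conf"
}

# Usage on k8s-node2 (not executed here):
#   set_node_name /opt/kubernetes/cfg k8s-node2
```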

- Start the services

systemctl daemon-reload

systemctl enable --now kubelet

systemctl enable --now kube-proxy

- Verify the services

tail -f /opt/kubernetes/logs/kubelet.INFO

No valid private key and/or certificate found, reusing existing private key or creating a new one

Issue the certificate for k8s-node2 from k8s-master1

#List the certificate signing requests (the new request is Pending)

kubectl get csr

NAME AGE REQUESTOR CONDITION

node-csr-ek0DPmvBH9nT4z-1q8Hwc7UTiXgS-gnngOlGDlPe-O4 3m26s kubelet-bootstrap Pending

node-csr-hEydWXzvobAxPz4mQ9iCJgpB5HYrYd91qTarip9Dyrs 21m kubelet-bootstrap Approved,Issued

#Approve the certificate signing request

kubectl certificate approve node-csr-ek0DPmvBH9nT4z-1q8Hwc7UTiXgS-gnngOlGDlPe-O4

#List the nodes that have joined

kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-node1 NotReady <none> 15m v1.16.0

k8s-node2 NotReady <none> 83s v1.16.0

Install the CNI network plugins on the nodes

#Confirm that the CNI plugin is enabled

grep "cni" /opt/kubernetes/cfg/kubelet.conf

#Download and extract the software on k8s-node1 and k8s-node2

mkdir -p /opt/cni/bin /etc/cni/net.d

wget https://github.com/containernetworking/plugins/releases/download/v0.8.2/cni-plugins-linux-amd64-v0.8.2.tgz

tar xf cni-plugins-linux-amd64-v0.8.2.tgz -C /opt/cni/bin && rm cni-plugins-linux-amd64-v0.8.2.tgz

Deploy the flannel network from the master node

#Reference yaml files provided upstream

#Kubernetes v1.17+

https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

#Kubernetes v1.6-v1.15

https://index.swireb.cn/cfg/k8s/k8s-master-yaml/kube-flannel.yaml

#Install flannel

kubectl apply -f https://index.swireb.cn/cfg/k8s/k8s-master-yaml/kube-flannel.yaml

#Check that flannel is running (if a pod stays Pending for a long time, check the kubelet log on the node)

kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

kube-flannel-ds-amd64-5l8cm 1/1 Running 0 18m

kube-flannel-ds-amd64-qbn52 1/1 Running 0 18m

#The nodes are now Ready

kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-node1 Ready <none> 3h28m v1.16.0

k8s-node2 Ready <none> 3h27m v1.16.0

#Show node details

kubectl describe node k8s-node1

#Authorize the apiserver to access kubelet

kubectl apply -f https://index.swireb.cn/cfg/k8s/k8s-master-yaml/apiserver-to-kubelet-rbac.yaml

Deploy CoreDNS from the master node

#coredns.yaml can be based on the official coredns.yaml.sed file

clusterIP (must match the clusterDNS address in kubelet-config.yml)

kubernetes cluster.local (must match the clusterDomain in kubelet-config.yml)

#Install CoreDNS

kubectl apply -f https://index.swireb.cn/cfg/k8s/k8s-master-yaml/coredns.yaml

#Check that CoreDNS is running

kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-5bd5f9dbd9-592ls 1/1 Running 0 25m

#Show the CoreDNS service ports

kubectl get svc -n kube-system

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kube-dns ClusterIP 10.0.0.2 <none> 53/UDP,53/TCP,9153/TCP 51m

#Test the CoreDNS service

kubectl apply -f https://index.swireb.cn/cfg/k8s/k8s-master-yaml/buxybox.yaml

kubectl exec -it busybox sh

nslookup kubernetes #test in-cluster name resolution

Server: 10.0.0.2

Address 1: 10.0.0.2 kube-dns.kube-system.svc.cluster.local

Name: kubernetes

Address 1: 10.0.0.1 kubernetes.default.svc.cluster.local

nslookup www.baidu.com #test external name resolution

Server: 10.0.0.2

Address 1: 10.0.0.2 kube-dns.kube-system.svc.cluster.local

Name: www.baidu.com

Address 1: 163.177.151.110

Address 2: 163.177.151.109

Start an nginx container from the master node

#Create and manage the nginx container through a deployment

kubectl create deployment myweb --image=harbor.host.com/public/nginx:1.19

#Check the deployment status

kubectl get deployment

NAME READY UP-TO-DATE AVAILABLE AGE

myweb 1/1 1 1 74s

#Check the pod status

kubectl get pods

NAME READY STATUS RESTARTS AGE

myweb-7894687bc6-ptkn5 1/1 Running 0 3m23s

#Show pod details

kubectl describe pod myweb-7894687bc6-ptkn5

#暴露myweb的端口到宿主机

kubectl expose deployment myweb --port=80 --type=NodePort

#查看myweb暴露的端口信息

kubectl get services

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.0.0.1 <none> 443/TCP 13m

myweb NodePort 10.0.0.8 <none> 80:31530/TCP 24s

#Access the nginx test page (through either node)

curl http://192.168.1.65:31530

curl http://192.168.1.66:31530
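
The node port is assigned at random from the NodePort range, so 31530 will most likely be a different number on another cluster. As a small sketch, the port can be pulled out of the PORT(S) column instead of being copied by hand. The helper name `nodeport_from_ports` is an assumption made for this example.

```shell
#!/usr/bin/env bash
# Hypothetical helper: extract the node port from a PORT(S) value
# such as "80:31530/TCP" (service port : node port / protocol).
nodeport_from_ports() {
  local ports=$1
  ports=${ports#*:}              # drop everything up to and including the first ":"
  printf '%s\n' "${ports%%/*}"   # drop "/TCP"
}

# Example (not executed here). kubectl can also return the value directly:
#   port=$(kubectl get svc myweb -o jsonpath='{.spec.ports[0].nodePort}')
#   for node in 192.168.1.65 192.168.1.66; do
#     curl -s -o /dev/null -w "$node %{http_code}\n" "http://$node:$port"
#   done
```

The string helper only handles a single port mapping. For services with several ports the `jsonpath` form shown in the comment is the safer choice.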

Deploying the web management UIs from the master node

#Install the dashboard

kubectl apply -f https://raw.githubusercontent.com/kubernetes/dashboard/v2.2.0/aio/deploy/recommended.yaml

#Check the dashboard status

kubectl get pods -n kubernetes-dashboard

NAME READY STATUS RESTARTS AGE

dashboard-metrics-scraper-7b8b58dc8b-7xh6t 1/1 Running 0 2m20s

kubernetes-dashboard-7867cbccbb-n2cvj 1/1 Running 0 2m20s

#Create the service account

cat <<EOF | kubectl apply -f -
apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kubernetes-dashboard
EOF

#Create the ClusterRoleBinding

cat <<EOF | kubectl apply -f -
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: admin-user
  namespace: kubernetes-dashboard
EOF

#Expose the dashboard port

kubectl patch svc kubernetes-dashboard -p '{"spec":{"type":"NodePort"}}' -n kubernetes-dashboard

#Check the port exposed for the dashboard

kubectl get svc -n kubernetes-dashboard

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

dashboard-metrics-scraper ClusterIP 10.0.0.223 <none> 8000/TCP 2m53s

kubernetes-dashboard NodePort 10.0.0.65 <none> 443:30073/TCP 2m53s

#Get the access token

kubectl -n kubernetes-dashboard get secret $(kubectl -n kubernetes-dashboard get sa/admin-user -o jsonpath="{.secrets[0].name}") -o go-template="{{.data.token | base64decode}}"

eyJhbGciOiJSUzI1NiIsImtpZCI6IiJ9.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJhZG1pbi11c2VyLXRva2VuLXNmcDR4Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6ImFkbWluLXVzZXIiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiI5ZWNhMWQyNC1iZWY0LTRhMWQtOGM4Mi02YjBlNTcyNTczNGIiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZXJuZXRlcy1kYXNoYm9hcmQ6YWRtaW4tdXNlciJ9.TjKBVEbJJCEbvjMY2Z33vkJnD8fEDWa9Vl5Tmsn4bjfUf0AZuDVF7m0FDRan4emASCzo4QGrQOVlOWV6zMMBYxn5fm498sepAMiQboTxJkdhWsTntK4-pqtkJDlgaCDTdCWdNn5CG1wQUym5L9Vq5bCsYGTB_MHV4uOf-tbQaRTbO7YCnVaAB0rCoB-VFQv_Q8fZa5HVGSvk5bzXVH4CZD3AvzlqjgP-P6bd6BMv5AURQ641XODHBYex4RPWyoXDcDli6drWgFyE47EhPOHiKFT2S-46orBG9Cy1ONlOwMDIkb6JWBNbppTKOEJUWOjITOHY_z63jD1Qeiy6X6pMlA
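
The token above is a JWT, so its middle segment is base64url-encoded JSON that names the service account it belongs to. If a login fails, decoding that segment is a quick way to confirm the right token was copied. This is only a sketch: the function name `jwt_payload` is made up for the example, and it relies on GNU `base64 -d`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the decoded payload (second segment) of a JWT.
# base64url uses "-" and "_" and omits padding, so both are normalized first.
jwt_payload() {
  local payload pad
  payload=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
  pad=$(( (4 - ${#payload} % 4) % 4 ))
  while [ "$pad" -gt 0 ]; do
    payload="${payload}="
    pad=$((pad - 1))
  done
  printf '%s' "$payload" | base64 -d
}
```

Usage: `jwt_payload "<token>"`. The signature is not verified here; the function only shows the claims.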

#Open the web management page in Firefox

https://192.168.1.65:30073/#/login

#Install Kuboard

kubectl apply -f https://kuboard.cn/install-script/kuboard.yaml

#Check the Kuboard status

kubectl get pods -l k8s.kuboard.cn/name=kuboard -n kube-system

NAME READY STATUS RESTARTS AGE

kuboard-59bdf4d5fb-cz67s 1/1 Running 0 91s

#Check the port exposed for Kuboard

kubectl get svc -n kube-system

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kuboard NodePort 10.0.0.114 <none> 80:32567/TCP 4m5s

#Get the access token

echo $(kubectl -n kube-system get secret $(kubectl -n kube-system get secret | grep kuboard-user | awk '{print $1}') -o go-template='{{.data.token}}' | base64 -d)

eyJhbGciOiJSUzI1NiIsImtpZCI6IiJ9.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJrdWJvYXJkLXVzZXItdG9rZW4tcWMycGsiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoia3Vib2FyZC11c2VyIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQudWlkIjoiZDA5M2FlNDItM2UzZS00NDMwLWE3YTMtMWNiODZjYWNmMjgwIiwic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50Omt1YmUtc3lzdGVtOmt1Ym9hcmQtdXNlciJ9.tyGY4vLZlwrgy0y5mPOVQrmqO1J6GYxRjNbGLfIEViuFzaytWkyDMAwYEBg0FasRu-fKdE4toRyEZ5WlgKUA1AuYWrpQTjSY_x9xhpW9cAKrFqXHfvYC3fhnNsI2v8zfMDtxWrsQ4h9jZi3nu2frVc5oLNrkmrV1VbLDOnVHezvRApIFpMj2uVf2dQRtBg5JdYHEF-meWnkzYjFIndPgU-yNXTYkOf6azxknfpjsEVusWz8zUSV3Cf-64qGA2O5Gr5VzmtODUC4y_d2v4yIjrR6q1gL5FaG4mdOeLnnPdQ1XQjAmFAAAthDDO7_zb7_mvMu4Sbg7fXQPODJzkTsjPA

#Open the web management page

http://192.168.1.65:32567

Handling kubelet service errors

- Common error messages

#Check the log

tail -f /opt/kubernetes/logs/kubelet.INFO

ListPodSandbox with filter nil from runtime service failed: rpc error: code = Unknown desc = Cannot connect to the Docker daemon at unix:///var/run/docker.sock. Is the docker daemon running? #if you see this error, restart the docker, kubelet, and kube-proxy services

Container runtime network not ready: NetworkReady=false reason:NetworkPluginNotReady message:docker: network plugin is not ready: cni config uninitialized #this error means the CNI plugins still need to be installed and the flannel network deployed

kubelet_network_linux.go:111] Not using `--random-fully` in the MASQUERADE rule for iptables because the local version of iptables does not support it #this message means iptables needs to be upgraded, or the kernel tuning was skipped

[ContainerManager]: Discovered runtime cgroups name: /system.slice/docker.service #this looks like a Kubernetes/Docker version compatibility issue (it does not seem to affect anything)
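
The mapping from log message to fix in the list above can be sketched as a small lookup, which is handy when scanning a long log. The function name `kubelet_hint` and the hint wording are made up for this example; the matched substrings come from the messages listed above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: map one kubelet log line to the fix suggested in this post.
kubelet_hint() {
  case "$1" in
    *"Cannot connect to the Docker daemon"*)
      echo "restart docker, kubelet and kube-proxy" ;;
    *"cni config uninitialized"*)
      echo "install the CNI plugins and deploy flannel" ;;
    *"--random-fully"*)
      echo "upgrade iptables or check the kernel tuning" ;;
    *)
      echo "no hint" ;;
  esac
}
```

Usage: `tail -n 200 /opt/kubernetes/logs/kubelet.INFO | while IFS= read -r line; do kubelet_hint "$line"; done | sort | uniq -c` prints how often each hint applies.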

- Building and installing iptables from source

#Install the build dependencies

yum install gcc make libnftnl-devel libmnl-devel autoconf automake libtool bison flex libnetfilter_conntrack-devel libnetfilter_queue-devel libpcap-devel -y

#Set the locale variable

export LC_ALL=C

#Download and unpack the source

wget https://www.netfilter.org/projects/iptables/files/iptables-1.6.2.tar.bz2 && tar xf iptables-1.6.2.tar.bz2

rm iptables-1.6.2.tar.bz2

#Build and install

cd iptables-1.6.2

./autogen.sh

./configure

make -j4

make install

#Verify the version

iptables -V

iptables v1.6.2
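
Before spending time on a source build, it can help to check whether the installed iptables is already new enough. The sketch below compares versions with GNU `sort -V` and uses 1.6.2 as the threshold because that is the version built in this post. Both function names are assumptions made for the example.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `iptables -V` output (e.g. "iptables v1.6.2") on
# stdin and print the bare version number.
iptables_version() {
  awk '{ sub(/^v/, "", $2); print $2; exit }'
}

# Hypothetical helper: succeed when version $1 >= version $2 (GNU sort -V order).
version_ge() {
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}
```

Usage: `version_ge "$(iptables -V | iptables_version)" 1.6.2 && echo "no rebuild needed"`.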

#Restart the services to pick up the new iptables

systemctl restart docker

systemctl restart kubelet

systemctl restart kube-proxy

Master high-availability architecture

- Adding k8s-master2 as a master node

#On k8s-master1, copy the software to k8s-master2

scp -r /opt/etcd k8s-master2:/opt

scp -r /opt/kubernetes k8s-master2:/opt

scp /usr/lib/systemd/system/kube*service k8s-master2:/usr/lib/systemd/system

#On k8s-master2, edit the configuration file

vi /opt/kubernetes/cfg/kube-apiserver.conf

--bind-address=192.168.1.64 \

--advertise-address=192.168.1.64 \

#On k8s-master2, clear the logs copied from k8s-master1

rm -rf /opt/kubernetes/logs/*

#Start the services

systemctl daemon-reload

systemctl enable --now kube-apiserver

systemctl enable --now kube-scheduler

systemctl enable --now kube-controller-manager

#Verify the services

ln -s /opt/kubernetes/bin/kubectl /usr/local/sbin/

kubectl get cs

NAME AGE

etcd-2 <unknown>

scheduler <unknown>

controller-manager <unknown>

etcd-1 <unknown>

etcd-0 <unknown>

- Configuring the nginx reverse proxy on k8s-nginx1 and k8s-nginx2

#Install the software

yum install -y nginx keepalived

#Edit the configuration file

vi /etc/nginx/nginx.conf

events {
    worker_connections 1024;
} #place the stream block after the events block

stream {
    log_format main '$remote_addr $upstream_addr - [$time_local] $status $upstream_bytes_sent';
    access_log /var/log/nginx/k8s-access.log main;

    upstream k8s-apiserver {
        server 192.168.1.63:6443; #Master1 APISERVER IP:PORT
        server 192.168.1.64:6443; #Master2 APISERVER IP:PORT
    }

    server {
        listen 6443;
        proxy_pass k8s-apiserver;
    }
}

#Start the service

systemctl enable --now nginx

- Configuring keepalived on k8s-nginx1 (the MASTER load balancer)

#Write the configuration file

cat > /etc/keepalived/keepalived.conf << EOF
global_defs {
    notification_email {
        [email protected]
        [email protected]
        [email protected]
    }
    notification_email_from [email protected]
    smtp_server 127.0.0.1
    smtp_connect_timeout 30
    router_id NGINX_MASTER
}

vrrp_script check_nginx {
    script "/etc/keepalived/check_nginx.sh" #the script decides whether nginx is healthy
}

vrrp_instance VI_1 {
    state MASTER
    interface ens33
    virtual_router_id 51 #VRRP router ID (unique per instance)
    priority 100 #priority (the backup server uses 90)
    advert_int 1 #VRRP advertisement interval of 1 second
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        192.168.1.60/24 #the VIP (virtual IP address)
    }
    track_script {
        check_nginx
    }
}
EOF

#Health-check script (exits 1 when no nginx process is running)

cat > /etc/keepalived/check_nginx.sh << "EOF"
#!/bin/bash
count=$(ps -ef |grep nginx |egrep -cv "grep|$$")
if [ "$count" -eq 0 ];then
    exit 1
else
    exit 0
fi
EOF

chmod +x /etc/keepalived/check_nginx.sh

#Start the service

systemctl enable --now keepalived

#Verify the service

ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:f9:ce:84 brd ff:ff:ff:ff:ff:ff

inet 192.168.1.61/24 brd 192.168.1.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet 192.168.1.60/24 scope global secondary ens33 #the VIP is now present

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fef9:ce84/64 scope link noprefixroute

valid_lft forever preferred_lft forever
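
The same check is useful on both load balancers during a failover test, so here is a minimal sketch that reports whether a host currently holds the VIP. The function name `has_vip` is an assumption made for this example; it only inspects the text printed by `ip a`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed when the `ip a` output on stdin contains an
# "inet <vip>/<prefix>" entry. $1 is the VIP without the prefix length.
has_vip() {
  local vip=$1
  # -F treats the dots literally; the trailing "/" keeps 192.168.1.6 from
  # matching 192.168.1.60.
  grep -Fq "inet ${vip}/"
}
```

Usage: `ip a | has_vip 192.168.1.60 && echo "this host holds the VIP"`. With the configuration above, stopping nginx on k8s-nginx1 should make the check fail there and succeed on k8s-nginx2 once k8s-nginx2 has been configured.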

- Configuring keepalived on k8s-nginx2 (the BACKUP load balancer)

#Write the configuration file

cat > /etc/keepalived/keepalived.conf << EOF
global_defs {
    notification_email {
        [email protected]
        [email protected]
        [email protected]
    }
    notification_email_from [email protected]
    smtp_server 127.0.0.1
    smtp_connect_timeout 30
    router_id NGINX_BACKUP
}

vrrp_script check_nginx {
    script "/etc/keepalived/check_nginx.sh"
}

vrrp_instance VI_1 {
    state BACKUP
    interface ens33
    virtual_router_id 51
    priority 90
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        192.168.1.60/24
    }
    track_script {
        check_nginx
    }
}
EOF

#Health-check script (same as on k8s-nginx1)

cat > /etc/keepalived/check_nginx.sh << "EOF"
#!/bin/bash
count=$(ps -ef |grep nginx |egrep -cv "grep|$$")
if [ "$count" -eq 0 ];then
    exit 1
else
    exit 0
fi
EOF

chmod +x /etc/keepalived/check_nginx.sh

#Start the service

systemctl enable --now keepalived

- Testing load balancing through the VIP

#From any node in the cluster, query the Kubernetes version with curl

curl -k https://192.168.1.60:6443/version

{

"major": "1",

"minor": "16",

"gitVersion": "v1.16.0",

"gitCommit": "e2a822d9f3c2fdb5c9bfbe64313cf9f657f0a725",

"gitTreeState": "clean",

"buildDate": "2020-05-06T05:09:48Z",

"goVersion": "go1.12.17",

"compiler": "gc",

"platform": "linux/amd64"

}
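
A single request only exercises one backend. As a sketch, the version field can be extracted and the request repeated so that both apiservers get hit. The function name `git_version` is made up for this example, and the `sed` pattern assumes the JSON layout shown above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the gitVersion value from the /version JSON on stdin.
git_version() {
  sed -n 's/.*"gitVersion": *"\([^"]*\)".*/\1/p'
}

# Example (not executed here): send several requests through the VIP.
#   for i in 1 2 3 4; do
#     curl -sk https://192.168.1.60:6443/version | git_version
#   done
```

Each iteration should print the same version string while nginx alternates between the two backends.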

#The nginx log (on the load balancer that holds the VIP) also shows which apiserver each request was forwarded to

tail /var/log/nginx/k8s-access.log -f

192.168.1.62 192.168.1.63:6443 - [23/Mar/2021:13:26:03 +0800] 200 421

192.168.1.62 192.168.1.64:6443 - [23/Mar/2021:13:31:08 +0800] 200 421

192.168.1.62 192.168.1.63:6443 - [23/Mar/2021:13:31:10 +0800] 200 421

192.168.1.62 192.168.1.64:6443 - [23/Mar/2021:13:31:16 +0800] 200 421
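
To see how evenly requests are spread, the log can be summarized per upstream. This is a sketch that relies on the `log_format` defined earlier, where the second field is `$upstream_addr`. The function name `count_upstreams` is an assumption made for the example.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read k8s-access.log lines on stdin and print
# "<upstream> <hits>" for each backend, sorted by upstream address.
count_upstreams() {
  awk 'NF >= 2 { hits[$2]++ } END { for (u in hits) print u, hits[u] }' | sort
}
```

Usage: `count_upstreams < /var/log/nginx/k8s-access.log`. With the default round-robin upstream the two counts should stay close to each other.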

- Pointing all worker nodes at the VIP

#On k8s-node1 and k8s-node2, replace the k8s-master1 address with the VIP

sed -i 's/192.168.1.63:6443/192.168.1.60:6443/' /opt/kubernetes/cfg/*

grep '192.168.1.60:6443' /opt/kubernetes/cfg/*

#Restart the services

systemctl restart kubelet kube-proxy
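
As a final check, the sketch below lists the apiserver addresses that the node's kubeconfig files still reference. It assumes the files use the usual `server: https://...` line. The function name `kubeconfig_servers` and the `*.kubeconfig` glob in the usage line are assumptions, since the post only refers to `/opt/kubernetes/cfg/*`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the unique "server:" URLs found in the given
# kubeconfig files (or on stdin when no file is given).
kubeconfig_servers() {
  grep -hE '^[[:space:]]*server:[[:space:]]' "$@" | awk '{ print $2 }' | sort -u
}
```

Usage: `kubeconfig_servers /opt/kubernetes/cfg/*.kubeconfig`. After the `sed` step the only address printed should be `https://192.168.1.60:6443`, and `kubectl get nodes` on a master should still show both nodes as Ready.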