一.稠密光流法跟踪移动物体

例1

#include <opencv2/opencv.hpp>

#include <iostream>

using namespace cv;

using namespace std;

int main(int argc, char** argv)

{

VideoCapture capture("mulballs.mp4");

Mat prevFrame, prevGray;

if (!capture.read(prevFrame))

{

cout << "请确认视频文件名称是否正确" << endl;

return -1;

}

//将彩色图像转换成灰度图像

cvtColor(prevFrame, prevGray, COLOR_BGR2GRAY);

while (true)

{

Mat nextFrame, nextGray;

//所有图像处理完成后推出程序

if (!capture.read(nextFrame))

{

break;

}

imshow("视频图像", nextFrame);

//计算稠密光流

cvtColor(nextFrame, nextGray, COLOR_BGR2GRAY);

Mat_<Point2f> flow; //两个方向的运动速度

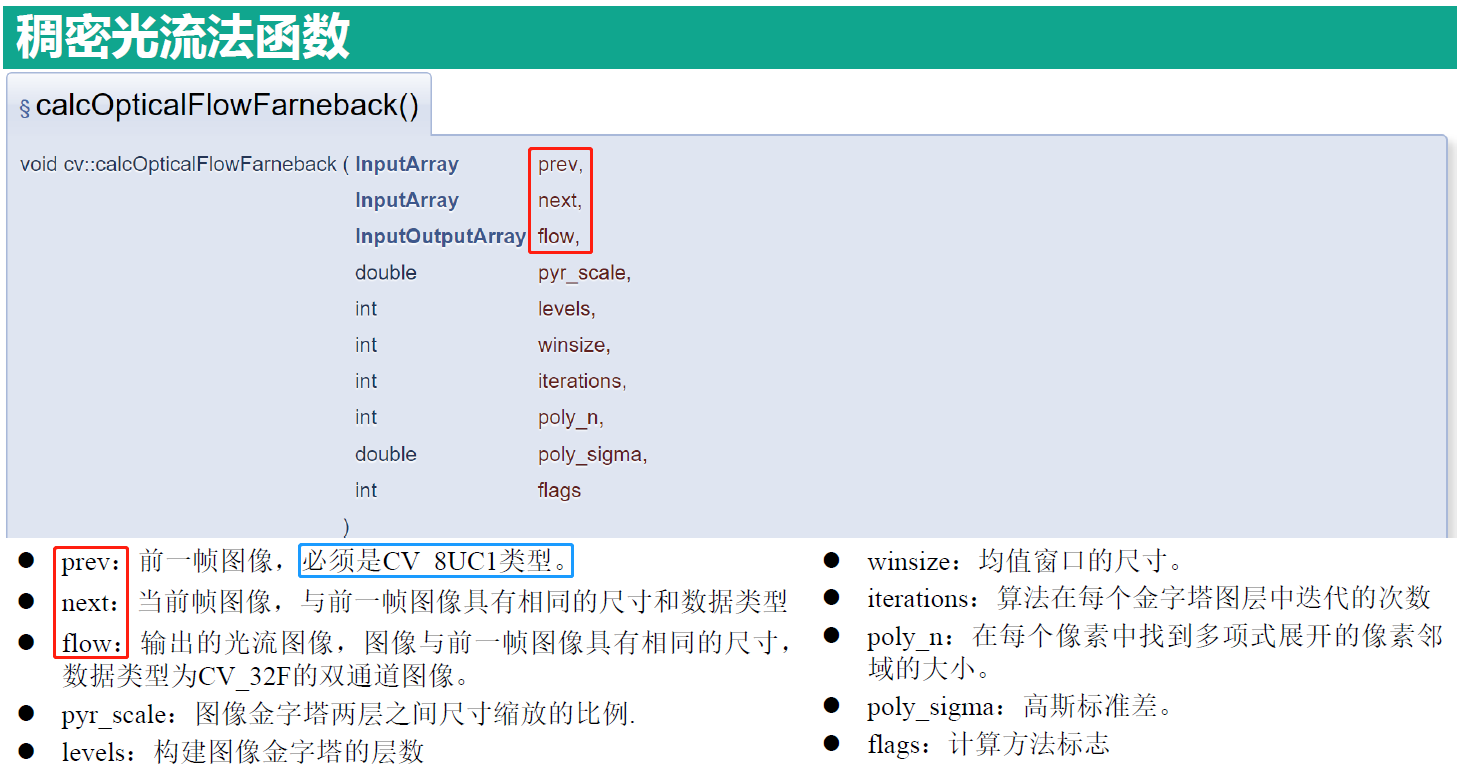

calcOpticalFlowFarneback(prevGray, nextGray, flow, 0.5, 3, 15, 3, 5, 1.2, 0);

Mat xV = Mat::zeros(prevFrame.size(), CV_32FC1); //x方向移动速度

Mat yV = Mat::zeros(prevFrame.size(), CV_32FC1); //y方向移动速度

//提取两个方向的速度

for (int row = 0; row < flow.rows; row++)

{

for (int col = 0; col < flow.cols; col++)

{

const Point2f& flow_xy = flow.at<Point2f>(row, col);

xV.at<float>(row, col) = flow_xy.x;

yV.at<float>(row, col) = flow_xy.y;

}

}

//计算向量角度和幅值

Mat magnitude, angle;

cartToPolar(xV, yV, magnitude, angle);//cartToPolar是OpenCV中的一个函数

//讲角度转换成角度制

angle = angle * 180.0 / CV_PI / 2.0;

//把幅值归一化到0-255区间便于显示结果

normalize(magnitude, magnitude, 0, 255, NORM_MINMAX);

//计算角度和幅值的绝对值

convertScaleAbs(magnitude, magnitude);

convertScaleAbs(angle, angle);

//讲运动的幅值和角度生成HSV颜色空间的图像

Mat HSV = Mat::zeros(prevFrame.size(), prevFrame.type());

vector<Mat> result;

split(HSV, result);

result[0] = angle; //决定颜色

result[1] = Scalar(255);

result[2] = magnitude; //决定形态

//将三个多通道图像合并成三通道图像

merge(result, HSV);

//讲HSV颜色空间图像转换到RGB颜色空间中

Mat rgbImg;

cvtColor(HSV, rgbImg, COLOR_HSV2BGR);

//显示检测结果

imshow("运动检测结果", rgbImg);

int ch = waitKey(5);

if (ch == 27)

{

break;

}

}

waitKey(0);

return 0;

}

运行结果:

二.稀疏光流法跟踪移动物体

通常情况下,我们使用的是托马斯角点

例2

*附例2代码:

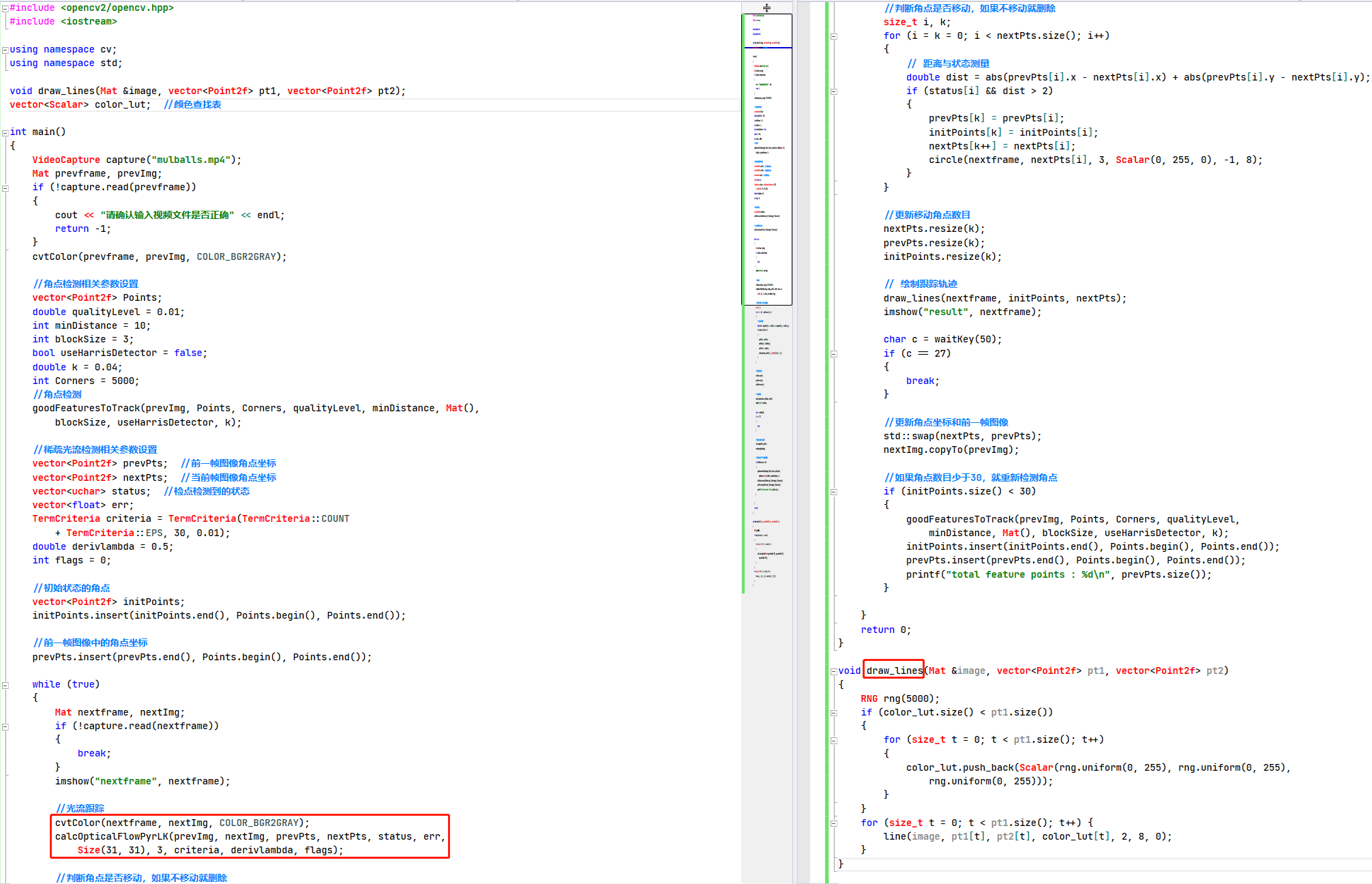

nclude <opencv2/opencv.hpp>

#include <iostream>

using namespace cv;

using namespace std;

void draw_lines(Mat &image, vector<Point2f> pt1, vector<Point2f> pt2);

vector<Scalar> color_lut; //颜色查找表

int main()

{

VideoCapture capture("mulballs.mp4");

Mat prevframe, prevImg;

if (!capture.read(prevframe))

{

cout << "请确认输入视频文件是否正确" << endl;

return -1;

}

cvtColor(prevframe, prevImg, COLOR_BGR2GRAY);

//角点检测相关参数设置

vector<Point2f> Points;

double qualityLevel = 0.01;

int minDistance = 10;

int blockSize = 3;

bool useHarrisDetector = false;

double k = 0.04;

int Corners = 5000;

//角点检测

goodFeaturesToTrack(prevImg, Points, Corners, qualityLevel, minDistance, Mat(),

blockSize, useHarrisDetector, k);

//稀疏光流检测相关参数设置

vector<Point2f> prevPts; //前一帧图像角点坐标

vector<Point2f> nextPts; //当前帧图像角点坐标

vector<uchar> status; //检点检测到的状态

vector<float> err;

TermCriteria criteria = TermCriteria(TermCriteria::COUNT

+ TermCriteria::EPS, 30, 0.01);

double derivlambda = 0.5;

int flags = 0;

//初始状态的角点

vector<Point2f> initPoints;

initPoints.insert(initPoints.end(), Points.begin(), Points.end());

//前一帧图像中的角点坐标

prevPts.insert(prevPts.end(), Points.begin(), Points.end());

while (true)

{

Mat nextframe, nextImg;

if (!capture.read(nextframe))

{

break;

}

imshow("nextframe", nextframe);

//光流跟踪

cvtColor(nextframe, nextImg, COLOR_BGR2GRAY);

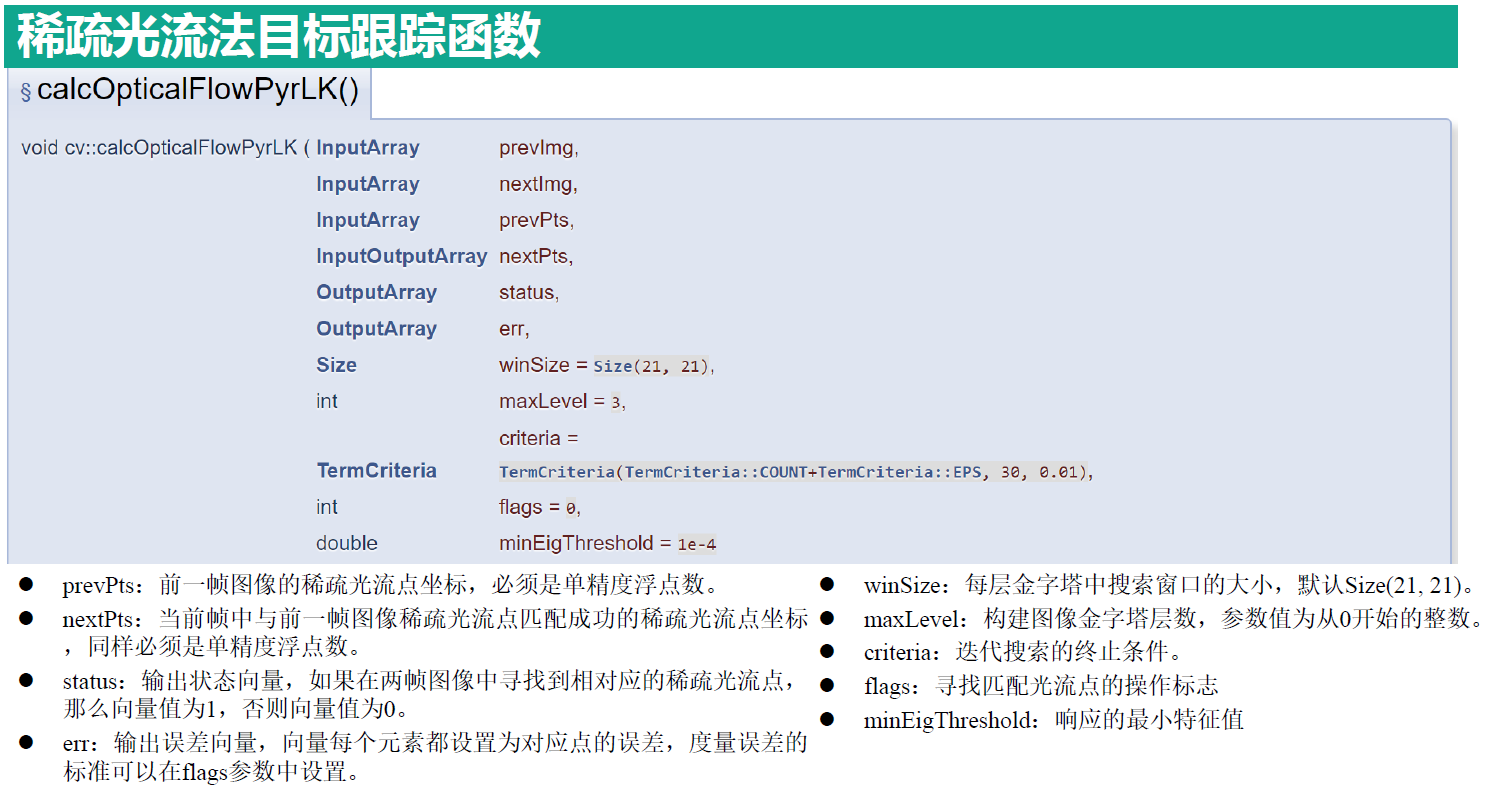

calcOpticalFlowPyrLK(prevImg, nextImg, prevPts, nextPts, status, err,

Size(31, 31), 3, criteria, derivlambda, flags);

//判断角点是否移动,如果不移动就删除

size_t i, k;

for (i = k = 0; i < nextPts.size(); i++)

{

// 距离与状态测量

double dist = abs(prevPts[i].x - nextPts[i].x) + abs(prevPts[i].y - nextPts[i].y);

if (status[i] && dist > 2)

{

prevPts[k] = prevPts[i];

initPoints[k] = initPoints[i];

nextPts[k++] = nextPts[i];

circle(nextframe, nextPts[i], 3, Scalar(0, 255, 0), -1, 8);

}

}

//更新移动角点数目

nextPts.resize(k);

prevPts.resize(k);

initPoints.resize(k);

// 绘制跟踪轨迹

draw_lines(nextframe, initPoints, nextPts);

imshow("result", nextframe);

char c = waitKey(50);

if (c == 27)

{

break;

}

//更新角点坐标和前一帧图像

std::swap(nextPts, prevPts);

nextImg.copyTo(prevImg);

//如果角点数目少于30,就重新检测角点

if (initPoints.size() < 30)

{

goodFeaturesToTrack(prevImg, Points, Corners, qualityLevel,

minDistance, Mat(), blockSize, useHarrisDetector, k);

initPoints.insert(initPoints.end(), Points.begin(), Points.end());

prevPts.insert(prevPts.end(), Points.begin(), Points.end());

printf("total feature points : %d\n", prevPts.size());

}

}

return 0;

}

void draw_lines(Mat &image, vector<Point2f> pt1, vector<Point2f> pt2)

{

RNG rng(5000);

if (color_lut.size() < pt1.size())

{

for (size_t t = 0; t < pt1.size(); t++)

{

color_lut.push_back(Scalar(rng.uniform(0, 255), rng.uniform(0, 255),

rng.uniform(0, 255)));

}

}

for (size_t t = 0; t < pt1.size(); t++) {

line(image, pt1[t], pt2[t], color_lut[t], 2, 8, 0);

}

}

运行结果: