0. 前序

本文的文字及图片来源于网络,仅供学习、交流使用,不具有任何商业用途,如有问题请及时联系我们以作处理。

PS:如有需要Python学习资料的小伙伴可以点击下方链接自行获取

每天迈出一小步,朝着目标迈一大步。

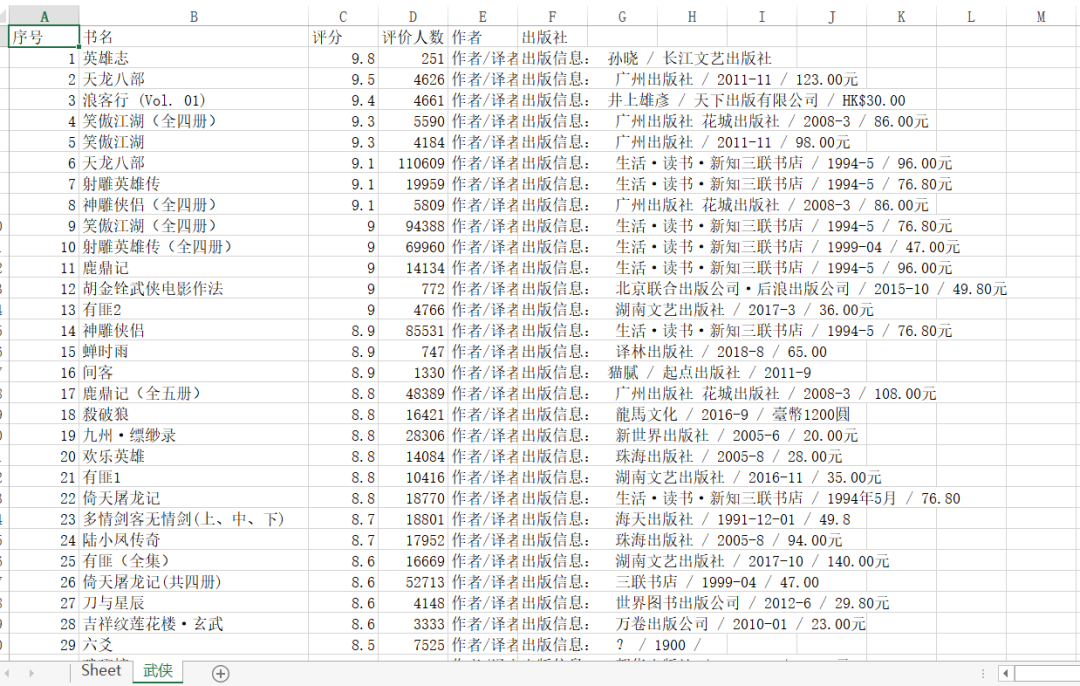

书荒的朋友可以不用担心了,我们可以通过豆瓣图书喜欢的标签,获取相应的图书。把对应的书名,价格,评分等等都归类下载下来。

1. 数据怎么来

首先打开豆瓣读书的官网链接,然后选择对应喜欢的标签,通过F12分析页面数据。

我们会看到搜索出来的结果,大概有五十多页,要是人工一页页翻,不知道翻到啥时候。这时我们可以用爬虫,把这些信息都下载下来。

2. 数据处理

思路如下:

- 通过requests去获取网站数据,每次拉取15行数据。

- 豆瓣返回的是html文件,这里通过BeautifulSoup去解析文件。

- 通过书籍详情链接去获取作者信息。

- 最后把爬到书籍信息存到excel里。

完整源码如下:

#-*- coding: UTF-8 -*-

import time

import requests

import numpy as np

from bs4 import BeautifulSoup

from openpyxl import Workbook

#Some User Agents

hds=[{'User-Agent':'Mozilla/5.0 (Windows; U; Windows NT 6.1; en-US; rv:1.9.1.6) Gecko/20091201 Firefox/3.5.6'},\

{'User-Agent':'Mozilla/5.0 (Windows NT 6.2) AppleWebKit/535.11 (KHTML, like Gecko) Chrome/17.0.963.12 Safari/535.11'},\

{'User-Agent': 'Mozilla/5.0 (compatible; MSIE 10.0; Windows NT 6.2; Trident/6.0)'}]

def book_spider(book_tag):

page_num=0;

book_list=[]

try_times=0

while(1):

# url='http://www.douban.com/tag/%E5%B0%8F%E8%AF%B4/book?start=0' # For Test

# url='http://www.douban.com/tag/'+urllib.quote(book_tag)+'/book?start='+str(page_num*15)

url='http://www.douban.com/tag/'+book_tag+'/book?start='+str(page_num*15)

time.sleep(np.random.rand()*5)

#Last Version

try:

req = requests.get(url, headers=hds[page_num%len(hds)])

source_code = req.text

except Exception as e:

print(e)

continue

soup = BeautifulSoup(source_code, "html.parser")

list_soup = soup.find('div', {'class': 'mod book-list'})

try_times+=1;

if list_soup is None and try_times<200:

continue

elif list_soup is None or len(list_soup)<=1 or page_num == 3:

break # Break when no informatoin got after 200 times requesting

for book_info in list_soup.findAll('dd'):

title = book_info.find('a', {'class':'title'}).string.strip()

desc = book_info.find('div', {'class':'desc'}).string.strip()

desc_list = desc.split('/')

book_url = book_info.find('a', {'class':'title'}).get('href')

try:

author_info = '作者/译者:' + '/'.join(desc_list[0:-3])

except:

author_info ='作者/译者:暂无'

try:

pub_info = '出版信息:' + '/'.join(desc_list[-3:])

except:

pub_info = '出版信息:暂无'

try:

rating = book_info.find('span', {'class':'rating_nums'}).string.strip()

except:

rating='0.0'

try:

#people_num = book_info.findAll('span')[2].string.strip()

people_num = get_people_num(book_url)

people_num = people_num.strip('人评价')

except:

people_num ='0'

book_list.append([title,rating,people_num,author_info,pub_info])

try_times=0 #set 0 when got valid information

page_num += 1

print('Downloading Information From Page %d' % page_num)

return book_list

def get_people_num(url):

#url='http://book.douban.com/subject/6082808/?from=tag_all' # For Test

req = requests.get(url, headers=hds[np.random.randint(0, len(hds))])

source_code = req.text

soup = BeautifulSoup(source_code, "html.parser")

people_num = soup.find('div', {'class':'rating_sum'}).findAll('span')[1].string.strip()

return people_num

def do_spider(book_tag_lists):

book_lists = []

for book_tag in book_tag_lists:

book_list = book_spider(book_tag)

book_list = sorted(book_list, key=lambda x:x[1],reverse=True)

book_lists.append(book_list)

return book_lists

def print_book_lists_excel(book_lists, book_tag_lists):

wb = Workbook()

ws = []

for i in range(len(book_tag_lists)):

ws.append(wb.create_sheet(title=book_tag_lists[i]))

for i in range(len(book_tag_lists)):

ws[i].append(['序号', '书名', '评分', '评价人数', '作者', '出版社'])

count=1

for bl in book_lists[i]:

ws[i].append([count, bl[0], float(bl[1]), int(bl[2]), bl[3], bl[4]])

count += 1

save_path='book_list'

for i in range(len(book_tag_lists)):

save_path += ('-'+book_tag_lists[i])

save_path += '.xlsx'

wb.save(save_path)

if __name__=='__main__':

#book_tag_lists = ['心理','判断与决策','算法','数据结构','经济','历史']

#book_tag_lists = ['传记','哲学','编程','创业','理财','社会学','佛教']

#book_tag_lists = ['思想','科技','科学','web','股票','爱情','两性']

#book_tag_lists = ['计算机','机器学习','linux','android','数据库','互联网']

#book_tag_lists = ['数学']

#book_tag_lists = ['摄影','设计','音乐','旅行','教育','成长','情感','育儿','健康','养生']

#book_tag_lists = ['商业','理财','管理']

# book_tag_lists = ['名著']

#book_tag_lists = ['科普','经典','生活','心灵','文学']

#book_tag_lists = ['科幻','思维','金融']

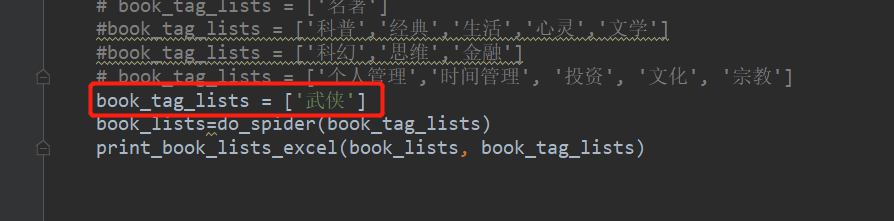

book_tag_lists = ['武侠']

book_lists=do_spider(book_tag_lists)

print_book_lists_excel(book_lists, book_tag_lists)

3. 写在最后

代码中加了周期间隔,刚开始验证测试的时候,最好先选取单个标签,防止代码运行过长。

另外,每个标签返回的书籍列表会很多,可以加相应的页数限制,让效果更加明显,当然了想一下子获取全部可以忽略。