I. HAProxy Load Balancing

1. Log configuration

[root@server1 ~]# vim /etc/rsyslog.conf

The lines that need to change are:

$ModLoad imudp

$UDPServerRun 514

*.info;mail.none;authpriv.none;cron.none;local2.none /var/log/messages %adding local2.none keeps local2 messages out of /var/log/messages

local2.* /var/log/haproxy.log %route the local2 facility, which haproxy logs to, into its own file

[root@server1 ~]# systemctl restart rsyslog.service

[root@server1 ~]# systemctl start haproxy.service

[root@server1 ~]# cat /var/log/haproxy.log %view the generated log entries
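The rsyslog changes above can also be kept in their own file instead of being edited into /etc/rsyslog.conf. The following is a minimal sketch under two assumptions that the original does not state: that rsyslog reads drop-in files from /etc/rsyslog.d/, and that haproxy.cfg sends its log to the local2 facility. The function name write_haproxy_rsyslog and the file name haproxy.conf are made up for illustration. The local2.none addition to the /var/log/messages rule still has to be made in /etc/rsyslog.conf itself.

```shell
#!/bin/sh
# Sketch: write the haproxy-related rsyslog settings from this section into a
# drop-in file. The function name and the file name haproxy.conf are
# illustrative, not part of the original setup.
write_haproxy_rsyslog() {
    dir="$1"    # target directory, e.g. /etc/rsyslog.d (assumed to be included by rsyslog.conf)
    mkdir -p "$dir" || return 1
    cat > "$dir/haproxy.conf" <<'EOF'
# accept syslog messages over UDP port 514
$ModLoad imudp
$UDPServerRun 514
# send everything logged to facility local2 into its own file
local2.* /var/log/haproxy.log
EOF
}

# Example run against a scratch directory, so nothing under /etc is touched.
demo_dir="${TMPDIR:-/tmp}/rsyslog-demo.$$"
write_haproxy_rsyslog "$demo_dir"
cat "$demo_dir/haproxy.conf"
```

On a real host the call would be write_haproxy_rsyslog /etc/rsyslog.d, followed by systemctl restart rsyslog.service as shown above.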

2. Parameter configuration

[root@server1 ~]# systemctl enable --now haproxy.service

[root@server1 ~]# vim /etc/haproxy/haproxy.cfg

stats uri /status

stats auth admin:westos %require a login (user:password) to view the stats page

frontend main *:80

acl url_static path_beg -i /static /image /javascript /stylesheets

acl url_static path_end -i .jpg .gif .png .css .js

use_backend static if url_static %requests matching url_static go to the static backend

default_backend app %everything else goes to the app backend

#---------------------------------------------------------------------

# static backend for serving up images, stylesheets and such

#---------------------------------------------------------------------

backend static

balance roundrobin

server app2 10.4.17.2:80 check

#---------------------------------------------------------------------

# round robin balancing between the various backends

#---------------------------------------------------------------------

backend app

balance roundrobin %scheduling algorithm (can be changed); roundrobin hands requests to the servers in turn

server app1 10.4.17.2:80 check

[root@server1 ~]# systemctl restart haproxy.service

[root@server2 ~]# cd /var/www/html/

[root@server2 html]# ls

index.html

[root@server2 html]# mkdir image

[root@server2 html]# cd image/

[root@server2 image]# ls

vim.jpg

Result: http://10.4.17.2/image/vim.jpg returns vim.jpg, and the statistics page at http://10.4.17.1/status shows the static backend handling the request
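The routing decision made by the two url_static ACLs can be sketched in plain shell. This only illustrates the matching rules (path_beg is a prefix match, path_end is a suffix match, -i ignores case, and two acl lines with the same name are ORed); pick_backend is a hypothetical helper, not an haproxy command.

```shell
#!/bin/sh
# Sketch of the url_static decision made by the frontend above.
# pick_backend is an illustrative helper, not an haproxy command.
pick_backend() {
    # -i in the ACLs means case-insensitive matching, so lowercase the path first
    path=$(printf '%s' "$1" | tr '[:upper:]' '[:lower:]')
    case "$path" in
        # path_beg: the path starts with one of these prefixes
        /static*|/image*|/javascript*|/stylesheets*) echo static ;;
        # path_end: the path ends with one of these suffixes
        *.jpg|*.gif|*.png|*.css|*.js) echo static ;;
        # no ACL matched: default_backend
        *) echo app ;;
    esac
}

pick_backend /image/vim.jpg   # static
pick_backend /index.html      # app
pick_backend /Logo.PNG        # static
```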

%Add a backup server: it is used only when none of the regular backend servers is serving HTTP

[root@server1 ~]# vim /etc/haproxy/haproxy.cfg

backend app

balance roundrobin

#balance source

server app1 10.4.17.3:80 check

server backup 127.0.0.1:8080 backup %the backup server

[root@server1 ~]# vim /etc/httpd/conf/httpd.conf %change the listen port to 8080, otherwise httpd conflicts with haproxy on port 80

[root@server1 ~]# systemctl reload haproxy.service

[root@server1 ~]# systemctl restart httpd.service

[root@server1 ~]# cd /var/www/html

[root@server1 html]# ls

[root@server1 html]# vim index.html

[root@server1 html]# cat index.html

please try again later

[root@server3 html]# systemctl stop httpd.service

Result: requests to the load balancer now return please try again later
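The fallback rule behind the backup keyword can be sketched as follows. This is a simplified model, not haproxy's implementation: it returns the first healthy regular server rather than balancing across several, and choose_server with its name:role:state argument format is invented for the example.

```shell
#!/bin/sh
# Sketch of how a "backup" server is chosen. choose_server is an illustrative
# helper; each argument is name:role:state, where role is active or backup.
choose_server() {
    fallback=""
    for entry in "$@"; do
        name=${entry%%:*}
        rest=${entry#*:}
        role=${rest%%:*}
        state=${rest#*:}
        [ "$state" = up ] || continue
        if [ "$role" = active ]; then
            echo "$name"      # a healthy regular server always wins
            return 0
        fi
        # remember the first healthy backup server in case nothing else is up
        [ -n "$fallback" ] || fallback=$name
    done
    [ -n "$fallback" ] && echo "$fallback"
}

choose_server app1:active:up backup:backup:up     # app1
choose_server app1:active:down backup:backup:up   # backup
```

The second call corresponds to the test above: with httpd stopped on server3, only the local httpd on port 8080 is left to answer.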

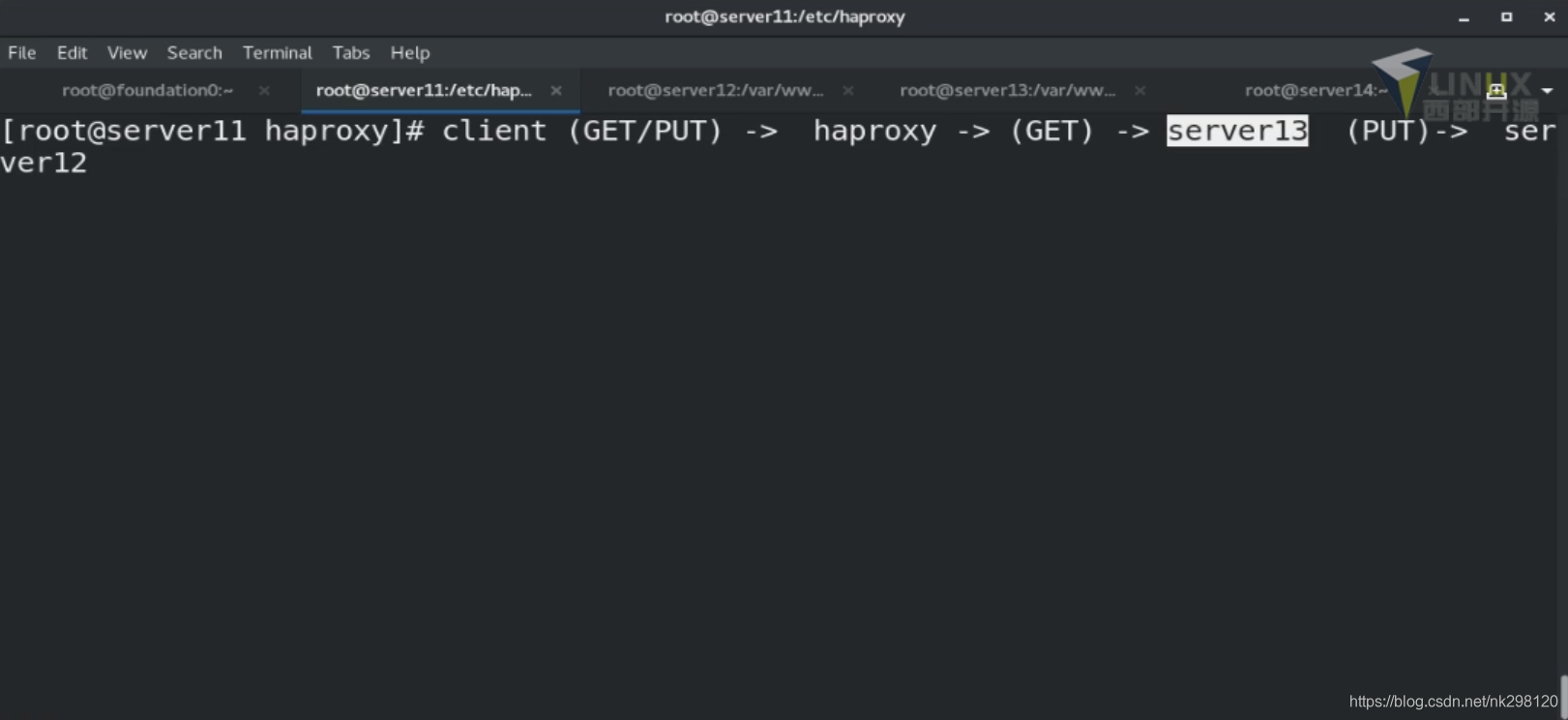

3. Read/write splitting

Reads are served by server3 and writes go to server2:

[root@zhenji html]# scp upload/* 10.4.17.3:/var/www/html/upload/

root@10.4.17.3's password:

index.php 100% 257 352.4KB/s 00:00

upload_file.php 100% 927 1.8MB/s 00:00

[root@server1 html]# vim /etc/haproxy/haproxy.cfg

frontend main *:80

acl url_static path_beg -i /static /image /javascript /stylesheets

acl url_static path_end -i .jpg .gif .png .css .js

acl blacklist src 10.4.17.216

acl denyipg path /image/vim.jpg

acl write method PUT

acl write method POST

#tcp-request content accept if blacklist

#tcp-request content reject

#block if blacklist

#errorloc 403 http://www.baidu.com

#redirect location http://www.westos.org if blacklist

#http-request deny if denyipg blacklist

use_backend static if write

default_backend app

[root@server1 haproxy]# systemctl reload haproxy.service

[root@server3 html]# chmod 777 upload

[root@server3 html]# vim upload/upload_file.php

&& ($_FILES["file"]["size"] < 2000000)) %raise the upload size limit (optional; set it to whatever you need)

[root@server3 html]# yum install php -y

[root@server3 html]# cd upload/

[root@server3 upload]# ls

index.php upload_file.php

[root@server3 upload]# mv index.php ..

[root@server3 upload]# cd ..

[root@server3 html]# systemctl restart httpd.service

[root@server2 html]# mkdir upload

[root@server2 html]# scp [email protected]:/var/www/html/upload/* .

[root@server2 html]# ls %index.php has to be placed in the html directory

image index.html index.php upload upload_file.php

[root@server2 html]# mv upload_file.php upload/

[root@server2 html]# cd upload/

[root@server2 upload]# ls

upload_file.php

[root@server2 upload]# yum install php -y

[root@server2 upload]# systemctl restart httpd.service

[root@server2 html]# ls upload

index.php vim.jpg

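Note: php must be installed on both server2 and server3. server3 needs index.php, because that is the page the upload is submitted from, and it has to sit in the html directory; server2 needs upload_file.php, because that is the page the upload request is sent to. Open 10.4.17.3/index.php in a browser and submit vim.jpg; the file then appears in the corresponding directory on server2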
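The split itself comes from the two write ACLs: a PUT or POST is sent to the static backend, and every other method falls through to default_backend app. A minimal sketch of that rule, with route_request as a hypothetical helper name:

```shell
#!/bin/sh
# Sketch of "use_backend static if write" followed by "default_backend app".
# route_request is an illustrative helper, not an haproxy command.
route_request() {
    case "$1" in
        # acl write method PUT / acl write method POST (same name, so ORed)
        PUT|POST) echo static ;;
        # reads and everything else
        *) echo app ;;
    esac
}

route_request GET    # app
route_request POST   # static
```

Assuming the load balancer answers on 10.4.17.1 and the upload script is reachable under /upload/, an upload could be exercised from the command line with something like curl -F file=@vim.jpg http://10.4.17.1/upload/upload_file.php, where the form field name file matches $_FILES["file"] in upload_file.php.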

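The overall data flow is shown in the figure below:

II. Cluster Management

1. Pacemaker

Pacemaker is the most widely used open-source cluster resource manager on Linux. It relies on the messaging and membership services of the underlying cluster infrastructure (Corosync or Heartbeat) to detect and recover from failures at both the node and the resource level, keeping cluster services as highly available as possible. Logically, Pacemaker is driven by the resource rules the cluster administrator defines and manages the full life cycle of the software services in the cluster, including whole software systems and the interactions between them. It can manage clusters of any size, and its resource dependency model lets the administrator describe the relationships between resources precisely, including their ordering and location. Almost any kind of software can be managed as a resource once it has a custom start-and-manage script (a resource agent). Note that Pacemaker is only a resource manager and does not itself provide cluster heartbeats. Every high-availability cluster needs heartbeat monitoring, so beginners often assume Pacemaker does it, but in practice the heartbeat comes from Corosync or Heartbeat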

Link: https://www.cnblogs.com/longchang/p/10711529.html

2. Configuration steps

Note: SELinux and the firewall must be turned off first

[root@server1 haproxy]# yum install -y pacemaker pcs psmisc policycoreutils-python %install pacemaker

[root@server1 haproxy]# systemctl enable --now pcsd.service

[root@server1 html]# echo westos|passwd --stdin hacluster

[root@server1 haproxy]# ssh server4 'echo westos|passwd --stdin hacluster'

[root@server4 haproxy]# yum install -y pacemaker pcs psmisc policycoreutils-python

[root@server4 haproxy]# systemctl enable --now pcsd.service

[root@server1 haproxy]# pcs cluster auth server1 server4

Username: hacluster

Password:

server4: Authorized

server1: Authorized

[root@server1 haproxy]# pcs cluster setup --name mycluster server1 server4

[root@server1 haproxy]# pcs cluster start --all

[root@server1 haproxy]# pcs cluster enable --all

[root@server1 haproxy]# pcs status %check the cluster status

Daemon Status:

corosync: active/enabled

pacemaker: active/enabled

pcsd: active/enabled

[root@server1 haproxy]# pcs property set stonith-enabled=false

[root@server1 haproxy]# crm_verify -LV %validate the live cluster configuration

[root@server1 haproxy]# pcs status corosync

Membership information

----------------------

Nodeid Votes Name

1 1 server1 (local)

2 1 server4

[root@server1 haproxy]# pcs resource providers

heartbeat

openstack

pacemaker

[root@server1 haproxy]# pcs resource create --help

[root@server1 haproxy]# pcs resource create vip ocf:heartbeat:IPaddr2 ip=10.4.17.100 op monitor interval=30s

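[root@server1 haproxy]# ip addr show %check that the vip has been added

[root@server1 haproxy]# pcs cluster stop server1 %stop cluster services on server1

[root@server1 haproxy]# systemctl disable --now haproxy.service %before handing haproxy to the cluster, undo the manual service setup so that only the cluster starts it

[root@server4 ~]# pcs status %server4 has taken over. Note: if server1 rejoins the cluster now, the resources do not move back to it; this avoids the overhead of switching back and forth

vip (ocf::heartbeat:IPaddr2): Started server4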

[root@server4 ~]# yum install haproxy -y

[root@server1 haproxy]# scp /etc/haproxy/haproxy.cfg root@10.4.17.4:/etc/haproxy/ %set up passwordless SSH login from server1 to server4 first

[root@server1 haproxy]# pcs cluster start server1

[root@server1 haproxy]# pcs resource create haproxy systemd:haproxy op monitor interval=30s

[root@server1 haproxy]# pcs resource group add hagroup vip haproxy %a group ties the resources together: they run on the same node and start in the order listed

[root@server1 haproxy]# pcs status

Resource Group: hagroup

vip (ocf::heartbeat:IPaddr2): Started server4

haproxy (systemd:haproxy): Started server4

[root@server4 ~]# pcs node standby

[root@server1 haproxy]# pcs status

Node server4: standby
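The cluster bring-up above can be collected into one script. The sketch below only replays the pcs commands already shown in this section; the run helper and the DRY_RUN variable are additions for illustration. With DRY_RUN=1, the default here, each command is printed instead of executed, so the script can be read and checked without a cluster.

```shell
#!/bin/sh
# Recap of the cluster bring-up above as one script. With DRY_RUN=1 (the
# default here) each command is only printed; set DRY_RUN=0 on a real node.
# The run helper and the DRY_RUN variable are illustrative additions.
DRY_RUN="${DRY_RUN:-1}"

run() {
    if [ "$DRY_RUN" = 1 ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

setup_cluster() {
    run pcs cluster auth server1 server4
    run pcs cluster setup --name mycluster server1 server4
    run pcs cluster start --all
    run pcs cluster enable --all
    run pcs property set stonith-enabled=false
    run pcs resource create vip ocf:heartbeat:IPaddr2 ip=10.4.17.100 op monitor interval=30s
    run pcs resource create haproxy systemd:haproxy op monitor interval=30s
    run pcs resource group add hagroup vip haproxy
}

setup_cluster
```

When run for real, pcs cluster auth still prompts for the hacluster user name and password, as in the transcript above, and haproxy has to be installed and configured on both nodes beforehand.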

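III. Fence

When several nodes in a cluster act on the same resource at the same time and their actions conflict, errors follow and shared storage can be damaged. In production this situation is called split-brain. A mechanism is therefore needed that, when it happens, forcibly powers off a node and restarts it; the device that provides this mechanism is called a fence device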

[root@zhenji ~]# yum search fence-virtd

[root@zhenji ~]# yum install fence-virtd.x86_64 fence-virtd-libvirt.x86_64 fence-virtd-multicast.x86_64

Update the yum repositories:

[dvd]

name=rhel7.6

baseurl=http://10.4.17.216/rhel7.6

gpgcheck=0

[HighAvailability]

name=rhel7.6

baseurl=http://10.4.17.216/rhel7.6/addons/HighAvailability

gpgcheck=0

[root@zhenji yum.repos.d]# mkdir /etc/cluster

[root@zhenji ~]# fence_virtd -c %press Enter at every prompt to accept the default, except for Interface, which is set to "br0"

listeners {

multicast {

port = "1229";

family = "ipv4";

interface = "br0";

address = "225.0.0.12";

key_file = "/etc/cluster/fence_xvm.key";

}

}

[root@zhenji yum.repos.d]# dd if=/dev/urandom of=/etc/cluster/fence_xvm.key bs=128 count=1

1+0 records in

1+0 records out

128 bytes copied, 6.8975e-05 s, 1.9 MB/s

[root@zhenji yum.repos.d]# systemctl restart fence_virtd.service

[root@zhenji cluster]# netstat -anulp|grep :1229

udp 0 0 0.0.0.0:1229 0.0.0.0:* 21895/fence_virtd

[root@server1 haproxy]# mkdir /etc/cluster

[root@server1 haproxy]# cd /etc/cluster

[root@server1 cluster]# ls

fence_xvm.key

[root@server4 haproxy]# mkdir /etc/cluster

[root@server4 haproxy]# cd /etc/cluster

[root@server4 cluster]# ls

fence_xvm.key

[root@zhenji cluster]# scp fence_xvm.key root@10.4.17.1:/etc/cluster/

root@10.4.17.1's password:

fence_xvm.key

[root@zhenji cluster]# scp fence_xvm.key root@10.4.17.4:/etc/cluster/

root@10.4.17.4's password:

fence_xvm.key
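The key handling above comes down to two points: the key is generated once on the host, and every cluster node must hold a byte-identical copy. The sketch below shows both checks against a scratch directory. make_fence_key, key_size and copy_matches are hypothetical helper names, and the chmod 600 step is an assumption added here, not something the original performs.

```shell
#!/bin/sh
# make_fence_key is an illustrative wrapper around the dd command above.
make_fence_key() {
    key_dir="$1"                          # /etc/cluster on the real host
    mkdir -p "$key_dir" || return 1
    # 128 random bytes, the same size the dd invocation in this section produces
    dd if=/dev/urandom of="$key_dir/fence_xvm.key" bs=128 count=1 2>/dev/null || return 1
    chmod 600 "$key_dir/fence_xvm.key"    # assumption: keep the shared secret private
}

# Print the size of a file in bytes.
key_size() {
    wc -c < "$1" | tr -d ' '
}

# Succeed only if two key files are byte-for-byte identical.
copy_matches() {
    cmp -s "$1" "$2"
}

scratch="${TMPDIR:-/tmp}/fence-demo.$$"
make_fence_key "$scratch"
key_size "$scratch/fence_xvm.key"         # 128
cp "$scratch/fence_xvm.key" "$scratch/node-copy.key"   # stands in for the scp to a node
copy_matches "$scratch/fence_xvm.key" "$scratch/node-copy.key" && echo "keys match"
```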

[root@server4 ~]# yum install fence-virt.x86_64

[root@server4 ~]# stonith_admin -I

fence_xvm

fence_virt

2 devices found

[root@server1 ~]# yum install fence-virt.x86_64

[root@server1 ~]# stonith_admin -I

fence_xvm

fence_virt

2 devices found

[root@server1 cluster]# pcs stonith create vmfence fence_xvm pcmk_host_map="server1:node1;server4:node4" op monitor interval=60s

Note: if vmfence was created incorrectly, run pcs stonith disable vmfence and then pcs stonith delete vmfence before creating it again

[root@server1 cluster]# pcs status

Resource Group: hagroup

vip (ocf::heartbeat:IPaddr2): Started server1

haproxy (systemd:haproxy): Started server1

vmfence (stonith:fence_xvm): Starting server4

[root@server1 cluster]# pcs property set stonith-enabled=true

[root@server4 ~]# echo c > /proc/sysrq-trigger %test: this crashes the kernel; server4 is then fenced, reboots automatically and rejoins the cluster

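IV. Building from Source + nginx Load Balancing

The three steps of a source build:

1. configure > generates the Makefile that drives the following make

2. make > compiles the code; it runs entirely inside the source directory

3. make install > copies the compiled binary and its supporting files into the install prefix (here /usr/local/nginx)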

[root@zhenji ~]# scp nginx-1.18.0.tar.gz root@10.4.17.1:/root

[root@server1 ~]# tar zxf nginx-1.18.0.tar.gz

[root@server1 ~]# cd nginx-1.18.0/

[root@server1 nginx-1.18.0]# yum install gcc

[root@server1 nginx-1.18.0]# yum install pcre-devel

[root@server1 nginx-1.18.0]# yum install openssl-devel

[root@server1 nginx-1.18.0]# ./configure --prefix=/usr/local/nginx --with-http_ssl_module

[root@server1 nginx-1.18.0]# make

[root@server1 nginx-1.18.0]# make install

[root@server1 nginx-1.18.0]# cd /usr/local/nginx/

[root@server1 nginx]# ls

conf html logs sbin

[root@server1 nginx]# du -sh

5.8M .

[root@server1 nginx]# cd ..

[root@server1 local]# rm -fr nginx/

[root@server1 local]# cd

[root@server1 ~]# cd nginx-1.18.0/

[root@server1 nginx-1.18.0]# ls

auto CHANGES CHANGES.ru conf configure contrib html LICENSE Makefile man objs README src

[root@server1 nginx-1.18.0]# make clean

[root@server1 nginx-1.18.0]# cd auto/

[root@server1 auto]# cd cc/

[root@server1 cc]# vim gcc

Comment out the debug flag so the binary is built without debug symbols:

# debug

#CFLAGS="$CFLAGS -g"

[root@server1 cc]# cd ../..

[root@server1 nginx-1.18.0]# ./configure --prefix=/usr/local/nginx --with-http_ssl_module

[root@server1 nginx-1.18.0]# make

[root@server1 nginx-1.18.0]# make install

[root@server1 nginx-1.18.0]# cd /usr/local/nginx/

[root@server1 nginx]# ls

[root@server1 nginx]# du -sh

[root@server1 nginx]# ls

[root@server1 nginx]# cd sbin/

[root@server1 sbin]# pwd

/usr/local/nginx/sbin/

[root@server1 sbin]# echo $PATH %this directory will be added to PATH

[root@server1 sbin]# cd

[root@server1 ~]# vim .bash_profile

# .bash_profile

# Get the aliases and functions

if [ -f ~/.bashrc ]; then

. ~/.bashrc

fi

#User specific environment and startup programs

PATH=$PATH:$HOME/bin:/usr/local/nginx/sbin

export PATH

[root@server1 ~]# source .bash_profile

[root@server1 ~]# which nginx

[root@server1 ~]# nginx
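Because .bash_profile appends to PATH unconditionally, sourcing it again adds the same directory a second time. A small guard avoids that. This is an optional sketch, and path_append is a hypothetical helper name rather than anything the original uses.

```shell
#!/bin/sh
# path_append is an illustrative helper: add a directory to PATH only if it is
# not already present, so sourcing .bash_profile repeatedly does not grow PATH.
path_append() {
    case ":$PATH:" in
        *":$1:"*) ;;                 # already present, nothing to do
        *) PATH="$PATH:$1" ;;
    esac
    export PATH
}

path_append /usr/local/nginx/sbin
path_append /usr/local/nginx/sbin    # second call is a no-op
echo "$PATH"
```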

[root@server1 ~]# cd /usr/local/nginx/conf/

[root@server1 conf]# vim nginx.conf

http {

upstream westos {

server 10.4.17.2:80;

server 10.4.17.3:80;

}

server {

listen 80;

server_name demo.westos.org;

location / {

proxy_pass http://westos;

}

}

}

[root@server1 conf]# nginx -t

[root@server1 conf]# nginx -s reload

[root@zhenji ~]# curl demo.westos.org

server2

[root@zhenji ~]# curl demo.westos.org

server3
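The alternating server2 / server3 answers are what round-robin selection over two equally weighted upstream servers produces. A minimal sketch of that selection rule follows; next_upstream is a hypothetical helper that models the idea, not nginx's actual implementation.

```shell
#!/bin/sh
# Sketch of plain round-robin selection over the two upstream servers.
# next_upstream is illustrative; nginx does this internally.
next_upstream() {
    n="$1"                 # zero-based request counter
    shift                  # the remaining arguments are the server list
    idx=$(( n % $# ))      # wrap around the server list
    shift "$idx"
    echo "$1"
}

i=0
while [ "$i" -lt 4 ]; do
    next_upstream "$i" 10.4.17.2:80 10.4.17.3:80
    i=$((i + 1))
done
```

The loop prints the two addresses alternately, starting with 10.4.17.2:80, which matches the order of the curl responses above.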