Decoding H264 to YUV with FFmpeg

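1. Source code download

The author (Bo Ge) has open-sourced this code on GitHub; please download it from there.

Download link: https://github.com/wangfengbo2020/ffmped_decode_h264_to_yuv

(The repository name misspells ffmpeg as ffmped. Thanks to the readers who pointed this out; it will be corrected later.)

Since the source is short, it is also reproduced here: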

videodecoder.c

#include <stdio.h>
#include <string.h>   /* memcpy */
#include <libavformat/avformat.h>
#include <libswscale/swscale.h>
#include "videodecoder.h"

/* Global decoder state shared by the functions below. */
struct AVCodecContext *pAVCodecCtxDecoder = NULL;
struct AVCodec *pAVCodecDecoder;
struct AVPacket mAVPacketDecoder;
struct AVFrame *pAVFrameDecoder = NULL;
struct SwsContext* pImageConvertCtxDecoder = NULL;
struct AVFrame *pFrameYUVDecoder = NULL;

/* Initialize the global decoder state from the given codec parameters. */
int ffmpeg_init_video_decoder(AVCodecParameters *codecParameters)
{
    if (!codecParameters) {
        printf("Source codec parameters are NULL.\n");
        return -1;
    }

    /* Drop any decoder left over from a previous call. */
    ffmpeg_release_video_decoder();

    /* Needed before FFmpeg 4.0 only; deprecated in 4.0 and removed in 5.0,
       so delete this call when building against newer releases. */
    avcodec_register_all();

    pAVCodecDecoder = avcodec_find_decoder(codecParameters->codec_id);
    if (!pAVCodecDecoder) {
        printf("Can not find codec:%d\n", codecParameters->codec_id);
        return -2;
    }

    pAVCodecCtxDecoder = avcodec_alloc_context3(pAVCodecDecoder);
    if (!pAVCodecCtxDecoder) {
        printf("Failed to alloc codec context.\n");
        ffmpeg_release_video_decoder();
        return -3;
    }

    if (avcodec_parameters_to_context(pAVCodecCtxDecoder, codecParameters) < 0) {
        printf("Failed to copy avcodec parameters to codec context.\n");
        ffmpeg_release_video_decoder();
        return -3;
    }

    if (avcodec_open2(pAVCodecCtxDecoder, pAVCodecDecoder, NULL) < 0) {
        printf("Failed to open h264 decoder\n");
        ffmpeg_release_video_decoder();
        return -4;
    }

    av_init_packet(&mAVPacketDecoder);
    pAVFrameDecoder = av_frame_alloc();
    pFrameYUVDecoder = av_frame_alloc();
    if (!pAVFrameDecoder || !pFrameYUVDecoder) {
        printf("Failed to alloc frames.\n");
        ffmpeg_release_video_decoder();
        return -5;
    }
    return 0;
}

/* Convenience initializer: build default H264 codec parameters and open the decoder. */
int ffmpeg_init_h264_decoder(void)
{
    avcodec_register_all();   /* only needed before FFmpeg 4.0 */

    AVCodec *pAVCodec = avcodec_find_decoder(AV_CODEC_ID_H264);
    if (!pAVCodec) {
        printf("can not find H264 codec\n");
        return -1;
    }

    /* Temporary context, used only to derive a default parameter set. */
    AVCodecContext *pAVCodecCtx = avcodec_alloc_context3(pAVCodec);
    if (pAVCodecCtx == NULL) {
        printf("Could not alloc video context!\n");
        return -2;
    }

    AVCodecParameters *codecParameters = avcodec_parameters_alloc();
    if (!codecParameters || avcodec_parameters_from_context(codecParameters, pAVCodecCtx) < 0) {
        printf("Failed to copy avcodec parameters from codec context.\n");
        avcodec_parameters_free(&codecParameters);
        avcodec_free_context(&pAVCodecCtx);
        return -3;
    }

    int ret = ffmpeg_init_video_decoder(codecParameters);

    avcodec_parameters_free(&codecParameters);
    avcodec_free_context(&pAVCodecCtx);
    return ret;
}

/* Free everything allocated by ffmpeg_init_video_decoder(). Safe to call repeatedly. */
int ffmpeg_release_video_decoder(void)
{
    /* avcodec_free_context() and av_frame_free() also reset the pointer to NULL. */
    if (pAVCodecCtxDecoder != NULL) {
        avcodec_free_context(&pAVCodecCtxDecoder);
    }

    if (pAVFrameDecoder != NULL) {
        av_frame_free(&pAVFrameDecoder);
    }

    if (pFrameYUVDecoder != NULL) {
        /* Unrefs the frame, then frees the AVFrame struct itself. */
        av_frame_free(&pFrameYUVDecoder);
    }

    if (pImageConvertCtxDecoder != NULL) {
        sws_freeContext(pImageConvertCtxDecoder);
        pImageConvertCtxDecoder = NULL;   /* guard against a double free */
    }

    av_packet_unref(&mAVPacketDecoder);
    return 0;
}

/*
 * Decode one packet of H264 data.
 * NOTE: despite the pointer types in the signature, outRGBBuf is used as a flat
 * RGB24 buffer and outYUVBuf receives an array of plane pointers, so callers
 * must cast their arguments accordingly.
 * Returns 1 when a frame was decoded, 0 when the decoder needs more data, -1 on error.
 */
int ffmpeg_decode_h264(unsigned char *inbuf, int inbufSize, int *framePara, unsigned char **outRGBBuf, unsigned char *outYUVBuf)
{
    if (!pAVCodecCtxDecoder || !pAVFrameDecoder || !pFrameYUVDecoder || !inbuf || inbufSize <= 0 || !framePara || (!outRGBBuf && !outYUVBuf)) {
        return -1;
    }

    av_frame_unref(pAVFrameDecoder);
    av_frame_unref(pFrameYUVDecoder);
    framePara[0] = framePara[1] = 0;

    mAVPacketDecoder.data = inbuf;
    mAVPacketDecoder.size = inbufSize;

    int ret = avcodec_send_packet(pAVCodecCtxDecoder, &mAVPacketDecoder);
    if (ret != 0) {
        return -1;   /* the decoder rejected the packet */
    }

    ret = avcodec_receive_frame(pAVCodecCtxDecoder, pAVFrameDecoder);
    if (ret == AVERROR(EAGAIN)) {
        return 0;    /* the decoder needs more input before it can output a frame */
    }
    if (ret != 0) {
        return -1;
    }

    framePara[0] = pAVFrameDecoder->width;
    framePara[1] = pAVFrameDecoder->height;

    if (outYUVBuf) {
        /* Copies the frame's plane pointers (AV_NUM_DATA_POINTERS of them), not the
           pixels. They stay valid only until the next call unrefs the frame. */
        memcpy(outYUVBuf, pAVFrameDecoder->data, sizeof(pAVFrameDecoder->data));
        framePara[2] = pAVFrameDecoder->linesize[0];
        framePara[3] = pAVFrameDecoder->linesize[1];
        framePara[4] = pAVFrameDecoder->linesize[2];
    } else if (outRGBBuf) {
        /* Describe the caller's buffer as a single packed RGB24 plane. */
        pFrameYUVDecoder->data[0] = (uint8_t *)outRGBBuf;
        pFrameYUVDecoder->data[1] = NULL;
        pFrameYUVDecoder->data[2] = NULL;
        pFrameYUVDecoder->data[3] = NULL;

        /* RGB24 has one plane with 3 bytes per pixel in each row. */
        int linesize[4] = { pAVCodecCtxDecoder->width * 3, 0, 0, 0 };

        pImageConvertCtxDecoder = sws_getContext(pAVCodecCtxDecoder->width, pAVCodecCtxDecoder->height,
                                                 pAVCodecCtxDecoder->pix_fmt,
                                                 pAVCodecCtxDecoder->width,
                                                 pAVCodecCtxDecoder->height,
                                                 AV_PIX_FMT_RGB24, SWS_FAST_BILINEAR,
                                                 NULL, NULL, NULL);
        if (!pImageConvertCtxDecoder) {
            return -1;
        }

        sws_scale(pImageConvertCtxDecoder, (const uint8_t* const *) pAVFrameDecoder->data, pAVFrameDecoder->linesize, 0, pAVCodecCtxDecoder->height, pFrameYUVDecoder->data, linesize);

        sws_freeContext(pImageConvertCtxDecoder);
        pImageConvertCtxDecoder = NULL;   /* avoid a second free in ffmpeg_release_video_decoder() */
    }

    return 1;
}

videodecoder.h

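#ifndef VIDEODECODER_H
#define VIDEODECODER_H

#include <libavcodec/avcodec.h>

/**
 Initialize the video stream decoder.
 @param ctx decoding parameters (AVCodecParameters)
 @see ffmpeg_init_h264_decoder, which initializes a decoder for H264 streams
 @return 0 on success, otherwise <0
 */
int ffmpeg_init_video_decoder(AVCodecParameters *ctx);

/**
 Initialize the H264 video stream decoder.
 @return 0 on success, otherwise <0
 */
int ffmpeg_init_h264_decoder(void);

/**
 Release the decoder.
 @return 0 on success
 */
int ffmpeg_release_video_decoder(void);

/**
 Decode video stream data.
 @param inbuf raw video stream data
 @param inbufSize size of the raw video stream data
 @param framePara receives the frame parameters: {width, height, linesize1, linesize2, linesize3}
 @param outRGBBuf output RGB data (if memory has been allocated)
 @param outYUVBuf output YUV data (if memory has been allocated)
 @return 1: a frame was decoded, 0: no decoded data received yet, -1: decoding failed
 */
int ffmpeg_decode_h264(unsigned char * inbuf, int inbufSize, int *framePara, unsigned char **outRGBBuf, unsigned char *outYUVBuf);

#endif /* VIDEODECODER_H */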

decodeH264.c

#include <stdio.h>

#include <string.h>

#include <pthread.h>

#include <stdlib.h>

#include <unistd.h>

#include "videodecoder.h"

/* H264 source data, I frame */

#define H264_TEST_FILE "./Iframe4test.h264"

/* Target yuv data, yuv 420 */

#define TARGET_YUV_FILE "./target420.yuv"

int g_paramBuf[8] = {0};

char g_inputData[1*1024*1024] = {0};

char g_ouputData[4*1024*1024] = {0};

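/* Read up to maxBuf bytes of the file at path into data; returns the byte count (0 on failure). */
int read_raw_data(char *path, char *data, int maxBuf)
{
    FILE *fp = NULL;
    int len = 0;

    /* "rb": the H264 stream is binary data. */
    fp = fopen(path, "rb");
    if (NULL != fp)
    {
        memset(data, 0x0, maxBuf);
        len = fread(data, 1, maxBuf, fp);
        fclose(fp);
    }

    return len;
}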

/* Write dataLen bytes from data to the file at path; returns the number of bytes written. */
int write_raw_data_to_file(char *path, char *data, int dataLen)
{
    FILE *fp = NULL;
    int len = 0;
    int left = dataLen;
    int cnts = 0;

    if (NULL == data || dataLen <= 0)
    {
        printf("para error return\n");
        return 0;
    }

    /* Start from an empty file on every run. */
    if (access(path, F_OK) == 0)
    {
        remove(path);
    }

    fp = fopen(path, "ab+");
    if (NULL != fp)
    {
        /* Write in 4096-byte chunks, then the remainder. */
        while (left)
        {
            if (left >= 4096)
            {
                len += fwrite(data + cnts * 4096, 1, 4096, fp);
                cnts++;
                left -= 4096;
                sync();
            }
            else
            {
                len += fwrite(data + cnts * 4096, 1, left, fp);
                break;
            }
        }
        fclose(fp);
    }

    return len;
}

int main(void)
{
    int dataLen = 0;
    /* Receives the decoder's plane pointers (Y, U, V, ...). */
    unsigned char *yuvData[10] = {NULL};

    //Step 1: init
    int ret = ffmpeg_init_h264_decoder();
    printf("Step 1 finished, ret = %d\n", ret);
    if (ret < 0)
    {
        return -1;
    }

    //Step 2: read H264 data
    //Leave zeroed padding at the end of the buffer, as the FFmpeg decoder requires.
    dataLen = read_raw_data(H264_TEST_FILE, g_inputData, sizeof(g_inputData) - AV_INPUT_BUFFER_PADDING_SIZE);
    if (0 == dataLen)
    {
        printf("Step 2 failed, read H264 data error\n");
        return -1;
    }
    printf("Step 2 finished, readDatalen = %d\n", dataLen);

    //Step 3: decode
    ret = ffmpeg_decode_h264((unsigned char *)g_inputData, dataLen, g_paramBuf, NULL, (unsigned char *)yuvData);
    printf("Step 3 finished, decode ret = %d width = %d height = %d\n", ret, g_paramBuf[0], g_paramBuf[1]);
    if (ret != 1 || NULL == yuvData[0])
    {
        printf("Step 3 failed, no frame decoded\n");
        ffmpeg_release_video_decoder();
        return -1;
    }

    //Put the data to the target file according to the format 420.
    //These copies assume each plane's linesize (g_paramBuf[2..4]) equals its width.
    memcpy(g_ouputData, (char *)(yuvData[0]), g_paramBuf[0]*g_paramBuf[1]);
    memcpy(g_ouputData + g_paramBuf[0]*g_paramBuf[1], (char *)(yuvData[1]), (g_paramBuf[0]*g_paramBuf[1])/4);
    memcpy(g_ouputData + ((g_paramBuf[0]*g_paramBuf[1]))*5/4, (char *)(yuvData[2]), (g_paramBuf[0]*g_paramBuf[1])/4);

    //Step 4: write yuv data to file
    ret = write_raw_data_to_file(TARGET_YUV_FILE, g_ouputData, g_paramBuf[0]*g_paramBuf[1]*3/2);
    printf("Step 4 write finish len = %d\n", ret);

    ffmpeg_release_video_decoder();
    return 0;
}

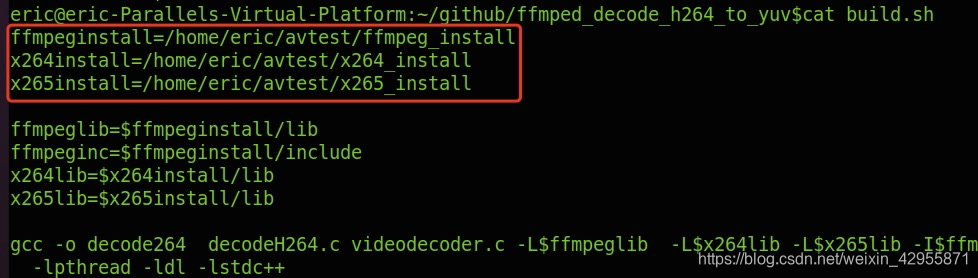
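The three memcpy calls in main treat every plane as tightly packed, that is, they assume each plane's linesize equals its visible width. FFmpeg documents that linesize may be larger than the usable row width, and in that case a flat copy pulls padding bytes into the output. Below is a minimal sketch of a stride-aware copy, assuming even frame dimensions. The names `copy_plane` and `pack_yuv420p` are hypothetical helpers introduced for illustration; they are not part of the original project or of FFmpeg.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Copy one plane row by row, dropping any per-row padding.
 * src_stride is the decoder's linesize for this plane (may exceed width). */
static void copy_plane(uint8_t *dst, const uint8_t *src,
                       int src_stride, int width, int height)
{
    assert(src_stride >= width);
    for (int y = 0; y < height; y++) {
        memcpy(dst + (size_t)y * width,
               src + (size_t)y * src_stride,
               (size_t)width);
    }
}

/* Pack planar YUV 4:2:0 into one contiguous buffer of width*height*3/2 bytes.
 * planes[] and strides[] hold the Y, U, V pointers and linesizes.
 * Returns the number of bytes written. */
size_t pack_yuv420p(uint8_t *dst, uint8_t *const planes[3],
                    const int strides[3], int width, int height)
{
    const int cw = width / 2;    /* chroma plane width  */
    const int ch = height / 2;   /* chroma plane height */
    size_t off = 0;

    copy_plane(dst + off, planes[0], strides[0], width, height);
    off += (size_t)width * height;
    copy_plane(dst + off, planes[1], strides[1], cw, ch);
    off += (size_t)cw * ch;
    copy_plane(dst + off, planes[2], strides[2], cw, ch);
    off += (size_t)cw * ch;
    return off;
}
```

In the demo this could replace the three memcpy calls with something like `pack_yuv420p((uint8_t *)g_ouputData, yuvData, &g_paramBuf[2], g_paramBuf[0], g_paramBuf[1])`, since framePara[2..4] already carry the three linesizes.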

build.sh

ffmpeginstall=/home/eric/avtest/ffmpeg_install

x264install=/home/eric/avtest/x264_install

x265install=/home/eric/avtest/x265_install

ffmpeglib=$ffmpeginstall/lib

ffmpeginc=$ffmpeginstall/include

x264lib=$x264install/lib

x265lib=$x265install/lib

gcc -o decode264 decodeH264.c videodecoder.c -L$ffmpeglib -L$x264lib -L$x265lib -I$ffmpeginc -lavformat -lavcodec -lavutil -lswscale -lswresample -lx264 -lx265 -lm -lpthread -ldl -lstdc++

2. Source code analysis

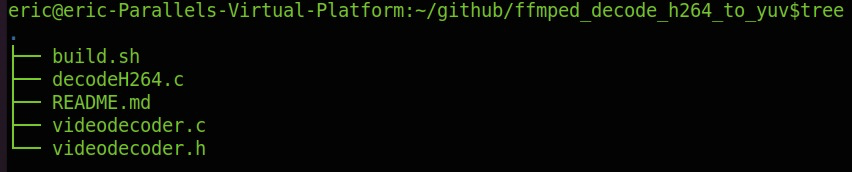

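2.1 Project overview

- build.sh: build script, takes no arguments

- videodecoder.h: the API wrapping FFmpeg decoding

- videodecoder.c: the implementation of that API

- decodeH264.c: demo program

2.2 Running the project

(1) Open and edit build.sh

Change the install paths in build.sh (ffmpeginstall, x264install, x265install) to match your machine. For how to build those libraries, see the author's earlier blog posts.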

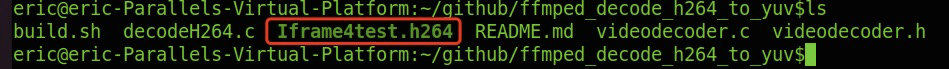

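(2) Add the input file (H264 I frame)

Place the H264 I frame used for testing in the project root, named as marked above (the demo reads ./Iframe4test.h264).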

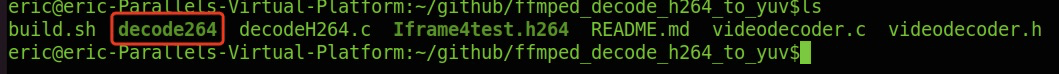
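Before running the demo, it can help to confirm that the test file really contains an I frame. In an Annex B byte stream each NAL unit follows a 00 00 01 start code (often written with an extra leading zero byte), and the low five bits of the first byte after it give the NAL unit type: 7 for SPS, 8 for PPS, 5 for an IDR slice. The sketch below lists those types for a buffer; `next_nal_offset` and `list_nal_types` are hypothetical names for illustration only, not functions from the project or FFmpeg.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Find the next 00 00 01 start code at or after pos.
 * Returns the index of the NAL header byte that follows it, or -1 if none. */
long next_nal_offset(const uint8_t *buf, size_t len, size_t pos)
{
    for (size_t i = pos; i + 3 < len; i++) {
        if (buf[i] == 0 && buf[i + 1] == 0 && buf[i + 2] == 1) {
            return (long)(i + 3);
        }
    }
    return -1;
}

/* Store the nal_unit_type of each NAL unit found in buf into types[]
 * (at most max entries). Returns how many entries were stored. */
size_t list_nal_types(const uint8_t *buf, size_t len, int *types, size_t max)
{
    size_t count = 0;
    size_t pos = 0;
    long off;

    assert(buf != NULL && types != NULL);
    while (count < max && (off = next_nal_offset(buf, len, pos)) >= 0) {
        types[count++] = buf[off] & 0x1F;   /* low 5 bits of the NAL header */
        pos = (size_t)off;                  /* continue after this header */
    }
    return count;
}
```

A file suitable for this demo would be expected to report an SPS (7) and a PPS (8) followed by an IDR slice (5); a stream lacking the parameter sets generally cannot be decoded on its own.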

(3) Build the project

sh build.sh

After a successful build, the executable decode264 is generated in the project root.

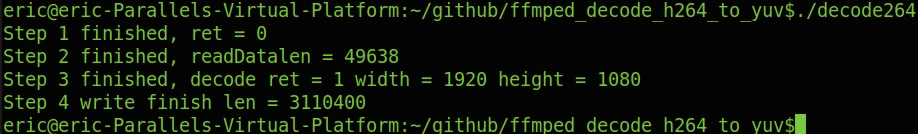

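(4) Run the executable

./decode264

If the output shown above appears, the program ran correctly, and the file target420.yuv is generated in the project root.

(5) Check the generated file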

ffplay -f rawvideo -video_size 1920x1080 target420.yuv
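The -video_size value has to match the decoded frame, because a raw YUV file carries no header. The demo writes width*height*3/2 bytes per frame, so a quick sanity check is to compare the file size with that figure. The sketch below does the arithmetic, assuming tightly packed 4:2:0 planar data with even dimensions; `yuv420p_frame_size` and `yuv420p_frame_count` are hypothetical helper names.

```c
#include <assert.h>
#include <stddef.h>

/* Bytes in one tightly packed YUV 4:2:0 planar frame:
 * a full-resolution Y plane plus two quarter-resolution chroma planes. */
size_t yuv420p_frame_size(int width, int height)
{
    assert(width > 0 && height > 0);
    size_t luma = (size_t)width * (size_t)height;
    return luma + 2 * (luma / 4);
}

/* Number of whole frames in a raw file of file_size bytes,
 * or -1 if the size is not a multiple of the frame size. */
long yuv420p_frame_count(size_t file_size, int width, int height)
{
    size_t frame = yuv420p_frame_size(width, height);
    return (file_size % frame == 0) ? (long)(file_size / frame) : -1;
}
```

For a 1920x1080 frame this gives 3110400 bytes, so target420.yuv from a single decoded 1080p I frame should be exactly that size. If the test stream has a different resolution, use the width and height printed in Step 3 for -video_size.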