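These notes are based on the book ‘跟着迪哥学python数据分析与机器学习实战’ (Learn Python Data Analysis and Machine Learning in Practice with Di Ge), plus my own additions and reorganization. They are for personal review only.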

```python
import pandas as pd
import jieba
import warnings

warnings.filterwarnings('ignore')
```

Load the data

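```python
# tab-separated file with four columns: category, theme, URL, content
df_news = pd.read_table(r'E:\R Python\Python 学习\@迪哥B站\迪哥-Python数据分析与机器学习实战\18:基于贝叶斯的新闻分类实战\data\val.txt',
                        names=['category', 'theme', 'URL', 'content'],
                        encoding='utf-8')
df_news = df_news.dropna()  # drop rows with missing values
print(df_news.shape)
df_news.head(1)
```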

Word segmentation with the jieba tokenizer

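```python
# raw news text, one string per article
content = df_news.content.values.tolist()

content_S = []
for line in content:
    current_segment = jieba.lcut(line)  # segment one article into a list of words
    # skip near-empty lines (one token or fewer, e.g. a bare line break)
    if len(current_segment) > 1:
        content_S.append(current_segment)

content_S[12]

df_content = pd.DataFrame({'content_S': content_S})
df_content.head(6)
```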
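jieba's default mode is more sophisticated than a simple dictionary lookup. It builds a directed acyclic graph of candidate words from its prefix dictionary, picks the most probable path by dynamic programming, and uses an HMM for words that are not in the dictionary. The sketch below is a much simpler illustration of dictionary-based segmentation and is **not** jieba's algorithm. It uses greedy forward maximum matching over a tiny made-up dictionary. The names `forward_max_match` and `toy_dict` are hypothetical.

```python
def forward_max_match(text, dictionary, max_len):
    """Greedy forward maximum matching: at each position take the longest
    dictionary word (up to max_len characters); fall back to one character."""
    words = []
    i = 0
    while i < len(text):
        # try the longest candidate first, shrinking until a match or a single char
        for size in range(min(max_len, len(text) - i), 0, -1):
            piece = text[i:i + size]
            if size == 1 or piece in dictionary:
                words.append(piece)
                i += size
                break
    return words


toy_dict = {"西班牙", "欧洲杯", "球衣", "耐克"}  # illustrative dictionary
print(forward_max_match("西班牙队欧洲杯球衣", toy_dict, max_len=3))
# ['西班牙', '队', '欧洲杯', '球衣']
```

Greedy matching can commit to a long word that a better global segmentation would split. This is one reason jieba scores whole paths instead.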

Load the stop words and remove them from the documents

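```python
# one stop word per line; quoting=3 (csv.QUOTE_NONE) treats quote characters as ordinary text
stopwords = pd.read_csv(r'stopwords.txt',
                        index_col=False, sep='\t', quoting=3,
                        names=['stopwords'], encoding='utf-8')
stopwords.head(6)
```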

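```python
# remove stop words from every tokenized document
def drop_stopwords(contents, stopwords):
    contents_clean = []   # one cleaned token list per document
    all_words = []        # every kept token across the corpus, for frequency counts
    for line in contents:
        line_clean = []
        for word in line:
            if word in stopwords:
                # continue ends this iteration and moves on to the next word
                continue
            line_clean.append(word)
            all_words.append(str(word))
        contents_clean.append(line_clean)
    return contents_clean, all_words
```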
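`drop_stopwords` above tests `word in stopwords` against a Python list, which scans the whole list for every token. The following is a minimal sketch of the same filtering that uses a set for membership tests instead. The toy documents and stop words are illustrative, and `drop_stopwords_fast` is a hypothetical name.

```python
def drop_stopwords_fast(contents, stopwords):
    """Same output as drop_stopwords, but membership tests use a set."""
    stop = set(stopwords)  # average O(1) lookup instead of scanning a list
    contents_clean = []
    all_words = []
    for line in contents:
        line_clean = [word for word in line if word not in stop]
        contents_clean.append(line_clean)
        all_words.extend(str(word) for word in line_clean)
    return contents_clean, all_words


docs = [["我们", "的", "球衣"], ["了", "耐克", "新款"]]  # toy tokenized documents
clean, words = drop_stopwords_fast(docs, ["的", "了", "我们"])
print(clean)  # [['球衣'], ['耐克', '新款']]
print(words)  # ['球衣', '耐克', '新款']
```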

View the segmented results after stop-word removal:

```python
contents = df_content.content_S.values.tolist()
stopwords = stopwords.stopwords.values.tolist()
contents_clean, all_words = drop_stopwords(contents, stopwords)

df_content = pd.DataFrame({'content_clean': contents_clean})
df_content.head()
```

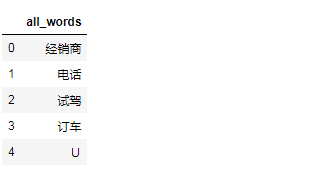

```python
df_all = pd.DataFrame({'all_words': all_words})
df_all.head()
```

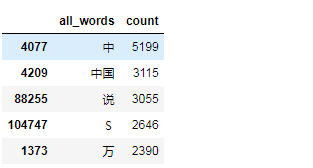

Count word frequencies

```python
# number of occurrences of each word, most frequent first
# (the older .agg({'count': np.size}) dict form is no longer supported by pandas)
words_count = (df_all.groupby(by=['all_words'])
               .size()
               .reset_index(name='count')
               .sort_values(by='count', ascending=False))
words_count.head(6)
```
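The groupby count is a plain token count. As a cross-check, the standard library's `collections.Counter` produces the same ranking. It also yields the `{word: count}` dict that `WordCloud.fit_words()` takes. The token list below is illustrative toy data.

```python
from collections import Counter

all_words = ["中国", "比赛", "中国", "球员", "比赛", "中国"]  # toy tokens

word_counts = Counter(all_words)
top = word_counts.most_common(2)  # [(word, count), ...] sorted by count
print(top)  # [('中国', 3), ('比赛', 2)]

# a {word: count} dict of the 100 most frequent words, the shape fit_words() takes
wordfreq = dict(word_counts.most_common(100))
```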

Word cloud

```python
from wordcloud import WordCloud
import matplotlib.pyplot as plt
%matplotlib inline
import matplotlib

plt.rcParams['font.sans-serif'] = 'SimHei'  # a font that can render Chinese labels
plt.figure(figsize=(10, 5))

# WordCloud also needs a font file containing Chinese glyphs
wordcloud = WordCloud(font_path='C:\\Windows\\Fonts\\simhei.ttf',
                      background_color='white',
                      max_font_size=80)
# convert the top 100 rows of the DataFrame into a {word: count} dict
wordfreq = {x[0]: x[1] for x in words_count.head(100).values}
wordcloud = wordcloud.fit_words(wordfreq)
plt.imshow(wordcloud)
plt.axis('off')
```

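Keyword extraction: TF-IDF

```python
import jieba.analyse

index = 2400
print(df_news['content'].iloc[index])  # positional lookup, matching content_S[index]
content_S_str = ''.join(content_S[index])
# top 5 keywords ranked by TF-IDF weight
print(' '.join(jieba.analyse.extract_tags(content_S_str, topK=5,
                                          withWeight=False)))
```

```
耐克 阿迪达斯 欧洲杯 球衣 西班牙
```

(Nike, Adidas, European Championship, jersey, Spain)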
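`jieba.analyse.extract_tags` scores each word by term frequency times inverse document frequency. It uses an IDF table that ships with jieba rather than one computed from your corpus. The sketch below illustrates the tf × idf idea on a toy corpus and computes IDF from that corpus. The plain `ln(N / df)` form is an assumption here, and jieba's table and its handling of unseen words differ. `tfidf_top_k` is a hypothetical helper.

```python
import math
from collections import Counter


def tfidf_top_k(doc, corpus, k):
    """Rank the words of `doc` by tf * idf, with idf = ln(N / df) over `corpus`."""
    tf = Counter(doc)
    n_docs = len(corpus)
    scores = {}
    for word, count in tf.items():
        df = sum(1 for d in corpus if word in d)  # documents containing the word
        idf = math.log(n_docs / df)               # doc is part of corpus, so df >= 1
        scores[word] = (count / len(doc)) * idf
    # highest score first; ties broken alphabetically for a stable result
    return sorted(scores, key=lambda w: (-scores[w], w))[:k]


corpus = [
    ["nike", "adidas", "jersey", "nike", "match"],
    ["match", "goal", "team"],
    ["market", "stock", "match"],
]
print(tfidf_top_k(corpus[0], corpus, 3))  # ['nike', 'adidas', 'jersey']
```

"match" appears in every document, so its IDF is 0 and it ranks last even though it occurs in the document.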

Topic model: LDA

Input format: a list of lists, i.e. the whole segmented corpus with one token list per document.

```python
from gensim import corpora, models, similarities
import gensim
# http://radimrehurek.com/gensim/

# build the word <-> id mapping, i.e. a bag-of-words vocabulary
dictionary = corpora.Dictionary(contents_clean)
corpus = [dictionary.doc2bow(sentence) for sentence in contents_clean]

# like k-means, the number of topics k has to be chosen by hand
lda = gensim.models.ldamodel.LdaModel(corpus=corpus,
                                      id2word=dictionary,
                                      num_topics=20)

# top 5 words of the topic with index 1
print(lda.print_topic(1, topn=5))
```

```
0.010*"男人" + 0.009*"说" + 0.009*"中" + 0.007*"女人" + 0.005*"节目"
```

```python
for topic in lda.print_topics(num_topics=20, num_words=5):
    print(topic[1])
```

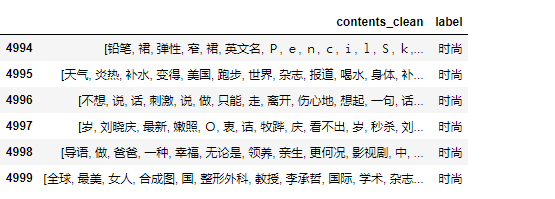
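`corpora.Dictionary` assigns an integer id to every token. `doc2bow` then turns a tokenized document into a sparse list of `(id, count)` pairs, which is the corpus format `LdaModel` consumes. The following is a plain-Python sketch of that mapping. `build_vocab` and `to_bow` are hypothetical helpers, and gensim's actual id assignment order may differ from the first-seen order used here.

```python
from collections import Counter


def build_vocab(docs):
    """Assign an integer id to each distinct token, in first-seen order."""
    token2id = {}
    for doc in docs:
        for token in doc:
            token2id.setdefault(token, len(token2id))
    return token2id


def to_bow(doc, token2id):
    """(token id, count) pairs sorted by id; tokens not in the vocabulary are ignored."""
    counts = Counter(token2id[t] for t in doc if t in token2id)
    return sorted(counts.items())


docs = [["男人", "女人", "男人"], ["节目", "女人"]]  # toy tokenized documents
token2id = build_vocab(docs)
print(token2id)                             # {'男人': 0, '女人': 1, '节目': 2}
print([to_bow(d, token2id) for d in docs])  # [[(0, 2), (1, 1)], [(1, 1), (2, 1)]]
```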

Classification

```python
df_train = pd.DataFrame({'contents_clean': contents_clean,
                         'label': df_news['category']})
df_train.tail(6)
```

Encode the labels as integers

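```python
# categories: auto, finance, tech, health, sports, education, culture, military, entertainment, fashion
label_map = {'汽车': 1, '财经': 2, '科技': 3, '健康': 4, '体育': 5,
             '教育': 6, '文化': 7, '军事': 8, '娱乐': 9, '时尚': 10}
df_train['label'] = df_train['label'].map(label_map)
df_train.head(6)
```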

Split into training and test sets

```python
from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(df_train['contents_clean'].values,
                                                    df_train['label'].values,
                                                    test_size=0.2,
                                                    random_state=1234)

# CountVectorizer expects strings, so join each token list with spaces
words = []
for line_index in range(len(X_train)):
    try:
        words.append(' '.join(X_train[line_index]))
    except TypeError:
        print(line_index)  # report documents that could not be joined
words[0]
```
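In essence, `train_test_split` shuffles row indices reproducibly and slices off a test share. Features and labels are shuffled with the same permutation, so each document keeps its label. The sketch below illustrates the idea on toy data. `simple_split` is a hypothetical helper and does not reproduce scikit-learn's exact permutation, so it will not give the same split for `random_state=1234`.

```python
import math
import random


def simple_split(items, labels, test_size=0.2, seed=1234):
    """Shuffle indices with a fixed seed, then use the first test_size share as the test set."""
    idx = list(range(len(items)))
    random.Random(seed).shuffle(idx)  # reproducible permutation
    n_test = math.ceil(len(items) * test_size)
    test_idx, train_idx = idx[:n_test], idx[n_test:]
    X_train = [items[i] for i in train_idx]
    X_test = [items[i] for i in test_idx]
    y_train = [labels[i] for i in train_idx]  # same indices keep pairs aligned
    y_test = [labels[i] for i in test_idx]
    return X_train, X_test, y_train, y_test


items = list("abcdefghij")
labels = list(range(10))
X_train, X_test, y_train, y_test = simple_split(items, labels)
print(len(X_train), len(X_test))  # 8 2
```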

Text vectorization (bag of words)

A toy example first:

```python
from sklearn.feature_extraction.text import CountVectorizer

texts = ["dog cat fish", "dog cat cat", "fish bird", 'bird']
cv = CountVectorizer()
cv_fit = cv.fit_transform(texts)
# get_feature_names() was removed in scikit-learn 1.2; get_feature_names_out() replaces it
print(list(cv.get_feature_names_out()))
print(cv_fit.toarray())
print(cv_fit.toarray().sum(axis=0))
```

```
['bird', 'cat', 'dog', 'fish']
[[0 1 1 1]
 [0 2 1 0]
 [1 0 0 1]
 [1 0 0 0]]
[2 3 2 2]
```
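The matrix above is a plain term count per document over an alphabetically sorted vocabulary. The following pure-Python sketch reproduces it for the same toy texts. `count_vectorize` is a hypothetical helper, and it simply splits on whitespace. `CountVectorizer` differs: its default `token_pattern` (`r"(?u)\b\w\w+\b"`) keeps only tokens of two or more word characters, and it lowercases by default. For space-joined Chinese text, this means single-character words are dropped unless `token_pattern` is changed.

```python
def count_vectorize(texts):
    """Whitespace-tokenize, build a sorted vocabulary, and count terms per text."""
    vocab = sorted({tok for text in texts for tok in text.split()})
    index = {tok: i for i, tok in enumerate(vocab)}
    matrix = []
    for text in texts:
        row = [0] * len(vocab)
        for tok in text.split():
            row[index[tok]] += 1
        matrix.append(row)
    return vocab, matrix


texts = ["dog cat fish", "dog cat cat", "fish bird", "bird"]
vocab, matrix = count_vectorize(texts)
print(vocab)   # ['bird', 'cat', 'dog', 'fish']
print(matrix)  # [[0, 1, 1, 1], [0, 2, 1, 0], [1, 0, 0, 1], [1, 0, 0, 0]]
print([sum(col) for col in zip(*matrix)])  # [2, 3, 2, 2]
```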

Runs of consecutive words (n-grams) can also be used as features. They capture more word-order information and can improve accuracy, but on a large corpus the matrix can become extremely sparse.

```python
from sklearn.feature_extraction.text import CountVectorizer

texts = ["dog cat fish", "dog cat cat", "fish bird", 'bird']
cv = CountVectorizer(ngram_range=(1, 4))  # (1, 2) is usually enough
cv_fit = cv.fit_transform(texts)
print(list(cv.get_feature_names_out()))
print(cv_fit.toarray())
print(cv_fit.toarray().sum(axis=0))
```

```
['bird', 'cat', 'cat cat', 'cat fish', 'dog', 'dog cat', 'dog cat cat', 'dog cat fish', 'fish', 'fish bird']
[[0 1 0 1 1 1 0 1 1 0]
 [0 2 1 0 1 1 1 0 0 0]
 [1 0 0 0 0 0 0 0 1 1]
 [1 0 0 0 0 0 0 0 0 0]]
[2 3 1 1 2 2 1 1 2 1]
```
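With `ngram_range=(1, 4)`, every run of 1 to 4 consecutive tokens becomes a feature. No text here has four tokens, so no 4-grams appear in the output. The sketch below generates word n-grams by hand and rebuilds the same 10-feature vocabulary. `word_ngrams` is a hypothetical helper.

```python
def word_ngrams(tokens, min_n, max_n):
    """All contiguous word n-grams with min_n <= n <= max_n, joined by spaces."""
    grams = []
    for n in range(min_n, max_n + 1):
        for start in range(len(tokens) - n + 1):
            grams.append(" ".join(tokens[start:start + n]))
    return grams


texts = ["dog cat fish", "dog cat cat", "fish bird", "bird"]
print(word_ngrams("dog cat cat".split(), 1, 4))
# ['dog', 'cat', 'cat', 'dog cat', 'cat cat', 'dog cat cat']
vocab = sorted({g for t in texts for g in word_ngrams(t.split(), 1, 4)})
print(len(vocab))  # 10
```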

Vectorize the news corpus

```python
from sklearn.feature_extraction.text import CountVectorizer

cv = CountVectorizer(analyzer='word',
                     max_features=4000,  # keep the 4000 most frequent terms
                     lowercase=False)
vec = cv.fit_transform(words)
print(len(cv.get_feature_names_out()))
print(vec.toarray())
print(vec.toarray().shape)
```

```
4000
[[0 0 0 ... 0 0 0]
 [0 0 0 ... 0 0 0]
 [0 0 0 ... 0 0 0]
 ...
 [0 0 0 ... 0 0 0]
 [0 0 0 ... 0 0 0]
 [0 0 0 ... 0 0 0]]
(4000, 4000)
```
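According to the scikit-learn documentation, `max_features` keeps only the top terms "ordered by term frequency across the corpus". This is why the matrix has exactly 4000 columns; the 4000 rows are the training documents. The sketch below approximates that selection on toy data. `top_n_vocabulary` is hypothetical, and its alphabetical tie-breaking is an assumption that may not match scikit-learn's.

```python
from collections import Counter


def top_n_vocabulary(docs, max_features):
    """Keep the max_features terms with the highest total count across all docs,
    returned in alphabetical (column) order."""
    totals = Counter(tok for doc in docs for tok in doc.split())
    # count descending, then alphabetical, so ties are deterministic
    ranked = sorted(totals, key=lambda t: (-totals[t], t))
    return sorted(ranked[:max_features])


docs = ["球员 比赛 比赛", "比赛 球队", "股票 球员 比赛"]  # toy space-joined documents
print(top_n_vocabulary(docs, 2))  # ['比赛', '球员']
```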

Modeling

```python
from sklearn.naive_bayes import MultinomialNB

classifier = MultinomialNB()
classifier.fit(vec, y_train)
```
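`MultinomialNB` estimates a class prior and, for every class, a smoothed probability for each word. With the default `alpha=1.0` (Laplace smoothing) this is P(w | c) = (count(w, c) + 1) / (total words in c + vocabulary size). It then predicts the class with the highest log-probability sum. The following is a from-scratch sketch of the same model on toy token lists. scikit-learn works on the count matrix instead, and `train_multinomial_nb` and `predict` are hypothetical helpers.

```python
import math
from collections import Counter, defaultdict


def train_multinomial_nb(docs, labels, alpha=1.0):
    """Estimate log P(class) and log P(word | class) with additive smoothing."""
    vocab = {w for doc in docs for w in doc}
    class_docs = Counter(labels)
    word_counts = defaultdict(Counter)  # class -> word -> count
    for doc, y in zip(docs, labels):
        word_counts[y].update(doc)
    log_prior = {c: math.log(n / len(docs)) for c, n in class_docs.items()}
    log_like = {}
    for c in class_docs:
        total = sum(word_counts[c].values()) + alpha * len(vocab)
        log_like[c] = {w: math.log((word_counts[c][w] + alpha) / total) for w in vocab}
    return log_prior, log_like


def predict(doc, log_prior, log_like):
    """Class maximizing log P(c) + sum of log P(w | c); unknown words are skipped."""
    scores = {
        c: log_prior[c] + sum(log_like[c][w] for w in doc if w in log_like[c])
        for c in log_prior
    }
    return max(scores, key=scores.get)


train_docs = [["进球", "比赛"], ["比赛", "球员"], ["股票", "市场"], ["市场", "基金"]]
train_labels = ["体育", "体育", "财经", "财经"]  # toy data: sports vs. finance
prior, like = train_multinomial_nb(train_docs, train_labels)
print(predict(["球员", "进球"], prior, like))  # 体育
print(predict(["基金", "股票"], prior, like))  # 财经
```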

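```python
# join the test documents the same way as the training documents
test_words = []
for line_index in range(len(X_test)):
    try:
        test_words.append(' '.join(X_test[line_index]))
    except TypeError:
        print(line_index)  # report documents that could not be joined
test_words[0]
```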

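```python
# cv.fit_transform(test_words) would learn a new vocabulary from the test set, so its
# columns would not line up with the ones the model was trained on; use transform instead
classifier.score(cv.transform(test_words), y_test)
```

```
0.138
```

The 0.138 above was recorded with `cv.fit_transform(test_words)`. That score is not far above the 0.1 that uniform guessing over 10 classes would give, which is the symptom of the vocabulary mismatch.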
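The difference between `fit_transform` and `transform` on the test set can be shown with a toy vocabulary. Fitting learns which word each column stands for. Transforming reuses that mapping and ignores unseen words. Refitting on the test set silently assigns the columns to different words. `fit_vocabulary` and `transform` are hypothetical helpers that only mimic this behavior.

```python
def fit_vocabulary(texts):
    """Learn a sorted vocabulary from whitespace-tokenized texts (the 'fit' step)."""
    return sorted({tok for t in texts for tok in t.split()})


def transform(texts, vocab):
    """Count only tokens that are in an already-learned vocabulary (the 'transform' step)."""
    index = {tok: i for i, tok in enumerate(vocab)}
    rows = []
    for t in texts:
        row = [0] * len(vocab)
        for tok in t.split():
            if tok in index:
                row[index[tok]] += 1
        rows.append(row)
    return rows


train_vocab = fit_vocabulary(["dog cat", "fish"])  # ['cat', 'dog', 'fish']
test = ["fish bird"]

print(transform(test, train_vocab))  # [[0, 0, 1]]  columns match training
refit_vocab = fit_vocabulary(test)   # ['bird', 'fish']
print(transform(test, refit_vocab))  # [[1, 1]]  column 0 now means 'bird', not 'cat'
```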

Modeling again with TF-IDF features

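```python
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.naive_bayes import MultinomialNB

vectorizer = TfidfVectorizer(analyzer='word',
                             max_features=4000,
                             lowercase=False)
vectorizer.fit(words)  # learn vocabulary and idf weights from the training set only

classifier = MultinomialNB()
classifier.fit(vectorizer.transform(words), y_train)
# transform (not fit_transform) keeps the test columns aligned with the training vocabulary
classifier.score(vectorizer.transform(test_words), y_test)
```

```
0.17
```

The 0.17 was recorded with `vectorizer.fit_transform(test_words)`, which refits the vocabulary on the test set. The model is therefore scored on columns that mean different words than they did during training.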
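For reference, `TfidfVectorizer` with its default settings (`smooth_idf=True`, `sublinear_tf=False`, `norm='l2'`) multiplies raw term counts by idf(t) = ln((1 + n) / (1 + df(t))) + 1. It then scales each document vector to unit length. The following is a pure-Python sketch of that computation on a toy corpus, with `tfidf_matrix` as a hypothetical helper. Note that a word occurring in every document still gets idf 1 rather than 0.

```python
import math


def tfidf_matrix(texts):
    """TF-IDF with scikit-learn's default formula: raw counts * smoothed idf,
    idf(t) = ln((1 + n) / (1 + df(t))) + 1, then each row scaled to unit L2 norm."""
    docs = [t.split() for t in texts]
    vocab = sorted({tok for d in docs for tok in d})
    n = len(docs)
    idf = {}
    for tok in vocab:
        df = sum(1 for d in docs if tok in d)  # number of documents containing tok
        idf[tok] = math.log((1 + n) / (1 + df)) + 1
    rows = []
    for d in docs:
        row = [d.count(tok) * idf[tok] for tok in vocab]
        norm = math.sqrt(sum(v * v for v in row))
        rows.append([v / norm if norm else 0.0 for v in row])
    return vocab, idf, rows


vocab, idf, rows = tfidf_matrix(["dog cat", "dog fish", "dog"])
print(vocab)               # ['cat', 'dog', 'fish']
print(idf["dog"])          # 1.0  ('dog' is in every document)
print(round(idf["cat"], 4))  # 1.6931
```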