Main points of AlexNet

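1. The dataset is ImageNet.

2. The non-saturating ReLU activation converges faster than saturating activations such as tanh.

3. Training runs in parallel on two GPUs.

4. Local Response Normalization (LRN) is applied to improve generalization.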

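5. Network structure: 5 convolutional layers followed by 3 fully connected layers. Convolutional layers 1, 2, and 5 are each followed by max pooling. The pooling is overlapping, meaning the stride s is smaller than the window side z (here s = 2, z = 3). ReLU is applied to the output of every convolutional and hidden fully connected layer.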

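6. Two methods are used to reduce overfitting: (1) data augmentation and (2) Dropout.

7. Implementation details: stochastic gradient descent with a momentum of 0.9, plus a specific initialization strategy for the weights and biases.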
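The overlapping pooling in point 5 can be illustrated with a little arithmetic. The sketch below is not from the paper's code; the helper names `pool_output_size` and `max_pool_1d` are made up for illustration, and it assumes unpadded pooling with z = 3 and s = 2. The input sizes 55, 27, and 13 are the feature-map widths usually quoted for AlexNet on 224×224 inputs.

```python
def pool_output_size(n, z=3, s=2):
    """Output length of an unpadded pooling window of size z and stride s."""
    return (n - z) // s + 1


def max_pool_1d(values, z=3, s=2):
    """Max-pool a 1-D list with window z and stride s (no padding)."""
    return [max(values[i:i + z]) for i in range(0, len(values) - z + 1, s)]


# Sizes after the three pooling layers, starting from widths 55, 27 and 13.
sizes = [pool_output_size(n) for n in (55, 27, 13)]

# Because s < z, neighbouring windows share one element (indices 2 and 4 here).
pooled = max_pool_1d([1, 5, 2, 4, 3, 6, 0])

print(sizes)
print(pooled)
```

With s = z the windows would tile the input without sharing any element; choosing s < z is what makes the pooling overlap.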

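Reproducing AlexNet

Changes made:

1. Dataset: ImageNet -> CIFAR-10, so the input image size changes from 224×224×3 to 32×32×3.

2. The two-GPU strategy and the Local Response Normalization described in the paper are not used.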
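For reference, the Local Response Normalization that this reproduction leaves out can be sketched in a few lines. This is only an illustration, not part of the code below: the function name `local_response_norm` is hypothetical, it works on the channel vector of a single spatial position, and the defaults (n = 5, k = 2, alpha = 1e-4, beta = 0.75) are the values reported in the AlexNet paper.

```python
def local_response_norm(a, n=5, k=2.0, alpha=1e-4, beta=0.75):
    """Divide each channel activation by a term built from the squared
    activations of up to n adjacent channels (clipped at the edges)."""
    num_channels = len(a)
    half = n // 2
    out = []
    for i in range(num_channels):
        lo = max(0, i - half)
        hi = min(num_channels - 1, i + half)
        sq_sum = sum(a[j] ** 2 for j in range(lo, hi + 1))
        out.append(a[i] / (k + alpha * sq_sum) ** beta)
    return out


# Channel activations at one pixel; a large neighbour damps nearby channels.
activations = [1.0, 2.0, 50.0, 2.0, 1.0, 0.5, 0.1]
normalized = local_response_norm(activations)
print(normalized)
```

Each output keeps the sign of its input and is scaled down more strongly when the neighbouring channels have large activations.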

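Platform:

Google Colab offers free use of an NVIDIA Tesla K80 GPU, with some limits: a session cannot stay connected for more than 12 hours. Colab Pro is a paid tier and, at the time of writing, was only available in the United States.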

Code

import keras
from keras.datasets import cifar10
from keras import backend as K
from keras.layers import Input, Conv2D, GlobalAveragePooling2D, Dense, BatchNormalization, Activation, MaxPooling2D
from keras.models import Model
from keras.layers import concatenate, Dropout, Flatten
from keras import optimizers, regularizers
from keras.preprocessing.image import ImageDataGenerator
from keras.initializers import he_normal
from keras.callbacks import LearningRateScheduler, TensorBoard, ModelCheckpoint

num_classes = 10
batch_size = 64
iterations = 782            # steps per epoch: ceil(50000 / 64)
epochs = 300
DROPOUT = 0.5
CONCAT_AXIS = 3
weight_decay = 1e-4
DATA_FORMAT = 'channels_last'
log_filepath = './alexnet'  # TensorBoard log directory

def color_preprocessing(x_train, x_test):
    # Standardize each RGB channel with a fixed per-channel mean and std.
    x_train = x_train.astype('float32')
    x_test = x_test.astype('float32')
    mean = [125.307, 122.95, 113.865]
    std = [62.9932, 62.0887, 66.7048]
    for i in range(3):
        x_train[:, :, :, i] = (x_train[:, :, :, i] - mean[i]) / std[i]
        x_test[:, :, :, i] = (x_test[:, :, :, i] - mean[i]) / std[i]
    return x_train, x_test

def scheduler(epoch):
    # Step schedule: 0.01 for epochs 0-99, 0.001 for 100-199, then 0.0001.
    if epoch < 100:
        return 0.01
    if epoch < 200:
        return 0.001
    return 0.0001

# Load CIFAR-10, one-hot encode the labels, and normalize the images
(x_train, y_train), (x_test, y_test) = cifar10.load_data()
y_train = keras.utils.to_categorical(y_train, num_classes)
y_test = keras.utils.to_categorical(y_test, num_classes)
x_train, x_test = color_preprocessing(x_train, x_test)

def alexnet(img_input, classes=10):
    # Conv 1 (kernel_initializer may need changing)
    x = Conv2D(96, (11, 11), strides=(4, 4), padding='same',
               activation='relu', kernel_initializer='uniform')(img_input)
    # params: 11*11*3*96 + 96 = 34944
    x = MaxPooling2D(pool_size=(3, 3), strides=(2, 2), padding='same', data_format=DATA_FORMAT)(x)

    # Conv 2
    x = Conv2D(256, (5, 5), strides=(1, 1), padding='same',
               activation='relu', kernel_initializer='uniform')(x)
    # params: 5*5*96*256 + 256 = 614656
    x = MaxPooling2D(pool_size=(3, 3), strides=(2, 2), padding='same', data_format=DATA_FORMAT)(x)

    # Conv 3
    x = Conv2D(384, (3, 3), strides=(1, 1), padding='same',
               activation='relu', kernel_initializer='uniform')(x)
    # params: 3*3*256*384 + 384 = 885120

    # Conv 4
    x = Conv2D(384, (3, 3), strides=(1, 1), padding='same',
               activation='relu', kernel_initializer='uniform')(x)
    # params: 3*3*384*384 + 384 = 1327488

    # Conv 5
    x = Conv2D(256, (3, 3), strides=(1, 1), padding='same',
               activation='relu', kernel_initializer='uniform')(x)
    # params: 3*3*384*256 + 256 = 884992
    x = MaxPooling2D(pool_size=(3, 3), strides=(2, 2), padding='same', data_format=DATA_FORMAT)(x)

    # Classifier: with a 32x32 input the feature map is 1x1x256 at this point
    x = Flatten()(x)
    x = Dense(4096, activation='relu')(x)
    # params: 256*4096 + 4096 = 1052672
    x = Dropout(DROPOUT)(x)
    x = Dense(4096, activation='relu')(x)
    # params: 4096*4096 + 4096 = 16781312
    x = Dropout(DROPOUT)(x)
    out = Dense(classes, activation='softmax')(x)
    # params: 4096*10 + 10 = 40970
    return out

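# Build the model
img_input = Input(shape=(32, 32, 3))
output = alexnet(img_input)
model = Model(img_input, output)
model.summary()

# The lr given here is overridden at the start of every epoch by the
# LearningRateScheduler callback, so training effectively starts at 0.01.
# (Newer Keras versions name this argument learning_rate.)
sgd = optimizers.SGD(lr=.1, momentum=0.9, nesterov=True)
model.compile(loss='categorical_crossentropy', optimizer=sgd, metrics=['accuracy'])

tb_cb = TensorBoard(log_dir=log_filepath, histogram_freq=0)
change_lr = LearningRateScheduler(scheduler)
cbks = [change_lr, tb_cb]

# Data augmentation: random horizontal flips and shifts of up to 12.5%
datagen = ImageDataGenerator(horizontal_flip=True,
                             width_shift_range=0.125,
                             height_shift_range=0.125,
                             fill_mode='constant', cval=0.)
# fit() only matters when featurewise statistics are enabled; harmless here
datagen.fit(x_train)

# Train (fit_generator is the older Keras API; newer versions accept
# the generator directly in model.fit)
model.fit_generator(datagen.flow(x_train, y_train, batch_size=batch_size),
                    steps_per_epoch=iterations,
                    epochs=epochs,
                    callbacks=cbks,
                    validation_data=(x_test, y_test))

model.save('alexnet.h5')
print('success!')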
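The parameter counts written as comments inside alexnet() can be cross-checked without running Keras. The sketch below is a plain-Python calculation, not part of the original script; the helper names `same_out`, `conv_params`, and `dense_params` are made up for illustration. It assumes that a layer with 'same' padding produces a spatial size of ceil(n / stride), which is how Keras behaves, and that every layer has a bias term.

```python
import math


def same_out(n, stride):
    """Spatial size after a 'same'-padded conv or pooling layer."""
    return math.ceil(n / stride)


def conv_params(kernel, c_in, c_out):
    """Weights plus biases of a square convolution."""
    return kernel * kernel * c_in * c_out + c_out


def dense_params(n_in, n_out):
    """Weights plus biases of a fully connected layer."""
    return n_in * n_out + n_out


size, channels, total = 32, 3, 0
# (kind, kernel, stride, filters) in the order used by alexnet()
layers = [
    ("conv", 11, 4, 96), ("pool", 3, 2, None),
    ("conv", 5, 1, 256), ("pool", 3, 2, None),
    ("conv", 3, 1, 384), ("conv", 3, 1, 384),
    ("conv", 3, 1, 256), ("pool", 3, 2, None),
]
for kind, kernel, stride, filters in layers:
    if kind == "conv":
        total += conv_params(kernel, channels, filters)
        channels = filters
    size = same_out(size, stride)

flat_features = size * size * channels  # input width of the first Dense layer
n_in = flat_features
for units in (4096, 4096, 10):
    total += dense_params(n_in, units)
    n_in = units

print(size, flat_features, total)
```

The 32×32 input shrinks to 8, 4, 2, and finally 1 pixel per side, which is why the first Dense layer sees only 256 features here rather than the much larger flattened map of the ImageNet version.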

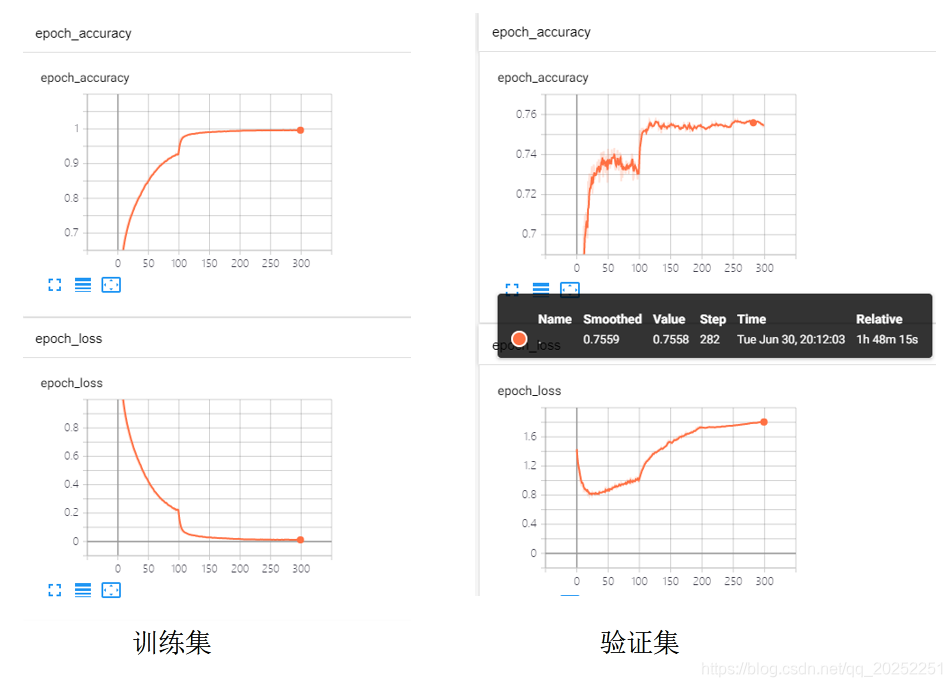

Experimental results