Loading the handwritten digits dataset

import numpy as np

import matplotlib

import matplotlib.pyplot as plt

from sklearn import datasets

digits=datasets.load_digits()

digits.keys()

dict_keys(['data', 'target', 'target_names', 'images', 'DESCR'])

print(digits.DESCR)

.. _digits_dataset:

Optical recognition of handwritten digits dataset

--------------------------------------------------

**Data Set Characteristics:**

:Number of Instances: 5620

:Number of Attributes: 64

:Attribute Information: 8x8 image of integer pixels in the range 0..16.

:Missing Attribute Values: None

:Creator: E. Alpaydin (alpaydin '@' boun.edu.tr)

:Date: July; 1998

This is a copy of the test set of the UCI ML hand-written digits datasets

https://archive.ics.uci.edu/ml/datasets/Optical+Recognition+of+Handwritten+Digits

The data set contains images of hand-written digits: 10 classes where

each class refers to a digit.

Preprocessing programs made available by NIST were used to extract

normalized bitmaps of handwritten digits from a preprinted form. From a

total of 43 people, 30 contributed to the training set and different 13

to the test set. 32x32 bitmaps are divided into nonoverlapping blocks of

4x4 and the number of on pixels are counted in each block. This generates

an input matrix of 8x8 where each element is an integer in the range

0..16. This reduces dimensionality and gives invariance to small

distortions.

For info on NIST preprocessing routines, see M. D. Garris, J. L. Blue, G.

T. Candela, D. L. Dimmick, J. Geist, P. J. Grother, S. A. Janet, and C.

L. Wilson, NIST Form-Based Handprint Recognition System, NISTIR 5469,

1994.

.. topic:: References

- C. Kaynak (1995) Methods of Combining Multiple Classifiers and Their

Applications to Handwritten Digit Recognition, MSc Thesis, Institute of

Graduate Studies in Science and Engineering, Bogazici University.

- E. Alpaydin, C. Kaynak (1998) Cascading Classifiers, Kybernetika.

- Ken Tang and Ponnuthurai N. Suganthan and Xi Yao and A. Kai Qin.

Linear dimensionalityreduction using relevance weighted LDA. School of

Electrical and Electronic Engineering Nanyang Technological University.

2005.

- Claudio Gentile. A New Approximate Maximal Margin Classification

Algorithm. NIPS. 2000.
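The dataset description above says that each 32x32 bitmap is cut into non-overlapping 4x4 blocks and the "on" pixels in each block are counted, which yields an 8x8 matrix of integers in 0..16. The following is a minimal sketch of that counting step, not the NIST preprocessing code itself; the function name `block_counts` and the random bitmap are assumptions made for illustration.

```python
import numpy as np

def block_counts(bitmap, block=4):
    """Count the 'on' pixels in each non-overlapping block x block tile."""
    bitmap = np.asarray(bitmap)
    rows, cols = bitmap.shape
    # Split each axis into (number of tiles, tile size), then sum inside every tile.
    tiles = bitmap.reshape(rows // block, block, cols // block, block)
    return tiles.sum(axis=(1, 3))

# Hypothetical 32x32 binary bitmap, used only for illustration.
rng = np.random.default_rng(0)
bitmap = rng.integers(0, 2, size=(32, 32))

features = block_counts(bitmap)
print(features.shape)  # (8, 8)
```

Each 4x4 block holds 16 pixels, so every count falls between 0 and 16, which matches the attribute range quoted in the description.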

X=digits.data

X.shape

(1797, 64)

y=digits.target

y.shape

(1797,)

y[:100]

array([0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 0, 1,

2, 3, 4, 5, 6, 7, 8, 9, 0, 9, 5, 5, 6, 5, 0, 9, 8, 9, 8, 4, 1, 7,

7, 3, 5, 1, 0, 0, 2, 2, 7, 8, 2, 0, 1, 2, 6, 3, 3, 7, 3, 3, 4, 6,

6, 6, 4, 9, 1, 5, 0, 9, 5, 2, 8, 2, 0, 0, 1, 7, 6, 3, 2, 1, 7, 4,

6, 3, 1, 3, 9, 1, 7, 6, 8, 4, 3, 1])

Take the first 10 rows

X[:10]

array([[ 0., 0., 5., 13., 9., 1., 0., 0., 0., 0., 13., 15., 10.,

15., 5., 0., 0., 3., 15., 2., 0., 11., 8., 0., 0., 4.,

12., 0., 0., 8., 8., 0., 0., 5., 8., 0., 0., 9., 8.,

0., 0., 4., 11., 0., 1., 12., 7., 0., 0., 2., 14., 5.,

10., 12., 0., 0., 0., 0., 6., 13., 10., 0., 0., 0.],

[ 0., 0., 0., 12., 13., 5., 0., 0., 0., 0., 0., 11., 16.,

9., 0., 0., 0., 0., 3., 15., 16., 6., 0., 0., 0., 7.,

15., 16., 16., 2., 0., 0., 0., 0., 1., 16., 16., 3., 0.,

0., 0., 0., 1., 16., 16., 6., 0., 0., 0., 0., 1., 16.,

16., 6., 0., 0., 0., 0., 0., 11., 16., 10., 0., 0.],

[ 0., 0., 0., 4., 15., 12., 0., 0., 0., 0., 3., 16., 15.,

14., 0., 0., 0., 0., 8., 13., 8., 16., 0., 0., 0., 0.,

1., 6., 15., 11., 0., 0., 0., 1., 8., 13., 15., 1., 0.,

0., 0., 9., 16., 16., 5., 0., 0., 0., 0., 3., 13., 16.,

16., 11., 5., 0., 0., 0., 0., 3., 11., 16., 9., 0.],

[ 0., 0., 7., 15., 13., 1., 0., 0., 0., 8., 13., 6., 15.,

4., 0., 0., 0., 2., 1., 13., 13., 0., 0., 0., 0., 0.,

2., 15., 11., 1., 0., 0., 0., 0., 0., 1., 12., 12., 1.,

0., 0., 0., 0., 0., 1., 10., 8., 0., 0., 0., 8., 4.,

5., 14., 9., 0., 0., 0., 7., 13., 13., 9., 0., 0.],

[ 0., 0., 0., 1., 11., 0., 0., 0., 0., 0., 0., 7., 8.,

0., 0., 0., 0., 0., 1., 13., 6., 2., 2., 0., 0., 0.,

7., 15., 0., 9., 8., 0., 0., 5., 16., 10., 0., 16., 6.,

0., 0., 4., 15., 16., 13., 16., 1., 0., 0., 0., 0., 3.,

15., 10., 0., 0., 0., 0., 0., 2., 16., 4., 0., 0.],

[ 0., 0., 12., 10., 0., 0., 0., 0., 0., 0., 14., 16., 16.,

14., 0., 0., 0., 0., 13., 16., 15., 10., 1., 0., 0., 0.,

11., 16., 16., 7., 0., 0., 0., 0., 0., 4., 7., 16., 7.,

0., 0., 0., 0., 0., 4., 16., 9., 0., 0., 0., 5., 4.,

12., 16., 4., 0., 0., 0., 9., 16., 16., 10., 0., 0.],

[ 0., 0., 0., 12., 13., 0., 0., 0., 0., 0., 5., 16., 8.,

0., 0., 0., 0., 0., 13., 16., 3., 0., 0., 0., 0., 0.,

14., 13., 0., 0., 0., 0., 0., 0., 15., 12., 7., 2., 0.,

0., 0., 0., 13., 16., 13., 16., 3., 0., 0., 0., 7., 16.,

11., 15., 8., 0., 0., 0., 1., 9., 15., 11., 3., 0.],

[ 0., 0., 7., 8., 13., 16., 15., 1., 0., 0., 7., 7., 4.,

11., 12., 0., 0., 0., 0., 0., 8., 13., 1., 0., 0., 4.,

8., 8., 15., 15., 6., 0., 0., 2., 11., 15., 15., 4., 0.,

0., 0., 0., 0., 16., 5., 0., 0., 0., 0., 0., 9., 15.,

1., 0., 0., 0., 0., 0., 13., 5., 0., 0., 0., 0.],

[ 0., 0., 9., 14., 8., 1., 0., 0., 0., 0., 12., 14., 14.,

12., 0., 0., 0., 0., 9., 10., 0., 15., 4., 0., 0., 0.,

3., 16., 12., 14., 2., 0., 0., 0., 4., 16., 16., 2., 0.,

0., 0., 3., 16., 8., 10., 13., 2., 0., 0., 1., 15., 1.,

3., 16., 8., 0., 0., 0., 11., 16., 15., 11., 1., 0.],

[ 0., 0., 11., 12., 0., 0., 0., 0., 0., 2., 16., 16., 16.,

13., 0., 0., 0., 3., 16., 12., 10., 14., 0., 0., 0., 1.,

16., 1., 12., 15., 0., 0., 0., 0., 13., 16., 9., 15., 2.,

0., 0., 0., 0., 3., 0., 9., 11., 0., 0., 0., 0., 0.,

9., 15., 4., 0., 0., 0., 9., 12., 13., 3., 0., 0.]])

Take a single row

some_digit=X[666]

y[666]

0

Display it as an image

some_digit_image=some_digit.reshape(8,8)

plt.imshow(some_digit_image,cmap=matplotlib.cm.binary)

plt.show()
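As a cross-check on the reshape step: the loaded dataset also has an `images` entry (it appears in the keys listed earlier), which holds the same pixel values already arranged as 8x8 grids. A small sketch, assuming scikit-learn's bundled digits data:

```python
import numpy as np
from sklearn import datasets

digits = datasets.load_digits()

# Each row of `data` is one 8x8 image flattened to 64 values.
some_digit_image = digits.data[666].reshape(8, 8)

# `images` stores the same samples without flattening.
print(digits.images.shape)
print(np.array_equal(some_digit_image, digits.images[666]))
```

So reshaping a row of `data` and indexing `images` are two routes to the same picture.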

from sklearn.model_selection import train_test_split

X_train,X_test,y_train,y_test=train_test_split(X,y,test_size=0.2,random_state=666)
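To make the split less of a black box, here is a rough sketch of what such a split does: shuffle the sample indices, then cut off a share of them as the test set. This is not scikit-learn's implementation (which has more options and may round the test size differently); `simple_train_test_split` and the toy arrays are hypothetical names used for illustration.

```python
import numpy as np

def simple_train_test_split(X, y, test_ratio=0.2, seed=None):
    """Shuffle sample indices, then use the first test_ratio share as the test set."""
    X, y = np.asarray(X), np.asarray(y)
    assert X.shape[0] == y.shape[0], "X and y must have the same number of samples"
    rng = np.random.default_rng(seed)
    shuffled = rng.permutation(len(X))
    test_size = int(len(X) * test_ratio)
    test_idx, train_idx = shuffled[:test_size], shuffled[test_size:]
    # Index X and y with the same indices so features and labels stay aligned.
    return X[train_idx], X[test_idx], y[train_idx], y[test_idx]

# Toy data for illustration: 10 samples with 2 features each.
X_demo = np.arange(20).reshape(10, 2)
y_demo = np.arange(10)
X_tr, X_te, y_tr, y_te = simple_train_test_split(X_demo, y_demo, test_ratio=0.2, seed=666)
print(X_tr.shape, X_te.shape)  # (8, 2) (2, 2)
```

Fixing the seed plays the same role as `random_state=666` in the call above: the shuffle, and therefore the split, is reproducible.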

from sklearn.neighbors import KNeighborsClassifier

kNNClassifier=KNeighborsClassifier(n_neighbors=3)

kNNClassifier.fit(X_train,y_train)

y_predict=kNNClassifier.predict(X_test)

y_predict

array([8, 1, 3, 4, 4, 0, 7, 0, 8, 0, 4, 6, 1, 1, 2, 0, 1, 6, 7, 3, 3, 6,

3, 2, 3, 4, 0, 2, 0, 3, 0, 8, 7, 2, 3, 5, 1, 3, 1, 5, 8, 6, 2, 6,

3, 1, 3, 0, 0, 4, 9, 9, 2, 8, 7, 0, 5, 4, 0, 9, 5, 5, 5, 7, 4, 2,

8, 8, 7, 1, 4, 3, 0, 2, 7, 2, 1, 2, 4, 0, 9, 0, 6, 6, 2, 0, 0, 5,

4, 4, 3, 1, 3, 8, 6, 4, 4, 7, 5, 6, 8, 4, 8, 4, 6, 9, 7, 7, 0, 8,

8, 3, 9, 7, 1, 8, 4, 2, 7, 0, 0, 4, 9, 6, 7, 3, 4, 6, 4, 8, 4, 7,

2, 6, 9, 5, 8, 7, 2, 5, 5, 9, 7, 9, 3, 1, 9, 4, 4, 1, 5, 1, 6, 4,

4, 8, 1, 6, 2, 5, 2, 1, 4, 4, 3, 9, 4, 0, 6, 0, 8, 3, 8, 7, 3, 0,

3, 0, 5, 9, 2, 7, 1, 8, 1, 4, 3, 3, 7, 8, 2, 7, 2, 2, 8, 0, 5, 7,

6, 7, 3, 4, 7, 1, 7, 0, 9, 2, 8, 9, 3, 8, 9, 1, 1, 1, 9, 8, 8, 0,

3, 7, 3, 3, 4, 8, 2, 1, 8, 6, 0, 1, 7, 7, 5, 8, 3, 8, 7, 6, 8, 4,

2, 6, 2, 3, 7, 4, 9, 3, 5, 0, 6, 3, 8, 3, 3, 1, 4, 5, 3, 2, 5, 6,

9, 6, 9, 5, 5, 3, 6, 5, 9, 3, 7, 7, 0, 2, 4, 9, 9, 9, 2, 5, 6, 1,

9, 6, 9, 7, 7, 4, 5, 0, 0, 5, 3, 8, 4, 4, 3, 2, 5, 3, 2, 2, 3, 0,

9, 8, 2, 1, 4, 0, 6, 2, 8, 0, 6, 4, 9, 9, 8, 3, 9, 8, 6, 3, 2, 7,

9, 4, 2, 7, 5, 1, 1, 6, 1, 0, 4, 9, 2, 9, 0, 3, 3, 0, 7, 4, 8, 5,

9, 5, 9, 5, 0, 7, 9, 8])

y_test

array([8, 1, 3, 4, 4, 0, 7, 0, 8, 0, 4, 6, 1, 1, 2, 0, 1, 6, 7, 3, 3, 6,

3, 2, 3, 4, 0, 2, 0, 3, 0, 8, 7, 2, 3, 5, 1, 3, 1, 5, 8, 6, 2, 6,

3, 1, 3, 0, 0, 4, 9, 9, 2, 8, 7, 0, 5, 4, 0, 9, 5, 5, 8, 3, 4, 2,

8, 8, 7, 1, 4, 3, 0, 2, 7, 2, 1, 2, 4, 0, 9, 0, 6, 6, 2, 0, 0, 5,

4, 4, 9, 1, 3, 8, 6, 4, 4, 7, 5, 6, 8, 4, 8, 4, 6, 9, 7, 7, 0, 8,

8, 3, 9, 7, 1, 8, 4, 2, 7, 0, 0, 4, 9, 6, 7, 3, 4, 6, 4, 8, 4, 7,

2, 6, 9, 5, 8, 7, 2, 5, 5, 9, 7, 9, 3, 1, 9, 4, 4, 1, 5, 1, 6, 4,

4, 8, 1, 6, 2, 5, 2, 1, 4, 4, 3, 9, 4, 0, 6, 0, 8, 3, 8, 7, 3, 0,

3, 0, 5, 9, 2, 7, 1, 8, 1, 4, 3, 3, 7, 8, 2, 7, 2, 2, 8, 0, 5, 7,

6, 7, 3, 4, 7, 1, 7, 0, 9, 2, 8, 9, 3, 8, 9, 1, 1, 1, 9, 8, 8, 0,

3, 7, 3, 3, 4, 8, 2, 1, 8, 6, 0, 1, 7, 7, 5, 8, 3, 8, 7, 6, 8, 4,

2, 6, 2, 3, 7, 4, 9, 3, 5, 0, 6, 3, 8, 3, 3, 1, 4, 5, 3, 2, 5, 6,

9, 6, 9, 5, 5, 3, 6, 5, 9, 3, 7, 7, 0, 2, 4, 9, 9, 9, 2, 5, 6, 1,

9, 6, 9, 7, 7, 4, 5, 0, 0, 5, 3, 8, 4, 4, 3, 2, 5, 3, 2, 2, 3, 0,

9, 8, 2, 1, 4, 0, 6, 2, 8, 0, 6, 4, 9, 9, 8, 3, 9, 8, 6, 3, 2, 7,

9, 4, 2, 7, 5, 1, 1, 6, 1, 0, 4, 5, 2, 9, 0, 3, 3, 0, 7, 4, 8, 5,

9, 5, 9, 5, 0, 7, 9, 8])
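The predictions above come from a k-nearest-neighbors vote: for each test sample, find the k closest training samples and take the most common label among them. Below is a minimal from-scratch sketch of that idea using Euclidean distance. It is an illustration rather than scikit-learn's implementation (tie-breaking, for instance, may differ), and `knn_predict` and the toy data are hypothetical.

```python
from collections import Counter
import numpy as np

def knn_predict(X_train, y_train, x, k=3):
    """Predict the label of one sample x by majority vote among its k nearest neighbors."""
    # Euclidean distance from x to every training sample.
    distances = np.sqrt(np.sum((X_train - x) ** 2, axis=1))
    # Indices of the k smallest distances.
    nearest = np.argsort(distances)[:k]
    votes = Counter(y_train[nearest].tolist())
    return votes.most_common(1)[0][0]

# Toy data: two well-separated clusters.
X_toy = np.array([[0.0, 0.0], [0.0, 1.0], [1.0, 0.0],
                  [5.0, 5.0], [5.0, 6.0], [6.0, 5.0]])
y_toy = np.array([0, 0, 0, 1, 1, 1])

print(knn_predict(X_toy, y_toy, np.array([0.5, 0.5])))  # 0
print(knn_predict(X_toy, y_toy, np.array([5.5, 5.5])))  # 1
```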

Compute the accuracy

sum(y_test==y_predict)/len(y_test)

0.9888888888888889
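The expression above works because comparing two arrays gives a boolean array, and summing it counts the `True` entries. The same boolean array also tells us which samples were misclassified. A short sketch with made-up labels (the arrays here are hypothetical, not the real test set):

```python
import numpy as np

# Hypothetical labels, for illustration only.
y_true = np.array([8, 1, 3, 4, 4, 0, 7, 0])
y_pred = np.array([8, 1, 3, 4, 9, 0, 7, 5])

matches = (y_true == y_pred)            # True where the prediction is right
accuracy = matches.sum() / len(y_true)  # share of correct predictions
wrong_positions = np.flatnonzero(~matches)

print(accuracy)         # 0.75
print(wrong_positions)  # [4 7]
```

Applied to `y_test` and `y_predict`, the same `np.flatnonzero` call would list the positions where the two arrays printed above disagree.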

Use accuracy_score from sklearn

from sklearn.metrics import accuracy_score

accuracy_score(y_test,y_predict)

0.9888888888888889

Skip computing y_predict when only the classification accuracy matters

kNNClassifier.score(X_test,y_test)

0.9888888888888889

Hyperparameters

1. Finding the best k

import numpy as np

from sklearn import datasets

digits=datasets.load_digits()

X =digits.data

y =digits.target

from sklearn.model_selection import train_test_split

X_train,X_test,y_train,y_test=train_test_split(X,y,test_size=0.2,random_state=666)

from sklearn.neighbors import KNeighborsClassifier

knn_clf=KNeighborsClassifier(n_neighbors=3)

knn_clf.fit(X_train,y_train)

knn_clf.score(X_test,y_test)

0.9888888888888889

best_score=0.0

best_k= -1

# Try k = 1..10 and keep the k with the highest test accuracy
for k in range(1,11):
    knn_clf=KNeighborsClassifier(n_neighbors=k)
    knn_clf.fit(X_train,y_train)
    score = knn_clf.score(X_test,y_test)
    if score > best_score:
        best_k = k
        best_score = score

print("best_k=",best_k)
print("best_score=",best_score)

best_k= 4

best_score= 0.9916666666666667

Weight the neighbors by distance, or not?

p is also a hyperparameter

best_method=""

best_score=0.0

best_k= -1

# "uniform": every neighbor votes equally; "distance": votes are weighted by inverse distance
for method in ["uniform","distance"]:
    for k in range(1,11):
        knn_clf=KNeighborsClassifier(n_neighbors=k,weights=method)
        knn_clf.fit(X_train,y_train)
        score = knn_clf.score(X_test,y_test)
        if score > best_score:
            best_method=method
            best_k = k
            best_score = score

print("best_method=",best_method)
print("best_k=",best_k)
print("best_score=",best_score)

best_method= uniform

best_k= 4

best_score= 0.9916666666666667
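The `weights` option compared above decides how the k neighbors vote. With "uniform" every neighbor counts once; with "distance" each neighbor counts in proportion to the inverse of its distance, so a single very close neighbor can outvote several distant ones. A minimal sketch of the two voting rules, assuming strictly positive distances; the `vote` helper and the neighbor list are hypothetical and this is not scikit-learn's code.

```python
from collections import defaultdict

def vote(neighbors, weights="uniform"):
    """neighbors: list of (distance, label) pairs for the k nearest points."""
    totals = defaultdict(float)
    for distance, label in neighbors:
        # "uniform": every neighbor counts 1; "distance": closer neighbors count more.
        totals[label] += 1.0 if weights == "uniform" else 1.0 / distance
    return max(totals, key=totals.get)

# Hypothetical neighbors: one very close "A", two farther "B".
neighbors = [(1.0, "A"), (3.0, "B"), (4.0, "B")]
print(vote(neighbors, "uniform"))   # B  (2 votes against 1)
print(vote(neighbors, "distance"))  # A  (1 against 1/3 + 1/4)
```

Distance weighting also makes exact ties between classes far less likely than with plain vote counting.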

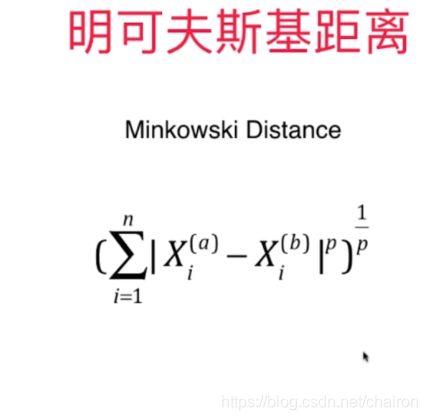

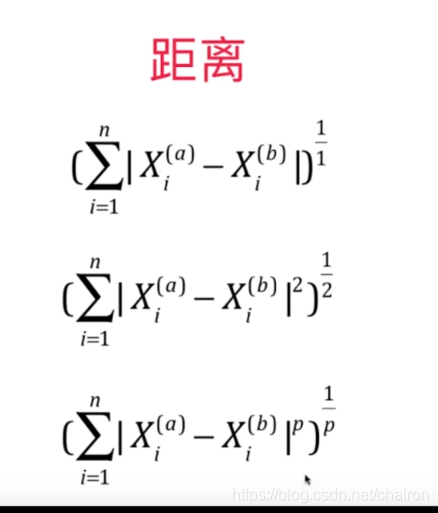
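The `p` searched for in the next step is the exponent of the Minkowski distance, the default metric of `KNeighborsClassifier`: p=1 gives the Manhattan distance and p=2 the Euclidean distance. A minimal sketch of the formula; `minkowski_distance` is a hypothetical helper written for illustration.

```python
def minkowski_distance(a, b, p=2):
    """Minkowski distance: (sum_i |a_i - b_i| ** p) ** (1 / p)."""
    return sum(abs(x - y) ** p for x, y in zip(a, b)) ** (1 / p)

a, b = (0, 0), (3, 4)
print(minkowski_distance(a, b, p=1))  # 7.0  (Manhattan)
print(minkowski_distance(a, b, p=2))  # 5.0  (Euclidean)
```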

Search for the best p of the Minkowski distance

%%time

best_p=-1

best_score=0.0

best_k= -1

# Grid over k and the Minkowski exponent p (p=1: Manhattan, p=2: Euclidean)
for k in range(1,11):
    for p in range(1,6):
        knn_clf=KNeighborsClassifier(n_neighbors=k,weights="distance",p=p)
        knn_clf.fit(X_train,y_train)
        score = knn_clf.score(X_test,y_test)
        if score > best_score:
            best_p=p
            best_k = k
            best_score = score

print("best_p=",best_p)
print("best_k=",best_k)
print("best_score=",best_score)

best_p= 2

best_k= 3

best_score= 0.9888888888888889

Wall time: 37.9 s
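The nested loops above are a hand-written grid search. One possible alternative, offered here as a sketch rather than as part of the original notes, is scikit-learn's `GridSearchCV`. It tries every combination in a parameter grid and scores each by cross-validation on the training data, so the test set is not used to pick the hyperparameters. The grid below is deliberately smaller than the loops above (an assumption made to keep the run short), so its result need not match them.

```python
from sklearn import datasets
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.neighbors import KNeighborsClassifier

digits = datasets.load_digits()
X_train, X_test, y_train, y_test = train_test_split(
    digits.data, digits.target, test_size=0.2, random_state=666)

# Reduced grid for illustration: p is only searched together with weights="distance".
param_grid = [
    {"weights": ["uniform"], "n_neighbors": list(range(1, 6))},
    {"weights": ["distance"], "n_neighbors": list(range(1, 6)), "p": [1, 2]},
]

grid_search = GridSearchCV(KNeighborsClassifier(), param_grid, cv=3)
grid_search.fit(X_train, y_train)

print(grid_search.best_params_)
print(grid_search.best_score_)  # mean cross-validated accuracy
print(grid_search.best_estimator_.score(X_test, y_test))  # held-out test accuracy
```

Because the scores come from cross-validation rather than from `X_test`, the chosen parameters and accuracy may differ from those printed by the loops.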