1.爬取思路

- 进入’https://jobs.51job.com/zhongshan/p1/'页面,分页进行爬取,每一页中获取所有招聘岗位详情的URL

2.创建项目

scrapy startproject ping

cd ping

scrapy genspider ping 'jobs.51job.com'

3. 编辑需要爬取的数据字段

import scrapy

class ZhaopingItem(scrapy.Item):

title = scrapy.Field()

company = scrapy.Field()

companyperson = scrapy.Field()

companycategory = scrapy.Field()

companydo = scrapy.Field()

location = scrapy.Field()

address = scrapy.Field()

salary = scrapy.Field()

person = scrapy.Field()

data = scrapy.Field()

request = scrapy.Field()

experience = scrapy.Field()

4. 编辑爬虫解析数据和请求转发

class PingSpider(scrapy.Spider):

name = 'ping'

allowed_domains = ['jobs.51job.com']

start_urls = ['https://jobs.51job.com/zhongshan/p1/']

def parse(self, response):

print(response.url)

url = response.url.split('/')

city = url[len(url) - 3]

page = url[len(url) - 2]

new_page = re.findall(r'\d+',page)[0]

urls = response.xpath('.//div[@class="detlist gbox"]/div/p/span/a/@href').extract()

for url in urls:

yield scrapy.Request(url, callback=self.parse_info)

pages = response.xpath('.//div[@class="p_in"]/span[@class="td"]/text()').get()

pagenum = re.findall(r'\d+',pages)[0]

if int(new_page) < int(pagenum):

new_page = int(new_page) + 1

new_url = "https://jobs.51job.com/" + city + "/p" + str(new_page) + "/"

yield scrapy.Request(new_url, callback=self.parse)

def parse_info(self, response):

pass

item = ZhaopingItem()

item['title'] = response.xpath('//div[@class="in"]/div/h1/@title').get()

item['company'] = response.xpath('//div[@class="in"]/div/p/a[@class="catn"]/@title').get()

company = self.getPerAndCatAndDo(response)

item['companycategory'] = company[0]

item['companyperson'] = company[1]

item['companydo'] = company[2]

salary= response.xpath('//div[@class="tHeader tHjob"]/div/div/strong/text()').get()

if salary is not None:

item['salary'] = salary

else:

item['salary'] = ""

address = response.xpath('//div[@class="tBorderTop_box"]/div[@class="bmsg inbox"]/p/text()').get()

if address is not None:

item['address'] = address

else:

item['address'] = ''

recruit = self.getRequestInfo(response)

item['location'] = recruit[0]

item['experience'] = recruit[1]

item['request'] = recruit[2]

item['person'] = recruit[3]

item['data'] = recruit[4]

print(item)

yield item

def getPerAndCatAndDo(self,response):

result = response.xpath('//div[@class="tBorderTop_box"]/div[@class="com_tag"]/p/@title').extract()

if len(result) <= 2:

companycategory = result[0]

companydo = result[1]

elif len(result) == 3:

companycategory = result[0]

companyperson = re.findall(r'\d+',result[1])[0]

companydo = result[2]

else:

companycategory = result[0]

companyperson = ''

companydo = ''

return [companycategory,companyperson,companydo]

def getRequestInfo(self,response):

result = response.xpath('//div[@class="in"]/div/p[@class="msg ltype"]/text()').getall()

if len(result) >= 5:

location = result[0].replace(u'\xa0', u'')

experience = result[1].replace(u'\xa0', u'')

request = result[2].replace(u'\xa0', u'')

person = result[3].replace(u'\xa0', u'')

data = result[4].replace(u'\xa0', u'')

elif len(result) == 4:

location = result[0].replace(u'\xa0', u'')

experience = result[1].replace(u'\xa0', u'')

request = ''

person = result[2].replace(u'\xa0', u'')

data = result[3].replace(u'\xa0', u'')

return [location,experience,request,person,data]

5. 将爬取的数据保存到mongo中

import pymongo

from scrapy.exporters import JsonLinesItemExporter

class ZhaopingPipeline:

def __init__(self):

client = pymongo.MongoClient(host='127.0.0.1', port=27017)

db = client.ping

self.col = db.ping

def process_item(self,item,spider):

self.col.insert(dict(item))

print('插入成功')

return item

6. 设置配置文件 settings.py

# Obey robots.txt rules

ROBOTSTXT_OBEY = False

ITEM_PIPELINES = {

'zhaoping.pipelines.ZhaopingPipeline': 300,

}

7. 启动爬虫

scrapy crawl ping

8.构建分布式爬虫

pip3 install scrapy-redis -i http://mirrors.aliyun.com/pypi/simple/ --trusted-host mirrors.aliyun.com

- 将爬虫的类 scrapy.Spider 换成 scrapy_redis.spiders.RedisSpider

- 将 start_urls = [‘https://jobs.51job.com/zhongshan/p1/’] 删掉,添加一个 redis_key

class PingSpider(RedisCrawlSpider):

name = 'ping'

allowed_domains = ['jobs.51job.com']

redis_key = "ping:start_url"

DUPEFILTER_CLASS = "scrapy_redis.dupefilter.RFPDupeFilter"

SCHEDULER = "scrapy_redis.scheduler.Scheduler"

SCHEDULER_PERSIST = True

REDIS_HOST = '127.0.0.1'

REDIS_PORT = 6379

lpush ping:start_url https://jobs.51job.com/zhongshan/p1/

- 启动爬虫,就可以在redis中看到爬取的数据了

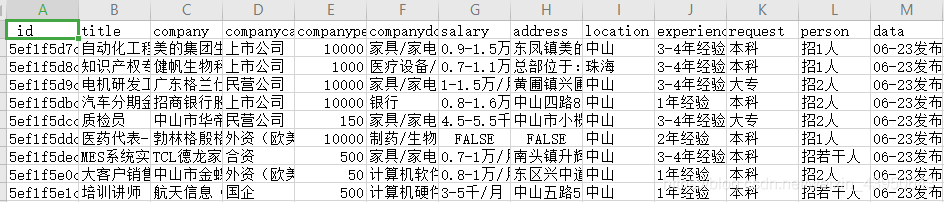

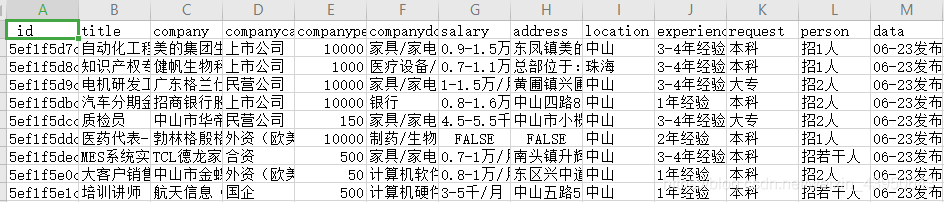

- 爬出的数据如下: