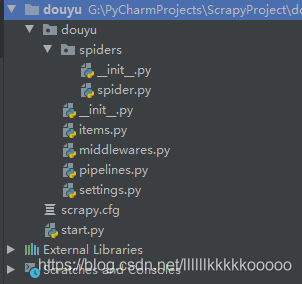

I. Preparation

1. Create the Scrapy project

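```shell
scrapy startproject douyu
cd douyu
scrapy genspider spider "www.douyu.com"
```

This generates `douyu/spiders/spider.py`. Scrapy refuses to create a spider with the same name as the project, so the spider is generated as `spider`. Its `name` attribute is then set to `'douyu'` in the code, and that is the name passed to `scrapy crawl`.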

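2. Create a launcher file, start.py. Running it is equivalent to typing `scrapy crawl douyu` inside the project directory.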

```python
from scrapy import cmdline

cmdline.execute("scrapy crawl douyu".split())
```

II. Analysis

With the setup done, let's start the analysis.

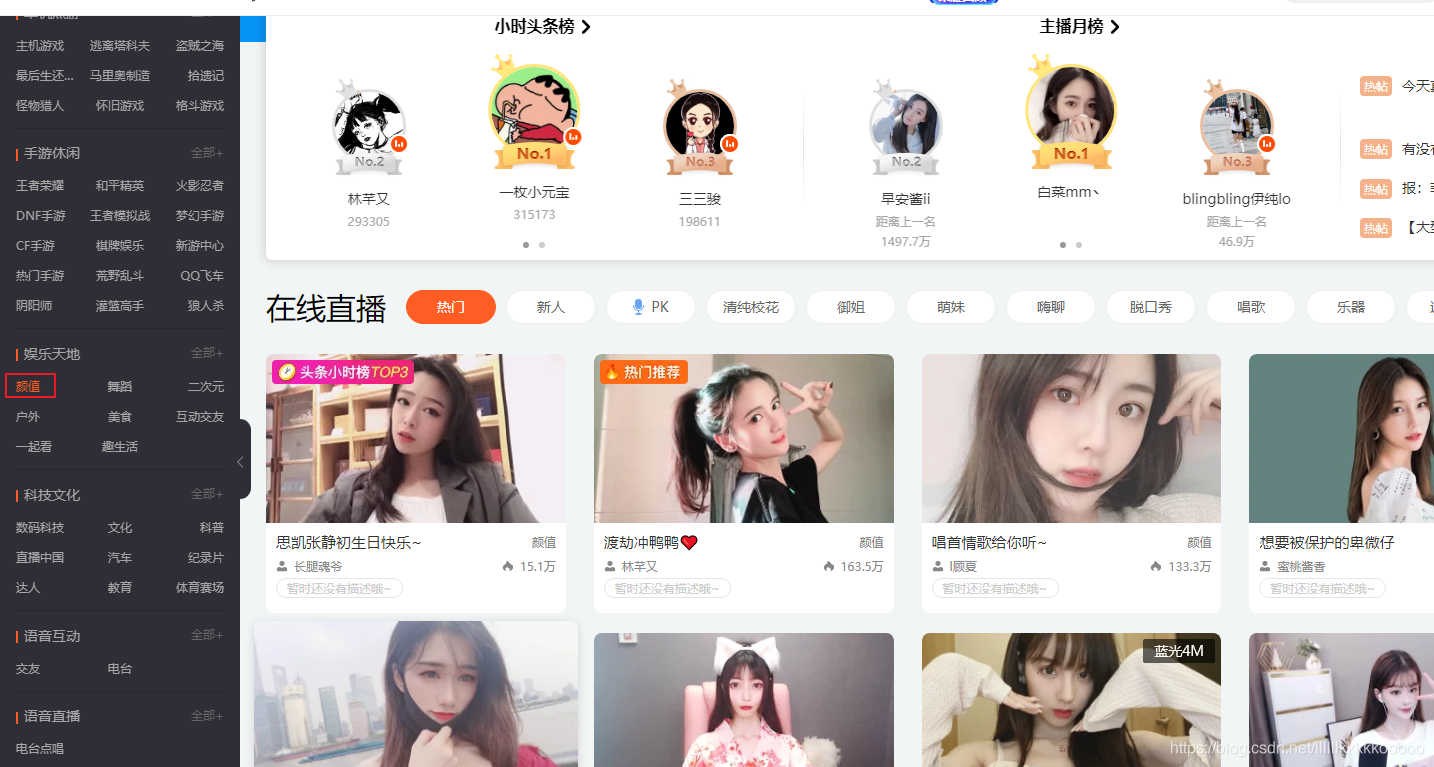

First, open Douyu and take a look.

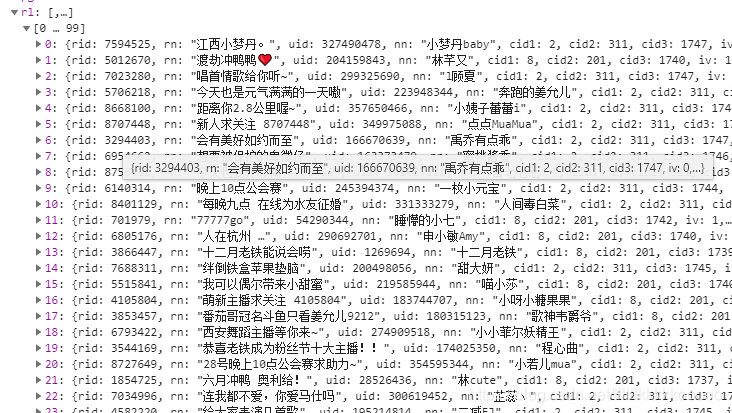

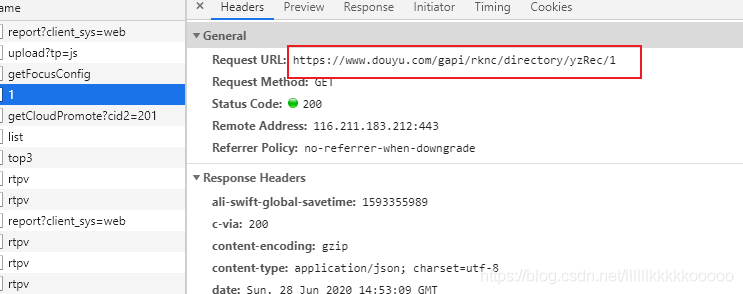

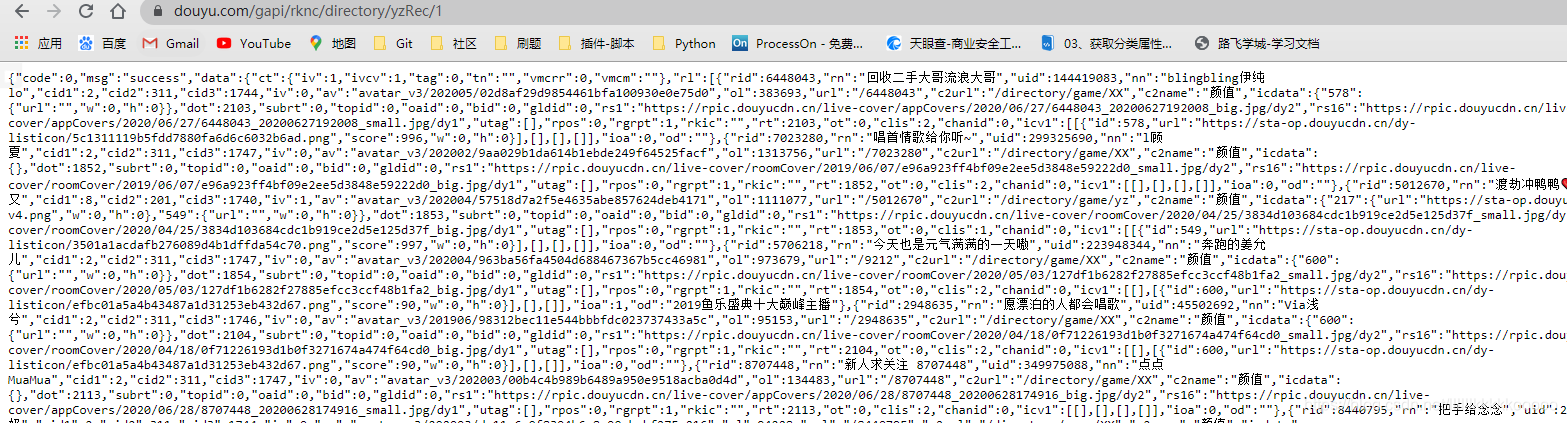

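Find the request that the page sends.

Its response contains all of the data shown on the page.

The request URL is https://www.douyu.com/gapi/rknc/directory/yzRec/1

Open that URL directly: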

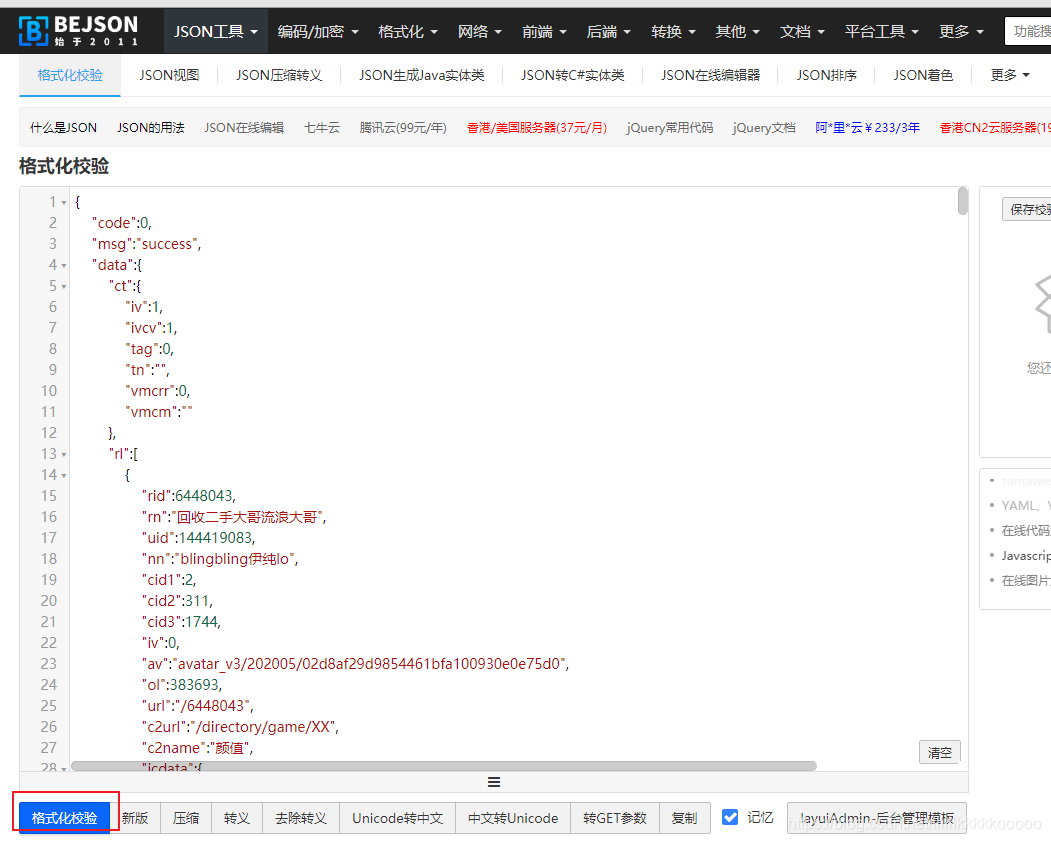

The raw data is hard to read in this form, so copy it into an online JSON formatter/validator.

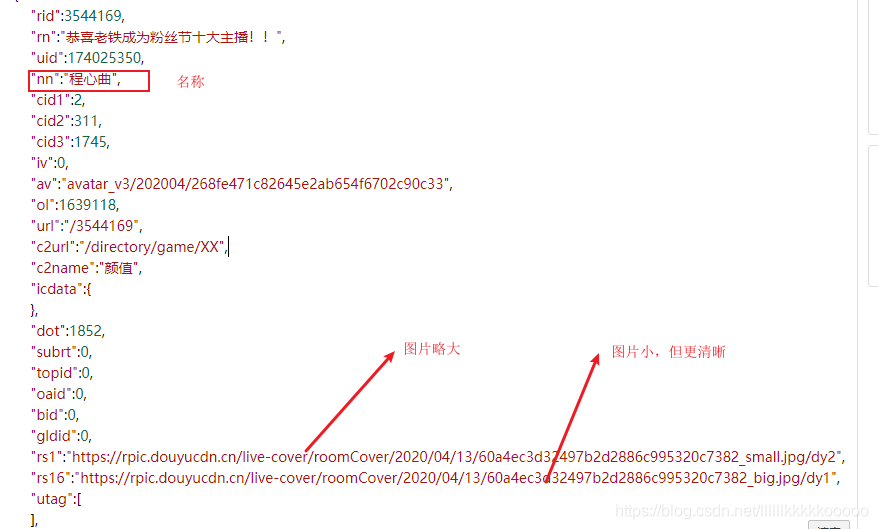
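You can also pretty-print the response locally with Python's standard library instead of an online formatter. This is a minimal sketch. The sample string `raw` and the helper `pretty` are hypothetical. `raw` is a heavily trimmed stand-in shaped like the structure described in this post, not real Douyu data.

```python
import json

# Hypothetical, heavily trimmed sample; a real response contains many more fields.
raw = '{"data": {"rl": [{"nn": "streamer_a", "rs1": "https://example.com/a.jpg"}]}}'


def pretty(raw_json: str) -> str:
    """Parse a JSON string and return it indented for easier reading."""
    # ensure_ascii=False keeps Chinese streamer names readable instead of \uXXXX escapes
    return json.dumps(json.loads(raw_json), ensure_ascii=False, indent=2)


print(pretty(raw))
```

From the command line, `python -m json.tool` formats JSON the same way.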

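In this project we want each streamer's name and image URL. Analyzing the JSON data:

Where the data we need is located: the list of live rooms is under `data` → `rl`. In each entry, `nn` is the streamer name and `rs1` is the image URL.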
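The lookup just described can be tried outside Scrapy on a small sample. This is a minimal sketch. The sample `body` and the helper `extract_rooms` are hypothetical, and the sample contains only the fields this post uses. It mirrors what the spider does with `response.body`.

```python
import json

# Hypothetical sample response body (bytes, like Scrapy's response.body);
# only the fields used in this post are included.
body = b'''{"data": {"rl": [
    {"nn": "streamer_a", "rs1": "https://example.com/a.jpg"},
    {"nn": "streamer_b", "rs1": "https://example.com/b.jpg"}
]}}'''


def extract_rooms(raw: bytes) -> list:
    """Return one {"nn", "img_url"} dict per live room listed under data -> rl."""
    rooms = json.loads(raw)["data"]["rl"]  # json.loads accepts bytes on Python 3.6+
    return [{"nn": room["nn"], "img_url": room["rs1"]} for room in rooms]


for room in extract_rooms(body):
    print(room["nn"], room["img_url"])
```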

OK, next let's walk through the code.

```python
import json

import scrapy

from douyu.items import DouyuItem


class DouyuSpider(scrapy.Spider):
    name = 'douyu'
    # allowed_domains = ['www.douyu.com']
    # Request the API URL we just found directly
    start_urls = ['https://www.douyu.com/gapi/rknc/directory/yzRec/1']
    offset = 1  # page counter that limits how many pages are crawled

    def parse(self, response):
        # From the JSON analysis, names and images are under data -> rl, so iterate over rl
        data_list = json.loads(response.body)["data"]["rl"]
        for data in data_list:
            nn = data["nn"]  # streamer name
            img_url = data["rs1"]  # image URL
            item = DouyuItem(nn=nn, img_url=img_url)
            yield item

        # To crawl more pages, bump the counter and request the next page with the same callback
        self.offset += 1
        if self.offset < 4:  # stops after page 3
            # The page number is the last path segment of the current URL, e.g. .../yzRec/1
            num = int(response.url.split("/")[-1])
            num += 1
            url = "https://www.douyu.com/gapi/rknc/directory/yzRec/" + str(num)
            print(url)
            yield scrapy.Request(url=url, callback=self.parse, encoding="utf-8", dont_filter=True)
```
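The pagination step can also be written as a small pure function that reads the page number from the URL itself, so it no longer depends on a separate counter. This is a sketch, not the author's code. `next_page_url` and `LAST_PAGE` are hypothetical names, and the cap of 3 pages is chosen to match the spider above.

```python
from urllib.parse import urlsplit

BASE_URL = "https://www.douyu.com/gapi/rknc/directory/yzRec/"
LAST_PAGE = 3  # assumption: mirrors the spider above, which stops after page 3


def next_page_url(current_url: str, last_page: int = LAST_PAGE):
    """Return the URL of the next page, or None once last_page has been reached."""
    # The page number is the last path segment, e.g. ".../yzRec/1" -> 1
    page = int(urlsplit(current_url).path.rstrip("/").split("/")[-1])
    if page >= last_page:
        return None
    return BASE_URL + str(page + 1)


print(next_page_url(BASE_URL + "1"))
```

Inside `parse`, this would replace the counter logic with `url = next_page_url(response.url)`, followed by `yield scrapy.Request(url=url, callback=self.parse)` when `url` is not `None`.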

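```python
import scrapy
from scrapy.pipelines.images import ImagesPipeline


class DouyuPipeline(ImagesPipeline):
    # get_media_requests: issue a download request for each item's image
    def get_media_requests(self, item, info):
        image_link = item["img_url"]
        # Pass the streamer name along so it can become the image file name
        image_name = item['nn']
        yield scrapy.Request(image_link, meta={"image_name": image_name})

    # Rename the image (item is a keyword-only argument in newer Scrapy versions)
    def file_path(self, request, response=None, info=None, *, item=None):
        # Get the streamer name passed in through meta
        category = request.meta['image_name']
        # Return the desired file name; it is saved relative to IMAGES_STORE
        return category + ".jpg"
```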
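Using the streamer name directly as a file name can fail if the name contains characters such as `/` or `:`. A `/` would even be treated as a subdirectory. Two streamers with the same name would also overwrite each other's image. Below is a minimal sketch of a hypothetical helper, `safe_image_name`, that replaces characters that are invalid on Windows or act as path separators. The replacement rule is an assumption, not part of the original project.

```python
import os
import re


def safe_image_name(streamer_name: str) -> str:
    """Turn a streamer name into a .jpg file name that is safe on common file systems."""
    # Replace path separators and characters that Windows forbids in file names
    cleaned = re.sub(r'[\\/:*?"<>|]', "_", streamer_name).strip().rstrip(".")
    # Fall back to a placeholder if nothing usable is left
    return (cleaned or "unnamed") + ".jpg"


# IMAGES_STORE in settings.py is "download"; file_path() results are relative to it
print(os.path.join("download", safe_image_name("a/b:c")))
```

In the pipeline, `file_path` would then return `safe_image_name(request.meta['image_name'])`.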

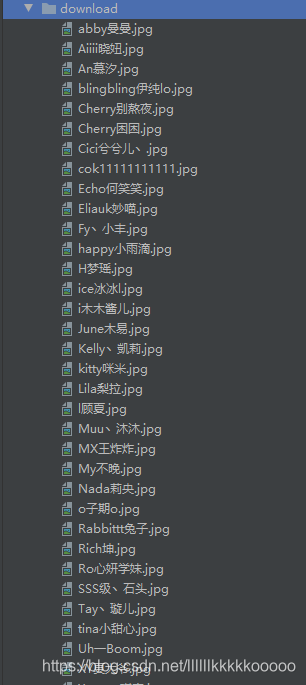

The final result:

III. Complete Code

spider.py

```python
# -*- coding: utf-8 -*-
import json

import scrapy

from douyu.items import DouyuItem


class DouyuSpider(scrapy.Spider):
    name = 'douyu'
    # allowed_domains = ['www.douyu.com']
    start_urls = ['https://www.douyu.com/gapi/rknc/directory/yzRec/1']
    offset = 1

    def parse(self, response):
        data_list = json.loads(response.body)["data"]["rl"]
        for data in data_list:
            nn = data["nn"]
            img_url = data["rs1"]
            item = DouyuItem(nn=nn, img_url=img_url)
            yield item

        self.offset += 1
        if self.offset < 4:
            # Page number = last path segment of the current URL
            num = int(response.url.split("/")[-1])
            num += 1
            url = "https://www.douyu.com/gapi/rknc/directory/yzRec/" + str(num)
            print(url)
            yield scrapy.Request(url=url, callback=self.parse, encoding="utf-8", dont_filter=True)
```

pipelines.py

```python
# -*- coding: utf-8 -*-

# Define your item pipelines here
#
# Don't forget to add your pipeline to the ITEM_PIPELINES setting
# See: https://docs.scrapy.org/en/latest/topics/item-pipeline.html
import os

import scrapy
from scrapy.pipelines.images import ImagesPipeline

from douyu import settings  # only used by the commented-out alternative below


class DouyuPipeline(ImagesPipeline):
    # get_media_requests: issue a download request for each item's image
    def get_media_requests(self, item, info):
        image_link = item["img_url"]
        image_name = item['nn']
        yield scrapy.Request(image_link, meta={"image_name": image_name})

    def file_path(self, request, response=None, info=None, *, item=None):
        # file_name = request.url.split('/')[-1]
        category = request.meta['image_name']
        # images_store = settings.IMAGES_STORE
        # category_path = os.path.join(images_store, category)
        # image_name = os.path.join(category, file_name)
        return category + ".jpg"
```

items.py

```python
# -*- coding: utf-8 -*-

# Define here the models for your scraped items
#
# See documentation in:
# https://docs.scrapy.org/en/latest/topics/items.html

import scrapy


class DouyuItem(scrapy.Item):
    nn = scrapy.Field()  # streamer name
    img_url = scrapy.Field()  # live room cover image URL
```

settings.py

```python
# -*- coding: utf-8 -*-

# Scrapy settings for douyu project
#
# For simplicity, this file contains only settings considered important or
# commonly used. You can find more settings consulting the documentation:
#
#     https://docs.scrapy.org/en/latest/topics/settings.html
#     https://docs.scrapy.org/en/latest/topics/downloader-middleware.html
#     https://docs.scrapy.org/en/latest/topics/spider-middleware.html

BOT_NAME = 'douyu'

SPIDER_MODULES = ['douyu.spiders']
NEWSPIDER_MODULE = 'douyu.spiders'

# Crawl responsibly by identifying yourself (and your website) on the user-agent
#USER_AGENT = 'douyu (+http://www.yourdomain.com)'

# Obey robots.txt rules
ROBOTSTXT_OBEY = False

LOG_LEVEL = "ERROR"

# Configure maximum concurrent requests performed by Scrapy (default: 16)
#CONCURRENT_REQUESTS = 32

# Configure a delay for requests for the same website (default: 0)
# See https://docs.scrapy.org/en/latest/topics/settings.html#download-delay
# See also autothrottle settings and docs
#DOWNLOAD_DELAY = 3
# The download delay setting will honor only one of:
#CONCURRENT_REQUESTS_PER_DOMAIN = 16
#CONCURRENT_REQUESTS_PER_IP = 16

# Disable cookies (enabled by default)
#COOKIES_ENABLED = False

# Disable Telnet Console (enabled by default)
#TELNETCONSOLE_ENABLED = False

# Override the default request headers:
DEFAULT_REQUEST_HEADERS = {
    'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',
    'Accept-Language': 'en',
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/83.0.4103.116 Safari/537.36'
}

# Enable or disable spider middlewares
# See https://docs.scrapy.org/en/latest/topics/spider-middleware.html
#SPIDER_MIDDLEWARES = {
#    'douyu.middlewares.DouyuSpiderMiddleware': 543,
#}

# Enable or disable downloader middlewares
# See https://docs.scrapy.org/en/latest/topics/downloader-middleware.html
#DOWNLOADER_MIDDLEWARES = {
#    'douyu.middlewares.DouyuDownloaderMiddleware': 543,
#}

# Enable or disable extensions
# See https://docs.scrapy.org/en/latest/topics/extensions.html
#EXTENSIONS = {
#    'scrapy.extensions.telnet.TelnetConsole': None,
#}

# Configure item pipelines
# See https://docs.scrapy.org/en/latest/topics/item-pipeline.html
ITEM_PIPELINES = {
    'douyu.pipelines.DouyuPipeline': 300,
}

# Enable and configure the AutoThrottle extension (disabled by default)
# See https://docs.scrapy.org/en/latest/topics/autothrottle.html
#AUTOTHROTTLE_ENABLED = True
# The initial download delay
#AUTOTHROTTLE_START_DELAY = 5
# The maximum download delay to be set in case of high latencies
#AUTOTHROTTLE_MAX_DELAY = 60
# The average number of requests Scrapy should be sending in parallel to
# each remote server
#AUTOTHROTTLE_TARGET_CONCURRENCY = 1.0
# Enable showing throttling stats for every response received:
#AUTOTHROTTLE_DEBUG = False

# Enable and configure HTTP caching (disabled by default)
# See https://docs.scrapy.org/en/latest/topics/downloader-middleware.html#httpcache-middleware-settings
#HTTPCACHE_ENABLED = True
#HTTPCACHE_EXPIRATION_SECS = 0
#HTTPCACHE_DIR = 'httpcache'
#HTTPCACHE_IGNORE_HTTP_CODES = []
#HTTPCACHE_STORAGE = 'scrapy.extensions.httpcache.FilesystemCacheStorage'

# Directory where ImagesPipeline saves downloaded images (ImagesPipeline requires Pillow)
IMAGES_STORE = "download"
```

That wraps up this project. If you found it useful, feel free to like, follow, and bookmark. Thanks, everyone!
