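A super-detailed Hadoop HA (high availability) installation tutorial (^U^)ノ Beginner-friendly and hands-on, with the output you can expect shown at each step! I fell into plenty of pitfalls myself while installing it  ̄□ ̄|| haha, so I'm sharing fixes for some common problems to help you avoid them. Corrections are welcome ━(`∀´)ノ亻!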

Background knowledge

Introduction to HA & Federation

https://editor.csdn.net/md/?articleId=106796456

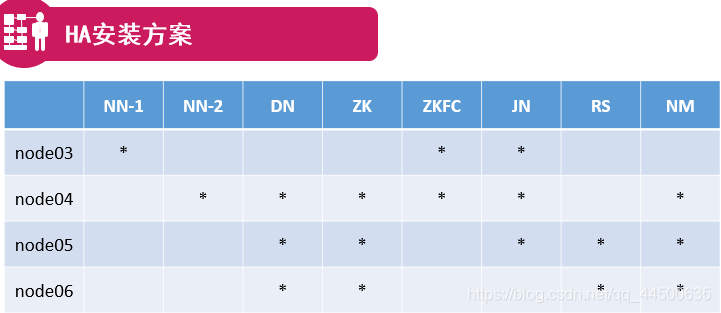

HA installation plan

Next, we will configure the cluster according to this table.

I. Distributing and installing the JDK

1. Distribute the JDK to node02, node03 and node04

Before sending, you can type ll in the session box below to list the files (first select "All Sessions" in the area circled in red).

Run the following on node01:

scp jdk-7u67-linux-x64.rpm node02:`pwd`

scp jdk-7u67-linux-x64.rpm node03:`pwd`

scp jdk-7u67-linux-x64.rpm node04:`pwd`

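Note: the ` character is the backquote key, to the left of the 1 key. Before scp runs, the shell on node01 replaces `pwd` with the current directory (/root here), so the file lands in the same directory on the target node.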
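The three scp commands differ only in the host name, so they can be expressed as a loop. Below is a minimal Python 3.8+ sketch, assuming Python 3 is available on node01 (CentOS 6 itself only ships Python 2.6); `build_scp_commands` and `distribute` are hypothetical helper names, and `os.getcwd()` plays the role of `pwd`. By default it only prints the commands (dry run).

```python
import os
import shlex
import subprocess


# Hypothetical helpers: only the host names and the file name come from the tutorial.
def build_scp_commands(filename, hosts, remote_dir):
    """Return one ["scp", FILE, "HOST:DIR"] argument list per target host."""
    return [["scp", filename, f"{host}:{remote_dir}"] for host in hosts]


def distribute(filename, hosts, remote_dir, dry_run=True):
    """Print the scp commands (dry run) or execute them one after another."""
    for cmd in build_scp_commands(filename, hosts, remote_dir):
        if dry_run:
            print(shlex.join(cmd))
        else:
            # check=True stops at the first failure, e.g. "No route to host"
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    # os.getcwd() does what `pwd` does in the original commands
    distribute("jdk-7u67-linux-x64.rpm", ["node02", "node03", "node04"], os.getcwd())
```

Pass `dry_run=False` to actually copy; scp will still ask for each node's password until passwordless SSH is set up in section IV.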

[root@node01 ~]# scp jdk-7u67-linux-x64.rpm node02:`pwd`

ssh: connect to host node02 port 22: No route to host

lost connection

[root@node01 ~]# scp jdk-7u67-linux-x64.rpm node02:`pwd`

root@node02's password:

jdk-7u67-linux-x64.rpm 100% 121MB 13.4MB/s 00:09

[root@node01 ~]# scp jdk-7u67-linux-x64.rpm node03:`pwd`

The authenticity of host 'node03 (192.168.19.33)' can't be established.

RSA key fingerprint is ca:52:70:e9:6e:d9:7b:0c:dd:25:ac:01:ab:ba:dc:ec.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'node03,192.168.19.33' (RSA) to the list of known hosts.

root@node03's password:

jdk-7u67-linux-x64.rpm 100% 121MB 9.3MB/s 00:13

[root@node01 ~]# scp jdk-7u67-linux-x64.rpm node04:`pwd`

The authenticity of host 'node04 (192.168.19.34)' can't be established.

RSA key fingerprint is ca:52:70:e9:6e:d9:7b:0c:dd:25:ac:01:ab:ba:dc:ec.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'node04,192.168.19.34' (RSA) to the list of known hosts.

root@node04's password:

jdk-7u67-linux-x64.rpm 100% 121MB 9.3MB/s 00:13

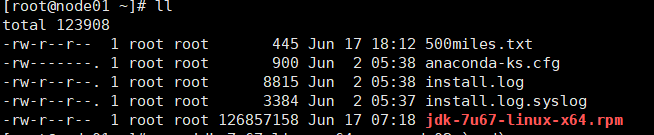

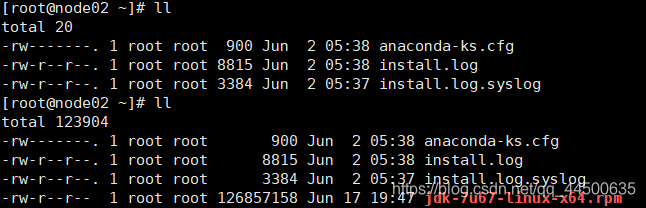

Then run ll in Xshell's "All Sessions" bar to check on every node that the JDK arrived.

node01

node02, node03, node04

2. Install the JDK on node02, node03 and node04

rpm -i jdk-7u67-linux-x64.rpm

[root@node02 ~]# rpm -i jdk-7u67-linux-x64.rpm

Unpacking JAR files...

rt.jar...

jsse.jar...

charsets.jar...

tools.jar...

localedata.jar...

jfxrt.jar...

Installation succeeded.

On node01, cd /etc and distribute the profile file in that directory to node02, 03 and 04:

scp profile node02:`pwd`

Then, in Xshell's "All Sessions" bar, reload the profile on every node:

source /etc/profile

Still in the "All Sessions" bar, run jps to check that the JDK works on node02, node03 and node04.

[root@node01 ~]# cd /etc

[root@node01 etc]# scp profile node02:`pwd`

root@node02's password:

profile 100% 1954 1.9KB/s 00:00

[root@node01 etc]# scp profile node03:`pwd`

root@node03's password:

profile 100% 1954 1.9KB/s 00:00

[root@node01 etc]# scp profile node04:`pwd`

root@node04's password:

profile 100% 1954 1.9KB/s 00:00

jps runs normally on node01, node02, node03 and node04:

[root@node01 etc]# source /etc/profile

[root@node01 etc]# jps

2092 Jps

II. Synchronizing the time on all servers

date shows the machine's current time.

The clocks must not differ too much, otherwise some processes will fail to run after the cluster starts.

What if the clocks are out of sync?

1. Install the NTP time-synchronization tool with yum

yum -y install ntp

[root@node01 etc]# yum -y install ntp

Loaded plugins: fastestmirror

base | 3.7 kB 00:00

base/primary_db | 4.7 MB 00:00

extras | 3.4 kB 00:00

extras/primary_db | 29 kB 00:00

updates | 3.4 kB 00:00

updates/primary_db | 10 MB 00:01

Setting up Install Process

Resolving Dependencies

--> Running transaction check

---> Package ntp.x86_64 0:4.2.6p5-15.el6.centos will be installed

--> Processing Dependency: ntpdate = 4.2.6p5-15.el6.centos for package: ntp-4.2.6p5-15.el6.centos.x86_64

--> Running transaction check

---> Package ntpdate.x86_64 0:4.2.6p5-15.el6.centos will be installed

--> Finished Dependency Resolution

Dependencies Resolved

===================================================================================================

Package Arch Version Repository Size

===================================================================================================

Installing:

ntp x86_64 4.2.6p5-15.el6.centos updates 600 k

Installing for dependencies:

ntpdate x86_64 4.2.6p5-15.el6.centos updates 79 k

Transaction Summary

===================================================================================================

Install 2 Package(s)

Total download size: 679 k

Installed size: 1.8 M

Downloading Packages:

(1/2): ntp-4.2.6p5-15.el6.centos.x86_64.rpm | 600 kB 00:00

(2/2): ntpdate-4.2.6p5-15.el6.centos.x86_64.rpm | 79 kB 00:00

---------------------------------------------------------------------------------------------------

Total 1.2 MB/s | 679 kB 00:00

warning: rpmts_HdrFromFdno: Header V3 RSA/SHA1 Signature, key ID c105b9de: NOKEY

Retrieving key from file:///etc/pki/rpm-gpg/RPM-GPG-KEY-CentOS-6

Importing GPG key 0xC105B9DE:

Userid : CentOS-6 Key (CentOS 6 Official Signing Key) <centos-6-key@centos.org>

Package: centos-release-6-5.el6.centos.11.1.x86_64 (@anaconda-CentOS-201311272149.x86_64/6.5)

From : /etc/pki/rpm-gpg/RPM-GPG-KEY-CentOS-6

Running rpm_check_debug

Running Transaction Test

Transaction Test Succeeded

Running Transaction

Warning: RPMDB altered outside of yum.

Installing : ntpdate-4.2.6p5-15.el6.centos.x86_64 1/2

Installing : ntp-4.2.6p5-15.el6.centos.x86_64 2/2

Verifying : ntpdate-4.2.6p5-15.el6.centos.x86_64 1/2

Verifying : ntp-4.2.6p5-15.el6.centos.x86_64 2/2

Installed:

ntp.x86_64 0:4.2.6p5-15.el6.centos

Dependency Installed:

ntpdate.x86_64 0:4.2.6p5-15.el6.centos

Complete!

2. Run the synchronization command

ntpdate time1.aliyun.com synchronizes the clock with Aliyun's time server:

ntpdate time1.aliyun.com

[root@node01 etc]# ntpdate time1.aliyun.com

17 Jun 12:25:11 ntpdate[2132]: step time server 203.107.6.88 offset -23943.713789 sec
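The "offset" field in the ntpdate line is how far the clock was off, in seconds: -23943.713789 s here, roughly 6.65 hours. A minimal Python 3 sketch that extracts it, assuming ntpdate's "offset <seconds> sec" wording shown above; `parse_ntpdate_offset`, `needs_sync` and the 1-second tolerance are hypothetical choices for illustration, not a Hadoop requirement.

```python
import re

# Matches the "offset <seconds> sec" part of an ntpdate result line
OFFSET_RE = re.compile(r"offset\s+(-?\d+(?:\.\d+)?)\s+sec")

SAMPLE = ("17 Jun 12:25:11 ntpdate[2132]: step time server 203.107.6.88 "
          "offset -23943.713789 sec")


def parse_ntpdate_offset(line):
    """Return the clock offset in seconds reported by ntpdate."""
    match = OFFSET_RE.search(line)
    if match is None:
        raise ValueError(f"no offset found in {line!r}")
    return float(match.group(1))


def needs_sync(offset_sec, tolerance_sec=1.0):
    """True if the clock is off by more than the (assumed) tolerance."""
    return abs(offset_sec) > tolerance_sec


if __name__ == "__main__":
    offset = parse_ntpdate_offset(SAMPLE)
    print(f"offset {offset} s = {offset / 3600:.2f} h, needs sync: {needs_sync(offset)}")
```

Running ntpdate a second time right after a sync should report a small offset, which makes a quick confirmation that the step worked.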

III. Checking configuration files before installation

1. cat /etc/sysconfig/network

Check that HOSTNAME is correct.

cat /etc/sysconfig/network

[root@node01 ~]# cat /etc/sysconfig/network

NETWORKING=yes

HOSTNAME=node01

2. cat /etc/hosts

Check that the IP mappings are correct.

cat /etc/hosts

[root@node01 ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.19.31 node01

192.168.19.32 node02

192.168.19.33 node03

192.168.19.34 node04

vi /etc/hosts

If they are wrong, edit the file with vi /etc/hosts, or copy node01's file to the other nodes with scp.
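With four nodes it is easy to miss one wrong line. A minimal Python 3 sketch that compares an /etc/hosts text against the mapping above; `parse_hosts`, `hosts_mismatches` and `EXPECTED` are hypothetical names, and the IPs are the ones from this tutorial, so adjust them to your own network.

```python
SAMPLE_HOSTS = """\
127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4
::1 localhost localhost.localdomain localhost6 localhost6.localdomain6
192.168.19.31 node01
192.168.19.32 node02
192.168.19.33 node03
192.168.19.34 node04
"""

# Expected mapping, taken from the tutorial's /etc/hosts
EXPECTED = {
    "node01": "192.168.19.31",
    "node02": "192.168.19.32",
    "node03": "192.168.19.33",
    "node04": "192.168.19.34",
}


def parse_hosts(text):
    """Map each host name to the first IP address it appears with."""
    mapping = {}
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and blank lines
        if not line:
            continue
        ip, *names = line.split()
        for name in names:
            mapping.setdefault(name, ip)
    return mapping


def hosts_mismatches(text, expected=EXPECTED):
    """Return {name: (expected_ip, actual_ip_or_None)} for each wrong or missing entry."""
    actual = parse_hosts(text)
    return {name: (ip, actual.get(name))
            for name, ip in expected.items() if actual.get(name) != ip}


if __name__ == "__main__":
    print(hosts_mismatches(SAMPLE_HOSTS) or "all node mappings OK")
```

On a real node you would pass `open("/etc/hosts").read()` instead of the sample text; an empty result means every node resolves as planned.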

3. Check that /etc/sysconfig/selinux contains

SELINUX=disabled

cat /etc/sysconfig/selinux

[root@node01 ~]# cat /etc/sysconfig/selinux

# This file controls the state of SELinux on the system.

# SELINUX= can take one of these three values:

# enforcing - SELinux security policy is enforced.

# permissive - SELinux prints warnings instead of enforcing.

# disabled - No SELinux policy is loaded.

SELINUX=disabled

# SELINUXTYPE= can take one of these two values:

# targeted - Targeted processes are protected,

# mls - Multi Level Security protection.

SELINUXTYPE=targeted

4. Check that the firewall is off with service iptables status

service iptables status

[root@node01 ~]# service iptables status

iptables: Firewall is not running.
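Checks 3 and 4 can be combined into one pre-flight test. A minimal Python 3 sketch, assuming the CentOS 6 wording "iptables: Firewall is not running." shown above; `selinux_mode`, `firewall_stopped` and `preflight` are hypothetical helper names that work on text you read from /etc/sysconfig/selinux and on the captured output of service iptables status.

```python
def selinux_mode(config_text):
    """Return the value of the SELINUX= line, or None if there is none."""
    for raw in config_text.splitlines():
        line = raw.strip()
        # does not match "SELINUXTYPE=" or the "# SELINUX= ..." comment lines
        if line.startswith("SELINUX="):
            return line.split("=", 1)[1].strip()
    return None


def firewall_stopped(status_output):
    """True if `service iptables status` reported that iptables is off (CentOS 6 wording)."""
    return "Firewall is not running" in status_output


def preflight(config_text, status_output):
    """Return a list of problems; an empty list means both checks passed."""
    problems = []
    if selinux_mode(config_text) != "disabled":
        problems.append("SELINUX is not disabled")
    if not firewall_stopped(status_output):
        problems.append("iptables is still running")
    return problems


if __name__ == "__main__":
    sample_config = "# SELINUX= can take one of these three values:\nSELINUX=disabled\n"
    print(preflight(sample_config, "iptables: Firewall is not running.") or "pre-flight OK")
```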

IV. Passwordless SSH from the NN (node01) to the other three machines

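1. In the home directory, run ll -a to see whether a .ssh directory exists. If it does not, run ssh localhost once, which creates it

(only a real dummy forgets to exit after ssh localhost).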

[root@node01 ~]# ll -a

total 123944

dr-xr-x---. 3 root root 4096 Jun 17 2020 .

dr-xr-xr-x. 22 root root 4096 Jun 17 2020 ..

-rw-r--r-- 1 root root 445 Jun 17 2020 500miles.txt

-rw-------. 1 root root 900 Jun 2 05:38 anaconda-ks.cfg

-rw-------. 1 root root 2858 Jun 17 12:48 .bash_history

-rw-r--r--. 1 root root 18 May 20 2009 .bash_logout

-rw-r--r--. 1 root root 176 May 20 2009 .bash_profile

-rw-r--r--. 1 root root 176 Sep 23 2004 .bashrc

-rw-r--r--. 1 root root 100 Sep 23 2004 .cshrc

-rw-r--r--. 1 root root 8815 Jun 2 05:38 install.log

-rw-r--r--. 1 root root 3384 Jun 2 05:37 install.log.syslog

-rw-r--r-- 1 root root 126857158 Jun 17 07:18 jdk-7u67-linux-x64.rpm

drwx------ 2 root root 4096 Jun 17 07:55 .ssh

-rw-r--r--. 1 root root 129 Dec 4 2004 .tcshrc

2. cd .ssh and list its contents with ll

[root@node01 ~]# cd .ssh

[root@node01 .ssh]# ll

total 16

-rw-r--r-- 1 root root 601 Jun 17 07:55 authorized_keys

-rw------- 1 root root 672 Jun 17 07:52 id_dsa

-rw-r--r-- 1 root root 601 Jun 17 07:52 id_dsa.pub

-rw-r--r-- 1 root root 1999 Jun 17 2020 known_hosts

3. Send node01's public key to the other three machines

scp id_dsa.pub node02:`pwd`/node01.pub

scp id_dsa.pub node03:`pwd`/node01.pub

scp id_dsa.pub node04:`pwd`/node01.pub

node02: the machine the public key is sent to

node01.pub: the name node01's public key is saved under on the target

(If you get the "No such file or directory" error shown below, node02 probably has no .ssh directory yet; create it with mkdir .ssh. The same goes for node03 and node04.)

[root@node01 .ssh]# scp id_dsa.pub node02:`pwd`/node01.pub

root@node02's password:

scp: /root/.ssh/node01.pub: No such file or directory

[root@node01 .ssh]# scp id_dsa.pub node02:`pwd`/node01.pub

root@node02's password:

id_dsa.pub 100% 601 0.6KB/s 00:00

[root@node01 .ssh]# scp id_dsa.pub node03:`pwd`/node01.pub

root@node03's password:

id_dsa.pub 100% 601 0.6KB/s 00:00

[root@node01 .ssh]# scp id_dsa.pub node04:`pwd`/node01.pub

root@node04's password:

id_dsa.pub 100% 601 0.6KB/s 00:00

Now check on node02, node03 and node04:

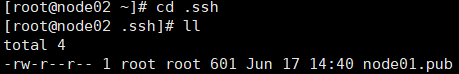

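4. Check that node01.pub is in node02's .ssh directory

If it is, append it to authorized_keys:

cat node01.pub >> authorized_keys

Then, from node01, run ssh node02 to check that no password is asked for.

Remember to exit afterwards.

Append node01.pub on node03 and node04 as well, i.e. run cat node01.pub >> authorized_keys in the .ssh directory of each.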
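`cat node01.pub >> authorized_keys` appends blindly, so repeating the step (easy to do across several Xshell sessions) leaves duplicate lines. A minimal Python 3 sketch of an idempotent append; `append_key` is a hypothetical helper name, and the key text in the demo is a placeholder, not a real key. It also sets the file mode to 600, because sshd's StrictModes check refuses key files that are group- or world-writable.

```python
import os
import tempfile
from pathlib import Path


def append_key(pub_key_path, authorized_keys_path):
    """Append the public key to authorized_keys unless it is already there.

    Returns True if the key was added, False if it was already present.
    """
    key = Path(pub_key_path).read_text().strip()
    auth = Path(authorized_keys_path)
    current = auth.read_text() if auth.exists() else ""
    if key in (line.strip() for line in current.splitlines()):
        return False  # running `cat ... >>` again would only add a duplicate line
    # make sure the new key starts on its own line
    prefix = "" if current == "" or current.endswith("\n") else "\n"
    with auth.open("a") as f:
        f.write(prefix + key + "\n")
    # sshd (StrictModes) ignores group- or world-writable key files
    os.chmod(auth, 0o600)
    return True


if __name__ == "__main__":
    # Demo in a scratch directory so the real ~/.ssh is not touched
    with tempfile.TemporaryDirectory() as d:
        pub = Path(d, "node01.pub")
        pub.write_text("ssh-dss AAAA-placeholder root@node01\n")
        auth = Path(d, "authorized_keys")
        print(append_key(pub, auth), append_key(pub, auth))
```

On node02 the real call would be `append_key("/root/.ssh/node01.pub", "/root/.ssh/authorized_keys")`.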

5. Likewise, send the public key to the other nodes and append node01's key on each of them

scp id_dsa.pub node03:`pwd`/node01.pub

scp id_dsa.pub node04:`pwd`/node01.pub

From node01, run ssh node02, ssh node03 and ssh node04 in turn to confirm you can log in without a password. Don't forget to exit after each one.

[root@node01 .ssh]# ssh node02

Last login: Wed Jun 17 13:09:05 2020 from 192.168.19.1

[root@node02 ~]# exit

logout

Connection to node02 closed.

[root@node01 .ssh]# ssh node03

Last login: Wed Jun 17 13:09:18 2020 from 192.168.19.1

[root@node03 ~]# exit

logout

Connection to node03 closed.

[root@node01 .ssh]# ssh node04

Last login: Wed Jun 17 13:09:31 2020 from 192.168.19.1

[root@node04 ~]# exit

logout

Connection to node04 closed.

OK, node01 can now log in to the other three VMs without a password.

V. Mutual passwordless SSH between the two NNs

Passwordless SSH in both directions between node01 and node02

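node01 can already log in to node02 without a password; now node02 must also be able to log in to node01 without one, because during a failover each NameNode fences the other over SSH (the sshfence method configured in section VI).

So: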

On node02:

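Work inside node02's .ssh directory. (I ran these steps many times without success before realizing I had to be in .ssh: the scp step below uses the relative path id_dsa.pub and `pwd`. Hope you don't repeat my mistake (/ω\))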

ssh-keygen -t dsa -P '' -f ~/.ssh/id_dsa

cat ~/.ssh/id_dsa.pub >> ~/.ssh/authorized_keys

Verify with ssh localhost (remember to exit).

Send node02's public key to node01, renaming it node02.pub:

scp id_dsa.pub node01:`pwd`/node02.pub

On node01:

In node01's .ssh directory:

cat node02.pub >> authorized_keys

Finally, on node02, run ssh node01 to verify that the login is passwordless.

Complete session on node02:

[root@node02 .ssh]# ssh-keygen -t dsa -P '' -f ~/.ssh/id_dsa

Generating public/private dsa key pair.

/root/.ssh/id_dsa already exists.

Overwrite (y/n)? y

Your identification has been saved in /root/.ssh/id_dsa.

Your public key has been saved in /root/.ssh/id_dsa.pub.

The key fingerprint is:

95:3c:7b:e4:69:12:64:cf:f4:31:86:9b:a4:95:f0:a9 root@node02

The key's randomart image is:

+--[ DSA 1024]----+

| +..o+ |

| + *=+ o |

| *+*o. |

| ..Bo. |

| S E = |

| + |

| |

| |

| |

+-----------------+

[root@node02 .ssh]# cat ~/.ssh/id_dsa.pub >> ~/.ssh/authorized_keys

[root@node02 .ssh]# ssh localhost

Last login: Wed Jun 17 15:26:06 2020 from localhost

[root@node02 ~]# exit

logout

Connection to localhost closed.

[root@node02 .ssh]# scp id_dsa.pub node01:`pwd`/node02.pub

root@node01's password:

id_dsa.pub 100% 601 0.6KB/s 00:00

[root@node02 .ssh]# ssh node01

Last login: Wed Jun 17 13:08:51 2020 from 192.168.19.1

[root@node01 ~]# exit

logout

Connection to node01 closed.

[root@node02 .ssh]#
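Logging in and typing exit by hand works, but the check can also be done non-interactively. A minimal Python 3 sketch built on two real OpenSSH options: `BatchMode=yes` disables password prompts so ssh fails instead of waiting, and `ConnectTimeout` keeps an unreachable host from hanging. Assumptions: each host is already in known_hosts (BatchMode also suppresses the yes/no host-key prompt seen earlier, so an unknown host counts as a failure), and `ssh_check_command`, `check_passwordless` and the script name check_ssh.py are hypothetical.

```python
import subprocess
import sys


def ssh_check_command(host):
    """ssh arguments that succeed only if key-based login to `host` works."""
    return ["ssh", "-o", "BatchMode=yes", "-o", "ConnectTimeout=5", host, "true"]


def check_passwordless(hosts, run=subprocess.run):
    """Return {host: True/False}; `run` is injectable so the logic can be tested offline."""
    results = {}
    for host in hosts:
        proc = run(ssh_check_command(host),
                   stdout=subprocess.DEVNULL, stderr=subprocess.DEVNULL)
        # the remote command `true` exits 0, so 0 means the login itself succeeded
        results[host] = proc.returncode == 0
    return results


if __name__ == "__main__" and len(sys.argv) > 1:
    # e.g. on node02:  python3 check_ssh.py node01
    for host, ok in check_passwordless(sys.argv[1:]).items():
        print(f"{host}: {'passwordless OK' if ok else 'FAILED'}")
```

From node01 you would pass node02 node03 node04; from node02, node01 is enough.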

VI. Modifying the NameNode configuration

1. vi hdfs-site.xml

In the /opt/ll/hadoop-2.6.5/etc/hadoop directory (cd there first):

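Remove the SecondaryNameNode (SNN) configuration; in HA mode the standby NameNode takes over its checkpointing job: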

<property>

<name>dfs.namenode.secondary.http-address</name>

<value>node01:50090</value>

</property>

Add the following properties.

Define a nameservice ID for the NameNode cluster:

<property>

<name>dfs.nameservices</name>

<value>mycluster</value>

</property>

<!-- Number of HDFS replicas; should not exceed the number of DataNodes -->

<property>

<name>dfs.replication</name>

<value>3</value>

</property>

<!-- NameNodes that belong to the nameservice, each given an ID -->

<property>

<name>dfs.ha.namenodes.mycluster</name>

<value>nn1,nn2</value>

</property>

<!-- RPC address and port of the NameNode named nn1; RPC is used to communicate with the DataNodes -->

<property>

<name>dfs.namenode.rpc-address.mycluster.nn1</name>

<value>node01:8020</value>

</property>

<!-- HTTP address and port of the NameNode named nn1, used by web clients -->

<property>

<name>dfs.namenode.http-address.mycluster.nn1</name>

<value>node01:50070</value>

</property>

<!-- RPC address and port of the NameNode named nn2; RPC is used to communicate with the DataNodes -->

<property>

<name>dfs.namenode.rpc-address.mycluster.nn2</name>

<value>node02:8020</value>

</property>

<!-- HTTP address and port of the NameNode named nn2, used by web clients -->

<property>

<name>dfs.namenode.http-address.mycluster.nn2</name>

<value>node02:50070</value>

</property>

<!-- JournalNodes through which the NameNodes share the edit log -->

<property>

<name>dfs.namenode.shared.edits.dir</name>

<value>qjournal://node01:8485;node02:8485;node03:8485/mycluster</value>

</property>

<!-- Directory on each JournalNode where the edits are stored -->

<property>

<name>dfs.journalnode.edits.dir</name>

<value>/var/ll/hadoop/ha/jn</value>

</property>

</property>

<!-- Whether to fail over to the other NameNode automatically when the active one fails -->

<property>

<name>dfs.ha.automatic-failover.enabled</name>

<value>true</value>

</property>

<!-- Proxy class HDFS clients use to find the currently active NameNode -->

<property>

<name>dfs.client.failover.proxy.provider.mycluster</name>

<value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>

</property>

<!-- During failover, fence the previously active NameNode over SSH -->

<property>

<name>dfs.ha.fencing.methods</name>

<value>sshfence</value>

</property>

<!-- Private key sshfence uses to log in to the other NameNode (the DSA key generated earlier) -->

<property>

<name>dfs.ha.fencing.ssh.private-key-files</name>

<value>/root/.ssh/id_dsa</value>

</property>

The complete file looks like this:

<configuration>

<property>

<name>dfs.nameservices</name>

<value>mycluster</value>

</property>

<property>

<name>dfs.ha.namenodes.mycluster</name>

<value>nn1,nn2</value>

</property>

<property>

<name>dfs.namenode.rpc-address.mycluster.nn1</name>

<value>node01:8020</value>

</property>

<property>

<name>dfs.namenode.rpc-address.mycluster.nn2</name>

<value>node02:8020</value>

</property>

<property>

<name>dfs.namenode.http-address.mycluster.nn1</name>

<value>node01:50070</value>

</property>

<property>

<name>dfs.namenode.http-address.mycluster.nn2</name>

<value>node02:50070</value>

</property>

<property>

<name>dfs.namenode.shared.edits.dir</name>

<value>qjournal://node01:8485;node02:8485;node03:8485/mycluster</value>

</property>

<property>

<name>dfs.journalnode.edits.dir</name>

<value>/var/ll/hadoop/ha/jn</value>

</property>

<property>

<name>dfs.client.failover.proxy.provider.mycluster</name>

<value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>

</property>

<property>

<name>dfs.ha.fencing.methods</name>

<value>sshfence</value>

</property>

<property>

<name>dfs.ha.fencing.ssh.private-key-files</name>

<value>/root/.ssh/id_dsa</value>

</property>

<property>

<name>dfs.ha.automatic-failover.enabled</name>

<value>true</value>

</property>

<property>

<name>dfs.replication</name>

<value>3</value>

</property>

</configuration>

Note: the "ll" in /var/ll/hadoop/ha/jn is a directory named after the author; replace it with your own name.
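Typical mistakes in this file are stray spaces inside property names (for example `dfs.ha.namenodes. mycluster`) and keys whose suffix does not match the nameservice ID. A minimal Python 3 sketch that catches them with the standard-library XML parser; `load_properties` and `check_ha_config` are hypothetical names, and the checks cover only the keys used in this tutorial, not every rule Hadoop enforces.

```python
import sys
import xml.etree.ElementTree as ET


def load_properties(xml_text):
    """Return {name: value} for every <property> in a Hadoop *-site.xml."""
    root = ET.fromstring(xml_text)
    return {
        (prop.findtext("name") or ""): (prop.findtext("value") or "")
        for prop in root.iter("property")
    }


def check_ha_config(props):
    """Return a list of problems in an HDFS HA hdfs-site.xml (empty list = looks OK)."""
    problems = [f"whitespace in property name: {name!r}"
                for name in props if any(ch.isspace() for ch in name)]
    ns = props.get("dfs.nameservices", "").strip()
    if not ns:
        return problems + ["dfs.nameservices is not set"]
    nn_ids = [n.strip() for n in props.get(f"dfs.ha.namenodes.{ns}", "").split(",") if n.strip()]
    if len(nn_ids) < 2:
        problems.append(f"dfs.ha.namenodes.{ns} should list at least two NameNode IDs")
    # every NameNode ID needs its own RPC and HTTP address
    for nn in nn_ids:
        for kind in ("rpc-address", "http-address"):
            key = f"dfs.namenode.{kind}.{ns}.{nn}"
            if key not in props:
                problems.append(f"missing {key}")
    if f"dfs.client.failover.proxy.provider.{ns}" not in props:
        problems.append(f"missing dfs.client.failover.proxy.provider.{ns}")
    if not props.get("dfs.namenode.shared.edits.dir", "").startswith("qjournal://"):
        problems.append("dfs.namenode.shared.edits.dir should be a qjournal:// URI")
    return problems


if __name__ == "__main__" and len(sys.argv) > 1:
    # usage (hypothetical script name): python3 check_hdfs_site.py hdfs-site.xml
    with open(sys.argv[1]) as f:
        for problem in check_ha_config(load_properties(f.read())) or ["no problems found"]:
            print(problem)
```

A malformed comment such as `<!–` (with an en dash) makes `ET.fromstring` raise a parse error, which is another useful early warning.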

2. vi core-site.xml

In the /opt/ll/hadoop-2.6.5/etc/hadoop directory (cd there first):

<!-- Cluster (nameservice) name: mycluster -->

<property>

<name>fs.defaultFS</name>

<value>hdfs://mycluster</value>

</property>

<!-- Hosts and ports where ZooKeeper is deployed -->

<property>

<name>ha.zookeeper.quorum</name>

<value>node02:2181,node03:2181,node04:2181</value>

</property>
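fs.defaultFS must point at the nameservice ID from hdfs-site.xml (hdfs://mycluster, with no port); a single NameNode's host:port there would bypass failover. A minimal Python 3 sketch of both core-site.xml checks; `default_fs_uses_nameservice`, `parse_zk_quorum` and `zk_tolerated_failures` are hypothetical names, and defaulting a missing port to 2181 is an assumption based on ZooKeeper's conventional client port.

```python
from urllib.parse import urlparse

ZK_DEFAULT_CLIENT_PORT = 2181  # assumed default when a quorum entry has no port


def default_fs_uses_nameservice(default_fs, nameservice):
    """True if fs.defaultFS is exactly hdfs://<nameservice> (no single-NameNode host:port)."""
    uri = urlparse(default_fs)
    return uri.scheme == "hdfs" and uri.netloc == nameservice


def parse_zk_quorum(value):
    """Turn 'node02:2181,node03:2181' into [('node02', 2181), ('node03', 2181)]."""
    servers = []
    for item in value.split(","):
        host, sep, port = item.strip().partition(":")
        servers.append((host, int(port) if sep else ZK_DEFAULT_CLIENT_PORT))
    return servers


def zk_tolerated_failures(ensemble_size):
    """A ZooKeeper ensemble keeps working while a majority is up: floor((n - 1) / 2) failures."""
    return (ensemble_size - 1) // 2


if __name__ == "__main__":
    quorum = parse_zk_quorum("node02:2181,node03:2181,node04:2181")
    print(default_fs_uses_nameservice("hdfs://mycluster", "mycluster"),
          quorum, zk_tolerated_failures(len(quorum)))
```

With three ZooKeeper servers the cluster survives one ZooKeeper failure; a fourth server would not raise that number, which is why odd ensemble sizes are the usual choice.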

3. vi slaves (list the DataNode hosts)

node02

node03

node04

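4. Install Hadoop on the other nodes

cd /opt and distribute the ll directory under it to node02, 03 and 04.

This copies a lot of data, so it takes a while.

scp -r ll/ node02:`pwd`

scp -r ll/ node03:`pwd`

scp -r ll/ node04:`pwd`

Part of the output of a successful transfer: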

yarn.cmd 100% 11KB 10.6KB/s 00:00

mapred 100% 5205 5.1KB/s 00:00

hadoop.cmd 100% 8298 8.1KB/s 00:00

test-container-executor 100% 121KB 121.4KB/s 00:00

mapred.cmd 100% 5949 5.8KB/s 00:00

hadoop 100% 5479 5.4KB/s 00:00

rcc 100% 1776 1.7KB/s 00:00

hadoop-root-datanode-node01.log 100% 26KB 26.0KB/s 00:00

hadoop-root-namenode-node01.out 100% 4908 4.8KB/s 00:00

SecurityAuth-root.audit 100% 0 0.0KB/s 00:00

hadoop-root-secondarynamenode-node01.log 100% 23KB 23.4KB/s 00:00

hadoop-root-secondarynamenode-node01.out 100% 715 0.7KB/s 00:00

hadoop-root-datanode-node01.out 100% 715 0.7KB/s 00:00

hadoop-root-namenode-node01.log 100% 29KB 29.5KB/s 00:00

README.txt 100% 1366 1.3KB/s 00:00

hadoop-2.6.5.tar.gz 100% 175MB 5.3MB/s 00:33

5. Distribute hdfs-site.xml and core-site.xml to node02, 03 and 04

In the /opt/ll/hadoop-2.6.5/etc/hadoop directory (cd there first):

scp hdfs-site.xml core-site.xml node02:`pwd`

scp hdfs-site.xml core-site.xml node03:`pwd`

scp hdfs-site.xml core-site.xml node04:`pwd`

[root@node01 ~]# cd /opt/ll/hadoop-2.6.5/etc/hadoop

[root@node01 hadoop]# scp hdfs-site.xml core-site.xml node02:`pwd`

hdfs-site.xml 100% 2305 2.3KB/s 00:00

core-site.xml 100% 1012 1.0KB/s 00:00

[root@node01 hadoop]# scp hdfs-site.xml core-site.xml node03:`pwd`

hdfs-site.xml 100% 2305 2.3KB/s 00:00

core-site.xml 100% 1012 1.0KB/s 00:00

[root@node01 hadoop]# scp hdfs-site.xml core-site.xml node04:`pwd`

hdfs-site.xml 100% 2305 2.3KB/s 00:00

core-site.xml 100% 1012 1.0KB/s 00:00
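A "100%" line from scp only says the copy finished; comparing checksums confirms every node really has the same configuration. A minimal Python 3 sketch, assuming passwordless SSH from node01 (section IV) and GNU md5sum on the nodes; `local_md5`, `parse_md5sum` and `config_mismatches` are hypothetical names.

```python
import hashlib
import subprocess


def local_md5(path):
    """MD5 hex digest of a local file, read in chunks."""
    digest = hashlib.md5()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def parse_md5sum(output):
    """Parse GNU md5sum output ('<hash>  <path>' per line) into {path: hash}."""
    result = {}
    for line in output.splitlines():
        if line.strip():
            digest, path = line.split(None, 1)
            result[path.strip()] = digest
    return result


def config_mismatches(paths, hosts, run=subprocess.run):
    """Return (host, path) pairs whose remote MD5 differs from the local copy.

    `paths` must be absolute so they name the same file on every node;
    a missing remote file also counts as a mismatch.
    """
    local = {p: local_md5(p) for p in paths}
    mismatches = []
    for host in hosts:
        proc = run(["ssh", host, "md5sum", *paths], capture_output=True, text=True)
        remote = parse_md5sum(proc.stdout)
        mismatches += [(host, p) for p, d in local.items() if remote.get(p) != d]
    return mismatches
```

On node01 a call such as `config_mismatches(["/opt/ll/hadoop-2.6.5/etc/hadoop/hdfs-site.xml", "/opt/ll/hadoop-2.6.5/etc/hadoop/core-site.xml"], ["node02", "node03", "node04"])` should return an empty list.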

OK, we're now 50% done!

ヾ(◍°∇°◍)ノ゙

Next comes the second stage of the installation:

Super-detailed Big Data Learning: Hadoop HA High-Availability Installation (Part 2)

https://blog.csdn.net/qq_44500635/article/details/106815445