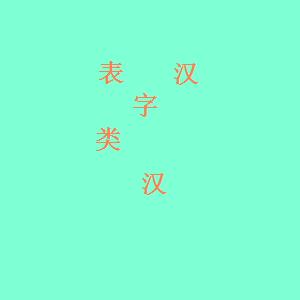

First, let's generate some samples for training and testing. Here is the C# code:

string header = "<annotation><size><width>"+300+"</width><height>"+300+"</height></size>";

Bitmap bit = new Bitmap(300, 300);

Graphics g = Graphics.FromImage(bit);

g.FillRectangle(Brushes.Aquamarine, new Rectangle(0, 0, 300, 300));

Font font = new Font("华文宋体", 18, FontStyle.Bold);

// SizeF a = g.MeasureString("华", font); // measured size: 27

string name = Str(10,false);

for (int i = 0; i < 5; i++)

{

int x = rd.Next(30, 270);

int y = rd.Next(30, 270);

// Random.Next's upper bound is exclusive, so pass f.Length to make the last entry reachable
string text = f[rd.Next(0, f.Length)];

PointF point = new PointF(x, y);

g.DrawString(text, font, Brushes.Coral, point);

int acs = (int)char.Parse(text);

header += string.Format(@"<object>

<name>a{0}</name>

<bndbox>

<xmin>{1}</xmin>

<ymin>{2}</ymin>

<xmax>{3}</xmax>

<ymax>{4}</ymax>

</bndbox>

</object>", acs.ToString(), x,y,x+30,y+30);

}

header += "</annotation>";

File.WriteAllText(name+".xml", header);

bit.Save(name+".png",System.Drawing.Imaging.ImageFormat.Png);

That is only part of the code. Generate a batch of samples, then take a look at what they look like.
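Because the generator writes a flat annotation string, the boxes can be read back with plain string searches instead of a full XML parser. The sketch below shows one possible way to do that. `Box`, `readTag` and `parseAnnotation` are hypothetical names that do not appear in the original code, and the sketch assumes every `<object>` uses exactly the tag order the generator emits.

```cpp
#include <cassert>
#include <cstdlib>
#include <string>
#include <vector>

// Hypothetical box type; `name` holds the text inside <name>, e.g. "a21326".
struct Box {
    std::string name;
    int xmin, ymin, xmax, ymax;
};

// Copy the text between <tag> and </tag> into `out`, searching from `pos`.
// On success `pos` is advanced past the closing tag.
static bool readTag(const std::string& xml, const std::string& tag,
                    std::size_t& pos, std::string& out) {
    assert(!tag.empty());
    const std::string open = "<" + tag + ">";
    const std::string close = "</" + tag + ">";
    std::size_t b = xml.find(open, pos);
    if (b == std::string::npos) return false;
    b += open.size();
    const std::size_t e = xml.find(close, b);
    if (e == std::string::npos) return false;
    out = xml.substr(b, e - b);
    pos = e + close.size();
    return true;
}

// Collect every <object> entry; stops at the first incomplete object.
std::vector<Box> parseAnnotation(const std::string& xml) {
    std::vector<Box> boxes;
    std::size_t pos = 0;
    std::string name, xmin, ymin, xmax, ymax;
    while (readTag(xml, "name", pos, name) &&
           readTag(xml, "xmin", pos, xmin) &&
           readTag(xml, "ymin", pos, ymin) &&
           readTag(xml, "xmax", pos, xmax) &&
           readTag(xml, "ymax", pos, ymax)) {
        Box b;
        b.name = name;
        b.xmin = std::atoi(xmin.c_str());
        b.ymin = std::atoi(ymin.c_str());
        b.xmax = std::atoi(xmax.c_str());
        b.ymax = std::atoi(ymax.c_str());
        boxes.push_back(b);
    }
    return boxes;
}
```

Calling `parseAnnotation` on the contents of one generated `.xml` file should return one `Box` per character drawn, which is a quick way to check the samples before training.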

Next, here are some of the layers:

class MTCNNDataLayer : public DataLayer{

public:

SETUP_LAYERFUNC(MTCNNDataLayer);

virtual int getBatchCacheSize(){

return 3;

}

virtual void loadBatch(Blob** top, int numTop){

int batch_size = top[0]->num();

// the positive-to-negative sample ratio should be 1:3

vector<Mat> ims;

vector<Rect_<float>> roi;

vector<int> label;

int numSel = 0;

int dw = top[0]->width();

int dh = top[0]->height();

while (numSel < batch_size){

string path = vfs_[cursor_];

Mat im = imread(path, top[0]->channel() == 3 ? 1 : 0);

vector<Rect_<float>> bbox = getBBoxFromPath(path);

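// avoid a modulo by zero (or unsigned underflow) when the image has fewer than two boxes
int numPositive = bbox.size() > 1 ? (rand() % ((int)bbox.size() - 1)) + 1 : (int)bbox.size();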

int numNegitive = numPositive * 3;

vector<int> inds(bbox.size());

for (int i = 0; i < inds.size(); ++i)

inds[i] = i;

std::random_shuffle(inds.begin(), inds.end());

float accp = 0.5;

int numSelPos = 0;

int i = 0;

int numLab1 = 0, numLab_1 = 0;

while (numSelPos < numPositive && numSel < batch_size){

Rect crop = bbox[inds[i]];

Rect raw = crop;

float offx = randr(-crop.width * accp, crop.width * accp);

float offy = randr(-crop.height * accp, crop.height * accp);

crop.x += offx;

crop.y += offy;

crop.width += randr(-crop.width * accp, crop.width * accp);

crop.height = crop.width;

crop = crop & Rect(0, 0, im.cols, im.rows);

crop.width = min(crop.width, crop.height);

crop.height = crop.width;

float iou = IoU(crop, raw);

bool sel = true;

int lab = 0;

if (iou > 0.65){

lab = 1;

numLab1++;

}

else if (iou > 0.4 && (numLab_1+1) / (float)numPositive < 0.5){

lab = -1;

numLab_1++;

}

else{

sel = false;

}

if (sel){

numSelPos++;

Rect_<float> gt(

(raw.x - crop.x) / (float)crop.width,

(raw.y - crop.y) / (float)crop.height,

(raw.x + raw.width-1 - (crop.x + crop.width - 1)) / (float)crop.width,

(raw.y + raw.height-1 - (crop.y + crop.height - 1)) / (float)crop.height

);

Mat uim;

resize(im(crop), uim, Size(dw, dh));

ims.push_back(uim);

roi.push_back(gt);

label.push_back(lab);

numSel++;

}

i++;

if (i == numPositive)

i = 0;

}

int numSelNeg = 0;

// count accepted negatives so the loop stops once this image has filled its 1:3 quota

while (numSelNeg < numNegitive && numSel < batch_size){

int w = randr(12, min(im.rows, im.cols));

int x = randr(0, im.cols - w);

int y = randr(0, im.rows - w);

Rect crop(x, y, w, w);

crop = crop & Rect(0, 0, im.cols, im.rows);

float iou = maxIoU(bbox, crop);

if (iou < 0.3){

Rect_<float> gt;

Mat uim;

resize(im(crop), uim, Size(dw, dh));

ims.push_back(uim);

roi.push_back(gt);

label.push_back(0);

numSelNeg++;

numSel++;

}

}

cursor_++;

if (cursor_ == vfs_.size()){

cursor_ = 0;

std::random_shuffle(vfs_.begin(), vfs_.end());

}

}

CV_Assert(numSel == batch_size);

vector<int> rinds;

for (int i = 0; i < batch_size; ++i)

rinds.push_back(i);

std::random_shuffle(rinds.begin(), rinds.end());

float* data_im = top[0]->mutable_cpu_data();

float* data_label = top[1]->mutable_cpu_data();

float* data_roi = top[2]->mutable_cpu_data();

for (int i = 0; i < batch_size; ++i){

vector<Mat> ms;

for (int c = 0; c < top[0]->channel(); ++c)

ms.push_back(Mat(dh, dw, CV_32F, data_im + c * dw * dh));

int ind = rinds[i];

ims[ind].convertTo(ims[ind], CV_32F, 1 / 127.5, -1);

split(ims[ind], ms);

data_im += top[0]->channel() * dw * dh;

*data_label++ = label[ind];

*data_roi++ = roi[ind].x;

*data_roi++ = roi[ind].y;

*data_roi++ = roi[ind].width;

*data_roi++ = roi[ind].height;

}

}

void preperData(){

if (this->phase_ == PhaseTest)

paFindFiles("data-test", vfs_, "*.bmp");

else

paFindFiles("data-train", vfs_, "*.bmp");

std::random_shuffle(vfs_.begin(), vfs_.end());

this->cursor_ = 0;

if (vfs_.size() == 0){

printf("No data found.\n");

exit(-1);

}

}

virtual void setup(const char* name, const char* type, const char* param_str, int phase, Blob** bottom, int numBottom, Blob** top, int numTop){

map<string, string> param = parseParamStr(param_str);

const int batch_size = getParamInt(param, "batch_size", phase == PhaseTest ? 10: 64);

this->phase_ = phase;

// Reshape takes (num, channels, height, width)
top[0]->Reshape(batch_size, 3, getParamInt(param, "height"), getParamInt(param, "width"));

top[1]->Reshape(batch_size, 1, 1, 1);

top[2]->Reshape(batch_size, 4, 1, 1);

preperData();

__super::setup(name, type, param_str, phase, bottom, numBottom, top, numTop);

}

private:

PaVfiles vfs_;

int cursor_;

int phase_;

};
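
The data layer depends on `IoU` and `maxIoU` helpers that are not shown, and it encodes each regression target as corner offsets normalized by the crop size. The sketch below illustrates those pieces with a plain struct instead of OpenCV types. `RectF`, `Offsets`, `labelForJitteredCrop`, `encodeOffsets` and `decodeOffsets` are hypothetical names, `iou` is only one plausible way the missing helper could work, and the cap on the share of part samples is left out for brevity.

```cpp
#include <algorithm>
#include <cassert>

// Hypothetical stand-in for cv::Rect_<float>.
struct RectF { float x, y, width, height; };

// Intersection over union of two axis-aligned rectangles.
float iou(const RectF& a, const RectF& b) {
    const float x1 = std::max(a.x, b.x);
    const float y1 = std::max(a.y, b.y);
    const float x2 = std::min(a.x + a.width, b.x + b.width);
    const float y2 = std::min(a.y + a.height, b.y + b.height);
    const float inter = std::max(0.0f, x2 - x1) * std::max(0.0f, y2 - y1);
    const float uni = a.width * a.height + b.width * b.height - inter;
    return uni > 0.0f ? inter / uni : 0.0f;
}

// Thresholds taken from the layer: > 0.65 is a positive (1), > 0.4 is a
// part sample (-1). kDiscard is a hypothetical marker for rejected crops.
const int kDiscard = 2;
int labelForJitteredCrop(float overlap) {
    if (overlap > 0.65f) return 1;
    if (overlap > 0.4f) return -1;
    return kDiscard;
}

// Corner offsets of the ground-truth box relative to the crop, divided by
// the crop size, matching the expressions used to build `gt` in the layer.
struct Offsets { float dx1, dy1, dx2, dy2; };

Offsets encodeOffsets(const RectF& raw, const RectF& crop) {
    assert(crop.width > 0.0f && crop.height > 0.0f);
    const float rawX2 = raw.x + raw.width - 1, cropX2 = crop.x + crop.width - 1;
    const float rawY2 = raw.y + raw.height - 1, cropY2 = crop.y + crop.height - 1;
    return { (raw.x - crop.x) / crop.width,
             (raw.y - crop.y) / crop.height,
             (rawX2 - cropX2) / crop.width,
             (rawY2 - cropY2) / crop.height };
}

// Inverse of encodeOffsets: recover the box from a crop and its offsets.
RectF decodeOffsets(const Offsets& t, const RectF& crop) {
    const float x1 = crop.x + t.dx1 * crop.width;
    const float y1 = crop.y + t.dy1 * crop.height;
    const float x2 = crop.x + crop.width - 1 + t.dx2 * crop.width;
    const float y2 = crop.y + crop.height - 1 + t.dy2 * crop.height;
    return { x1, y1, x2 - x1 + 1, y2 - y1 + 1 };
}
```

At inference time, something like `decodeOffsets` would be applied to the network's four regression outputs for each candidate window to get the refined box.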

class MTCNNLoss : public AbstractCustomLayer{

public:

SETUP_LAYERFUNC(MTCNNLoss);

virtual void setup(const char* name, const char* type, const char* param_str, int phase, Blob** bottom, int numBottom, Blob** top, int numTop){

diff_ = newBlob();

diff_->ReshapeLike(*bottom[0]);

top[0]->Reshape(1, 1, 1, 1);

// make sure the loss layer's weight is 1

top[0]->mutable_cpu_diff()[0] = 1;

}

virtual void forward(Blob** bottom, int numBottom, Blob** top, int numTop){

const float* label = bottom[2]->cpu_data();

int countLabel = bottom[2]->num();

int ignore_label = 0;

//label

float* diff = diff_->mutable_gpu_data();

int channel = bottom[0]->channel();

//memset(diff, 0, sizeof(float)*diff_->count());

caffe_gpu_set(diff_->count(), float(0), diff);

const float* b0 = bottom[0]->gpu_data();

const float* b1 = bottom[1]->gpu_data();

float loss = 0;

for (int i = 0; i < countLabel; ++i){

if (label[i] != ignore_label){

caffe_gpu_sub(

channel,

b0 + i * channel,

b1 + i * channel,

diff + i * channel);

//float dot = caffe_dot(channel, diff + i * channel, diff + i * channel);

float dot = 0;

caffe_gpu_dot(channel, diff + i * channel, diff + i * channel, &dot);

loss += dot / float(2);

}

}

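// divide by the batch size so the reported loss matches the gradient scale used in backward()
top[0]->mutable_cpu_data()[0] = loss / bottom[0]->num();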

}

virtual void backward(Blob** bottom, int numBottom, Blob** top, int numTop, const bool* propagate_down){

const float* label = bottom[2]->cpu_data();

int countLabel = bottom[2]->num();

int channels = bottom[0]->channel();

int ignore_label = 0;

for (int i = 0; i < 2; ++i) {

if (propagate_down[i]) {

caffe_gpu_set(bottom[i]->count(), float(0), bottom[i]->mutable_gpu_diff());

const float sign = (i == 0) ? 1 : -1;

const float alpha = sign * top[0]->cpu_diff()[0] / bottom[i]->num();

for (int j = 0; j < countLabel; ++j){

if (label[j] != ignore_label){

caffe_gpu_axpby(

channels, // count

alpha, // alpha

diff_->gpu_data() + channels * j, // a

float(0), // beta

bottom[i]->mutable_gpu_diff() + channels * j); // b

}

}

}

}

}

virtual void reshape(Blob** bottom, int numBottom, Blob** top, int numTop){

diff_->ReshapeLike(*bottom[0]);

}

private:

WPtr<Blob> diff_;

};
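
For readers who want to check the loss layer without a GPU, the following is a minimal CPU sketch of the same idea: a Euclidean loss that skips every sample whose label equals the ignore label. `maskedEuclideanLoss` is a hypothetical name, and normalizing both the loss and the gradient by the batch size is an assumption made here, in the style of Caffe's Euclidean loss.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// pred and target hold num * channels floats in row-major order;
// label holds one entry per sample. Samples whose label equals
// ignoreLabel contribute neither to the loss nor to the gradient.
// grad receives d(loss)/d(pred) and has the same layout as pred.
float maskedEuclideanLoss(const std::vector<float>& pred,
                          const std::vector<float>& target,
                          const std::vector<float>& label,
                          int channels, float ignoreLabel,
                          std::vector<float>& grad) {
    const std::size_t num = label.size();
    const std::size_t ch = static_cast<std::size_t>(channels);
    assert(pred.size() == num * ch);
    assert(target.size() == pred.size());

    grad.assign(pred.size(), 0.0f);
    if (num == 0) return 0.0f;

    float sumSq = 0.0f;
    for (std::size_t i = 0; i < num; ++i) {
        if (label[i] == ignoreLabel) continue;  // masked sample
        for (std::size_t c = 0; c < ch; ++c) {
            const std::size_t k = i * ch + c;
            const float d = pred[k] - target[k];
            sumSq += d * d;
            grad[k] = d / static_cast<float>(num);
        }
    }
    // Assumed normalization: 1 / (2 * num)
    return sumSq / (2.0f * static_cast<float>(num));
}
```

With `ignoreLabel` set to 0, negative crops (label 0) leave the box regression untouched, while positive and part samples (labels 1 and -1) both contribute.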

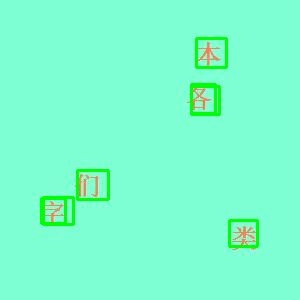

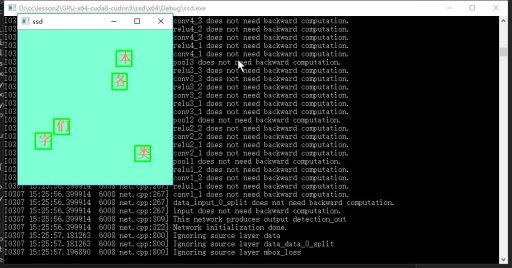

I won't go into detail on the training process; here are some result images.

As the results show, the model performs well even with only a small number of training samples.