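These notes cover how to use OpenCV's dnn module to run its face detection model, which is based on a ResNet SSD (Single Shot Detector) network. OpenCV provides this face detector in two versions, one trained with the TensorFlow framework and one with the Caffe framework. The TensorFlow model is compressed (its file name carries the suffix uint8), so it runs fast but is less accurate. The Caffe model stores FP16 floating-point weights, so it detects more accurately but runs somewhat slower.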

I. First, running the TensorFlow face detection model opencv_face_detector_uint8.pb.

(1) Load the model, then set the computation backend and the target device.

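```cpp
// Headers and namespaces used by all snippets in these notes;
// the statements from steps (1) to (3) go, in order, inside main().
#include <opencv2/opencv.hpp>
#include <opencv2/dnn.hpp>
#include <iostream>
#include <cstdlib>
using namespace cv;
using namespace cv::dnn;
using namespace std;

string model_path = "D:\\opencv_c++\\opencv_tutorial\\data\\models\\face_detector\\opencv_face_detector_uint8.pb";
string config_path = "D:\\opencv_c++\\opencv_tutorial\\data\\models\\face_detector\\opencv_face_detector.pbtxt";
Net face_detector = readNetFromTensorflow(model_path, config_path);
// DNN_BACKEND_INFERENCE_ENGINE requires OpenCV built with OpenVINO;
// with a plain OpenCV build, use DNN_BACKEND_OPENCV instead.
face_detector.setPreferableBackend(DNN_BACKEND_INFERENCE_ENGINE);
face_detector.setPreferableTarget(DNN_TARGET_CPU);
```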

(2) Open the camera.

```cpp
VideoCapture capture;
capture.open(0);  // open the default camera (device index 0)
if (!capture.isOpened())
{
    cout << "can't open the camera" << endl;
    exit(-1);
}
```

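(3) Read frames in a loop and convert each one into a 4-D blob that is fed to the TensorFlow model. A forward pass produces the result matrix prob, which is then decoded into the prediction matrix detection.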

```cpp
Mat frame;
while (capture.read(frame))
{
    int64 start = getTickCount();  // getTickCount() returns int64; an int could overflow
    flip(frame, frame, 1);  // mirror horizontally
    // Resize to 300x300, subtract the per-channel mean, keep BGR order (swapRB = false)
    Mat inputBlob = blobFromImage(frame, 1.0, Size(300, 300), Scalar(104.0, 177.0, 123.0), false);
    face_detector.setInput(inputBlob);
    Mat prob = face_detector.forward();  // 4-D output of shape 1 x 1 x N x 7
    // Wrap the last two dimensions as an N x 7 matrix (no data copy)
    Mat detection(prob.size[2], prob.size[3], CV_32F, prob.ptr<float>());
    float confidence_thresh = 0.5;
    for (int row = 0; row < detection.rows; row++)
    {
        float confidence = detection.at<float>(row, 2);
        if (confidence > confidence_thresh)
        {
            int classID = detection.at<float>(row, 1);
            int imageid = detection.at<float>(row, 0);
            // Corner coordinates are normalized, so scale them by the frame size
            int top_left_x = detection.at<float>(row, 3) * frame.cols;
            int top_left_y = detection.at<float>(row, 4) * frame.rows;
            int bottom_right_x = detection.at<float>(row, 5) * frame.cols;
            int bottom_right_y = detection.at<float>(row, 6) * frame.rows;
            int width = bottom_right_x - top_left_x;
            int height = bottom_right_y - top_left_y;
            Rect box(top_left_x, top_left_y, width, height);
            rectangle(frame, box, Scalar(0, 255, 0), 1, 8, 0);
            cout << classID << "," << imageid << "," << confidence << endl;
        }
    }
    int64 end = getTickCount();
    double run_time = double(end - start) / getTickFrequency();
    double FPS = 1.0 / run_time;
    putText(frame, format("FPS: %0.2f", FPS), Point(20, 20), FONT_HERSHEY_SIMPLEX, 0.5, Scalar(0, 0, 255), 1, 8);
    imshow("frame", frame);
    char ch = waitKey(1);
    if (ch == 27)  // Esc quits
    {
        break;
    }
}
capture.release();
```
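To make the "4-D blob" of step (3) more concrete, here is a minimal plain-C++ sketch of the core arithmetic blobFromImage performs in the call above. It assumes the frame has already been resized to the network input size and that swapRB is false, as in the code: subtract the per-channel mean, multiply by the scale factor, and reorder interleaved HWC pixels into the planar 1 x C x H x W (NCHW) layout. toBlobNCHW is a hypothetical name; the real blobFromImage also resizes, can crop, and can swap channels, so treat this only as an illustration.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper: converts an interleaved 8-bit image (H x W x C, e.g. BGR)
// into a planar float blob of shape 1 x C x H x W, computing
// blob = (pixel - mean[c]) * scale for every channel c.
// Assumes the image is already at the network input size (no resizing here).
std::vector<float> toBlobNCHW(const std::vector<unsigned char>& hwc,
                              int height, int width, int channels,
                              const std::vector<float>& mean, float scale)
{
    assert(hwc.size() == static_cast<std::size_t>(height) * width * channels);
    assert(mean.size() == static_cast<std::size_t>(channels));
    std::vector<float> blob(hwc.size());
    for (int c = 0; c < channels; ++c)
        for (int y = 0; y < height; ++y)
            for (int x = 0; x < width; ++x)
            {
                // Source index in HWC (interleaved) order
                std::size_t src = (static_cast<std::size_t>(y) * width + x) * channels + c;
                // Destination index in CHW (planar) order
                std::size_t dst = (static_cast<std::size_t>(c) * height + y) * width + x;
                blob[dst] = (hwc[src] - mean[c]) * scale;
            }
    return blob;
}
```

For example, on a 1 x 2 BGR image with pixels (110, 180, 130) and (104, 177, 123) and mean (104, 177, 123), the blob comes out plane by plane as 6, 0, 3, 0, 7, 0.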

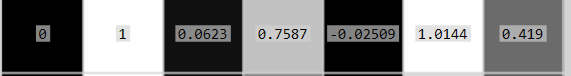

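Each row of the decoded detection matrix is one detected object. The raw output prob has shape 1×1×N×7, so wrapping its last two dimensions (prob.size[2] × prob.size[3]) gives an N×7 matrix with one row per detection. The columns of a row are:

Column 1: the index of the input image;

Column 2: the class ID of the detection;

Column 3: the confidence of the predicted class;

Columns 4 to 7: the x and y coordinates of the top-left corner and the x and y coordinates of the bottom-right corner of the bounding box. Note that these coordinates are normalized, i.e. expressed as fractions of the image width and height, so multiply them by the image width and height before use.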
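The decoding just described can be sketched without OpenCV, assuming the detection data is available as a row-major array of N×7 floats (which is what wrapping prob in an N×7 Mat provides). decodeDetections, FaceBox and clampInt are hypothetical names. Besides the confidence threshold and the scaling of the normalized corners, this sketch also clips each box to the image, since predicted corners may fall slightly outside [0, 1]; the loops in these notes do not clip.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical pixel-space box produced from one detection row.
struct FaceBox
{
    int x, y, width, height;
    float confidence;
};

// Clamp v into [lo, hi].
static int clampInt(int v, int lo, int hi) { return std::max(lo, std::min(v, hi)); }

// Hypothetical helper: decodes a row-major N x 7 array whose rows are
// [image_id, class_id, confidence, x1, y1, x2, y2] (corners normalized to [0, 1])
// into pixel boxes, keeping rows whose confidence exceeds the threshold.
std::vector<FaceBox> decodeDetections(const std::vector<float>& data,
                                      int imageWidth, int imageHeight,
                                      float confidenceThresh)
{
    assert(data.size() % 7 == 0);
    std::vector<FaceBox> boxes;
    for (std::size_t i = 0; i + 7 <= data.size(); i += 7)
    {
        float confidence = data[i + 2];
        if (confidence <= confidenceThresh)
            continue;
        // Scale normalized corners by the image size, then clip to the image
        int x1 = clampInt(static_cast<int>(data[i + 3] * imageWidth), 0, imageWidth);
        int y1 = clampInt(static_cast<int>(data[i + 4] * imageHeight), 0, imageHeight);
        int x2 = clampInt(static_cast<int>(data[i + 5] * imageWidth), 0, imageWidth);
        int y2 = clampInt(static_cast<int>(data[i + 6] * imageHeight), 0, imageHeight);
        boxes.push_back({x1, y1, x2 - x1, y2 - y1, confidence});
    }
    return boxes;
}
```

On a 640×480 frame, for instance, the row (0, 1, 0.9, 0.25, 0.5, 0.75, 1.0) becomes a box at (160, 240) with size 320×240.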

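With the code above you get real-time face detection. I'll skip the demo this time, hahaha, it's the middle of summer after all... and I dress pretty casually at home...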

II. Next, running the Caffe face detection model res10_300x300_ssd_iter_140000_fp16.caffemodel.

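The Caffe model processes its input and output data much like the TensorFlow model above, and decoding the prediction matrix is almost identical, so here is the complete demo code directly.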

```cpp
string caffe_model_path = "D:\\opencv_c++\\opencv_tutorial\\data\\models\\face_detector\\res10_300x300_ssd_iter_140000_fp16.caffemodel";
string caffe_config_path = "D:\\opencv_c++\\opencv_tutorial\\data\\models\\face_detector\\deploy.prototxt";
// readNetFromCaffe takes the .prototxt first, then the .caffemodel
Net caffe_face_detector = readNetFromCaffe(caffe_config_path, caffe_model_path);
// Requires an OpenVINO-enabled build; otherwise use DNN_BACKEND_OPENCV
caffe_face_detector.setPreferableBackend(DNN_BACKEND_INFERENCE_ENGINE);
caffe_face_detector.setPreferableTarget(DNN_TARGET_CPU);

VideoCapture capture;
capture.open(0, CAP_DSHOW);  // CAP_DSHOW selects the DirectShow backend (Windows only)
if (!capture.isOpened())
{
    cout << "can't open camera" << endl;
    exit(-1);
}

Mat frame;
while (capture.read(frame))
{
    double start = getTickCount();
    flip(frame, frame, 1);
    // Same preprocessing as the TensorFlow model: 300x300, mean (104, 177, 123), BGR order kept
    Mat inputBlob = blobFromImage(frame, 1.0, Size(300, 300), Scalar(104.0, 177.0, 123.0), false, false);
    caffe_face_detector.setInput(inputBlob);
    Mat prob = caffe_face_detector.forward();  // 1 x 1 x N x 7
    Mat detection(prob.size[2], prob.size[3], CV_32F, prob.ptr<float>());
    float confidence_thresh = 0.5;
    for (int row = 0; row < detection.rows; row++)
    {
        float confidence = detection.at<float>(row, 2);
        if (confidence > confidence_thresh)
        {
            int classID = detection.at<float>(row, 1);
            int top_left_x = detection.at<float>(row, 3) * frame.cols;
            int top_left_y = detection.at<float>(row, 4) * frame.rows;
            int bottom_right_x = detection.at<float>(row, 5) * frame.cols;
            int bottom_right_y = detection.at<float>(row, 6) * frame.rows;
            int width = bottom_right_x - top_left_x;
            int height = bottom_right_y - top_left_y;
            Rect box(top_left_x, top_left_y, width, height);
            rectangle(frame, box, Scalar(0, 255, 0), 1, 8);
        }
    }
    double end = getTickCount();
    double run_time = (end - start) / getTickFrequency();
    double fps = 1 / run_time;
    cv::putText(frame, format("FPS: %0.2f", fps), Point(20, 20), FONT_HERSHEY_SIMPLEX, 0.5, Scalar(0, 0, 255), 1, 8);
    cv::imshow("frame", frame);
    char ch = waitKey(1);
    if (ch == 27)  // Esc quits
    {
        break;
    }
}
capture.release();
```
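Both loops report FPS as 1 / (elapsed ticks / ticks per second), with start taken right after capture.read() and end taken before putText(), so the figure covers preprocessing, inference and box drawing but not camera capture or display. Below is a minimal sketch of the same formula in standard C++, with std::chrono::steady_clock standing in for getTickCount() and nanoseconds as the tick unit; fpsFromTicks and measureFps are hypothetical helpers, not OpenCV functions.

```cpp
#include <cassert>
#include <chrono>
#include <cstdint>

// Same formula as the loops above: FPS = 1 / ((end - start) / ticksPerSecond).
// Returns 0 for a non-positive interval instead of dividing by zero.
double fpsFromTicks(std::int64_t start, std::int64_t end, double ticksPerSecond)
{
    assert(ticksPerSecond > 0.0);
    double seconds = static_cast<double>(end - start) / ticksPerSecond;
    return seconds > 0.0 ? 1.0 / seconds : 0.0;
}

// Times one call of work() with steady_clock, counting nanoseconds as ticks.
template <typename Work>
double measureFps(Work work)
{
    auto start = std::chrono::steady_clock::now();
    work();
    auto end = std::chrono::steady_clock::now();
    auto ticks = std::chrono::duration_cast<std::chrono::nanoseconds>(end - start).count();
    return fpsFromTicks(0, static_cast<std::int64_t>(ticks), 1e9);
}
```

In a capture loop, work() would be the per-frame processing; a frame that takes 500 ticks at 1000 ticks per second gives 2 FPS.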

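The two parts above show how to call OpenCV's bundled face detection models through the dnn module. The main point is decoding the prediction matrix: the TensorFlow and Caffe models produce prediction matrices with the same structure, and once you understand that, real-time face detection in OpenCV with either model is no problem at all. The detection quality is also quite good: faces are detected and boxed whether they are near or far, partly occluded, or turned to the side. You could say it mops the floor with OpenCV's earlier face detection approach, the cascade detector (;´д`)ゞ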

OK, that's all for today's notes. Thanks for reading~

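PS: My notes are a mix of my own takeaways and excerpts copied from material I consulted online, so any resemblance is my tribute to those who came before me. If you feel any content in my notes infringes your intellectual property, please contact me and I will delete the relevant content. Thank you!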