Clocks and synchronization in GStreamer

When playing complex media, each sound and video sample must be played in a specific order at a specific time. For this purpose, GStreamer provides a synchronization mechanism.

GStreamer provides support for the following use cases:

- Non-live sources with access faster than playback rate. This is the case where one is reading media from a file and playing it back in a synchronized fashion. In this case, multiple streams need to be synchronized, like audio, video and subtitles.

- Capture and synchronized muxing/mixing of media from multiple live sources. This is a typical use case where you record audio and video from a microphone/camera and mux it into a file for storage.

- Streaming from (slow) network streams with buffering. This is the typical web streaming case where you access content from a streaming server using HTTP.

- Capture from live source and playback with configurable latency. This is used, for example, when capturing from a camera, applying an effect and displaying the result. It is also used when streaming low latency content over a network with UDP.

- Simultaneous live capture and playback from prerecorded content. This is used in audio recording cases where you play previously recorded audio and record new samples. The purpose is to have the new audio perfectly in sync with the previously recorded data.

GStreamer uses a GstClock object, buffer timestamps and a SEGMENT event to synchronize streams in a pipeline as we will see in the next sections.

Clock running-time

In a typical computer, there are many sources that can be used as a time source, e.g., the system time, soundcards, CPU performance counters, etc. For this reason, GStreamer has many GstClock implementations available. Note that clock time doesn't have to start from 0 or any other known value. Some clocks start counting from a particular start date, others from the last reboot, etc.

A GstClock returns the absolute-time according to that clock with gst_clock_get_time (). The absolute-time (or clock time) of a clock is monotonically increasing.

A running-time is the difference between a previous snapshot of the absolute-time called the base-time, and any other absolute-time.

running-time = absolute-time - base-time

A GStreamer GstPipeline object maintains a GstClock object and a base-time when it goes to the PLAYING state. The pipeline gives a handle to the selected GstClock to each element in the pipeline along with the selected base-time. The pipeline will select a base-time in such a way that the running-time reflects the total time spent in the PLAYING state. As a result, when the pipeline is PAUSED, the running-time stands still.

Because all objects in the pipeline have the same clock and base-time, they can thus all calculate the running-time according to the pipeline clock.
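The relationship between absolute-time, base-time and running-time can be sketched in plain C. This is a simplified model under stated assumptions, not GStreamer's implementation: the PipelineModel struct and the model_* helpers are hypothetical names, and times are plain nanosecond counts. It illustrates how choosing a new base-time on every transition to PLAYING makes the running-time stand still while PAUSED.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical, simplified model of a pipeline's time bookkeeping.
 * All times are nanosecond counts read from one monotonic clock. */
typedef struct {
  uint64_t base_time;     /* absolute-time snapshot chosen when going to PLAYING */
  uint64_t running_time;  /* running-time remembered while PAUSED */
  int playing;            /* non-zero while in the PLAYING state */
} PipelineModel;

/* Going to PLAYING: pick base_time so that the running-time continues
 * from the value it had when the pipeline was paused. */
void
model_play (PipelineModel *p, uint64_t absolute_now)
{
  assert (absolute_now >= p->running_time);
  p->base_time = absolute_now - p->running_time;
  p->playing = 1;
}

/* Going to PAUSED: remember the running-time reached so far. */
void
model_pause (PipelineModel *p, uint64_t absolute_now)
{
  if (p->playing)
    p->running_time = absolute_now - p->base_time;
  p->playing = 0;
}

/* running-time = absolute-time - base-time while PLAYING,
 * frozen at the remembered value while PAUSED. */
uint64_t
model_running_time (const PipelineModel *p, uint64_t absolute_now)
{
  if (!p->playing)
    return p->running_time;
  return absolute_now - p->base_time;
}
```

For example, a model that starts playing at absolute-time 1000, pauses at 1500 and resumes at 9000 ends up with a base-time of 8500, so the 7500 units spent in PAUSED never show up in the running-time.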

Buffer running-time

To calculate a buffer running-time, we need a buffer timestamp and the SEGMENT event that preceded the buffer. First we can convert the SEGMENT event into a GstSegment object and then we can use the gst_segment_to_running_time () function to perform the calculation of the buffer running-time.

Synchronization is now a matter of making sure that a buffer with a certain running-time is played when the clock reaches the same running-time. Usually, this task is performed by sink elements. These elements also have to take into account the configured pipeline's latency and add it to the buffer running-time before synchronizing to the pipeline clock.

Non-live sources timestamp buffers with a running-time starting from 0. After a flushing seek, they will produce buffers again from a running-time of 0.

Live sources need to timestamp buffers with a running-time matching the pipeline running-time when the first byte of the buffer was captured.

Buffer stream-time

The buffer stream-time, also known as the position in the stream, is a value between 0 and the total duration of the media and it's calculated from the buffer timestamps and the preceding SEGMENT event.

The stream-time is used in:

- Report the current position in the stream with the POSITION query.

- The position used in the seek events and queries.

- The position used to synchronize controlled values.

The stream-time is never used to synchronize streams; this is only done with the running-time.

Time overview

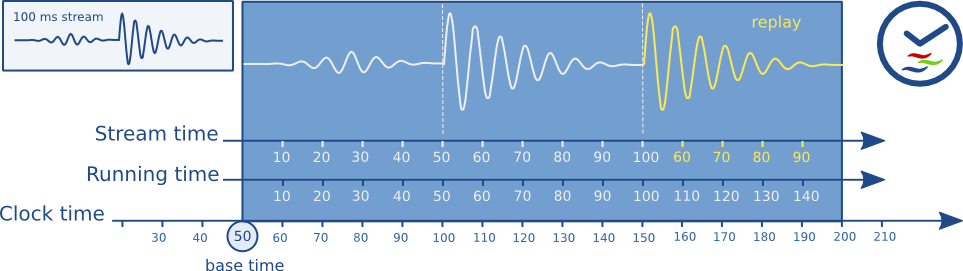

Here is an overview of the various timelines used in GStreamer.

The image below represents the different times in the pipeline when playing a 100ms sample and repeating the part between 50ms and 100ms.

You can see how the running-time of a buffer always increments monotonically along with the clock-time. Buffers are played when their running-time is equal to the clock-time - base-time. The stream-time represents the position in the stream and jumps backwards when repeating.
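The looping example can also be worked through numerically. The sketch below is a simplified model that only covers forward playback at normal rate with no offset. The SegmentModel struct and the helper names are hypothetical, and the segment values used for the repeated part (start 50, base 100, time 50) are an assumption about how such a loop would be described. A real application would use gst_segment_to_running_time () and gst_segment_to_stream_time () on a GstSegment instead.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical, simplified stand-in for a GstSegment.
 * Forward playback at rate 1.0 only; all times in milliseconds. */
typedef struct {
  uint64_t start;  /* first valid buffer timestamp in the segment */
  uint64_t stop;   /* last valid buffer timestamp in the segment */
  uint64_t base;   /* running-time at the start of the segment */
  uint64_t time;   /* stream-time at the start of the segment */
} SegmentModel;

/* Used for synchronization: keeps increasing across segments. */
uint64_t
model_to_running_time (const SegmentModel *s, uint64_t timestamp)
{
  assert (timestamp >= s->start && timestamp <= s->stop);
  return timestamp - s->start + s->base;
}

/* Used for position reporting: jumps back when a part is repeated. */
uint64_t
model_to_stream_time (const SegmentModel *s, uint64_t timestamp)
{
  assert (timestamp >= s->start && timestamp <= s->stop);
  return timestamp - s->start + s->time;
}
```

With a first segment of {0, 100, 0, 0} and a repeat segment of {50, 100, 100, 50}, a buffer with timestamp 50 in the repeated part has a running-time of 100 and a stream-time of 50, which matches the behaviour described above: the running-time keeps growing while the stream-time jumps backwards.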

Clock providers

A clock provider is an element in the pipeline that can provide a GstClock object. The clock object needs to report an absolute-time that is monotonically increasing when the element is in the PLAYING state. It is allowed to pause the clock while the element is PAUSED.

Clock providers exist because they play back media at some rate, and this rate is not necessarily the same as the system clock rate. For example, a soundcard may play back at 44.1 kHz, but that doesn't mean that after exactly 1 second according to the system clock, the soundcard has played back 44100 samples. This is only true by approximation. In fact, the audio device has an internal clock based on the number of samples played that we can expose.

If an element with an internal clock needs to synchronize, it needs to estimate when a time according to the pipeline clock will take place according to the internal clock. To estimate this, it needs to slave its clock to the pipeline clock.
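One way to picture slaving is as a linear mapping between the two clocks, fixed by a calibration point and a rate. The sketch below is an illustration under that assumption, not the code GStreamer runs: the CalibrationModel struct and the model_* functions are hypothetical names, and the arithmetic ignores overflow for very large time values. GstClock exposes a comparable set of parameters through gst_clock_set_calibration ().

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical linear relation between an element's internal clock and
 * the pipeline (external) clock:
 *   external = (internal - c.internal) * rate_num / rate_denom + c.external */
typedef struct {
  int64_t internal;    /* internal-clock time at the calibration point */
  int64_t external;    /* pipeline-clock time at the same instant */
  int64_t rate_num;    /* external time elapsed ... */
  int64_t rate_denom;  /* ... per this much internal time */
} CalibrationModel;

/* Convert an internal-clock time to the pipeline clock. */
int64_t
model_to_external (const CalibrationModel *c, int64_t internal_time)
{
  assert (c->rate_num > 0 && c->rate_denom > 0);
  return (internal_time - c->internal) * c->rate_num / c->rate_denom
      + c->external;
}

/* Estimate when a pipeline-clock time will occur on the internal clock. */
int64_t
model_to_internal (const CalibrationModel *c, int64_t external_time)
{
  assert (c->rate_num > 0 && c->rate_denom > 0);
  return (external_time - c->external) * c->rate_denom / c->rate_num
      + c->internal;
}
```

With a calibration of {1000, 5000, 1001, 1000}, the pipeline clock advances 1001 units for every 1000 internal units, so pipeline time 6001 is estimated to occur at internal time 2000.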

If the pipeline clock is exactly the internal clock of an element, the element can skip the slaving step and directly use the pipeline clock to schedule playback. This can be both faster and more accurate. Therefore, generally, elements with an internal clock like audio input or output devices will be a clock provider for the pipeline.

When the pipeline goes to the PLAYING state, it will go over all elements in the pipeline from sink to source and ask each element if they can provide a clock. The last element that can provide a clock will be used as the clock provider in the pipeline. This algorithm prefers a clock from an audio sink in a typical playback pipeline and a clock from source elements in a typical capture pipeline.

There exist some bus messages to let you know about the clock and clock providers in the pipeline. You can see what clock is selected in the pipeline by looking at the NEW_CLOCK message on the bus. When a clock provider is removed from the pipeline, a CLOCK_LOST message is posted and the application should go to PAUSED and back to PLAYING to select a new clock.

Latency

The latency is the time it takes for a sample captured at timestamp X to reach the sink. This time is measured against the clock in the pipeline. For pipelines where the only elements that synchronize against the clock are the sinks, the latency is always 0 since no other element is delaying the buffer.

For pipelines with live sources, a latency is introduced, mostly because of the way a live source works. Consider an audio source: it will start capturing the first sample at time 0. If the source pushes buffers with 44100 samples at a time at 44100Hz, it will have collected the buffer at second 1. Since the timestamp of the buffer is 0 and the time of the clock is now >= 1 second, the sink will drop this buffer because it is too late. Without any latency compensation in the sink, all buffers will be dropped.

Latency compensation

Before the pipeline goes to the PLAYING state, it will, in addition to selecting a clock and calculating a base-time, calculate the latency in the pipeline. It does this by doing a LATENCY query on all the sinks in the pipeline. The pipeline then selects the maximum latency in the pipeline and configures this with a LATENCY event.

All sink elements will delay playback by the value in the LATENCY event. Since all sinks delay by the same amount of time, they will be relatively in sync.
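The effect of latency compensation on the audio example can be sketched with a few lines of arithmetic. This is a simplified model with hypothetical helper names. Real sinks apply additional tolerances before dropping a buffer, which the sketch leaves out. Times are nanoseconds.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* The pipeline latency is the maximum of the latencies reported by the
 * sinks in response to the LATENCY query. */
uint64_t
model_pipeline_latency (const uint64_t *sink_latencies, size_t n_sinks)
{
  uint64_t max = 0;
  size_t i;

  assert (sink_latencies != NULL || n_sinks == 0);
  for (i = 0; i < n_sinks; i++) {
    if (sink_latencies[i] > max)
      max = sink_latencies[i];
  }
  return max;
}

/* Running-time at which a sink renders a buffer: every sink adds the
 * same configured latency to the buffer running-time. */
uint64_t
model_render_time (uint64_t buffer_running_time, uint64_t latency)
{
  return buffer_running_time + latency;
}

/* A buffer is too late when the clock has already passed its render time. */
int
model_is_too_late (uint64_t buffer_running_time, uint64_t latency,
    uint64_t clock_running_time)
{
  return clock_running_time > model_render_time (buffer_running_time, latency);
}
```

In the audio example, the buffer with timestamp 0 reaches the sink when the clock running-time is 1 second. With a latency of 0 it is too late, while with a configured latency of 1 second it is rendered right on time.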

Dynamic Latency

Adding/removing elements to/from a pipeline or changing element properties can change the latency in a pipeline. An element can request a latency change in the pipeline by posting a LATENCY message on the bus. The application can then decide to query and redistribute a new latency or not. Changing the latency in a pipeline might cause visual or audible glitches and should therefore only be done by the application when it is allowed.

Buffering

The purpose of buffering is to accumulate enough data in a pipeline so that playback can occur smoothly and without interruptions. It is typically done when reading from a (slow), non-live network source but can also be used for live sources.

GStreamer provides support for the following use cases:

- Buffering up to a specific amount of data, in memory, before starting playback so that network fluctuations are minimized. See Stream buffering.

- Download of the network file to a local disk with fast seeking in the downloaded data. This is similar to the quicktime/youtube players. See Download buffering.

- Caching of (semi)-live streams to a local, on disk, ringbuffer with seeking in the cached area. This is similar to tivo-like timeshifting. See Timeshift buffering.

GStreamer can provide the application with progress reports about the current buffering state as well as let the application decide on how to buffer and when the buffering stops.

In the most simple case, the application has to listen for BUFFERING messages on the bus. If the percent indicator inside the BUFFERING message is smaller than 100, the pipeline is buffering. When a message is received with 100 percent, buffering is complete. In the buffering state, the application should keep the pipeline in the PAUSED state. When buffering completes, it can put the pipeline (back) in the PLAYING state.

What follows is an example of how the message handler could deal with the BUFFERING messages. We will see more advanced methods in Buffering strategies.

    [...]

    switch (GST_MESSAGE_TYPE (message)) {
      case GST_MESSAGE_BUFFERING:{
        gint percent;

        /* no state management needed for live pipelines */
        if (is_live)
          break;

        gst_message_parse_buffering (message, &percent);

        if (percent == 100) {
          /* a 100% message means buffering is done */
          buffering = FALSE;
          /* if the desired state is playing, go back */
          if (target_state == GST_STATE_PLAYING) {
            gst_element_set_state (pipeline, GST_STATE_PLAYING);
          }
        } else {
          /* buffering busy */
          if (!buffering && target_state == GST_STATE_PLAYING) {
            /* we were not buffering but PLAYING, PAUSE the pipeline. */
            gst_element_set_state (pipeline, GST_STATE_PAUSED);
          }
          buffering = TRUE;
        }
        break;
      }
      case ...

    [...]

Stream buffering

    +---------+     +---------+     +-------+
    | httpsrc |     | buffer  |     | demux |
    |        src - sink      src - sink     ....
    +---------+     +---------+     +-------+

In this case we are reading from a slow network source into a buffer element (such as queue2).

The buffer element has a low and high watermark expressed in bytes. The buffer uses the watermarks as follows:

- The buffer element will post BUFFERING messages until the high watermark is hit. This instructs the application to keep the pipeline PAUSED, which will eventually block the srcpad from pushing while data is prerolled in the sinks.

- When the high watermark is hit, a BUFFERING message with 100% will be posted, which instructs the application to continue playback.

- When, during playback, the low watermark is hit, the queue will start posting BUFFERING messages again, making the application PAUSE the pipeline again until the high watermark is hit again. This is called the rebuffering stage.

- During playback, the queue level will fluctuate between the high and the low watermark as a way to compensate for network irregularities.
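The watermark behaviour listed above amounts to a small hysteresis state machine. The sketch below is a minimal model of it, assuming a percentage computed relative to the high watermark. The WatermarkModel struct and model_update_level () are hypothetical names, and the actual buffering element may compute its percentage differently.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical model of a buffer element with two watermarks in bytes. */
typedef struct {
  uint64_t low_watermark;   /* rebuffering starts at or below this level */
  uint64_t high_watermark;  /* buffering ends at or above this level */
  int is_buffering;         /* non-zero while BUFFERING < 100% is reported */
} WatermarkModel;

/* Update the state for a new fill level and return the percentage that
 * would be reported in a BUFFERING message (100 means "done"). */
int
model_update_level (WatermarkModel *w, uint64_t level_bytes)
{
  assert (w->low_watermark < w->high_watermark);

  if (w->is_buffering) {
    /* keep buffering until the high watermark is hit */
    if (level_bytes >= w->high_watermark)
      w->is_buffering = 0;
  } else if (level_bytes <= w->low_watermark) {
    /* the low watermark was hit during playback: rebuffer */
    w->is_buffering = 1;
  }

  if (!w->is_buffering)
    return 100;

  /* while buffering the level is below the high watermark, so this is < 100 */
  return (int) (level_bytes * 100 / w->high_watermark);
}
```

Starting in the buffering state with watermarks of 1000 and 4000 bytes, a level of 2000 bytes reports 50%. Once 4000 bytes are reached the model reports 100% and keeps doing so while the level fluctuates between the watermarks, until the level drops to 1000 bytes and rebuffering starts.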

This buffering method is usable when the demuxer operates in push mode. Seeking in the stream requires the seek to happen in the network source. It is mostly desirable when the total duration of the file is not known, such as in live streaming or when efficient seeking is not possible/required.

The problem is configuring a good low and high watermark. Here are some ideas:

- It is possible to measure the network bandwidth and configure the low/high watermarks in such a way that buffering takes a fixed amount of time.

  The queue2 element in GStreamer core has the max-size-time property that, together with the use-rate-estimate property, does exactly that. Also the playbin buffer-duration property uses the rate estimate to scale the amount of data that is buffered.

- Based on the codec bitrate, it is also possible to set the watermarks in such a way that a fixed amount of data is buffered before playback starts. Normally, the buffering element doesn't know about the bitrate of the stream but it can get this with a query.

- Start with a fixed amount of bytes, measure the time between rebuffering and increase the queue size until the time between rebuffering is within the application's chosen limits.
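The arithmetic behind these ideas is small enough to sketch. The helpers below are hypothetical and independent of any GStreamer API: the first converts a measured bandwidth or codec bitrate into a watermark in bytes for a wanted buffering duration, and the second grows the queue size when rebuffering happens too often. Doubling the size is an arbitrary choice made for this sketch.

```c
#include <assert.h>
#include <stdint.h>

/* Bytes needed to hold duration_ms of data at bits_per_second.
 * Works for both a measured network bandwidth and a codec bitrate. */
uint64_t
model_bytes_for_duration (uint64_t bits_per_second, uint64_t duration_ms)
{
  return bits_per_second * duration_ms / 8 / 1000;
}

/* Adaptive sizing: if rebuffering happened sooner than the application
 * wants, double the queue size, otherwise keep it. */
uint64_t
model_next_queue_size (uint64_t current_bytes,
    uint64_t observed_interval_ms, uint64_t wanted_interval_ms)
{
  assert (current_bytes > 0);
  if (observed_interval_ms < wanted_interval_ms)
    return current_bytes * 2;
  return current_bytes;
}
```

For a 1 Mbit/s stream, buffering 2 seconds corresponds to a high watermark of 250000 bytes. A 64 KiB queue that rebuffers every 3 seconds when the application wants at least 10 seconds between rebuffering would be grown to 128 KiB.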

The buffering element can be inserted anywhere in the pipeline. You could, for example, insert the buffering element before a decoder. This would make it possible to set the low/high watermarks based on time.

The buffering flag on playbin performs buffering on the parsed data. Another advantage of doing the buffering at a later stage is that you can let the demuxer operate in pull mode. When reading data from a slow network drive (with filesrc) this can be an interesting way to buffer.

Download buffering

    +---------+     +---------+     +-------+
    | httpsrc |     | buffer  |     | demux |
    |        src - sink      src - sink     ....
    +---------+     +----|----+     +-------+
                         V
                        file

If we know the server is streaming a fixed length file to the client, the application can choose to download the entire file on disk. The buffer element will provide a push or pull based srcpad to the demuxer to navigate in the downloaded file.

This mode is only suitable when the client can determine the length of the file on the server.

In this case, buffering messages will be emitted as usual when the requested range is not within the downloaded area + buffersize. The buffering message will also contain an indication that incremental download is being performed. This flag can be used to let the application control the buffering in a more intelligent way, using the BUFFERING query, for example. See Buffering strategies.

Timeshift buffering

    +---------+     +---------+     +-------+
    | httpsrc |     | buffer  |     | demux |
    |        src - sink      src - sink     ....
    +---------+     +----|----+     +-------+
                         V
                  file-ringbuffer

In this mode, a fixed size ringbuffer is kept to download the server content. This allows for seeking in the buffered data. Depending on the size of the ringbuffer one can seek further back in time.

This mode is suitable for all live streams. As with the incremental download mode, buffering messages are emitted along with an indication that timeshifting download is in progress.

Live buffering

In live pipelines we usually introduce some fixed latency between the capture and the playback elements. This latency can be introduced by a queue (such as a jitterbuffer) or by other means (in the audiosink).

Buffering messages can be emitted in those live pipelines as well and serve as an indication to the user of the latency buffering. The application usually does not react to these buffering messages with a state change.

Buffering strategies

What follows are some ideas for implementing different buffering strategies based on the buffering messages and buffering query.

No-rebuffer strategy

We would like to buffer enough data in the pipeline so that playback continues without interruptions. To implement this, we need to know the total remaining playback time in the file and the total remaining download time. If the remaining buffering (download) time is less than the remaining playback time, we can start playback without interruptions.

We have all this information available with the DURATION, POSITION and BUFFERING queries. We need to periodically execute the buffering query to get the current buffering status. We also need to have a large enough buffer to hold the complete file, worst case. It is best to use this buffering strategy with download buffering (see Download buffering).

This is what the code would look like:

    #include <gst/gst.h>

    GstState target_state;
    static gboolean is_live;
    static gboolean is_buffering;

    static gboolean
    buffer_timeout (gpointer data)
    {
      GstElement *pipeline = data;
      GstQuery *query;
      gboolean busy;
      gint percent;
      gint64 estimated_total;
      gint64 position, duration;
      guint64 play_left;

      query = gst_query_new_buffering (GST_FORMAT_TIME);

      if (!gst_element_query (pipeline, query)) {
        /* the query failed, release it and try again on the next timeout */
        gst_query_unref (query);
        return TRUE;
      }

      gst_query_parse_buffering_percent (query, &busy, &percent);
      gst_query_parse_buffering_range (query, NULL, NULL, NULL, &estimated_total);
      gst_query_unref (query);

      if (estimated_total == -1)
        estimated_total = 0;

      /* calculate the remaining playback time */
      if (!gst_element_query_position (pipeline, GST_FORMAT_TIME, &position))
        position = -1;
      if (!gst_element_query_duration (pipeline, GST_FORMAT_TIME, &duration))
        duration = -1;

      if (duration != -1 && position != -1)
        play_left = GST_TIME_AS_MSECONDS (duration - position);
      else
        play_left = 0;

      g_message ("play_left %" G_GUINT64_FORMAT ", estimated_total %"
          G_GINT64_FORMAT ", percent %d", play_left, estimated_total, percent);

      /* we are buffering or the estimated download time is bigger than the
       * remaining playback time. We keep buffering. */
      is_buffering = (busy || estimated_total * 1.1 > play_left);

      if (!is_buffering)
        gst_element_set_state (pipeline, target_state);

      /* returning TRUE keeps the timeout running */
      return is_buffering;
    }

    static void
    on_message_buffering (GstBus *bus, GstMessage *message, gpointer user_data)
    {
      GstElement *pipeline = user_data;
      gint percent;

      /* no state management needed for live pipelines */
      if (is_live)
        return;

      gst_message_parse_buffering (message, &percent);

      if (percent < 100) {
        /* buffering busy */
        if (!is_buffering) {
          is_buffering = TRUE;
          if (target_state == GST_STATE_PLAYING) {
            /* we were not buffering but PLAYING, PAUSE the pipeline. */
            gst_element_set_state (pipeline, GST_STATE_PAUSED);
          }
        }
      }
    }

    static void
    on_message_async_done (GstBus *bus, GstMessage *message, gpointer user_data)
    {
      GstElement *pipeline = user_data;

      if (!is_buffering)
        gst_element_set_state (pipeline, target_state);
      else
        g_timeout_add (500, buffer_timeout, pipeline);
    }

    gint
    main (gint argc, gchar *argv[])
    {
      GstElement *pipeline;
      GMainLoop *loop;
      GstBus *bus;
      GstStateChangeReturn ret;

      /* init GStreamer */
      gst_init (&argc, &argv);
      loop = g_main_loop_new (NULL, FALSE);

      /* make sure we have a URI */
      if (argc != 2) {
        g_print ("Usage: %s <URI>\n", argv[0]);
        return -1;
      }

      /* set up */
      pipeline = gst_element_factory_make ("playbin", "pipeline");
      g_object_set (G_OBJECT (pipeline), "uri", argv[1], NULL);
      g_object_set (G_OBJECT (pipeline), "flags", 0x697, NULL);

      bus = gst_pipeline_get_bus (GST_PIPELINE (pipeline));
      gst_bus_add_signal_watch (bus);

      g_signal_connect (bus, "message::buffering",
          (GCallback) on_message_buffering, pipeline);
      g_signal_connect (bus, "message::async-done",
          (GCallback) on_message_async_done, pipeline);
      gst_object_unref (bus);

      is_buffering = FALSE;
      target_state = GST_STATE_PLAYING;
      ret = gst_element_set_state (pipeline, GST_STATE_PAUSED);

      switch (ret) {
        case GST_STATE_CHANGE_SUCCESS:
          is_live = FALSE;
          break;

        case GST_STATE_CHANGE_FAILURE:
          g_warning ("failed to PAUSE");
          return -1;

        case GST_STATE_CHANGE_NO_PREROLL:
          is_live = TRUE;
          break;

        default:
          break;
      }

      /* now run */
      g_main_loop_run (loop);

      /* also clean up */
      gst_element_set_state (pipeline, GST_STATE_NULL);
      gst_object_unref (GST_OBJECT (pipeline));
      g_main_loop_unref (loop);

      return 0;
    }

See how we set the pipeline to the PAUSED state first. We will receive buffering messages during the preroll state when buffering is needed. When we are prerolled (on_message_async_done), we check whether buffering is going on; if not, we start playback. If buffering was going on, we start a timeout to poll the buffering state. If the estimated time to download is less than the remaining playback time, we start playback.

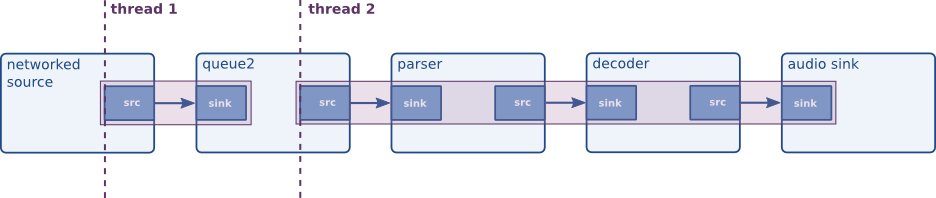

Threads

GStreamer is inherently multi-threaded, and is fully thread-safe. Most threading internals are hidden from the application, which should make application development easier. However, in some cases, applications may want to have influence on some parts of those. GStreamer allows applications to force the use of multiple threads over some parts of a pipeline. See When would you want to force a thread?

GStreamer can also notify you when threads are created so that you can configure things such as the thread priority or the threadpool to use. See Configuring Threads in GStreamer.

Scheduling in GStreamer

Each element in the GStreamer pipeline decides how it is going to be scheduled. Elements can choose if their pads are to be scheduled push-based or pull-based. An element can, for example, choose to start a thread to start pulling from the sink pad or/and start pushing on the source pad. An element can also choose to use the upstream or downstream thread for its data processing in push and pull mode respectively. GStreamer does not pose any restrictions on how the element chooses to be scheduled. See the Plugin Writer Guide for more details.

What will happen in any case is that some elements will start a thread for their data processing, called the “streaming threads”. The streaming threads, or GstTask objects, are created from a GstTaskPool when the element needs to make a streaming thread. In the next section we see how we can receive notifications of the tasks and pools.

Configuring Threads in GStreamer

A STREAM_STATUS message is posted on the bus to inform you about the status of the streaming threads. You will get the following information from the message:

- When a new thread is about to be created, you will be notified of this with a GST_STREAM_STATUS_TYPE_CREATE type. It is then possible to configure a GstTaskPool in the GstTask. The custom taskpool will provide custom threads for the task to implement the streaming threads.

  This message needs to be handled synchronously if you want to configure a custom taskpool. If you don't configure the taskpool on the task when this message returns, the task will use its default pool.

- When a thread is entered or left. This is the moment where you could configure thread priorities. You also get a notification when a thread is destroyed.

- You get messages when the thread starts, pauses and stops. This could be used to visualize the status of streaming threads in a GUI application.

We will now look at some examples in the next sections.

Boost priority of a thread

    .----------.    .----------.
    | fakesrc  |    | fakesink |
    |         src->sink        |
    '----------'    '----------'

Let's look at the simple pipeline above. We would like to boost the priority of the streaming thread. It will be the fakesrc element that starts the streaming thread for generating the fake data and pushing it to the peer fakesink. The flow for changing the priority would go like this:

- When going from READY to PAUSED state, fakesrc will require a streaming thread for pushing data into the fakesink. It will post a STREAM_STATUS message indicating its requirement for a streaming thread.

- The application will react to the STREAM_STATUS messages with a sync bus handler. It will then configure a custom GstTaskPool on the GstTask inside the message. The custom taskpool is responsible for creating the threads. In this example we will make a thread with a higher priority.

- Alternatively, since the sync message is called in the thread context, you can use thread ENTER/LEAVE notifications to change the priority or scheduling policy of the current thread.

In a first step we need to implement a custom GstTaskPool that we can configure on the task. Below is the implementation of a GstTaskPool subclass that uses pthreads to create a SCHED_RR real-time thread. Note that creating real-time threads might require extra privileges.

    #include <gst/gst.h>
    #include <pthread.h>

    /* type boilerplate required by G_DEFINE_TYPE below */
    #define TEST_TYPE_RT_POOL (test_rt_pool_get_type ())

    typedef struct
    {
      GstTaskPool object;
    } TestRTPool;

    typedef struct
    {
      GstTaskPoolClass parent_class;
    } TestRTPoolClass;

    typedef struct
    {
      pthread_t thread;
    } TestRTId;

    G_DEFINE_TYPE (TestRTPool, test_rt_pool, GST_TYPE_TASK_POOL);

    static void
    default_prepare (GstTaskPool * pool, GError ** error)
    {
      /* we don't do anything here. We could construct a pool of threads here that
       * we could reuse later but we don't */
    }

    static void
    default_cleanup (GstTaskPool * pool)
    {
    }

    static gpointer
    default_push (GstTaskPool * pool, GstTaskPoolFunction func, gpointer data,
        GError ** error)
    {
      TestRTId *tid;
      gint res;
      pthread_attr_t attr;
      struct sched_param param;

      tid = g_slice_new0 (TestRTId);

      /* request a SCHED_RR thread with an explicit priority */
      pthread_attr_init (&attr);
      if ((res = pthread_attr_setschedpolicy (&attr, SCHED_RR)) != 0)
        g_warning ("setschedpolicy: failure: %s", g_strerror (res));

      param.sched_priority = 50;
      if ((res = pthread_attr_setschedparam (&attr, &param)) != 0)
        g_warning ("setschedparam: failure: %s", g_strerror (res));

      if ((res = pthread_attr_setinheritsched (&attr, PTHREAD_EXPLICIT_SCHED)) != 0)
        g_warning ("setinheritsched: failure: %s", g_strerror (res));

      res = pthread_create (&tid->thread, &attr, (void *(*)(void *)) func, data);
      pthread_attr_destroy (&attr);

      if (res != 0) {
        g_set_error (error, G_THREAD_ERROR, G_THREAD_ERROR_AGAIN,
            "Error creating thread: %s", g_strerror (res));
        g_slice_free (TestRTId, tid);
        tid = NULL;
      }

      return tid;
    }

    static void
    default_join (GstTaskPool * pool, gpointer id)
    {
      TestRTId *tid = (TestRTId *) id;

      pthread_join (tid->thread, NULL);

      g_slice_free (TestRTId, tid);
    }

    static void
    test_rt_pool_class_init (TestRTPoolClass * klass)
    {
      GstTaskPoolClass *gsttaskpool_class;

      gsttaskpool_class = (GstTaskPoolClass *) klass;

      gsttaskpool_class->prepare = default_prepare;
      gsttaskpool_class->cleanup = default_cleanup;
      gsttaskpool_class->push = default_push;
      gsttaskpool_class->join = default_join;
    }

    static void
    test_rt_pool_init (TestRTPool * pool)
    {
    }

    GstTaskPool *
    test_rt_pool_new (void)
    {
      GstTaskPool *pool;

      pool = g_object_new (TEST_TYPE_RT_POOL, NULL);

      return pool;
    }

The important function to implement when writing a taskpool is the “push” function. The implementation should start a thread that calls the given function. More involved implementations might want to keep some threads around in a pool because creating and destroying threads is not always the fastest operation.

In a next step we need to actually configure the custom taskpool when the fakesrc needs it. For this we intercept the STREAM_STATUS messages with a sync handler.

    static GMainLoop *loop;

    static void
    on_stream_status (GstBus *bus, GstMessage *message, gpointer user_data)
    {
      GstStreamStatusType type;
      GstElement *owner;
      const GValue *val;
      GstTask *task = NULL;

      gst_message_parse_stream_status (message, &type, &owner);

      val = gst_message_get_stream_status_object (message);

      /* see if we know how to deal with this object */
      if (G_VALUE_TYPE (val) == GST_TYPE_TASK) {
        task = g_value_get_object (val);
      }

      switch (type) {
        case GST_STREAM_STATUS_TYPE_CREATE:
          if (task) {
            GstTaskPool *pool;

            /* install the custom pool before the thread is created */
            pool = test_rt_pool_new ();

            gst_task_set_pool (task, pool);
          }
          break;
        default:
          break;
      }
    }

    static void
    on_error (GstBus *bus, GstMessage *message, gpointer user_data)
    {
      g_message ("received ERROR");
      g_main_loop_quit (loop);
    }

    static void
    on_eos (GstBus *bus, GstMessage *message, gpointer user_data)
    {
      g_main_loop_quit (loop);
    }

    int
    main (int argc, char *argv[])
    {
      GstElement *bin, *fakesrc, *fakesink;
      GstBus *bus;
      GstStateChangeReturn ret;

      gst_init (&argc, &argv);

      /* create a new bin to hold the elements */
      bin = gst_pipeline_new ("pipeline");
      g_assert (bin);

      /* create a source */
      fakesrc = gst_element_factory_make ("fakesrc", "fakesrc");
      g_assert (fakesrc);
      g_object_set (fakesrc, "num-buffers", 50, NULL);

      /* and a sink */
      fakesink = gst_element_factory_make ("fakesink", "fakesink");
      g_assert (fakesink);

      /* add objects to the main pipeline */
      gst_bin_add_many (GST_BIN (bin), fakesrc, fakesink, NULL);

      /* link the elements */
      gst_element_link (fakesrc, fakesink);

      loop = g_main_loop_new (NULL, FALSE);

      /* get the bus, we need to install a sync handler */
      bus = gst_pipeline_get_bus (GST_PIPELINE (bin));
      gst_bus_enable_sync_message_emission (bus);
      gst_bus_add_signal_watch (bus);

      g_signal_connect (bus, "sync-message::stream-status",
          (GCallback) on_stream_status, NULL);
      g_signal_connect (bus, "message::error",
          (GCallback) on_error, NULL);
      g_signal_connect (bus, "message::eos",
          (GCallback) on_eos, NULL);

      /* start playing; the change may complete asynchronously, so only an
       * explicit failure is treated as an error */
      ret = gst_element_set_state (bin, GST_STATE_PLAYING);
      if (ret == GST_STATE_CHANGE_FAILURE) {
        g_message ("failed to change state");
        return -1;
      }

      /* Run event loop listening for bus messages until EOS or ERROR */
      g_main_loop_run (loop);

      /* stop the bin */
      gst_element_set_state (bin, GST_STATE_NULL);
      gst_object_unref (bus);
      gst_object_unref (bin);
      g_main_loop_unref (loop);

      return 0;
    }

Note that this program likely needs root permissions in order to create real-time threads. When the thread can't be created, the state change function will fail, which we catch in the application above.

When there are multiple threads in the pipeline, you will receive multiple STREAM_STATUS messages. You should use the owner of the message, which is likely the pad or the element that starts the thread, to figure out what the function of this thread is in the context of the application.

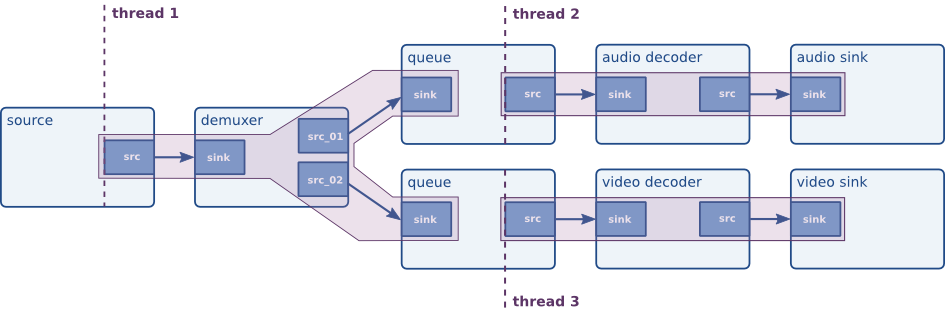

When would you want to force a thread?

We have seen that threads are created by elements but it is also possible to insert elements in the pipeline for the sole purpose of forcing a new thread in the pipeline.

There are several reasons to force the use of threads. However, for performance reasons, you never want to use one thread for every element out there, since that will create some overhead. Let's now list some situations where threads can be particularly useful:

- Data buffering, for example when dealing with network streams or when recording data from a live stream such as a video or audio card. Short hiccups elsewhere in the pipeline will not cause data loss. See also Stream buffering about network buffering with queue2.

- Synchronizing output devices, e.g. when playing a stream containing both video and audio data. By using threads for both outputs, they will run independently and their synchronization will be better.

Above, we've mentioned the “queue” element several times now. A queue is the thread boundary element through which you can force the use of threads. It does so by using a classic producer/consumer model as learned in threading classes at universities all around the world. By doing this, it acts both as a means to make data throughput between threads threadsafe, and it can also act as a buffer. Queues have several GObject properties to be configured for specific uses. For example, you can set lower and upper thresholds for the element. If there's less data than the lower threshold (default: disabled), it will block output. If there's more data than the upper threshold, it will block input or (if configured to do so) drop data.

To use a queue (and therefore force the use of two distinct threads in the pipeline), one can simply create a “queue” element and put this in as part of the pipeline. GStreamer will take care of all threading details internally.

Pipeline manipulation

This chapter presents many ways in which you can manipulate pipelines from your application. These are some of the topics that will be covered:

- How to insert data from an application into a pipeline

- How to read data from a pipeline

- How to listen to a pipeline's data processing.

Parts of this chapter are very low level so you'll need some programming experience and a good understanding of GStreamer to follow them.

Using probes

Probing is best envisioned as having access to a pad listener. Technically, a probe is nothing more than a callback that can be attached to a pad using gst_pad_add_probe (). Conversely, you can use gst_pad_remove_probe () to remove the callback. While attached, the probe notifies you of any activity on the pad. You can define what kind of notifications you are interested in when you add the probe.

Probe types:

- A buffer is pushed or pulled. Specify GST_PAD_PROBE_TYPE_BUFFER when registering the probe. Because the pad can be scheduled in different ways, you can also specify which scheduling mode you are interested in with the optional GST_PAD_PROBE_TYPE_PUSH and GST_PAD_PROBE_TYPE_PULL flags. You can use this probe to inspect, modify or drop the buffer. See Data probes.

- A buffer list is pushed. Use GST_PAD_PROBE_TYPE_BUFFER_LIST when registering the probe.

- An event travels over a pad. Use the GST_PAD_PROBE_TYPE_EVENT_DOWNSTREAM and GST_PAD_PROBE_TYPE_EVENT_UPSTREAM flags to select downstream and upstream events. There is also a convenience GST_PAD_PROBE_TYPE_EVENT_BOTH flag to be notified of events going in both directions. By default, flush events do not cause a notification. You need to explicitly enable GST_PAD_PROBE_TYPE_EVENT_FLUSH to receive callbacks from flushing events. Events are only ever notified in push mode. You can use this type of probe to inspect, modify or drop the event.

- A query travels over a pad. Use the GST_PAD_PROBE_TYPE_QUERY_DOWNSTREAM and GST_PAD_PROBE_TYPE_QUERY_UPSTREAM flags to select downstream and upstream queries. The convenience GST_PAD_PROBE_TYPE_QUERY_BOTH flag can also be used to select both directions. Query probes are notified twice: when the query travels upstream/downstream and when the query result is returned. You can select in what stage the callback will be called with GST_PAD_PROBE_TYPE_PUSH and GST_PAD_PROBE_TYPE_PULL, respectively when the query is performed and when the query result is returned.

  You can use a query probe to inspect or modify queries, or even to answer them in the probe callback. To answer a query, you place the result value in the query and return GST_PAD_PROBE_DROP from the callback.

- In addition to notifying you of dataflow, you can also ask the probe to block the dataflow when the callback returns. This is called a blocking probe and is activated by specifying the GST_PAD_PROBE_TYPE_BLOCK flag. You can use this flag with the other flags to only block dataflow on selected activity. A pad becomes unblocked again if you remove the probe or when you return GST_PAD_PROBE_REMOVE from the callback. You can let only the currently blocked item pass by returning GST_PAD_PROBE_PASS from the callback; the pad will block again on the next item.

  Blocking probes are used to temporarily block pads because they are unlinked or because you are going to unlink them. If the dataflow were not blocked, the pipeline would go into an error state when data is pushed on an unlinked pad. We will see how to use blocking probes to partially preroll a pipeline.

- Be notified when no activity is happening on a pad. You install this probe with the GST_PAD_PROBE_TYPE_IDLE flag. You can specify GST_PAD_PROBE_TYPE_PUSH and/or GST_PAD_PROBE_TYPE_PULL to only be notified depending on the pad scheduling mode. The IDLE probe is also a blocking probe in that it will not let any data pass on the pad for as long as the IDLE probe is installed.

  You can use idle probes to dynamically relink a pad. We will see how to use idle probes to replace an element in the pipeline. See also Dynamically changing the pipeline.

Data probes

Data probes notify you when there is data passing on a pad. Pass GST_PAD_PROBE_TYPE_BUFFER and/or GST_PAD_PROBE_TYPE_BUFFER_LIST to gst_pad_add_probe () to create this kind of probe. Most buffer operations that elements can do in _chain () functions can also be done in probe callbacks.

Data probes run in the pipeline's streaming thread context, so callbacks should avoid blocking and should keep their work short and self-contained. Blocking or long-running callbacks can hurt the pipeline's performance or, in case of bugs, lead to deadlocks or crashes. More precisely, a probe callback should usually neither call GUI-related functions nor try to change the state of the pipeline. An application may post custom messages on the pipeline's bus to communicate with the main application thread and have it do things like stop the pipeline.

The following is an example of using data probes. Compare this program's output with that of gst-launch-1.0 videotestsrc ! xvimagesink if you are not sure what to look for:

#include <gst/gst.h>

static GstPadProbeReturn
cb_have_data (GstPad          *pad,
              GstPadProbeInfo *info,
              gpointer         user_data)
{
  gint x, y;
  GstMapInfo map;
  guint16 *ptr, t;
  GstBuffer *buffer;

  buffer = GST_PAD_PROBE_INFO_BUFFER (info);

  buffer = gst_buffer_make_writable (buffer);

  /* Making a buffer writable can fail (for example if it
   * cannot be copied and is used more than once)
   */
  if (buffer == NULL)
    return GST_PAD_PROBE_OK;

  /* Mapping a buffer can fail (non-writable) */
  if (gst_buffer_map (buffer, &map, GST_MAP_WRITE)) {
    ptr = (guint16 *) map.data;
    /* invert data: mirror each 384-pixel row horizontally */
    for (y = 0; y < 288; y++) {
      for (x = 0; x < 384 / 2; x++) {
        t = ptr[384 - 1 - x];
        ptr[384 - 1 - x] = ptr[x];
        ptr[x] = t;
      }
      ptr += 384;
    }
    gst_buffer_unmap (buffer, &map);
  }

  /* hand the (possibly new) writable buffer back to the pad */
  GST_PAD_PROBE_INFO_DATA (info) = buffer;

  return GST_PAD_PROBE_OK;
}

gint
main (gint   argc,
      gchar *argv[])
{
  GMainLoop *loop;
  GstElement *pipeline, *src, *sink, *filter, *csp;
  GstCaps *filtercaps;
  GstPad *pad;

  /* init GStreamer */
  gst_init (&argc, &argv);
  loop = g_main_loop_new (NULL, FALSE);

  /* build */
  pipeline = gst_pipeline_new ("my-pipeline");
  src = gst_element_factory_make ("videotestsrc", "src");
  if (src == NULL)
    g_error ("Could not create 'videotestsrc' element");

  filter = gst_element_factory_make ("capsfilter", "filter");
  g_assert (filter != NULL); /* should always exist */

  csp = gst_element_factory_make ("videoconvert", "csp");
  if (csp == NULL)
    g_error ("Could not create 'videoconvert' element");

  sink = gst_element_factory_make ("xvimagesink", "sink");
  if (sink == NULL) {
    sink = gst_element_factory_make ("ximagesink", "sink");
    if (sink == NULL)
      g_error ("Could not create either 'xvimagesink' or 'ximagesink' element");
  }

  gst_bin_add_many (GST_BIN (pipeline), src, filter, csp, sink, NULL);
  gst_element_link_many (src, filter, csp, sink, NULL);
  filtercaps = gst_caps_new_simple ("video/x-raw",
               "format", G_TYPE_STRING, "RGB16",
               "width", G_TYPE_INT, 384,
               "height", G_TYPE_INT, 288,
               "framerate", GST_TYPE_FRACTION, 25, 1,
               NULL);
  g_object_set (G_OBJECT (filter), "caps", filtercaps, NULL);
  gst_caps_unref (filtercaps);

  pad = gst_element_get_static_pad (src, "src");
  gst_pad_add_probe (pad, GST_PAD_PROBE_TYPE_BUFFER,
      (GstPadProbeCallback) cb_have_data, NULL, NULL);
  gst_object_unref (pad);

  /* run */
  gst_element_set_state (pipeline, GST_STATE_PLAYING);

  /* wait until it's up and running or failed */
  if (gst_element_get_state (pipeline, NULL, NULL, -1) == GST_STATE_CHANGE_FAILURE) {
    g_error ("Failed to go into PLAYING state");
  }

  g_print ("Running ...\n");
  g_main_loop_run (loop);

  /* exit */
  gst_element_set_state (pipeline, GST_STATE_NULL);
  gst_object_unref (pipeline);

  return 0;
}
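The probe callback above hardcodes the 384x288 frame size set on the capsfilter. As a minimal sketch in plain C (no GStreamer calls), the same row mirroring can be written for arbitrary dimensions. The function name and its parameters are hypothetical; in a real probe the width, height and row stride would have to come from the negotiated caps rather than from constants.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Mirror every row of a 16-bit-per-pixel image in place.
 * stride_px is the distance between the starts of two consecutive rows,
 * measured in pixels; it is assumed to be at least `width`. */
void
mirror_rows_u16 (uint16_t *pixels, size_t width, size_t height,
    size_t stride_px)
{
  assert (pixels != NULL && stride_px >= width);

  for (size_t y = 0; y < height; y++) {
    uint16_t *row = pixels + y * stride_px;

    /* swap pixel x with its counterpart counted from the right edge */
    for (size_t x = 0; x < width / 2; x++) {
      uint16_t t = row[width - 1 - x];
      row[width - 1 - x] = row[x];
      row[x] = t;
    }
  }
}
```

Padding pixels between `width` and `stride_px` are left untouched, which is why the stride is passed separately from the width.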

Strictly speaking, a pad probe callback is only allowed to modify the buffer content if the buffer is writable. Whether this is the case or not depends a lot on the pipeline and the elements involved. Often enough, this is the case, but sometimes it is not, and if it is not then unexpected modification of the data or metadata can introduce bugs that are very hard to debug and track down. You can check if a buffer is writable with gst_buffer_is_writable (). Since you can pass back a different buffer than the one passed in, it is a good idea to make the buffer writable in the callback function with gst_buffer_make_writable ().

Pad probes are best suited for looking at data as it passes through the pipeline. If you need to modify data, you should rather write your own GStreamer element. Base classes like GstAudioFilter, GstVideoFilter or GstBaseTransform make this fairly easy.

If you just want to inspect buffers as they pass through the pipeline, you don't even need to set up pad probes. You could also just insert an identity element into the pipeline and connect to its "handoff" signal. The identity element also provides a few useful debugging tools like the dump and last-message properties; the latter is enabled by passing the '-v' switch to gst-launch and setting the silent property on the identity to FALSE.

Manually adding or removing data from/to a pipeline

Many people have expressed the wish to use their own sources to inject data into a pipeline, others, the wish to grab a pipeline's output and take care of it in their application. While these methods are strongly discouraged, GStreamer offers support for them -- Beware! You need to know what you are doing --. Since you don't have any support from a base class you need to thoroughly understand state changes and synchronization. If it doesn't work, there are a million ways to shoot yourself in the foot. It's always better to simply write a plugin and have the base class manage it. See the Plugin Writer's Guide for more information on this topic. Additionally, review the next section, which explains how to statically embed plugins in your application.

There are two possible elements that you can use for the above-mentioned purposes: appsrc (an imaginary source) and appsink (an imaginary sink). The same method applies to these elements. We will discuss how to use them to insert (using appsrc) or to grab (using appsink) data from a pipeline, and how to set negotiation.

Both appsrc and appsink provide 2 sets of API. One API uses standard GObject (action) signals and properties. The same API is also available as a regular C API. The C API is more performant but requires you to link to the app library in order to use the elements.

Inserting data with appsrc

Let's take a look at appsrc and how to insert application data into the pipeline.

appsrc has some configuration options that control the way it operates. You should decide about the following:

- Whether appsrc operates in push or pull mode. The stream-type property controls this. A random-access stream-type makes appsrc activate pull mode scheduling, while the other stream-types activate push mode.

- The caps of the buffers that appsrc will push out. This needs to be configured with the caps property. This property must be set to fixed caps and will be used to negotiate a format downstream.

- Whether appsrc operates in live mode or not. This is configured with the is-live property. When operating in live mode, it is also important to set the min-latency and max-latency properties. min-latency should be set to the amount of time it takes between capturing a buffer and pushing it into appsrc. In live mode, you should timestamp the buffers with the pipeline running-time at which the first byte of the buffer was captured before feeding them to appsrc. You can let appsrc do the timestamping with the do-timestamp property, but then min-latency must be set to 0 because appsrc timestamps based on what the running-time was when it got a given buffer.

- The format of the SEGMENT event that appsrc will push. This format has implications for how the buffers' running-time will be calculated, so you must be sure you understand this. For live sources you probably want to set the format property to GST_FORMAT_TIME. For non-live sources, it depends on the media type that you are handling. If you plan to timestamp the buffers, you should probably use GST_FORMAT_TIME as format; if you don't, GST_FORMAT_BYTES might be appropriate.

- If appsrc operates in random-access mode, it is important to configure the size property with the number of bytes in the stream. This will allow downstream elements to know the size of the media and seek to the end of the stream when needed.

The main way of handing data to appsrc is by using the gst_app_src_push_buffer () function or by emitting the push-buffer action signal. This will put the buffer onto a queue from which appsrc will read in its streaming thread. It's important to note that data transport will not happen from the thread that performed the push-buffer call.

The max-bytes property controls how much data can be queued in appsrc before appsrc considers the queue full. A filled internal queue will always emit the enough-data signal, which tells the application that it should stop pushing data into appsrc. The block property will cause appsrc to block the push-buffer method until free space becomes available again.

When the internal queue is running out of data, the need-data signal is emitted, which signals the application that it should start pushing more data into appsrc.

In addition to the need-data and enough-data signals, appsrc can emit seek-data when the stream-type property is set to seekable or random-access. The signal argument will contain the new desired position in the stream expressed in the unit set with the format property. After receiving the seek-data signal, the application should push buffers from the new position.

When the last byte is pushed into appsrc, you must call gst_app_src_end_of_stream () to make it send an EOS downstream.

These signals allow the application to operate appsrc in push and pull mode as will be explained next.

Using appsrc in push mode

When appsrc is configured in push mode (stream-type is stream or seekable), the application repeatedly calls the push-buffer method with a new buffer. Optionally, the queue size in the appsrc can be controlled with the enough-data and need-data signals by respectively stopping/starting the push-buffer calls. The value of the min-percent property defines how empty the internal appsrc queue needs to be before the need-data signal is issued. You can set this to some positive value to avoid completely draining the queue.

Don't forget to implement a seek-data callback when the stream-type is set to GST_APP_STREAM_TYPE_SEEKABLE.

Use this mode when implementing various network protocols or hardware devices.

Using appsrc in pull mode

In pull mode, data is fed to appsrc from the need-data signal handler. You should push exactly the number of bytes requested in the need-data signal. You are only allowed to push fewer bytes when you are at the end of the stream.

Use this mode for file access or other randomly accessible sources.

Appsrc example

This example application will generate black/white (it switches every second) video to an Xv-window output by using appsrc as a source with caps to force a format. We use a colorspace conversion element to make sure that we feed the right format to the X server. We configure a video stream with a variable framerate (0/1) and we set the timestamps on the outgoing buffers in such a way that we play 2 frames per second.

Note how we use the pull mode method of pushing new buffers into appsrc although appsrc is running in push mode.

#include <gst/gst.h>

static GMainLoop *loop;

static void
cb_need_data (GstElement *appsrc,
          guint       unused_size,
          gpointer    user_data)
{
  static gboolean white = FALSE;
  static GstClockTime timestamp = 0;
  GstBuffer *buffer;
  guint size;
  GstFlowReturn ret;

  /* one RGB16 frame: width * height * 2 bytes per pixel */
  size = 384 * 288 * 2;

  buffer = gst_buffer_new_allocate (NULL, size, NULL);

  /* this makes the image black/white */
  gst_buffer_memset (buffer, 0, white ? 0xff : 0x0, size);

  white = !white;

  /* each frame lasts half a second, so we play 2 frames per second */
  GST_BUFFER_PTS (buffer) = timestamp;
  GST_BUFFER_DURATION (buffer) = gst_util_uint64_scale_int (1, GST_SECOND, 2);

  timestamp += GST_BUFFER_DURATION (buffer);

  /* the push-buffer action signal does not take ownership of the buffer */
  g_signal_emit_by_name (appsrc, "push-buffer", buffer, &ret);
  gst_buffer_unref (buffer);

  if (ret != GST_FLOW_OK) {
    /* something wrong, stop pushing */
    g_main_loop_quit (loop);
  }
}

gint
main (gint   argc,
      gchar *argv[])
{
  GstElement *pipeline, *appsrc, *conv, *videosink;

  /* init GStreamer */
  gst_init (&argc, &argv);
  loop = g_main_loop_new (NULL, FALSE);

  /* setup pipeline */
  pipeline = gst_pipeline_new ("pipeline");
  appsrc = gst_element_factory_make ("appsrc", "source");
  conv = gst_element_factory_make ("videoconvert", "conv");
  videosink = gst_element_factory_make ("xvimagesink", "videosink");

  /* setup */
  g_object_set (G_OBJECT (appsrc), "caps",
        gst_caps_new_simple ("video/x-raw",
                     "format", G_TYPE_STRING, "RGB16",
                     "width", G_TYPE_INT, 384,
                     "height", G_TYPE_INT, 288,
                     "framerate", GST_TYPE_FRACTION, 0, 1,
                     NULL), NULL);
  gst_bin_add_many (GST_BIN (pipeline), appsrc, conv, videosink, NULL);
  gst_element_link_many (appsrc, conv, videosink, NULL);

  /* setup appsrc */
  g_object_set (G_OBJECT (appsrc),
        "stream-type", 0,
        "format", GST_FORMAT_TIME, NULL);
  g_signal_connect (appsrc, "need-data", G_CALLBACK (cb_need_data), NULL);

  /* play */
  gst_element_set_state (pipeline, GST_STATE_PLAYING);
  g_main_loop_run (loop);

  /* clean up */
  gst_element_set_state (pipeline, GST_STATE_NULL);
  gst_object_unref (GST_OBJECT (pipeline));
  g_main_loop_unref (loop);

  return 0;
}
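The example accumulates the timestamp by adding a fixed duration per frame, which is exact at 2 frames per second. For rates whose frame duration is not a whole number of nanoseconds, one alternative is to derive each timestamp from the frame index so that rounding errors do not add up. The following plain-C sketch shows that arithmetic; the function names are hypothetical, and the plain multiplication can overflow for very large frame numbers, which is the kind of problem gst_util_uint64_scale () exists to avoid.

```c
#include <assert.h>
#include <stdint.h>

#define NSEC_PER_SEC UINT64_C(1000000000)       /* same value as GST_SECOND */

/* Presentation time, in nanoseconds, of frame number n at a rate of
 * fps_n / fps_d frames per second. */
uint64_t
frame_pts_ns (uint64_t n, uint32_t fps_n, uint32_t fps_d)
{
  assert (fps_n > 0 && fps_d > 0);
  return n * NSEC_PER_SEC * fps_d / fps_n;
}

/* Duration of frame n, chosen so that consecutive frames never overlap
 * and never leave a gap. */
uint64_t
frame_duration_ns (uint64_t n, uint32_t fps_n, uint32_t fps_d)
{
  return frame_pts_ns (n + 1, fps_n, fps_d) - frame_pts_ns (n, fps_n, fps_d);
}
```

At 2/1 frames per second this reproduces the half-second steps of the example; at 30000/1001 the individual durations vary by a nanosecond, but frame 30000 still starts at exactly 1001 seconds.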

Grabbing data with appsink

Compared to appsrc, appsink is a little easier to use. It also supports pull and push-based modes for getting data from the pipeline.

The normal way of retrieving samples from appsink is by using the gst_app_sink_pull_sample() and gst_app_sink_pull_preroll() methods or by using the pull-sample and pull-preroll signals. These methods block until a sample becomes available in the sink or until the sink is shut down or reaches EOS.

appsink will internally use a queue to collect buffers from the streaming thread. If the application is not pulling samples fast enough, this queue will consume a lot of memory over time. The max-buffers property can be used to limit the queue size. The drop property controls whether the streaming thread blocks or if older buffers are dropped when the maximum queue size is reached. Note that blocking the streaming thread can negatively affect real-time performance and should be avoided.
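The difference between blocking and dropping can be illustrated with a small bounded queue in plain C. This is a hypothetical model, not appsink's code: `MODEL_CAPACITY` stands in for max-buffers, the `drop` field stands in for the drop property, and a `false` return from the push function marks the point where a real streaming thread would block.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

#define MODEL_CAPACITY 4        /* models max-buffers; arbitrary for this sketch */

typedef struct
{
  int items[MODEL_CAPACITY];
  size_t head;                  /* index of the oldest item */
  size_t count;                 /* number of queued items */
  bool drop;                    /* models the drop property */
} SinkQueueModel;

/* Returns true if the item was queued. Returns false when the queue is
 * full and drop is disabled, where a real streaming thread would block. */
bool
sink_queue_push (SinkQueueModel *q, int item)
{
  assert (q != NULL);
  if (q->count == MODEL_CAPACITY) {
    if (!q->drop)
      return false;
    /* discard the oldest item to make room for the new one */
    q->head = (q->head + 1) % MODEL_CAPACITY;
    q->count--;
  }
  q->items[(q->head + q->count) % MODEL_CAPACITY] = item;
  q->count++;
  return true;
}

/* Copies the oldest item into *out; returns false if the queue is empty. */
bool
sink_queue_pull (SinkQueueModel *q, int *out)
{
  assert (q != NULL && out != NULL);
  if (q->count == 0)
    return false;
  *out = q->items[q->head];
  q->head = (q->head + 1) % MODEL_CAPACITY;
  q->count--;
  return true;
}
```

With dropping enabled the producer is never held up, at the cost of the consumer seeing gaps; with dropping disabled no item is lost, but the producer has to wait for the consumer.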

If a blocking behaviour is not desirable, setting the emit-signals property to TRUE will make appsink emit the new-sample and new-preroll signals when a sample can be pulled without blocking.

The caps property on appsink can be used to control the formats that the latter can receive. This property can contain non-fixed caps; the format of the pulled samples can be obtained by getting the sample caps.

If one of the pull-preroll or pull-sample methods returns NULL, the appsink is stopped or in the EOS state. You can check for the EOS state with the eos property or with the gst_app_sink_is_eos() method.

The eos signal can also be used to be informed when the EOS state is reached to avoid polling.

Consider configuring the following properties in the appsink:

- The sync property if you want to have the sink base class synchronize the buffer against the pipeline clock before handing you the sample.

- Enable Quality-of-Service with the qos property. If you are dealing with raw video frames and let the base class synchronize on the clock, it might also be a good idea to let the base class send QOS events upstream.

- The caps property that contains the accepted caps. Upstream elements will try to convert the format so that it matches the configured caps on appsink. You must still check the GstSample to get the actual caps of the buffer.

Appsink example

What follows is an example of how to capture a snapshot of a video stream using appsink.

#include <gst/gst.h>
#ifdef HAVE_GTK
#include <gtk/gtk.h>
#endif

#include <stdlib.h>

#define CAPS "video/x-raw,format=RGB,width=160,pixel-aspect-ratio=1/1"

int
main (int argc, char *argv[])
{
  GstElement *pipeline, *sink;
  gint width, height;
  GstSample *sample = NULL;
  gchar *descr;
  GError *error = NULL;
  gint64 duration, position;
  GstStateChangeReturn ret;
  gboolean res;
  GstMapInfo map;
#ifdef HAVE_GTK
  GdkPixbuf *pixbuf;
#endif

  gst_init (&argc, &argv);

  if (argc != 2) {
    g_print ("usage: %s <uri>\n Writes snapshot.png in the current directory\n",
        argv[0]);
    exit (-1);
  }

  /* create a new pipeline */
  descr =
      g_strdup_printf ("uridecodebin uri=%s ! videoconvert ! videoscale ! "
      " appsink name=sink caps=\"" CAPS "\"", argv[1]);
  pipeline = gst_parse_launch (descr, &error);

  if (error != NULL) {
    g_print ("could not construct pipeline: %s\n", error->message);
    g_clear_error (&error);
    exit (-1);
  }

  /* get sink */
  sink = gst_bin_get_by_name (GST_BIN (pipeline), "sink");

  /* set to PAUSED to make the first frame arrive in the sink */
  ret = gst_element_set_state (pipeline, GST_STATE_PAUSED);
  switch (ret) {
    case GST_STATE_CHANGE_FAILURE:
      g_print ("failed to play the file\n");
      exit (-1);
    case GST_STATE_CHANGE_NO_PREROLL:
      /* for live sources, we need to set the pipeline to PLAYING before we can
       * receive a buffer. We don't do that yet */
      g_print ("live sources not supported yet\n");
      exit (-1);
    default:
      break;
  }
  /* This can block for up to 5 seconds. If your machine is really overloaded,
   * it might time out before the pipeline prerolled and we generate an error. A
   * better way is to run a mainloop and catch errors there. */
  ret = gst_element_get_state (pipeline, NULL, NULL, 5 * GST_SECOND);
  if (ret == GST_STATE_CHANGE_FAILURE) {
    g_print ("failed to play the file\n");
    exit (-1);
  }

  /* get the duration; only trust the value if the query succeeded */
  if (gst_element_query_duration (pipeline, GST_FORMAT_TIME, &duration)
      && duration != -1)
    /* we have a duration, seek to 5% */
    position = duration * 5 / 100;
  else
    /* no duration, seek to 1 second, this could EOS */
    position = 1 * GST_SECOND;

  /* seek to a position in the file. Most files have a black first frame so
   * by seeking to somewhere else we have a bigger chance of getting something
   * more interesting. An optimisation would be to detect black images and then
   * seek a little more */
  gst_element_seek_simple (pipeline, GST_FORMAT_TIME,
      GST_SEEK_FLAG_KEY_UNIT | GST_SEEK_FLAG_FLUSH, position);

  /* get the preroll buffer from appsink, this blocks until appsink really
   * prerolls */
  g_signal_emit_by_name (sink, "pull-preroll", &sample, NULL);

  /* if we have a buffer now, convert it to a pixbuf. It's possible that we
   * don't have a buffer because we went EOS right away or had an error. */
  if (sample) {
    GstBuffer *buffer;
    GstCaps *caps;
    GstStructure *s;

    /* get the snapshot buffer format now. We set the caps on the appsink so
     * that it can only be an rgb buffer. The only thing we have not specified
     * on the caps is the height, which is dependent on the pixel-aspect-ratio
     * of the source material */
    caps = gst_sample_get_caps (sample);
    if (!caps) {
      g_print ("could not get snapshot format\n");
      exit (-1);
    }
    s = gst_caps_get_structure (caps, 0);

    /* we need to get the final caps on the buffer to get the size;
     * both fields must be present */
    res = gst_structure_get_int (s, "width", &width);
    res &= gst_structure_get_int (s, "height", &height);
    if (!res) {
      g_print ("could not get snapshot dimension\n");
      exit (-1);
    }

    /* create pixmap from buffer and save, gstreamer video buffers have a stride
     * that is rounded up to the nearest multiple of 4 */
    buffer = gst_sample_get_buffer (sample);
    /* Mapping a buffer can fail (non-readable) */
    if (gst_buffer_map (buffer, &map, GST_MAP_READ)) {
#ifdef HAVE_GTK
      pixbuf = gdk_pixbuf_new_from_data (map.data,
          GDK_COLORSPACE_RGB, FALSE, 8, width, height,
          GST_ROUND_UP_4 (width * 3), NULL, NULL);

      /* save the pixbuf */
      gdk_pixbuf_save (pixbuf, "snapshot.png", "png", &error, NULL);
#endif
      gst_buffer_unmap (buffer, &map);
    }
    gst_sample_unref (sample);
  } else {
    g_print ("could not make snapshot\n");
  }

  /* cleanup and exit */
  gst_element_set_state (pipeline, GST_STATE_NULL);
  gst_object_unref (sink);
  gst_object_unref (pipeline);

  exit (0);
}
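The snapshot example passes GST_ROUND_UP_4 (width * 3) as the row stride of the RGB data. The arithmetic behind that can be sketched in plain C as follows. The function names are hypothetical helpers for illustration, and the sketch assumes tightly packed 24-bit RGB rows padded to a multiple of 4 bytes, as the comment in the example describes.

```c
#include <assert.h>
#include <stddef.h>

/* Round n up to the next multiple of 4, the same arithmetic that
 * GST_ROUND_UP_4 performs. */
size_t
round_up_4 (size_t n)
{
  return (n + 3) & ~(size_t) 3;
}

/* Bytes per row of a packed 24-bit RGB image, padded to a multiple of 4. */
size_t
rgb_row_stride (size_t width)
{
  return round_up_4 (width * 3);
}

/* Byte offset of the red component of pixel (x, y) in the mapped data. */
size_t
rgb_pixel_offset (size_t x, size_t y, size_t width)
{
  assert (x < width);
  return y * rgb_row_stride (width) + x * 3;
}
```

For the 160-pixel width requested in CAPS the stride is 480 bytes with no padding; a 161-pixel row would need 483 bytes and is padded to 484.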

Forcing a format

Sometimes you'll want to set a specific format. You can do this with a capsfilter element.

If you want, for example, a specific video size and color format or an audio bitsize and a number of channels, you can force a specific GstCaps on the pipeline using filtered caps. You set filtered caps on a link by putting a capsfilter between two elements and specifying your desired GstCaps in its caps property. The capsfilter will only allow types compatible with these capabilities to be negotiated.

See also Creating capabilities for filtering.

Changing format in a PLAYING pipeline

It is also possible to dynamically change the format in a pipeline while PLAYING. This can simply be done by changing the caps property on a capsfilter. The capsfilter will send a RECONFIGURE event upstream that will make the upstream element attempt to renegotiate a new format and allocator. This only works if the upstream element is not using fixed caps on its source pad.

Below is an example of how you can change the caps of a pipeline while in the PLAYING state:

#include <stdlib.h>

#include <gst/gst.h>

#define MAX_ROUND 100

int
main (int argc, char **argv)
{
  GstElement *pipe, *filter;
  GstCaps *caps;
  gint width, height;
  gint xdir, ydir;
  gint round;
  GstMessage *message;

  gst_init (&argc, &argv);

  pipe = gst_parse_launch_full ("videotestsrc ! capsfilter name=filter ! "
             "ximagesink", NULL, GST_PARSE_FLAG_NONE, NULL);
  g_assert (pipe != NULL);

  filter = gst_bin_get_by_name (GST_BIN (pipe), "filter");
  g_assert (filter);

  width = 320;
  height = 240;
  xdir = ydir = -10;

  for (round = 0; round < MAX_ROUND; round++) {
    gchar *capsstr;
    g_print ("resize to %dx%d (%d/%d)   \r", width, height, round, MAX_ROUND);

    /* we prefer our fixed width and height but allow other dimensions to pass
     * as well */
    capsstr = g_strdup_printf ("video/x-raw, width=(int)%d, height=(int)%d",
        width, height);

    caps = gst_caps_from_string (capsstr);
    g_free (capsstr);
    g_object_set (filter, "caps", caps, NULL);
    gst_caps_unref (caps);

    if (round == 0)
      gst_element_set_state (pipe, GST_STATE_PLAYING);

    width += xdir;
    if (width >= 320)
      xdir = -10;
    else if (width < 200)
      xdir = 10;

    height += ydir;
    if (height >= 240)
      ydir = -10;
    else if (height < 150)
      ydir = 10;

    message =
        gst_bus_poll (GST_ELEMENT_BUS (pipe), GST_MESSAGE_ERROR,
        50 * GST_MSECOND);
    if (message) {
      g_print ("got error           \n");

      gst_message_unref (message);
    }
  }
  g_print ("done                    \n");

  gst_object_unref (filter);
  gst_element_set_state (pipe, GST_STATE_NULL);
  gst_object_unref (pipe);

  return 0;
}

Note how we use gst_bus_poll() with a small timeout both to get messages and to introduce a short sleep between format changes.

It is possible to set multiple caps for the capsfilter separated with a ;. The capsfilter will try to renegotiate to the first possible format from the list.

Dynamically changing the pipeline

In this section we talk about some techniques for dynamically modifying the pipeline. We are talking specifically about changing the pipeline while in PLAYING state and without interrupting the data flow.

There are some important things to consider when building dynamic pipelines:

- When removing elements from the pipeline, make sure that there is no dataflow on unlinked pads because that will cause a fatal pipeline error. Always block source pads (in push mode) or sink pads (in pull mode) before unlinking pads. See also Changing elements in a pipeline.

- When adding elements to a pipeline, make sure to put the element into the right state, usually the same state as the parent, before allowing dataflow. When an element is newly created, it is in the NULL state and will return an error when it receives data. See also Changing elements in a pipeline.

- When adding elements to a pipeline, GStreamer will by default set the clock and base-time on the element to the current values of the pipeline. This means that the element will be able to construct the same pipeline running-time as the other elements in the pipeline: sinks will synchronize buffers like the other sinks in the pipeline, and sources will produce buffers with a running-time that matches the other sources.

- When unlinking elements from an upstream chain, always make sure to flush any queued data in the element by sending an EOS event down the element sink pad(s) and by waiting until the EOS leaves the element (with an event probe).

  If you don't perform a flush, you will lose the data buffered by the unlinked element. This can result in a simple frame loss (a few video frames, several milliseconds of audio, etc.), but if you remove a muxer (and in some cases an encoder or similar element), you risk getting a corrupted file which can't be played properly because some relevant metadata (header, seek/index tables, internal sync tags) might not be properly stored or updated.

  See also Changing elements in a pipeline.

- A live source will produce buffers with a running-time equal to the pipeline's current running-time.

  A pipeline without a live source produces buffers with a running-time starting from 0. Likewise, after a flushing seek, these pipelines reset the running-time back to 0.

  The running-time can be changed with gst_pad_set_offset (). It is important to know the running-time of the elements in the pipeline in order to maintain synchronization.

- Adding elements might change the state of the pipeline. Adding a non-prerolled sink, for example, brings the pipeline back to the prerolling state. Removing a non-prerolled sink, for example, might change the pipeline to the PAUSED and PLAYING states.

  Adding a live source cancels the preroll stage and puts the pipeline in the playing state. Adding any live element might also change the pipeline's latency.

  Adding or removing elements might change the clock selection of the pipeline. If the newly added element provides a clock, it might be good for the pipeline to use the new clock. If, on the other hand, the element that is providing the clock for the pipeline is removed, a new clock has to be selected.

- Adding and removing elements might cause upstream or downstream elements to renegotiate caps and/or allocators. You don't really need to do anything from the application; plugins largely adapt themselves to the new pipeline topology in order to optimize their formats and allocation strategy.

  What is important is that when you add, remove or change elements in a pipeline, it is possible that the pipeline needs to negotiate a new format and this can fail. Usually you can fix this by inserting the right converter elements where needed. See also Changing elements in a pipeline.

GStreamer offers support for doing almost any dynamic pipeline modification but you need to know a few details before you can do this without causing pipeline errors. In the following sections we will demonstrate a few typical modification use-cases.

Changing elements in a pipeline

In this example we have the following element chain:

   - ----.      .----------.      .---- -
element1 |      | element2 |      | element3
       src -> sink       src -> sink
   - ----'      '----------'      '---- -

We want to replace element2 by element4 while the pipeline is in the PLAYING state. Let's say that element2 is a visualization and that you want to switch the visualization in the pipeline.

We can't just unlink element2's sinkpad from element1's source pad because that would leave element1's source pad unlinked and would cause a streaming error in the pipeline when data is pushed on the source pad. The technique is to block the dataflow from element1's source pad before we replace element2 by element4 and then resume dataflow as shown in the following steps:

- Block element1's source pad with a blocking pad probe. When the pad is blocked, the probe callback will be called.

- Inside the block callback nothing is flowing between element1 and element2 and nothing will flow until unblocked.

- Unlink element1 and element2.

- Make sure data is flushed out of element2. Some elements might internally keep some data, so you need to make sure not to lose any by forcing it out of element2. You can do this by pushing EOS into element2, like this:

  - Put an event probe on element2's source pad.

  - Send EOS to element2's sink pad. This makes sure that all the data inside element2 is forced out.

  - Wait for the EOS event to appear on element2's source pad. When the EOS is received, drop it and remove the event probe.

- Unlink element2 and element3. You can now also remove element2 from the pipeline and set its state to NULL.

- Add element4 to the pipeline, if not already added. Link element4 and element3. Link element1 and element4.

- Make sure element4 is in the same state as the rest of the elements in the pipeline. It should be at least in the PAUSED state before it can receive buffers and events.

- Unblock element1's source pad probe. This will let new data into element4 and continue streaming.

The above algorithm relies on the source pad actually becoming blocked, which only happens when there is dataflow in the pipeline. If there is no dataflow, there is also no point in changing the element (just yet), so this algorithm can be used in the PAUSED state as well.

This example changes the video effect on a simple pipeline once per second:

#include <gst/gst.h>

static gchar *opt_effects = NULL;

#define DEFAULT_EFFECTS "identity,exclusion,navigationtest," \
    "agingtv,videoflip,vertigotv,gaussianblur,shagadelictv,edgetv"

static GstPad *blockpad;
static GstElement *conv_before;
static GstElement *conv_after;
static GstElement *cur_effect;
static GstElement *pipeline;

static GQueue effects = G_QUEUE_INIT;

static GstPadProbeReturn
event_probe_cb (GstPad * pad, GstPadProbeInfo * info, gpointer user_data)
{
  GMainLoop *loop = user_data;
  GstElement *next;

  if (GST_EVENT_TYPE (GST_PAD_PROBE_INFO_DATA (info)) != GST_EVENT_EOS)
    return GST_PAD_PROBE_PASS;

  gst_pad_remove_probe (pad, GST_PAD_PROBE_INFO_ID (info));

  /* push current effect back into the queue */
  g_queue_push_tail (&effects, gst_object_ref (cur_effect));
  /* take next effect from the queue */
  next = g_queue_pop_head (&effects);
  if (next == NULL) {
    GST_DEBUG_OBJECT (pad, "no more effects");
    g_main_loop_quit (loop);
    return GST_PAD_PROBE_DROP;
  }

  g_print ("Switching from '%s' to '%s'..\n", GST_OBJECT_NAME (cur_effect),
      GST_OBJECT_NAME (next));

  gst_element_set_state (cur_effect, GST_STATE_NULL);

  /* remove unlinks automatically */
  GST_DEBUG_OBJECT (pipeline, "removing %" GST_PTR_FORMAT, cur_effect);
  gst_bin_remove (GST_BIN (pipeline), cur_effect);

  GST_DEBUG_OBJECT (pipeline, "adding %" GST_PTR_FORMAT, next);
  gst_bin_add (GST_BIN (pipeline), next);

  GST_DEBUG_OBJECT (pipeline, "linking..");
  gst_element_link_many (conv_before, next, conv_after, NULL);

  gst_element_set_state (next, GST_STATE_PLAYING);

  cur_effect = next;
  GST_DEBUG_OBJECT (pipeline, "done");

  return GST_PAD_PROBE_DROP;
}

static GstPadProbeReturn
pad_probe_cb (GstPad * pad, GstPadProbeInfo * info, gpointer user_data)
{
  GstPad *srcpad, *sinkpad;

  GST_DEBUG_OBJECT (pad, "pad is blocked now");

  /* remove the probe first */
  gst_pad_remove_probe (pad, GST_PAD_PROBE_INFO_ID (info));

  /* install new probe for EOS */
  srcpad = gst_element_get_static_pad (cur_effect, "src");
  gst_pad_add_probe (srcpad,
      GST_PAD_PROBE_TYPE_BLOCK | GST_PAD_PROBE_TYPE_EVENT_DOWNSTREAM,
      event_probe_cb, user_data, NULL);
  gst_object_unref (srcpad);

  /* push EOS into the element, the probe will be fired when the
   * EOS leaves the effect and it has thus drained all of its data */
  sinkpad = gst_element_get_static_pad (cur_effect, "sink");
  gst_pad_send_event (sinkpad, gst_event_new_eos ());
  gst_object_unref (sinkpad);

  return GST_PAD_PROBE_OK;
}

static gboolean
timeout_cb (gpointer user_data)
{
  gst_pad_add_probe (blockpad, GST_PAD_PROBE_TYPE_BLOCK_DOWNSTREAM,
      pad_probe_cb, user_data, NULL);

  return TRUE;
}

static gboolean
bus_cb (GstBus * bus, GstMessage * msg, gpointer user_data)
{
  GMainLoop *loop = user_data;

  switch (GST_MESSAGE_TYPE (msg)) {
    case GST_MESSAGE_ERROR:{
      GError *err = NULL;
      gchar *dbg;

      gst_message_parse_error (msg, &err, &dbg);
      gst_object_default_error (msg->src, err, dbg);
      g_clear_error (&err);
      g_free (dbg);
      g_main_loop_quit (loop);
      break;
    }
    default:
      break;
  }
  return TRUE;
}

int
main (int argc, char **argv)
{
  GOptionEntry options[] = {
    {"effects", 'e', 0, G_OPTION_ARG_STRING, &opt_effects,
        "Effects to use (comma-separated list of element names)", NULL},
    {NULL}
  };
  GOptionContext *ctx;
  GError *err = NULL;
  GMainLoop *loop;
  GstElement *src, *q1, *q2, *effect, *filter1, *filter2, *sink;
  gchar **effect_names, **e;

  ctx = g_option_context_new ("");
  g_option_context_add_main_entries (ctx, options, NULL);
  g_option_context_add_group (ctx, gst_init_get_option_group ());
  if (!g_option_context_parse (ctx, &argc, &argv, &err)) {
    g_print ("Error initializing: %s\n", err->message);
    g_clear_error (&err);
    g_option_context_free (ctx);
    return 1;
  }
  g_option_context_free (ctx);

  if (opt_effects != NULL)
    effect_names = g_strsplit (opt_effects, ",", -1);
  else
    effect_names = g_strsplit (DEFAULT_EFFECTS, ",", -1);

  for (e = effect_names; e != NULL && *e != NULL; ++e) {
    GstElement *el;

    el = gst_element_factory_make (*e, NULL);
    if (el) {
      g_print ("Adding effect '%s'\n", *e);
      g_queue_push_tail (&effects, el);
    }
  }

  pipeline = gst_pipeline_new ("pipeline");

  src = gst_element_factory_make ("videotestsrc", NULL);
  g_object_set (src, "is-live", TRUE, NULL);

  filter1 = gst_element_factory_make ("capsfilter", NULL);
  gst_util_set_object_arg (G_OBJECT (filter1), "caps",
      "video/x-raw, width=320, height=240, "
      "format={ I420, YV12, YUY2, UYVY, AYUV, Y41B, Y42B, "
      "YVYU, Y444, v210, v216, NV12, NV21, UYVP, A420, YUV9, YVU9, IYU1 }");

  q1 = gst_element_factory_make ("queue", NULL);

  blockpad = gst_element_get_static_pad (q1, "src");

  conv_before = gst_element_factory_make ("videoconvert", NULL);

  effect = g_queue_pop_head (&effects);
  cur_effect = effect;

  conv_after = gst_element_factory_make ("videoconvert", NULL);

  q2 = gst_element_factory_make ("queue", NULL);

  filter2 = gst_element_factory_make ("capsfilter", NULL);
  gst_util_set_object_arg (G_OBJECT (filter2), "caps",
      "video/x-raw, width=320, height=240, "
      "format={ RGBx, BGRx, xRGB, xBGR, RGBA, BGRA, ARGB, ABGR, RGB, BGR }");

  sink = gst_element_factory_make ("ximagesink", NULL);

  gst_bin_add_many (GST_BIN (pipeline), src, filter1, q1, conv_before, effect,
      conv_after, q2, sink, NULL);

  gst_element_link_many (src, filter1, q1, conv_before, effect, conv_after,
      q2, sink, NULL);

  gst_element_set_state (pipeline, GST_STATE_PLAYING);

  loop = g_main_loop_new (NULL, FALSE);

  gst_bus_add_watch (GST_ELEMENT_BUS (pipeline), bus_cb, loop);

  g_timeout_add_seconds (1, timeout_cb, loop);

  g_main_loop_run (loop);

  gst_element_set_state (pipeline, GST_STATE_NULL);
  gst_object_unref (pipeline);

  return 0;
}

Note how we added videoconvert elements before and after the effect. This is needed because some elements might operate in different colorspaces; by inserting the conversion elements, we can help ensure a proper format can be negotiated.
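The way event_probe_cb cycles through the effects can be easier to follow in isolation. The following is a minimal sketch in plain C with no GStreamer or GLib dependency: EffectQueue, effect_queue_push_tail, effect_queue_pop_head and rotate_effect are hypothetical names that stand in for the GQueue calls in the listing, and effects are represented by their names only. It models just the rotation (current effect goes to the tail, next effect comes from the head), not the pad blocking or relinking.

```c
#include <assert.h>
#include <stddef.h>

#define MAX_EFFECTS 16

/* Hypothetical stand-in for the GQueue of effects used in the example:
 * a fixed-size ring buffer holding effect names. */
typedef struct
{
  const char *items[MAX_EFFECTS];
  size_t head;                  /* index of the oldest entry */
  size_t count;                 /* number of stored entries */
} EffectQueue;

/* Append a name at the tail (models g_queue_push_tail). */
static void
effect_queue_push_tail (EffectQueue * q, const char *name)
{
  assert (q->count < MAX_EFFECTS);
  q->items[(q->head + q->count) % MAX_EFFECTS] = name;
  q->count++;
}

/* Remove and return the name at the head, or NULL when the queue is
 * empty (models g_queue_pop_head). */
static const char *
effect_queue_pop_head (EffectQueue * q)
{
  const char *name;

  if (q->count == 0)
    return NULL;

  name = q->items[q->head];
  q->head = (q->head + 1) % MAX_EFFECTS;
  q->count--;
  return name;
}

/* Same order of operations as in event_probe_cb: the current effect is
 * pushed back first, then the next one is taken from the head. */
static const char *
rotate_effect (EffectQueue * q, const char *current)
{
  effect_queue_push_tail (q, current);
  return effect_queue_pop_head (q);
}
```

Because the current effect is pushed before the next one is popped, the queue in this model is never empty at the time of the pop, so rotate_effect always returns an effect. With a single effect it returns that same effect again, which in the example would correspond to removing the element and adding it back.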