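The previous article covered setting up a ZooKeeper cluster (link). This article covers installing JStorm, configuring a JStorm cluster, and configuring the JStorm UI.

The latest JStorm version is 2.2.1 (download link). This article uses 192.168.72.140, 141, and 142 as the ZooKeeper cluster servers and 192.168.72.151, 152, and 153 as the JStorm cluster servers, with 151 acting as both the master and the UI server. Now on to the main content.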

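I. Environment Preparation

1. Configure the hostname and host mappings

Run the following on 151 (on 152 and 153, use the matching hostname from the mappings below):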

hostname jstorm-master

vim /etc/hosts

192.168.72.140 zookeeper-master

192.168.72.141 zookeeper-slave1

192.168.72.142 zookeeper-slave2

192.168.72.151 jstorm-master

192.168.72.152 jstorm-slave1

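192.168.72.153 jstorm-slave2

2. Create a jstorm directory under the root directory to hold all JStorm-related files.

mkdir /jstorm

3. Extract JStorm and copy it into the jstorm directory

cp -r jstorm-2.2.1 /jstorm/

4. Create the jstorm_data directory under /jstorm/jstorm-2.2.1/

mkdir /jstorm/jstorm-2.2.1/jstorm_data

5. Configure the JStorm environment variables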

echo 'export JSTORM_HOME=/jstorm/jstorm-2.2.1' >> ~/.bashrc

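echo 'export PATH=$PATH:$JSTORM_HOME/bin' >> ~/.bashrc

Reload the configuration file so the changes take effect

source ~/.bashrc

6. Create the jstorm_data directory that stores data generated at runtime (the same directory as in step 4; mkdir -p is safe to run again)

mkdir -p /jstorm/jstorm-2.2.1/jstorm_data

7. Back up the storm.yaml file

cp /jstorm/jstorm-2.2.1/conf/storm.yaml /jstorm/jstorm-2.2.1/conf/storm.yaml.back

8. Edit the storm.yaml file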

########### These MUST be filled in for a storm configuration

storm.zookeeper.servers:

- "192.168.72.142"

- "192.168.72.141"

- "192.168.72.140"

storm.zookeeper.root: "/jstorm"

nimbus.host: "192.168.72.151"

# cluster.name: "default"

#nimbus.host/nimbus.host.start.supervisor is being used by $JSTORM_HOME/bin/start.sh

#it only support IP, please don't set hostname

# For example

# nimbus.host: "10.132.168.10, 10.132.168.45"

#nimbus.host.start.supervisor: false

# %JSTORM_HOME% is the jstorm home directory

storm.local.dir: "/jstorm/jstorm-2.2.1/jstorm_data"

# please set absolute path, default path is JSTORM_HOME/logs

# jstorm.log.dir: "absolute path"

# java.library.path: "/usr/local/lib:/opt/local/lib:/usr/lib"

nimbus.childopts: "-Xms1g -Xmx1g -Xmn512m -XX:SurvivorRatio=4 -XX:MaxTenuringThreshold=15 -XX:+UseConcMarkSweepGC -XX:+UseCMSInitiatingOccupancyOnly -XX:CMSInitiatingOccupancyFraction=70 -XX:+HeapDumpOnOutOfMemoryError -XX:CMSMaxAbortablePrecleanTime=5000"

# if supervisor.slots.ports is null,

# the port list will be generated by cpu cores and system memory size

# for example,

# there are cpu_num = system_physical_cpu_num/supervisor.slots.port.cpu.weight

# there are mem_num = system_physical_memory_size/(worker.memory.size * supervisor.slots.port.mem.weight)

# The final port number is min(cpu_num, mem_num)

# supervisor.slots.ports.base: 6800

# supervisor.slots.port.cpu.weight: 1.2

# supervisor.slots.port.mem.weight: 0.7

# supervisor.slots.ports: null

supervisor.slots.ports:

- 6800

- 6801

- 6802

- 6803

# Default disable user-define classloader

# If there are jar conflict between jstorm and application,

# please enable it

# topology.enable.classloader: false

# enable supervisor use cgroup to make resource isolation

# Before enable it, you should make sure:

# 1. Linux version (>= 2.6.18)

# 2. Have installed cgroup (check the file's existence:/proc/cgroups)

# 3. You should start your supervisor on root

# You can get more about cgroup:

# http://t.cn/8s7nexU

# supervisor.enable.cgroup: false

### Netty will send multiple messages in one batch

### Setting true will improve throughput, but more latency

# storm.messaging.netty.transfer.async.batch: true

### default worker memory size, unit is byte

# worker.memory.size: 2147483648

# Metrics Monitor

# topology.performance.metrics: it is the switch flag for performance

# purpose. When it is disabled, the data of timer and histogram metrics

# will not be collected.

# topology.alimonitor.metrics.post: If it is disable, metrics data

# will only be printed to log. If it is enabled, the metrics data will be

# posted to alimonitor besides printing to log.

# topology.performance.metrics: true

# topology.alimonitor.metrics.post: false

# UI MultiCluster

# Following is an example of multicluster UI configuration

ui.clusters:

- {

name: "jstorm",

zkRoot: "/jstorm",

zkServers:

[ "192.168.72.140","192.168.72.141","192.168.72.142"],

zkPort: 2181,

}

Note: the settings that need to be changed are the following.

storm.zookeeper.servers:

- "192.168.72.142"

- "192.168.72.141"

- "192.168.72.140"

The block above lists the ZooKeeper servers.
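Before starting JStorm it can help to confirm that the servers listed under storm.zookeeper.servers are the ones you expect. The following is a minimal sketch, not part of JStorm: zk_servers is a hypothetical helper name, and it assumes the block-list layout shown above (one "- host" entry per line, blank lines allowed between entries).

```shell
# Hypothetical helper: print each entry under storm.zookeeper.servers.
# Assumes the block-list form used in this article, one "- host" per line.
zk_servers() {
  awk '
    /^[ \t]*storm\.zookeeper\.servers:/ { in_list = 1; next }
    in_list && /^[ \t]*$/ { next }      # skip blank lines inside the list
    in_list && /^[ \t]*#/ { next }      # skip comment lines inside the list
    in_list && /^[ \t]*-/ {
      sub(/^[ \t]*-[ \t]*/, "")         # drop the leading dash
      gsub(/"/, "")                     # drop the surrounding quotes
      sub(/[ \t]+$/, "")                # drop trailing whitespace
      print
      next
    }
    in_list { in_list = 0 }             # any other key ends the list
  ' "$1"
}

# Example use (commented out because it needs network access). Port 2181 is
# the zkPort used in this article. A healthy ZooKeeper normally answers
# "imok" to "ruok", provided that command is enabled on the server.
# for host in $(zk_servers "$JSTORM_HOME/conf/storm.yaml"); do
#   echo ruok | nc -w 2 "$host" 2181
# done
```

Reading the hosts from storm.yaml rather than typing them again keeps the check in step with the configuration file.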

supervisor.slots.ports:

- 6800

- 6801

- 6802

- 6803

These are the ports JStorm uses for its worker slots. The defaults are usually fine; you only need to uncomment them.
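If supervisor.slots.ports is left as null instead, the comments in storm.yaml say the number of ports is derived from the CPU core count and the memory size. The sketch below works through that formula with the default weights from the config (1.2 and 0.7) and the default worker.memory.size of 2147483648 bytes. The function name slot_count, the example machine (8 cores, 16 GiB), and rounding down to a whole number are assumptions made for illustration; JStorm's actual rounding may differ.

```shell
# Hypothetical helper illustrating the formula from the storm.yaml comments:
#   cpu_num = cores / supervisor.slots.port.cpu.weight
#   mem_num = memory / (worker.memory.size * supervisor.slots.port.mem.weight)
#   ports   = min(cpu_num, mem_num)
# Arguments: cores, memory in bytes, worker.memory.size, cpu weight, mem weight.
slot_count() {
  awk -v cores="$1" -v mem="$2" -v worker="$3" -v cw="$4" -v mw="$5" 'BEGIN {
    cpu_num = int(cores / cw)            # rounding down is an assumption
    mem_num = int(mem / (worker * mw))
    print (cpu_num < mem_num ? cpu_num : mem_num)
  }'
}

# Assumed example machine: 8 cores and 16 GiB of memory.
slot_count 8 17179869184 2147483648 1.2 0.7   # prints 6
```

Here cpu_num is 8 / 1.2, which rounds down to 6, and mem_num is 16 GiB / (2 GiB * 0.7), which rounds down to 11, so the CPU count is the limiting factor.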

Location of the JStorm master (nimbus) service:

nimbus.host: "192.168.72.151"

nimbus.childopts: "-Xms1g -Xmx1g -Xmn512m -XX:SurvivorRatio=4 -XX:MaxTenuringThreshold=15 -XX:+UseConcMarkSweepGC -XX:+UseCMSInitiatingOccupancyOnly -XX:CMSInitiatingOccupancyFraction=70 -XX:+HeapDumpOnOutOfMemoryError -XX:CMSMaxAbortablePrecleanTime=5000"

Set these JVM options; otherwise memory-related errors are reported when the UI starts.

ui.clusters:

- {

name: "jstorm",

zkRoot: "/jstorm",

zkServers:

[ "192.168.72.140","192.168.72.141","192.168.72.142"],

zkPort: 2181,

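}

This block configures JStorm UI monitoring and is only needed on the UI server. In this example 151 serves as both the JStorm nimbus and the UI server, so the slaves do not need this block. At this point the JStorm cluster configuration is essentially complete (for the slave nodes).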

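On the master node you also need to run the following commands. The second command must be run again after every change to storm.yaml.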

mkdir ~/.jstorm

cp -f $JSTORM_HOME/conf/storm.yaml ~/.jstorm
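Because the copy has to be repeated after every change, the two commands above can be wrapped in a small function that is safe to run at any time. This is a minimal sketch under stated assumptions: sync_jstorm_conf is a hypothetical name, JSTORM_HOME is expected to be set as in step 5, and the "updated"/"unchanged" messages are only there for illustration.

```shell
# Hypothetical helper: copy storm.yaml into ~/.jstorm only when it differs.
# Requires JSTORM_HOME to be set (see the environment variable step above).
sync_jstorm_conf() {
  src="${JSTORM_HOME:?JSTORM_HOME is not set}/conf/storm.yaml"
  dest_dir="$HOME/.jstorm"
  mkdir -p "$dest_dir"                   # no error if the directory exists
  if [ -f "$dest_dir/storm.yaml" ] && cmp -s "$src" "$dest_dir/storm.yaml"; then
    echo "unchanged"
  else
    cp -f "$src" "$dest_dir/storm.yaml"
    echo "updated"
  fi
}
```

Calling sync_jstorm_conf after each edit to storm.yaml then replaces remembering which of the two commands needs to be repeated.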

II. Starting the JStorm UI

Copy jstorm-ui-2.2.1.war from the jstorm directory into your own Tomcat installation.

Then run:

mkdir -p ~/.jstorm

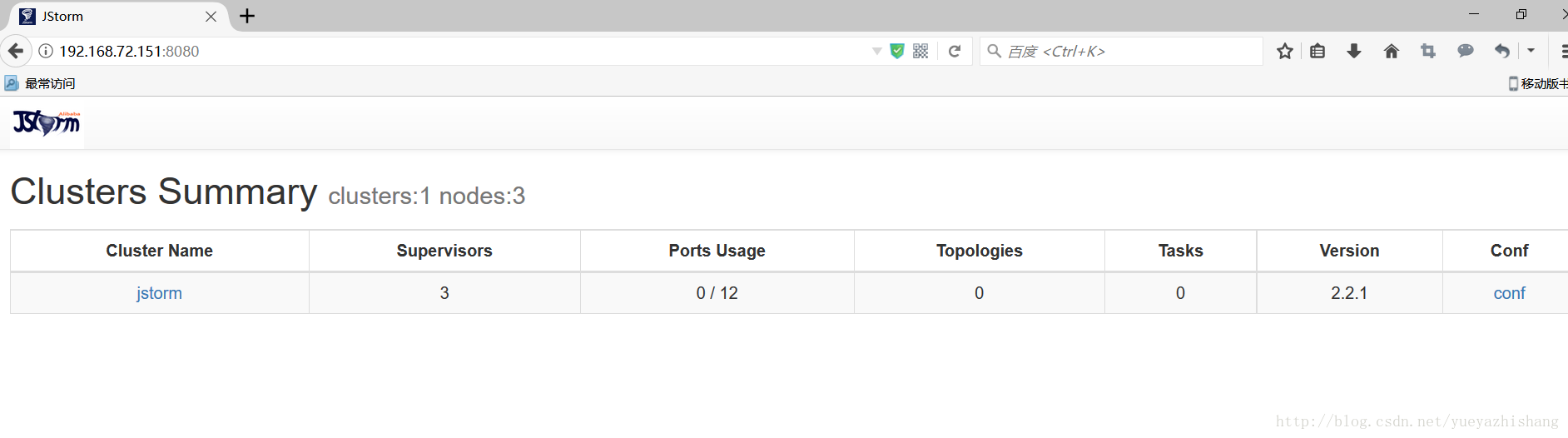

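cp -f $JSTORM_HOME/conf/storm.yaml ~/.jstorm

Start Tomcat. If you see the following page, the UI is configured correctly.

If you see the page below, the JStorm cluster is configured correctly.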