本文作为学习记录,参考 此处,如有侵权,联系删除。

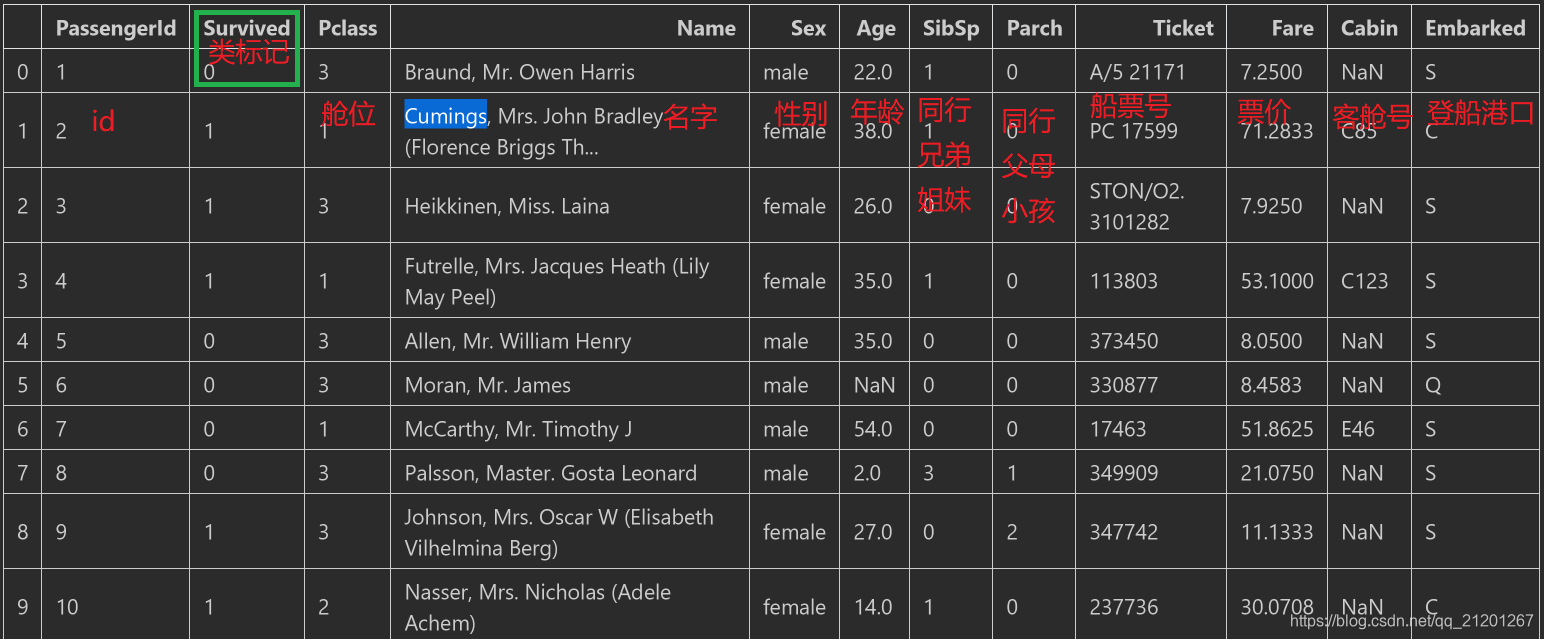

1. 数据预览

import pandas as pd

import numpy as np

from pandas import Series, DataFrame

data_train = pd.read_csv("titanic_train.csv")

data_test = pd.read_csv("titanic_test.csv")

# 读取前10行

data_train.head(10)

data_train.info()

--------------------------------

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 891 entries, 0 to 890

Data columns (total 12 columns):

PassengerId 891 non-null int64

Survived 891 non-null int64

Pclass 891 non-null int64

Name 891 non-null object

Sex 891 non-null object

Age 714 non-null float64

SibSp 891 non-null int64

Parch 891 non-null int64

Ticket 891 non-null object

Fare 891 non-null float64

Cabin 204 non-null object # 有的原始信息缺失

Embarked 889 non-null object

dtypes: float64(2), int64(5), object(5)

memory usage: 83.7+ KB

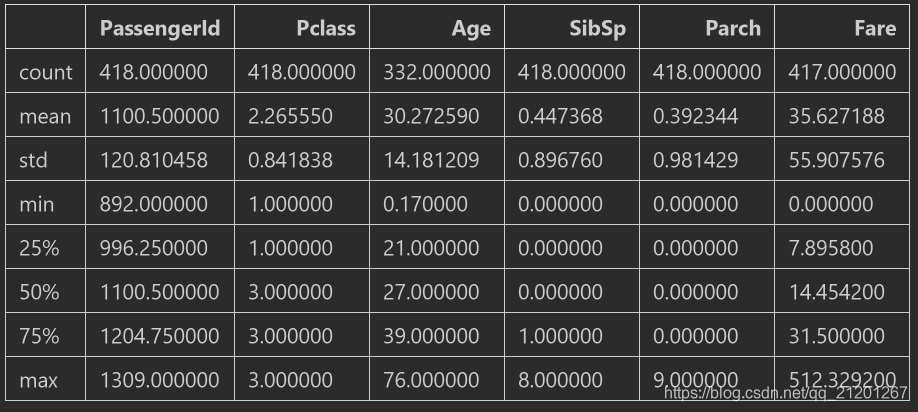

data_test.describe() # 可见一些统计信息

2. 特征初步选择

- 由于

Cabin客舱号大部分都缺失,进行填补,可能会造成较大误差,不选 - 乘客

id,是个连续数据,跟是否存活应该无关,不选 - 年龄

Age,是个比较重要的特征,对缺失的部分用中位数进行填充

data_train["Age"] = data_train["Age"].fillna(data_train["Age"].median())

- 初步调用一些模型(默认参数)进行预测:

algs = [Perceptron(),KNeighborsClassifier(),GaussianNB(),DecisionTreeClassifier(), LinearRegression(),LogisticRegression(),SVC(),RandomForestClassifier()]

from sklearn.linear_model import Perceptron

from sklearn.neighbors import KNeighborsClassifier

from sklearn.naive_bayes import GaussianNB

from sklearn.tree import DecisionTreeClassifier

from sklearn.linear_model import LogisticRegression

from sklearn.svm import SVC

from sklearn.ensemble import RandomForestClassifier # boost

from sklearn.model_selection import KFold # 交叉验证

features = ["Pclass","Age","SibSp","Parch","Fare"]

algs = [Perceptron(),KNeighborsClassifier(),GaussianNB(),DecisionTreeClassifier(),

LinearRegression(),LogisticRegression(),SVC(),RandomForestClassifier()]

for alg in algs:

kf = KFold(n_splits=5,shuffle=True,random_state=1)

predictions = []

for train, test in kf.split(data_train):

train_features = (data_train[features].iloc[train,:])

train_label = data_train["Survived"].iloc[train]

alg.fit(train_features,train_label)

test_predictions = alg.predict(data_train[features].iloc[test,:])

predictions.append(test_predictions)

predictions = np.concatenate(predictions,axis=0) # 合并3组数据

predictions[predictions>0.5] = 1

predictions[predictions<=0.5] = 0

accuracy = sum(predictions == data_train["Survived"])/len(predictions)

print("模型准确率:", accuracy)

交叉验证的参数 shuffle = True,打乱数据

模型准确率: 0.531986531986532

模型准确率: 0.5488215488215489

模型准确率: 0.5566778900112234

模型准确率: 0.5353535353535354

模型准确率: 0.5712682379349046

模型准确率: 0.569023569023569

模型准确率: 0.5712682379349046

模型准确率: 0.5364758698092031

交叉验证参数 shuffle = False,正确率就提高了,why ???求解答

模型准确率: 0.5679012345679012

模型准确率: 0.6644219977553311

模型准确率: 0.6745230078563412

模型准确率: 0.632996632996633

模型准确率: 0.6947250280583613

模型准确率: 0.6980920314253648

模型准确率: 0.6644219977553311

模型准确率: 0.6846240179573513

3. 增加特征Sex和Embarked

- 上面效果不好,增加一些特征

- 增加特征

Sex和Embarked,查看对预测的影响 - 这两个特征为字符串,需要转成数字

print(pd.value_counts(data_train.loc[:,"Embarked"]))

----------------------

S 644

C 168

Q 77

Name: Embarked, dtype: int64

# sex转成数字

data_train.loc[data_train["Sex"]=="male","Sex"] = 0

data_train.loc[data_train["Sex"]=="female","Sex"] = 1

# 登船地点,缺失的用最多的S进行填充

data_train["Embarked"] = data_train["Embarked"].fillna('S')

data_train.loc[data_train["Embarked"]=="S", "Embarked"]=0

data_train.loc[data_train["Embarked"]=="C", "Embarked"]=1

data_train.loc[data_train["Embarked"]=="Q", "Embarked"]=2

features = ["Pclass","Age","SibSp","Parch","Fare","Embarked","Sex"]

交叉验证的参数 shuffle = True,正确率依然很低,再次提问,why ???

模型准确率: 0.5521885521885522

模型准确率: 0.5432098765432098

模型准确率: 0.5185185185185185

模型准确率: 0.5286195286195287

模型准确率: 0.5230078563411896

模型准确率: 0.5252525252525253

模型准确率: 0.5723905723905723

模型准确率: 0.5196408529741863

交叉验证参数 shuffle = False,正确率相比于上面缺少特征Sex和Embarked时,提高了不少,好的特征对预测结果提升很有帮助

模型准确率: 0.675645342312009

模型准确率: 0.691358024691358

模型准确率: 0.7856341189674523

模型准确率: 0.7822671156004489

模型准确率: 0.7878787878787878

模型准确率: 0.792368125701459

模型准确率: 0.6655443322109988

模型准确率: 0.8058361391694725

4. 选择随机森林调参

从上面可以看出随机森林模型的预测效果最好,使用该模型,进行调参

features = ["Pclass","Age","SibSp","Parch","Fare","Embarked","Sex"]

estimator_num = [5,10,15,20,25,30]

splits_num = [3,5,10,15]

alg = RandomForestClassifier(n_estimators=10)

for e_n in estimator_num:

for sp_n in splits_num:

alg = RandomForestClassifier(n_estimators=e_n)

kf = KFold(n_splits=sp_n,shuffle=False,random_state=1)

predictions_train = []

for train, test in kf.split(data_train):

train_features = (data_train[features].iloc[train,:])

train_label = data_train["Survived"].iloc[train]

alg.fit(train_features,train_label)

train_pred = alg.predict(data_train[features].iloc[test,:])

predictions_train.append(train_pred)

predictions_train = np.concatenate(predictions_train,axis=0) # 合并3组数据

predictions_train[predictions_train>0.5] = 1

predictions_train[predictions_train<=0.5] = 0

accuracy = sum(predictions_train == data_train["Survived"])/len(predictions_train)

print("%d折数据集,%d棵决策树,模型准确率:%.4f" %(sp_n,e_n,accuracy))

3折数据集,5棵决策树,模型准确率:0.7890

5折数据集,5棵决策树,模型准确率:0.7901

10折数据集,5棵决策树,模型准确率:0.7935

15折数据集,5棵决策树,模型准确率:0.8092

3折数据集,10棵决策树,模型准确率:0.7890

5折数据集,10棵决策树,模型准确率:0.8047

10折数据集,10棵决策树,模型准确率:0.8137

15折数据集,10棵决策树,模型准确率:0.8092

3折数据集,15棵决策树,模型准确率:0.7868

5折数据集,15棵决策树,模型准确率:0.8002

10折数据集,15棵决策树,模型准确率:0.8092

15折数据集,15棵决策树,模型准确率:0.8047

3折数据集,20棵决策树,模型准确率:0.7969

5折数据集,20棵决策树,模型准确率:0.8092

10折数据集,20棵决策树,模型准确率:0.8114

15折数据集,20棵决策树,模型准确率:0.8092

3折数据集,25棵决策树,模型准确率:0.7924

5折数据集,25棵决策树,模型准确率:0.8070

10折数据集,25棵决策树,模型准确率:0.8103

15折数据集,25棵决策树,模型准确率:0.8025

3折数据集,30棵决策树,模型准确率:0.7890

5折数据集,30棵决策树,模型准确率:0.8013

10折数据集,30棵决策树,模型准确率:0.8081

15折数据集,30棵决策树,模型准确率:0.8193

最后一种参数下,随机森林模型的预测效果最好

5. 实践总结

熟悉了机器学习的基本流程

- 导入工具包 numpy, pandas, sklearn等

- 数据读取,

pandas.read_csv(file) - pandas的一些数据处理

data.head(n)读取前n行展示

data.info()获取数据的信息

data.describe()获取统计信息(均值、方差等)

data["Age"] = data["Age"].fillna(data["Age"].median())缺失数据填补(均值、最大值、根据别的特征分段填充等)

性别等字符串特征数字化 - 选取特征,初步预测

- 不断的加入新的特征预测

- 选定较好的模型,再调整这些模型的参数,选出最好的模型参数