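Requirement: count the total number of times each word appears in a given text file, and output the result.

Step 1: Prepare a text file named aaa.txt.

Step 2: Write some test data into the file. Here is what I wrote: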

hello,world,hadoop

hello,hive,sqoop,flume

kitty,tom,jerry,world

hadoop

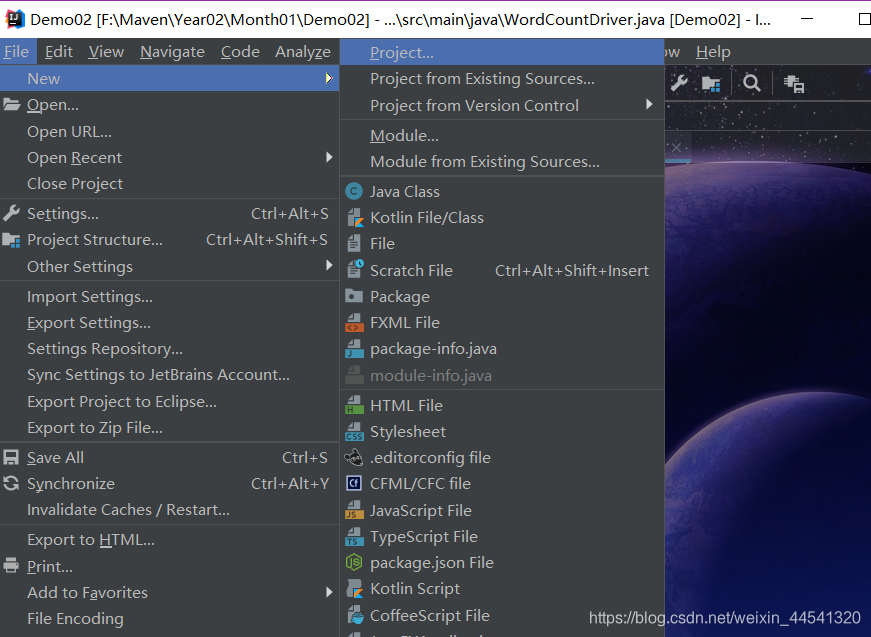

Step 3: Open your IDE (I use IntelliJ IDEA).

Create a new project…

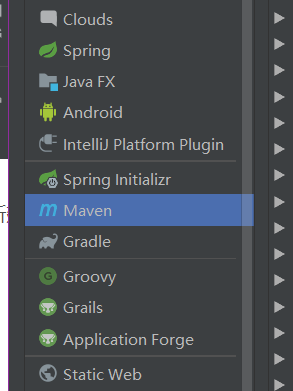

Create a Maven project; there is no need to select a framework.

Edit the pom file:

<?xml version="1.0" encoding="UTF-8"?>

<project xmlns="http://maven.apache.org/POM/4.0.0"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<groupId>cn.itcast</groupId>

<artifactId>mapreduce</artifactId>

<version>1.0-SNAPSHOT</version>

<repositories>

<repository>

<id>cloudera</id>

<url>https://repository.cloudera.com/artifactory/cloudera-repos/</url>

</repository>

</repositories>

<dependencies>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-client</artifactId>

<version>2.6.0-mr1-cdh5.14.0</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-common</artifactId>

<version>2.6.0-cdh5.14.0</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-hdfs</artifactId>

<version>2.6.0-cdh5.14.0</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-mapreduce-client-core</artifactId>

<version>2.6.0-cdh5.14.0</version>

</dependency>

<dependency>

<groupId>junit</groupId>

<artifactId>junit</artifactId>

<version>4.11</version>

<scope>test</scope>

</dependency>

<dependency>

<groupId>org.testng</groupId>

<artifactId>testng</artifactId>

<version>RELEASE</version>

</dependency>

</dependencies>

<build>

<plugins>

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-compiler-plugin</artifactId>

<version>3.0</version>

<configuration>

<source>1.8</source>

<target>1.8</target>

<encoding>UTF-8</encoding>

</configuration>

</plugin>

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-shade-plugin</artifactId>

<version>2.4.3</version>

<executions>

<execution>

<phase>package</phase>

<goals>

<goal>shade</goal>

</goals>

<configuration>

<minimizeJar>true</minimizeJar>

</configuration>

</execution>

</executions>

</plugin>

</plugins>

</build>

</project>

Define a mapper class:

import org.apache.hadoop.io.LongWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Mapper;

import java.io.IOException;

/**

* @Author:Lenvo

* @Description:

* @Date: 2019-11-12 10:32

*/

public class WordCountMap extends Mapper<LongWritable, Text, Text, LongWritable> {

@Override

protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {

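//key: the "number" in the game analogy; in the code it is the byte offset of this line in the file

//value: the "string of shapes" in the game analogy; in the code it is one line of text, e.g. zhangsan,lisi,wangwu

//1 Convert value from Text to String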

String datas = value.toString();

//2 Split the data on commas: zhangsan lisi wangwu

String[] splits = datas.split(",");

//3 Iterate over the words and emit each one

/* for (int i = 0; i < splits.length; i++) {

}*/

for (String data : splits) {

//Emit (word, 1)

context.write(new Text(data), new LongWritable(1));

}

//zhangsan 1 emitted once (sent once)

//lisi 1 emitted once (sent once)

//wangwu 1 emitted once (sent once)

}

}
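To make the mapper's input and output concrete, here is a small plain-Java sketch (no Hadoop needed) that imitates what happens to the sample file. The class `MapPhaseSketch` and its helper names are made up for illustration. It assumes the file uses Windows line endings (`\r\n`) with no newline after the last line, which matches the 73-byte split reported in the run log.

```java
import java.nio.charset.StandardCharsets;
import java.util.AbstractMap.SimpleEntry;
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

// Hypothetical stand-in for the map phase; not part of the Hadoop API.
class MapPhaseSketch {

    // Imitates WordCountMap.map(): split one line on commas and emit (word, 1) per piece.
    public static List<Map.Entry<String, Long>> map(long offset, String line) {
        List<Map.Entry<String, Long>> out = new ArrayList<>();
        for (String word : line.split(",")) {
            out.add(new SimpleEntry<>(word, 1L));
        }
        return out;
    }

    // Byte offset at which each line starts, assuming "\r\n" (2 bytes) after every line but the last.
    public static long[] lineOffsets(String[] lines) {
        long[] offsets = new long[lines.length];
        long pos = 0;
        for (int i = 0; i < lines.length; i++) {
            offsets[i] = pos;
            pos += lines[i].getBytes(StandardCharsets.UTF_8).length + 2;
        }
        return offsets;
    }

    public static void main(String[] args) {
        String[] lines = {
            "hello,world,hadoop",
            "hello,hive,sqoop,flume",
            "kitty,tom,jerry,world",
            "hadoop"
        };
        long[] offsets = lineOffsets(lines);
        for (int i = 0; i < lines.length; i++) {
            // First line prints: 0 -> [hello=1, world=1, hadoop=1]
            System.out.println(offsets[i] + " -> " + map(offsets[i], lines[i]));
        }
    }
}
```

Under those assumptions the four keys are 0, 20, 44 and 67. The mapper never looks at the key; only the line text matters for word counting.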

Define a reducer class:

import org.apache.hadoop.io.LongWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Reducer;

import java.io.IOException;

/**

* @Author:Lenvo

* @Description:

* @Date: 2019-11-12 11:39

*/

public class WordCountReduce extends Reducer<Text, LongWritable, Text, LongWritable> {

//zhangsan 1,1,1,1

//lisi 1,1,1

@Override

protected void reduce(Text key, Iterable<LongWritable> values, Context context) throws IOException, InterruptedException {

//key: the "shape" in the game analogy, i.e. one word (zhangsan, lisi)

//values: all the 1s emitted for that word

long sum = 0;

//Iterate over values and add them up

for (LongWritable value : values) {

sum += value.get();

}

//Emit (word, total)

context.write(key, new LongWritable(sum));

}

}
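Between the mapper and the reducer, the framework groups all values that share a key and hands each group to `reduce()` in sorted key order. The following plain-Java sketch imitates that step for the sample data; `ReducePhaseSketch`, `shuffle` and `reduce` are illustrative names, and a `TreeMap` is only a stand-in for the real shuffle and sort.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical stand-in for shuffle + reduce; not part of the Hadoop API.
class ReducePhaseSketch {

    // Stand-in for the shuffle: collect one "1" per occurrence, grouped by word, keys sorted.
    public static Map<String, List<Long>> shuffle(List<String> mapOutputKeys) {
        Map<String, List<Long>> grouped = new TreeMap<>();
        for (String word : mapOutputKeys) {
            grouped.computeIfAbsent(word, k -> new ArrayList<>()).add(1L);
        }
        return grouped;
    }

    // Imitates WordCountReduce.reduce(): add up every value that arrived for one key.
    public static long reduce(String key, Iterable<Long> values) {
        long sum = 0;
        for (long value : values) {
            sum += value;
        }
        return sum;
    }

    public static void main(String[] args) {
        // The 12 words the mapper emits for the sample file, in emission order.
        List<String> words = Arrays.asList(
            "hello", "world", "hadoop",
            "hello", "hive", "sqoop", "flume",
            "kitty", "tom", "jerry", "world",
            "hadoop");
        // Prints nine lines, e.g. "hadoop<TAB>2", "hello<TAB>2", "world<TAB>2"; every other word has count 1.
        shuffle(words).forEach((word, ones) -> System.out.println(word + "\t" + reduce(word, ones)));
    }
}
```

For this input, `hadoop`, `hello` and `world` each end up with 2 and the remaining six words with 1.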

Define a main class that describes the job and submits it:

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.conf.Configured;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.LongWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.lib.input.TextInputFormat;

import org.apache.hadoop.mapreduce.lib.output.TextOutputFormat;

import org.apache.hadoop.util.Tool;

import org.apache.hadoop.util.ToolRunner;

/**

* @Author:Lenvo

* @Description:

* @Date: 2019-11-12 11:39

*/

public class WordCountDriver extends Configured implements Tool {

@Override

public int run(String[] args) throws Exception {

//Register the Map and Reduce classes written above with the framework

//1. Create a job instance

Job job = Job.getInstance(new Configuration(),"WordCount34");

//2. Configure how the job reads its input (including the input path)

job.setInputFormatClass(TextInputFormat.class);

TextInputFormat.addInputPath(job,new Path("F:\\aaa.txt"));

//3. Set the Map class and the types of the map output

job.setMapperClass(WordCountMap.class);

job.setMapOutputKeyClass(Text.class);

job.setMapOutputValueClass(LongWritable.class);

//4. Set the Reduce class and the types of the final output

job.setReducerClass(WordCountReduce.class);

job.setOutputKeyClass(Text.class);

job.setOutputValueClass(LongWritable.class);

//5. Set the output path (this is created as a directory and must not exist yet)

job.setOutputFormatClass(TextOutputFormat.class);

TextOutputFormat.setOutputPath(job,new Path("F:\\BBB.txt"));

//6. Return the exit status (0 on success, 1 on failure)

return job.waitForCompletion(true)?0:1;

}

//Run the job

public static void main(String[] args) throws Exception {

int run = ToolRunner.run(new WordCountDriver(), args);

System.out.println(run);

}

}

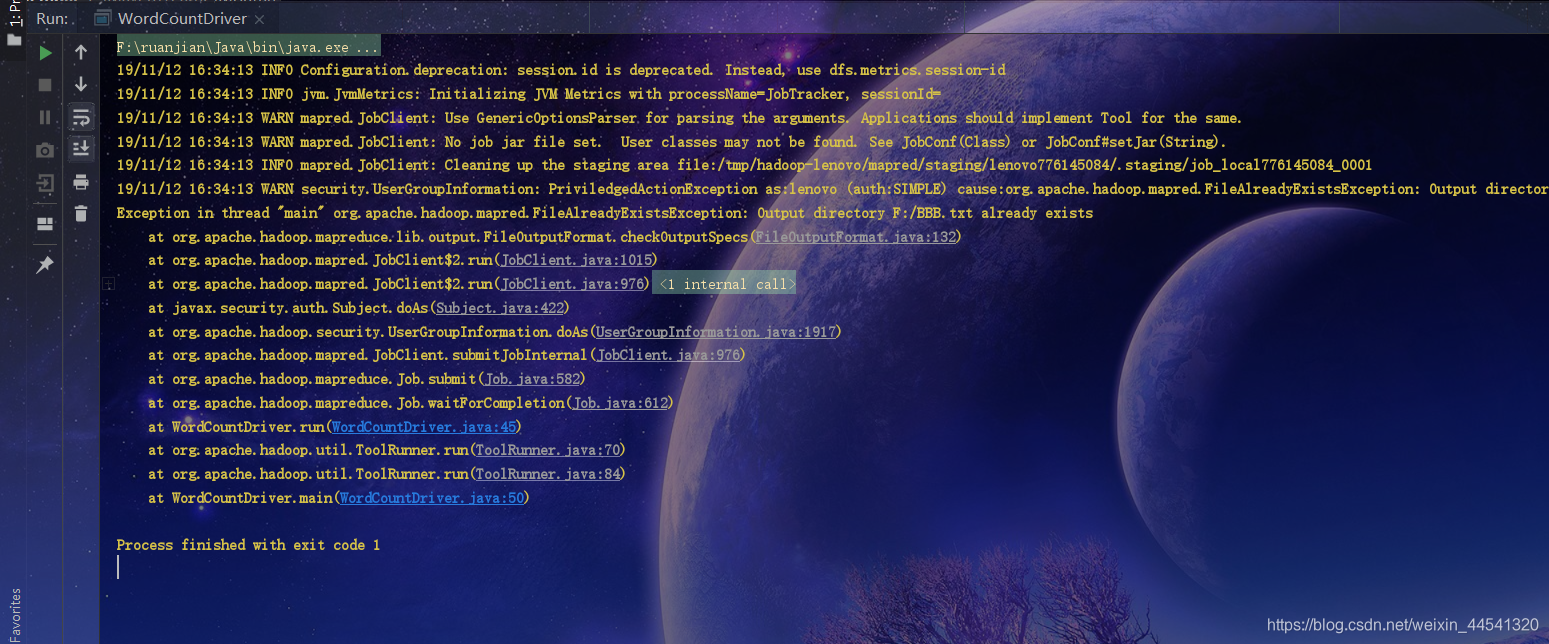

Click Run. The following output appears, which shows that the job ran successfully:

F:\ruanjian\Java\bin\java.exe "-javaagent:F:\ruanjian\IntelliJ IDEA 2018.3.5\lib\idea_rt.jar=52321:F:\ruanjian\IntelliJ IDEA 2018.3.5\bin" -Dfile.encoding=UTF-8 -classpath ... WordCountDriver

19/11/12 16:08:17 INFO Configuration.deprecation: session.id is deprecated. Instead, use dfs.metrics.session-id

19/11/12 16:08:17 INFO jvm.JvmMetrics: Initializing JVM Metrics with processName=JobTracker, sessionId=

19/11/12 16:08:17 WARN mapred.JobClient: Use GenericOptionsParser for parsing the arguments. Applications should implement Tool for the same.

19/11/12 16:08:17 WARN mapred.JobClient: No job jar file set. User classes may not be found. See JobConf(Class) or JobConf#setJar(String).

19/11/12 16:08:17 INFO input.FileInputFormat: Total input paths to process : 1

19/11/12 16:08:17 INFO mapred.LocalJobRunner: OutputCommitter set in config null

19/11/12 16:08:17 INFO mapred.JobClient: Running job: job_local868986662_0001

19/11/12 16:08:17 INFO mapred.LocalJobRunner: OutputCommitter is org.apache.hadoop.mapreduce.lib.output.FileOutputCommitter

19/11/12 16:08:17 INFO mapred.LocalJobRunner: Waiting for map tasks

19/11/12 16:08:17 INFO mapred.LocalJobRunner: Starting task: attempt_local868986662_0001_m_000000_0

19/11/12 16:08:18 WARN mapreduce.Counters: Group org.apache.hadoop.mapred.Task$Counter is deprecated. Use org.apache.hadoop.mapreduce.TaskCounter instead

19/11/12 16:08:18 INFO mapred.Task: Using ResourceCalculatorPlugin : null

19/11/12 16:08:18 INFO mapred.MapTask: Processing split: file:/F:/aaa.txt:0+73

19/11/12 16:08:18 INFO mapred.MapTask: Map output collector class = org.apache.hadoop.mapred.MapTask$MapOutputBuffer

19/11/12 16:08:18 INFO mapred.MapTask: io.sort.mb = 100

19/11/12 16:08:18 INFO mapred.MapTask: data buffer = 79691776/99614720

19/11/12 16:08:18 INFO mapred.MapTask: record buffer = 262144/327680

19/11/12 16:08:18 INFO mapred.LocalJobRunner:

19/11/12 16:08:18 INFO mapred.MapTask: Starting flush of map output

19/11/12 16:08:18 INFO mapred.MapTask: Finished spill 0

19/11/12 16:08:18 INFO mapred.Task: Task:attempt_local868986662_0001_m_000000_0 is done. And is in the process of commiting

19/11/12 16:08:18 INFO mapred.LocalJobRunner:

19/11/12 16:08:18 INFO mapred.Task: Task 'attempt_local868986662_0001_m_000000_0' done.

19/11/12 16:08:18 INFO mapred.LocalJobRunner: Finishing task: attempt_local868986662_0001_m_000000_0

19/11/12 16:08:18 INFO mapred.LocalJobRunner: Map task executor complete.

19/11/12 16:08:18 WARN mapreduce.Counters: Group org.apache.hadoop.mapred.Task$Counter is deprecated. Use org.apache.hadoop.mapreduce.TaskCounter instead

19/11/12 16:08:18 INFO mapred.Task: Using ResourceCalculatorPlugin : null

19/11/12 16:08:18 INFO mapred.LocalJobRunner:

19/11/12 16:08:18 INFO mapred.Merger: Merging 1 sorted segments

19/11/12 16:08:18 INFO mapred.Merger: Down to the last merge-pass, with 1 segments left of total size: 193 bytes

19/11/12 16:08:18 INFO mapred.LocalJobRunner:

19/11/12 16:08:18 INFO mapred.Task: Task:attempt_local868986662_0001_r_000000_0 is done. And is in the process of commiting

19/11/12 16:08:18 INFO mapred.LocalJobRunner:

19/11/12 16:08:18 INFO mapred.Task: Task attempt_local868986662_0001_r_000000_0 is allowed to commit now

19/11/12 16:08:18 INFO output.FileOutputCommitter: Saved output of task 'attempt_local868986662_0001_r_000000_0' to F:/BBB.txt

19/11/12 16:08:18 INFO mapred.LocalJobRunner: reduce > reduce

19/11/12 16:08:18 INFO mapred.Task: Task 'attempt_local868986662_0001_r_000000_0' done.

19/11/12 16:08:18 INFO mapred.JobClient: map 100% reduce 100%

19/11/12 16:08:18 INFO mapred.JobClient: Job complete: job_local868986662_0001

19/11/12 16:08:18 INFO mapred.JobClient: Counters: 17

19/11/12 16:08:18 INFO mapred.JobClient: File System Counters

19/11/12 16:08:18 INFO mapred.JobClient: FILE: Number of bytes read=613

19/11/12 16:08:18 INFO mapred.JobClient: FILE: Number of bytes written=339766

19/11/12 16:08:18 INFO mapred.JobClient: FILE: Number of read operations=0

19/11/12 16:08:18 INFO mapred.JobClient: FILE: Number of large read operations=0

19/11/12 16:08:18 INFO mapred.JobClient: FILE: Number of write operations=0

19/11/12 16:08:18 INFO mapred.JobClient: Map-Reduce Framework

19/11/12 16:08:18 INFO mapred.JobClient: Map input records=4

19/11/12 16:08:18 INFO mapred.JobClient: Map output records=12

19/11/12 16:08:18 INFO mapred.JobClient: Map output bytes=167

19/11/12 16:08:18 INFO mapred.JobClient: Input split bytes=81

19/11/12 16:08:18 INFO mapred.JobClient: Combine input records=0

19/11/12 16:08:18 INFO mapred.JobClient: Combine output records=0

19/11/12 16:08:18 INFO mapred.JobClient: Reduce input groups=9

19/11/12 16:08:18 INFO mapred.JobClient: Reduce shuffle bytes=0

19/11/12 16:08:18 INFO mapred.JobClient: Reduce input records=12

19/11/12 16:08:18 INFO mapred.JobClient: Reduce output records=9

19/11/12 16:08:18 INFO mapred.JobClient: Spilled Records=24

19/11/12 16:08:18 INFO mapred.JobClient: Total committed heap usage (bytes)=514850816

0

Process finished with exit code 0
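The counters at the end of the log can be cross-checked against the four-line sample input. The sketch below recomputes a few of them in plain Java. It rests on two assumptions that I inferred rather than read from the log: the file uses `\r\n` between lines with no trailing newline, and each map output record is serialized as one length byte plus the word's bytes (for a short `Text`) plus 8 bytes for the `LongWritable`. `CounterCheckSketch` is an illustrative name.

```java
import java.nio.charset.StandardCharsets;
import java.util.HashSet;
import java.util.Set;

// Hypothetical helper that recomputes some job counters from the sample data.
class CounterCheckSketch {

    static final String[] LINES = {
        "hello,world,hadoop",
        "hello,hive,sqoop,flume",
        "kitty,tom,jerry,world",
        "hadoop"
    };

    // Input size, assuming "\r\n" between lines and nothing after the last line.
    public static int inputBytes() {
        int total = 2 * (LINES.length - 1);
        for (String line : LINES) {
            total += line.getBytes(StandardCharsets.UTF_8).length;
        }
        return total;
    }

    // One map output record per comma-separated word.
    public static int mapOutputRecords() {
        int records = 0;
        for (String line : LINES) {
            records += line.split(",").length;
        }
        return records;
    }

    // One reduce input group per distinct word.
    public static int reduceInputGroups() {
        Set<String> distinct = new HashSet<>();
        for (String line : LINES) {
            for (String word : line.split(",")) {
                distinct.add(word);
            }
        }
        return distinct.size();
    }

    // Assumed record size: 1 length byte + word bytes + 8 bytes for the long value.
    public static int mapOutputBytes() {
        int total = 0;
        for (String line : LINES) {
            for (String word : line.split(",")) {
                total += 1 + word.getBytes(StandardCharsets.UTF_8).length + 8;
            }
        }
        return total;
    }

    public static void main(String[] args) {
        System.out.println("input bytes         = " + inputBytes());        // 73
        System.out.println("map input records   = " + LINES.length);        // 4
        System.out.println("map output records  = " + mapOutputRecords());  // 12
        System.out.println("reduce input groups = " + reduceInputGroups()); // 9
        System.out.println("map output bytes    = " + mapOutputBytes());    // 167
    }
}
```

These values line up with `Processing split: file:/F:/aaa.txt:0+73`, `Map input records=4`, `Map output records=12`, `Reduce input groups=9` and `Map output bytes=167` in the log.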

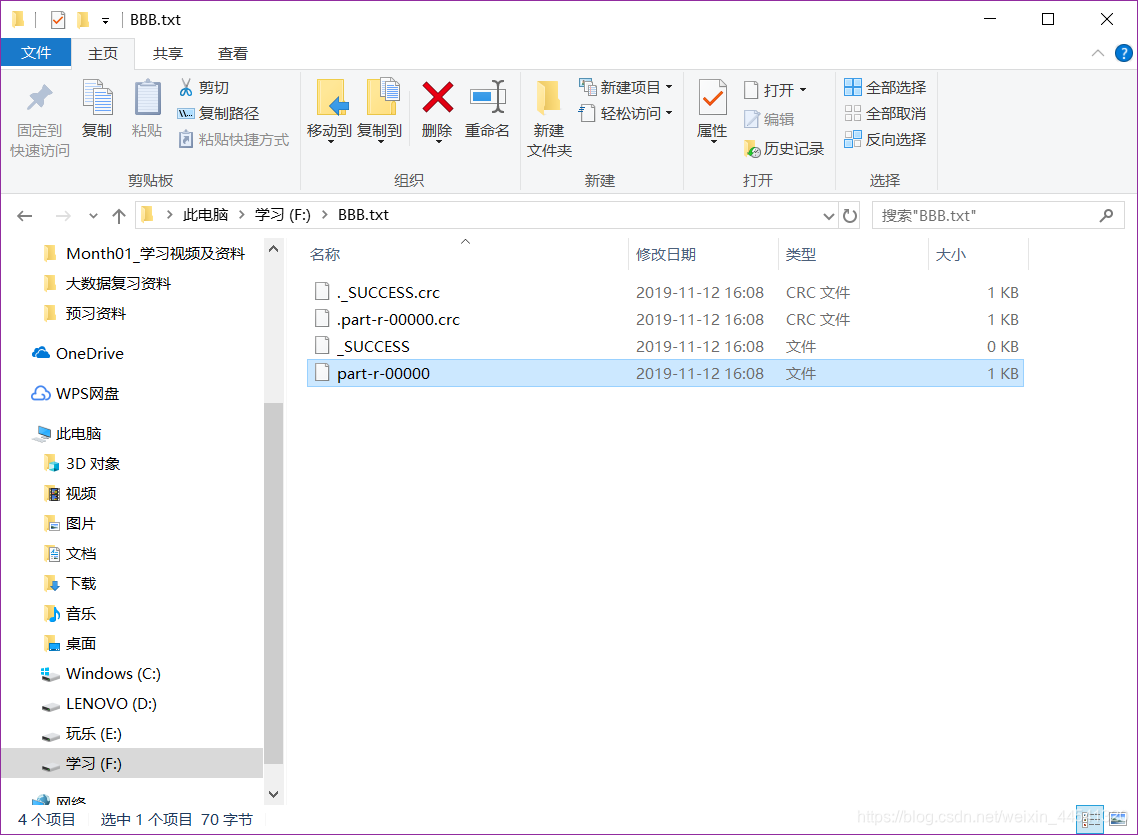

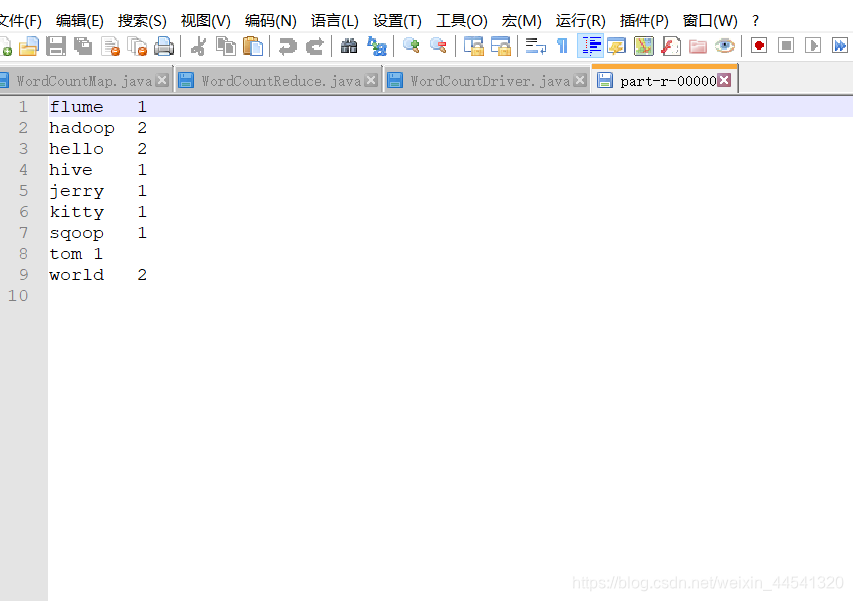

Finally, look in the output path.

Open the result file in a code editor to view the word counts.
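If you would rather check the result from code than from an editor, here is a minimal sketch. It assumes that the output path is a directory, that the single reducer wrote a file named `part-r-00000` inside it, and that each line has the default `word<TAB>count` layout; adjust the path if your run differs. `ReadResultSketch` is an illustrative name.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical helper for reading the job's text output; uses only the JDK.
class ReadResultSketch {

    // Parse "word<TAB>count" lines into a map that keeps the file's order.
    public static Map<String, Long> parse(List<String> lines) {
        Map<String, Long> counts = new LinkedHashMap<>();
        for (String line : lines) {
            if (line.isEmpty()) {
                continue; // skip blank lines
            }
            String[] keyValue = line.split("\t", 2);
            counts.put(keyValue[0], Long.parseLong(keyValue[1].trim()));
        }
        return counts;
    }

    public static void main(String[] args) throws IOException {
        // Assumed default location; pass a different path as the first argument if needed.
        Path part = Paths.get(args.length > 0 ? args[0] : "F:\\BBB.txt\\part-r-00000");
        parse(Files.readAllLines(part))
            .forEach((word, count) -> System.out.println(word + " appears " + count + " time(s)"));
    }
}
```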

That concludes this first hands-on experience with MapReduce.

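Note: if the job fails with an error saying that the output directory already exists, the output path already contains the result of an earlier run. The job cannot write there again, because overwriting is not supported; delete the old output or choose a different output path.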
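Since this walkthrough runs against the local file system, one way to avoid that error is to clear the old output before starting the job. The sketch below does this with plain `java.nio`; `CleanOutputSketch` and `deleteIfPresent` are illustrative names, and the default path is simply the one used in the driver. On a real cluster the usual equivalent is Hadoop's own `FileSystem.delete(path, true)`, which is not shown here.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.Comparator;
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;

// Hypothetical helper that removes a previous local output directory.
class CleanOutputSketch {

    // Recursively delete a local file or directory; returns true if something was removed.
    public static boolean deleteIfPresent(Path output) throws IOException {
        if (!Files.exists(output)) {
            return false;
        }
        List<Path> paths;
        try (Stream<Path> walk = Files.walk(output)) {
            // Reverse order puts children before their parent directories.
            paths = walk.sorted(Comparator.reverseOrder()).collect(Collectors.toList());
        }
        for (Path path : paths) {
            Files.delete(path);
        }
        return true;
    }

    public static void main(String[] args) throws IOException {
        // Assumed default: the output path used in WordCountDriver.
        Path output = Paths.get(args.length > 0 ? args[0] : "F:\\BBB.txt");
        System.out.println(deleteIfPresent(output) ? "removed old output" : "nothing to remove");
    }
}
```

Be careful with the path you pass in: the deletion is recursive and there is no confirmation step.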