
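1. Introduction

Cross validation in Sklearn is very helpful for choosing the right model and the right model parameters. With it, we can see directly how different models or parameters affect the accuracy of the results.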

2. Experiment without cross validation

from sklearn.datasets import load_iris

from sklearn.model_selection import train_test_split

from sklearn.neighbors import KNeighborsClassifier

iris = load_iris()

x = iris.data

y = iris.target

x_train, x_test, y_train, y_test = train_test_split(x,y,random_state = 12)

knn = KNeighborsClassifier()

knn.fit(x_train, y_train)

print(knn.score(x_test, y_test))

# Output

0.9210526315789473

As shown, the accuracy of this basic single-split validation is 0.9210526315789473.
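A single split gives a score that depends on which samples happen to land in the test set. The sketch below is not part of the original experiment: it repeats the same split with several arbitrarily chosen `random_state` values (the seed list is an assumption made purely for illustration) and prints how far the scores spread.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier

iris = load_iris()
x, y = iris.data, iris.target

# Hypothetical seeds, chosen only to illustrate the variation between splits.
seeds = [0, 1, 2, 3, 4, 12, 16]
holdout_scores = []
for seed in seeds:
    x_train, x_test, y_train, y_test = train_test_split(x, y, random_state=seed)
    knn = KNeighborsClassifier()
    knn.fit(x_train, y_train)
    holdout_scores.append(knn.score(x_test, y_test))

for seed, score in zip(seeds, holdout_scores):
    print(f"random_state={seed:2d}  accuracy={score:.4f}")
print("min:", min(holdout_scores), "max:", max(holdout_scores))
```

If the minimum and maximum differ noticeably, a single split is a noisy estimate, which is the motivation for averaging over several folds.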

3. Experiment with cross validation

from sklearn.datasets import load_iris

from sklearn.model_selection import train_test_split

from sklearn.neighbors import KNeighborsClassifier

from sklearn.model_selection import cross_val_score  # K-fold cross validation helper

iris = load_iris()

x = iris.data

y = iris.target

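x_train, x_test, y_train, y_test = train_test_split(x, y, random_state=16)  # not used by cross_val_score, which does its own splitting

knn = KNeighborsClassifier()

knn.fit(x_train, y_train)  # optional: cross_val_score refits a clone of knn on every fold

scores = cross_val_score(knn, x, y, cv=5, scoring='accuracy')  # run 5-fold cross validation

print(scores)  # accuracy of each of the 5 folds

print(scores.mean())  # mean accuracy over the 5 folds

# Output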

[0.96666667 1. 0.93333333 0.96666667 1. ]

0.9733333333333334
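To make clear what `cross_val_score` does internally, here is a minimal sketch that writes the 5-fold loop by hand. It relies on the fact that, for a classifier and an integer `cv`, scikit-learn splits the data with `StratifiedKFold`. The names `manual_scores` and `auto_scores` are introduced here for illustration only.

```python
import numpy as np
from sklearn.base import clone
from sklearn.datasets import load_iris
from sklearn.model_selection import StratifiedKFold, cross_val_score
from sklearn.neighbors import KNeighborsClassifier

iris = load_iris()
x, y = iris.data, iris.target
knn = KNeighborsClassifier()

# Same splitter that cross_val_score picks for a classifier with cv=5.
folds = StratifiedKFold(n_splits=5)
manual_scores = []
for train_idx, test_idx in folds.split(x, y):
    model = clone(knn)                       # fresh, unfitted copy for each fold
    model.fit(x[train_idx], y[train_idx])    # train on 4 of the 5 folds
    manual_scores.append(model.score(x[test_idx], y[test_idx]))  # accuracy on the held-out fold
manual_scores = np.array(manual_scores)

auto_scores = cross_val_score(knn, x, y, cv=5, scoring='accuracy')
print(manual_scores)
print(auto_scores)
```

Both arrays should match. The loop also shows why fitting `knn` beforehand is unnecessary: each fold trains its own copy of the estimator.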

4. Accuracy and mean squared error

4.1. Accuracy experiment

from sklearn.datasets import load_iris

from sklearn.neighbors import KNeighborsClassifier

from sklearn.model_selection import cross_val_score

import matplotlib.pyplot as plt

iris = load_iris()

x = iris.data

y = iris.target

k_scores = []

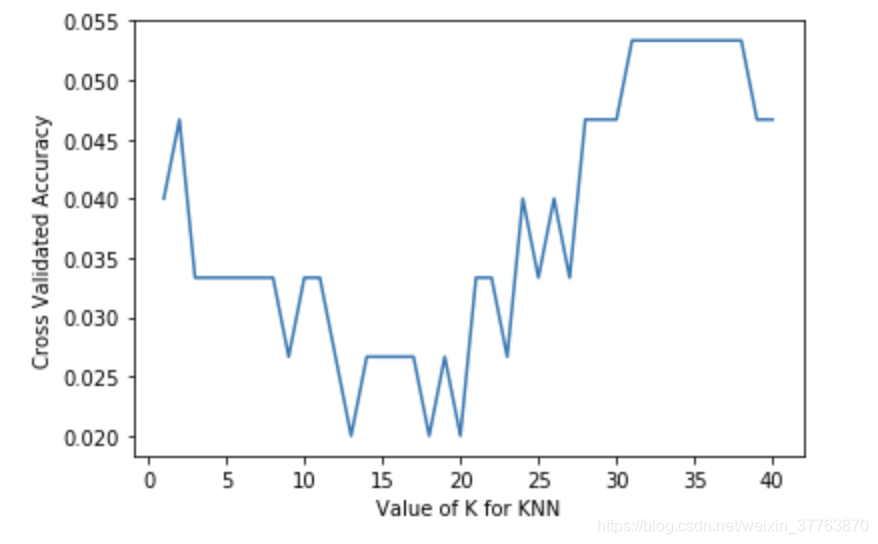

for k in range(1,41):

knn = KNeighborsClassifier(n_neighbors = k)

scores = cross_val_score(knn,x,y,cv=10,scoring = 'accuracy') #分类 十折交叉验证

#loss = -cross_val_score(knn,x,y,cv=10,scoring = 'mean_squared_error') #回归

k_scores.append(scores.mean())

plt.plot(range(1,41),k_scores)

plt.xlabel("Value of K for KNN")

plt.ylabel("Cross Validated Accuracy")

plt.show()

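The plot shows that values of k between 12 and 20 work best. Beyond 20 the accuracy starts to drop: with that many neighbors the classifier over-smooths its decision boundary and underfits.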
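Instead of reading the best k off the plot, the same search can be automated. The following is a sketch using `GridSearchCV`, which the original experiment does not use; it evaluates the same 40 values of `n_neighbors` with 10-fold cross validation and reports the one with the highest mean accuracy.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import GridSearchCV
from sklearn.neighbors import KNeighborsClassifier

iris = load_iris()
x, y = iris.data, iris.target

# Try every k from 1 to 40, scoring each with 10-fold cross-validated accuracy.
search = GridSearchCV(
    KNeighborsClassifier(),
    param_grid={'n_neighbors': list(range(1, 41))},
    cv=10,
    scoring='accuracy',
)
search.fit(x, y)

best_k = search.best_params_['n_neighbors']
print("best k:", best_k)
print("mean cross-validated accuracy:", search.best_score_)
```

The full per-k results are available in `search.cv_results_['mean_test_score']`, which holds the same means that the loop above appends to `k_scores`.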

4.2. Mean squared error experiment

Mean squared error (MSE) is generally used to judge the quality of regression models.

from sklearn.datasets import load_iris

from sklearn.neighbors import KNeighborsClassifier

from sklearn.model_selection import cross_val_score

import matplotlib.pyplot as plt

iris = load_iris()

x = iris.data

y = iris.target

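k_scores = []  # start from an empty list so the results of section 4.1 are not reused

for k in range(1, 41):

    knn = KNeighborsClassifier(n_neighbors=k)

    # scores = cross_val_score(knn, x, y, cv=10, scoring='accuracy')  # classification

    loss = -cross_val_score(knn, x, y, cv=10, scoring='neg_mean_squared_error')  # regression-style loss; negated because sklearn returns negative MSE

    k_scores.append(loss.mean())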

plt.plot(range(1,41),k_scores)

plt.xlabel("Value of K for KNN")

plt.ylabel("Cross Validated MSE")

plt.show()

The plot shows that a lower mean squared error is better, so a value of K around 13 to 20 is the best choice.
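Since mean squared error is primarily a regression metric, it may help to see the same loop on an actual regression problem. The sketch below is an illustration that goes beyond the original experiment: it swaps in `KNeighborsRegressor` and the bundled diabetes dataset (`load_diabetes`), both chosen here as assumptions, and keeps everything else the same.

```python
from sklearn.datasets import load_diabetes
from sklearn.model_selection import cross_val_score
from sklearn.neighbors import KNeighborsRegressor

# Assumed regression dataset, used only to illustrate the metric.
diabetes = load_diabetes()
x_reg, y_reg = diabetes.data, diabetes.target

k_values = range(1, 41)
k_losses = []
for k in k_values:
    reg = KNeighborsRegressor(n_neighbors=k)
    # sklearn returns negative MSE (higher is better), so flip the sign to get a loss.
    loss = -cross_val_score(reg, x_reg, y_reg, cv=10, scoring='neg_mean_squared_error')
    k_losses.append(loss.mean())

best_k = k_values[k_losses.index(min(k_losses))]
print("k with the lowest cross-validated MSE:", best_k)
print("lowest mean MSE:", min(k_losses))
```

Here the loss is measured in squared units of the target, so the value to minimize has a direct interpretation, unlike MSE computed on class labels.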

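5. Checking for overfitting with learning_curve

The learning_curve function in sklearn.model_selection shows very directly how the model's learning progresses, and comparing the training and validation curves reveals whether the model is overfitting. We can then adjust the model to overcome the overfitting.

5.1. Loading the required modules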

from sklearn.model_selection import learning_curve

from sklearn.datasets import load_digits

from sklearn.svm import SVC

import matplotlib.pyplot as plt

import numpy as np

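5.2. Loading the data

Load the digits dataset, which contains handwritten digits from 0 to 9. The dataset has 1797 samples in total. Each sample consists of 64 features, the pixel values of its 8×8 image, and each feature takes a value from 0 to 16.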

digits = load_digits()

X = digits.data

y = digits.target
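The figures quoted above can be checked directly on the loaded data. This is a small sanity-check sketch; it only uses attributes of the object returned by `load_digits`.

```python
import numpy as np
from sklearn.datasets import load_digits

digits = load_digits()
X = digits.data
y = digits.target

print(X.shape)                  # (number of samples, number of features)
print(X.min(), X.max())         # range of the pixel intensities
print(np.unique(y))             # the distinct class labels
print(digits.images[0].shape)   # the first sample viewed as a 2-D image
```

`digits.images` holds the same pixel values as `digits.data`, reshaped to one 8×8 array per sample.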

5.3. Calling learning_curve

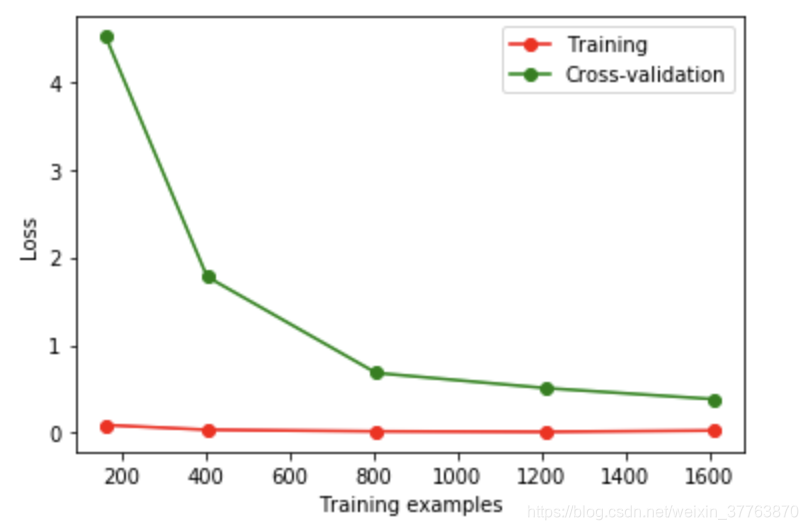

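Observe how the learning curve changes as the number of training samples grows. We use K-fold cross validation with cv=10, take mean squared error as the metric (scoring='neg_mean_squared_error'), and evaluate the curve at 5 training-set sizes (10%, 25%, 50%, 75%, 100%):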

train_sizes, train_loss, test_loss = learning_curve(

    SVC(gamma=0.001), X, y, cv=10, scoring='neg_mean_squared_error',

    train_sizes=[0.1, 0.25, 0.5, 0.75, 1])

# Average the mean squared error over the folds at each of the 5 training sizes (10%, 25%, 50%, 75%, 100% of the samples)

train_loss_mean = -np.mean(train_loss, axis=1)

test_loss_mean = -np.mean(test_loss, axis=1)

5.4. Visualizing the learning curve

plt.plot(train_sizes, train_loss_mean, 'o-', color="r",

         label="Training")

plt.plot(train_sizes, test_loss_mean, 'o-', color="g",

         label="Cross-validation")

plt.xlabel("Training examples")

plt.ylabel("Loss")

plt.legend(loc="best")

plt.show()
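The overfitting signal in a learning curve is the gap between the training loss and the cross-validation loss. As a sketch of how to read it numerically rather than from the plot, the code below prints that gap at each training size. It uses `cv=5` instead of 10 only to keep the run short, and the variable `gap` is a name introduced here.

```python
import numpy as np
from sklearn.datasets import load_digits
from sklearn.model_selection import learning_curve
from sklearn.svm import SVC

digits = load_digits()
X, y = digits.data, digits.target

# cv=5 is an assumption made for speed; the text above uses cv=10.
train_sizes, train_loss, test_loss = learning_curve(
    SVC(gamma=0.001), X, y, cv=5, scoring='neg_mean_squared_error',
    train_sizes=[0.1, 0.25, 0.5, 0.75, 1.0])

# Rows are training sizes, columns are folds; negate to turn scores into losses.
train_loss_mean = -np.mean(train_loss, axis=1)
test_loss_mean = -np.mean(test_loss, axis=1)
gap = test_loss_mean - train_loss_mean

for n, tr, te, g in zip(train_sizes, train_loss_mean, test_loss_mean, gap):
    print(f"n={int(n):5d}  train={tr:.3f}  cv={te:.3f}  gap={g:.3f}")
```

A gap that stays large as the training set grows suggests overfitting, while a gap that shrinks suggests the model benefits from more data.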

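6. Checking for overfitting with validation_curve

Continuing the example above, a small modification is enough to draw a new plot. This time we check which range of the SVC parameter gamma gives good results, and how overfitting relates to the value of gamma.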

from sklearn.model_selection import validation_curve

from sklearn.datasets import load_digits

from sklearn.svm import SVC

import matplotlib.pyplot as plt

import numpy as np

digits = load_digits()

X = digits.data

y = digits.target

param_range = np.logspace(-5,-3,6)

train_loss, test_loss = validation_curve(

    SVC(), X, y, param_name='gamma', param_range=param_range, cv=10, scoring='neg_mean_squared_error')

# Average the mean squared error over the 10 folds for each of the 6 gamma values

train_loss_mean = -np.mean(train_loss, axis=1)

test_loss_mean = -np.mean(test_loss, axis=1)

plt.plot(param_range, train_loss_mean, 'o-', color="r",

         label="Training")

plt.plot(param_range, test_loss_mean, 'o-', color="g",

         label="Cross-validation")

plt.xlabel("Gamma")

plt.ylabel("Loss")

plt.legend(loc="best")

plt.show()
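The gamma with the lowest cross-validation loss can also be picked out programmatically. The sketch below repeats the `validation_curve` call with the same `param_range` and selects the minimum with `np.argmin`. As an assumption made for speed, it uses `cv=5`; `best_idx` and `best_gamma` are names introduced here.

```python
import numpy as np
from sklearn.datasets import load_digits
from sklearn.model_selection import validation_curve
from sklearn.svm import SVC

digits = load_digits()
X, y = digits.data, digits.target

param_range = np.logspace(-5, -3, 6)

# cv=5 is an assumption made for speed; the text above uses cv=10.
train_loss, test_loss = validation_curve(
    SVC(), X, y, param_name='gamma', param_range=param_range,
    cv=5, scoring='neg_mean_squared_error')

# Rows are gamma values, columns are folds; negate to turn scores into losses.
train_loss_mean = -np.mean(train_loss, axis=1)
test_loss_mean = -np.mean(test_loss, axis=1)

best_idx = int(np.argmin(test_loss_mean))
best_gamma = param_range[best_idx]
print("gamma with the lowest cross-validation loss:", best_gamma)
print("training loss there:", train_loss_mean[best_idx])
print("cross-validation loss there:", test_loss_mean[best_idx])
```

A gamma where the training loss keeps falling while the cross-validation loss rises is in the overfitting regime, so the selection is made on the cross-validation loss alone.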