Kibana startup error

Elasticsearch cluster did not respond with license information

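The environment for this error is an ES cluster made up of four Elasticsearch servers, all running ELK 7.1.0. Installation steps are widely documented online and are not repeated here.

cdh1 is the master node; cdh2, cdh3, and cdh4 are data nodes.

After Elasticsearch was installed, http://cdh1:9200 was reachable, so Kibana was installed next.

With Kibana installed, the Kibana interface at http://cdh1:5601 could not be opened. Instead, the following message appeared: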

Kibana server is not ready yet

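Troubleshooting:

First, use ps and netstat to check whether Elasticsearch and Kibana are both running. Both processes were up and both ports were listening.

Next, check the Kibana log with vim /var/log/kibana.log, which contains the errors below.

In short, Kibana itself starts, but the status of several plugins changes from yellow to red because: Elasticsearch cluster did not respond with license information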
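The port check can also be scripted. The following is a minimal sketch, assuming only that the services listen on TCP; the function name port_is_open is hypothetical and not part of any Elastic tooling.

```python
import socket


def port_is_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port can be established."""
    try:
        # create_connection resolves the host and performs the TCP handshake.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name-resolution failures.
        return False
```

For the setup described here, port_is_open("cdh1", 9200) and port_is_open("cdh1", 5601) would correspond to the Elasticsearch and Kibana checks. A listening port only shows that the process is up, not that the service is healthy, which is exactly the situation in this post.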

{"type":"log","@timestamp":"2020-04-01T03:14:13Z","tags":["status","plugin:metrics@undefined","info"],"pid":10215,"state":"green","message":"Status changed from uninitialized to green - Ready","prevState":"uninitialized","prevMsg":"uninitialized"}

{"type":"log","@timestamp":"2020-04-01T03:14:13Z","tags":["status","plugin:timelion@undefined","info"],"pid":10215,"state":"green","message":"Status changed from uninitialized to green - Ready","prevState":"uninitialized","prevMsg":"uninitialized"}

{"type":"log","@timestamp":"2020-04-01T03:14:13Z","tags":["status","plugin:elasticsearch@undefined","info"],"pid":10215,"state":"green","message":"Status changed from yellow to green - Ready","prevState":"yellow","prevMsg":"Waiting for Elasticsearch"}

{"type":"log","@timestamp":"2020-04-01T03:14:13Z","tags":["status","plugin:[email protected]","error"],"pid":10215,"state":"red","message":"Status changed from yellow to red - [data] Elasticsearch cluster did not respond with license information.","prevState":"yellow","prevMsg":"Waiting for Elasticsearch"}

{"type":"log","@timestamp":"2020-04-01T03:14:13Z","tags":["status","plugin:[email protected]","error"],"pid":10215,"state":"red","message":"Status changed from yellow to red - [data] Elasticsearch cluster did not respond with license information.","prevState":"yellow","prevMsg":"Waiting for Elasticsearch"}

{"type":"log","@timestamp":"2020-04-01T03:14:13Z","tags":["status","plugin:[email protected]","error"],"pid":10215,"state":"red","message":"Status changed from yellow to red - [data] Elasticsearch cluster did not respond with license information.","prevState":"yellow","prevMsg":"Waiting for Elasticsearch"}

{"type":"log","@timestamp":"2020-04-01T03:14:13Z","tags":["status","plugin:[email protected]","error"],"pid":10215,"state":"red","message":"Status changed from yellow to red - [data] Elasticsearch cluster did not respond with license information.","prevState":"yellow","prevMsg":"Waiting for Elasticsearch"}

{"type":"log","@timestamp":"2020-04-01T03:14:13Z","tags":["status","plugin:[email protected]","error"],"pid":10215,"state":"red","message":"Status changed from yellow to red - [data] Elasticsearch cluster did not respond with license information.","prevState":"yellow","prevMsg":"Waiting for Elasticsearch"}

{"type":"log","@timestamp":"2020-04-01T03:14:13Z","tags":["status","plugin:[email protected]","error"],"pid":10215,"state":"red","message":"Status changed from yellow to red - [data] Elasticsearch cluster did not respond with license information.","prevState":"yellow","prevMsg":"Waiting for Elasticsearch"}

{"type":"log","@timestamp":"2020-04-01T03:14:13Z","tags":["status","plugin:[email protected]","error"],"pid":10215,"state":"red","message":"Status changed from yellow to red - [data] Elasticsearch cluster did not respond with license information.","prevState":"yellow","prevMsg":"Waiting for Elasticsearch"}

{"type":"log","@timestamp":"2020-04-01T03:14:13Z","tags":["status","plugin:[email protected]","error"],"pid":10215,"state":"red","message":"Status changed from yellow to red - [data] Elasticsearch cluster did not respond with license information.","prevState":"yellow","prevMsg":"Waiting for Elasticsearch"}

{"type":"log","@timestamp":"2020-04-01T03:14:13Z","tags":["status","plugin:[email protected]","error"],"pid":10215,"state":"red","message":"Status changed from yellow to red - [data] Elasticsearch cluster did not respond with license information.","prevState":"yellow","prevMsg":"Waiting for Elasticsearch"}

{"type":"log","@timestamp":"2020-04-01T03:14:13Z","tags":["status","plugin:[email protected]","error"],"pid":10215,"state":"red","message":"Status changed from yellow to red - [data] Elasticsearch cluster did not respond with license information.","prevState":"yellow","prevMsg":"Waiting for Elasticsearch"}

{"type":"log","@timestamp":"2020-04-01T03:14:13Z","tags":["status","plugin:[email protected]","error"],"pid":10215,"state":"red","message":"Status changed from yellow to red - [data] Elasticsearch cluster did not respond with license information.","prevState":"yellow","prevMsg":"Waiting for Elasticsearch"}

{"type":"log","@timestamp":"2020-04-01T03:14:13Z","tags":["status","plugin:[email protected]","error"],"pid":10215,"state":"red","message":"Status changed from yellow to red - [data] Elasticsearch cluster did not respond with license information.","prevState":"yellow","prevMsg":"Waiting for Elasticsearch"}

{"type":"log","@timestamp":"2020-04-01T03:14:13Z","tags":["status","plugin:[email protected]","error"],"pid":10215,"state":"red","message":"Status changed from yellow to red - [data] Elasticsearch cluster did not respond with license information.","prevState":"yellow","prevMsg":"Waiting for Elasticsearch"}

{"type":"log","@timestamp":"2020-04-01T03:14:13Z","tags":["status","plugin:[email protected]","error"],"pid":10215,"state":"red","message":"Status changed from yellow to red - [data] Elasticsearch cluster did not respond with license information.","prevState":"yellow","prevMsg":"Waiting for Elasticsearch"}

{"type":"log","@timestamp":"2020-04-01T03:14:13Z","tags":["status","plugin:[email protected]","error"],"pid":10215,"state":"red","message":"Status changed from yellow to red - [data] Elasticsearch cluster did not respond with license information.","prevState":"yellow","prevMsg":"Waiting for Elasticsearch"}

The Kibana log points to Elasticsearch as the source of the problem, so the investigation moves to Elasticsearch.
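Because each Kibana log line is a JSON object, the red entries can be picked out programmatically instead of by eye. This is a rough sketch that relies only on the fields visible in the log lines above (tags, state, message); the helper name red_status_entries is hypothetical.

```python
import json


def red_status_entries(lines):
    """Yield (plugin_tag, message) for each status entry whose state is red."""
    for line in lines:
        line = line.strip()
        if not line.startswith("{"):
            continue  # skip blank lines and anything that is not a JSON object
        try:
            entry = json.loads(line)
        except json.JSONDecodeError:
            continue  # skip truncated or otherwise malformed lines
        tags = entry.get("tags", [])
        if "status" in tags and entry.get("state") == "red":
            plugin = next((t for t in tags if t.startswith("plugin:")), "unknown")
            yield plugin, entry.get("message", "")
```

Passing an open file handle for /var/log/kibana.log as lines would list every plugin that went red together with its message.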

Query the X-Pack information of Elasticsearch with the following request:

http://cdh1:9200/_xpack?pretty

{

"build" : {

"hash" : "606a173",

"date" : "2019-05-16T00:45:05.657481Z"

},

"license" : null,

"features" : {

"ccr" : {

"description" : "Cross Cluster Replication",

"available" : false,

"enabled" : true

},

"graph" : {

"description" : "Graph Data Exploration for the Elastic Stack",

"available" : false,

"enabled" : true

} ………………

……………………

As shown, license is null. A likely cause is that the Elasticsearch node being queried cannot establish a connection with the master node.
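The same check can be done from a script. The sketch below assumes the /_xpack endpoint returns the JSON shape shown above; both function names are hypothetical, and fetch_xpack_info assumes the cluster is reachable without authentication, as in this setup.

```python
import json
from urllib.request import urlopen


def fetch_xpack_info(base_url: str, timeout: float = 5.0) -> dict:
    """GET <base_url>/_xpack and return the parsed JSON body."""
    with urlopen(f"{base_url}/_xpack", timeout=timeout) as resp:
        return json.load(resp)


def license_is_missing(xpack_info: dict) -> bool:
    """True when the response carries no license object (null or absent)."""
    return xpack_info.get("license") is None
```

For example, license_is_missing(fetch_xpack_info("http://cdh1:9200")) would have returned True for the response shown above.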

The master node of the ES cluster is cdh1. Check the cluster log on the master node:

vim /var/log/elasticsearch/es-cluster-test.log

[2020-04-01T10:37:31,693][DEBUG][o.e.a.a.c.h.TransportClusterHealthAction] [cdh1] timed out while retrying [cluster:monitor/health] after failure (timeout [30s])

[2020-04-01T10:37:31,694][WARN ][r.suppressed ] [cdh1] path: /_cluster/health, params: {pretty=}

org.elasticsearch.discovery.MasterNotDiscoveredException: null

at org.elasticsearch.action.support.master.TransportMasterNodeAction$AsyncSingleAction$4.onTimeout(TransportMasterNodeAction.java:259) [elasticsearch-7.1.0.jar:7.1.0]

at org.elasticsearch.cluster.ClusterStateObserver$ContextPreservingListener.onTimeout(ClusterStateObserver.java:322) [elasticsearch-7.1.0.jar:7.1.0]

at org.elasticsearch.cluster.ClusterStateObserver$ObserverClusterStateListener.onTimeout(ClusterStateObserver.java:249) [elasticsearch-7.1.0.jar:7.1.0]

at org.elasticsearch.cluster.service.ClusterApplierService$NotifyTimeout.run(ClusterApplierService.java:555) [elasticsearch-7.1.0.jar:7.1.0]

at org.elasticsearch.common.util.concurrent.ThreadContext$ContextPreservingRunnable.run(ThreadContext.java:681) [elasticsearch-7.1.0.jar:7.1.0]

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1128) [?:?]

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:628) [?:?]

at java.lang.Thread.run(Thread.java:835) [?:?]

[2020-04-01T10:37:33,777][WARN ][o.e.c.c.ClusterFormationFailureHelper] [cdh1] master not discovered yet, this node has not previously joined a bootstrapped (v7+) cluster, and [cluster.initial_master_nodes] is empty on this node: have discovered []; discovery will continue using [10.1.203.39:9300, 10.1.203.40:9300, 10.1.203.41:9300] from hosts providers and [{cdh1}{Wcy_JzKjT7OWxAHVTxaYLg}{xMXoEeElT2end9CATinG8Q}{10.1.203.38}{10.1.203.38:9300}{ml.machine_memory=25112449024, xpack.installed=true, ml.max_open_jobs=20}] from last-known cluster state; node term 0, last-accepted version 0 in term 0

[2020-04-01T10:37:43,779][WARN ][o.e.c.c.ClusterFormationFailureHelper] [cdh1] master not discovered yet, this node has not previously joined a bootstrapped (v7+) cluster, and [cluster.initial_master_nodes] is empty on this node: have discovered []; discovery will continue using [10.1.203.39:9300, 10.1.203.40:9300, 10.1.203.41:9300] from hosts providers and [{cdh1}{Wcy_JzKjT7OWxAHVTxaYLg}{xMXoEeElT2end9CATinG8Q}{10.1.203.38}{10.1.203.38:9300}{ml.machine_memory=25112449024, xpack.installed=true, ml.max_open_jobs=20}] from last-known cluster state; node term 0, last-accepted version 0 in term 0

The log shows a MasterNotDiscoveredException: the cluster cannot find a master node, and the cluster.initial_master_nodes setting is empty.
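To spot this condition quickly in a long log, the warning text can be matched directly. This is a small sketch based only on the wording of the ClusterFormationFailureHelper lines above; the pattern and the function name are assumptions and may not match other Elasticsearch versions.

```python
import re

# Matches "[<node>] master not discovered yet ... [cluster.initial_master_nodes] is empty"
UNBOOTSTRAPPED = re.compile(
    r"\[(?P<node>[^\]]+)\] master not discovered yet"
    r".*\[cluster\.initial_master_nodes\] is empty"
)


def unbootstrapped_nodes(lines):
    """Return the names of nodes that report an empty cluster.initial_master_nodes."""
    nodes = set()
    for line in lines:
        match = UNBOOTSTRAPPED.search(line)
        if match:
            nodes.add(match.group("node"))
    return nodes
```

Run against the cluster log on cdh1, this would report cdh1 as a node that has never joined a bootstrapped cluster.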

Open the configuration file:

[root@cdh1 elk7.1.0]# vim /etc/elasticsearch/elasticsearch.yml

# For more information, consult the network module documentation.

#

# --------------------------------- Discovery ----------------------------------

#

# Pass an initial list of hosts to perform discovery when this node is started:

# The default list of hosts is ["127.0.0.1", "[::1]"]

#

#discovery.seed_hosts: ["host1", "host2"]

discovery.seed_hosts: ["cdh1", "cdh2","cdh3","cdh4"]

#

# Bootstrap the cluster using an initial set of master-eligible nodes:

#

#cluster.initial_master_nodes: ["node-1", "node-2"]

#

# For more information, consult the discovery and cluster formation module documentation.

#

# ---------------------------------- Gateway -----------------------------------

The cluster.initial_master_nodes setting is commented out. Enable it in the configuration file:

cluster.initial_master_nodes: ["cdh1"]

This marks cdh1 as the initial master node of the cluster. Then distribute the configuration file to the other nodes in the cluster.
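Before restarting, it can help to confirm on each node that the setting is really active and not still commented out. The sketch below is a deliberately simple line scan, not a YAML parser: it assumes flat dotted keys written on a single line, as in the excerpt above, and the function name active_setting is hypothetical.

```python
def active_setting(config_text: str, key: str):
    """Return the raw value of an uncommented 'key: value' line, or None."""
    for raw in config_text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # blank lines and commented-out settings do not count
        name, sep, value = line.partition(":")
        if sep and name.strip() == key:
            return value.strip()
    return None
```

Reading /etc/elasticsearch/elasticsearch.yml and calling active_setting(text, "cluster.initial_master_nodes") returns None for the original file and ["cdh1"] (as raw text) after the change.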

================================================

Solution:

Add cluster.initial_master_nodes: ["cdh1"] to /etc/elasticsearch/elasticsearch.yml.

Start the service with systemctl start elasticsearch.

Then check /var/log/elasticsearch/elasticsearch.log: there are no errors and the log looks normal.

[2020-04-01T11:34:22,957][INFO ][o.e.p.PluginsService ] [cdh1] loaded module [x-pack-watcher]

[2020-04-01T11:34:22,957][INFO ][o.e.p.PluginsService ] [cdh1] no plugins loaded

[2020-04-01T11:34:26,335][INFO ][o.e.x.s.a.s.FileRolesStore] [cdh1] parsed [0] roles from file [/etc/elasticsearch/roles.yml]

[2020-04-01T11:34:26,942][INFO ][o.e.x.m.p.l.CppLogMessageHandler] [cdh1] [controller/14787] [Main.cc@109] controller (64 bit): Version 7.1.0 (Build a8ee6de8087169) Copyright (c) 2019 Elasticsearch BV

[2020-04-01T11:34:27,314][DEBUG][o.e.a.ActionModule ] [cdh1] Using REST wrapper from plugin org.elasticsearch.xpack.security.Security

[2020-04-01T11:34:27,530][INFO ][o.e.d.DiscoveryModule ] [cdh1] using discovery type [zen] and seed hosts providers [settings]

[2020-04-01T11:34:28,230][INFO ][o.e.n.Node ] [cdh1] initialized

[2020-04-01T11:34:28,231][INFO ][o.e.n.Node ] [cdh1] starting ...

[2020-04-01T11:34:28,345][INFO ][o.e.t.TransportService ] [cdh1] publish_address {10.1.203.38:9300}, bound_addresses {[::]:9300}

[2020-04-01T11:34:28,352][INFO ][o.e.b.BootstrapChecks ] [cdh1] bound or publishing to a non-loopback address, enforcing bootstrap checks

[2020-04-01T11:34:28,366][INFO ][o.e.c.c.Coordinator ] [cdh1] setting initial configuration to VotingConfiguration{Wcy_JzKjT7OWxAHVTxaYLg}

[2020-04-01T11:34:28,497][INFO ][o.e.c.s.MasterService ] [cdh1] elected-as-master ([1] nodes joined)[{cdh1}{Wcy_JzKjT7OWxAHVTxaYLg}{Ch2XED_FSZC0NtDK_yuD-w}{10.1.203.38}{10.1.203.38:9300}{ml.machine_memory=25112449024, xpack.installed=true, ml.max_open_jobs=20} elect leader, _BECOME_MASTER_TASK_, _FINISH_ELECTION_], term: 1, version: 1, reason: master node changed {previous [], current [{cdh1}{Wcy_JzKjT7OWxAHVTxaYLg}{Ch2XED_FSZC0NtDK_yuD-w}{10.1.203.38}{10.1.203.38:9300}{ml.machine_memory=25112449024, xpack.installed=true, ml.max_open_jobs=20}]}

[2020-04-01T11:34:28,516][INFO ][o.e.c.c.CoordinationState] [cdh1] cluster UUID set to [bWkcxxG8S8OglVU9PPf35w]

[2020-04-01T11:34:28,528][INFO ][o.e.c.s.ClusterApplierService] [cdh1] master node changed {previous [], current [{cdh1}{Wcy_JzKjT7OWxAHVTxaYLg}{Ch2XED_FSZC0NtDK_yuD-w}{10.1.203.38}{10.1.203.38:9300}{ml.machine_memory=25112449024, xpack.installed=true, ml.max_open_jobs=20}]}, term: 1, version: 1, reason: Publication{term=1, version=1}

[2020-04-01T11:34:28,618][WARN ][o.e.x.s.a.s.m.NativeRoleMappingStore] [cdh1] Failed to clear cache for realms [[]]

[2020-04-01T11:34:28,619][INFO ][o.e.h.AbstractHttpServerTransport] [cdh1] publish_address {10.1.203.38:9200}, bound_addresses {[::]:9200}

[2020-04-01T11:34:28,619][INFO ][o.e.n.Node ] [cdh1] started

[2020-04-01T11:34:28,668][INFO ][o.e.g.GatewayService ] [cdh1] recovered [0] indices into cluster_state

[2020-04-01T11:34:28,770][INFO ][o.e.c.m.MetaDataIndexTemplateService] [cdh1] adding template [.watches] for index patterns [.watches*]

[2020-04-01T11:34:28,812][INFO ][o.e.c.m.MetaDataIndexTemplateService] [cdh1] adding template [.triggered_watches] for index patterns [.triggered_watches*]

[2020-04-01T11:34:28,846][INFO ][o.e.c.m.MetaDataIndexTemplateService] [cdh1] adding template [.watch-history-9] for index patterns [.watcher-history-9*]

[2020-04-01T11:34:28,892][INFO ][o.e.c.m.MetaDataIndexTemplateService] [cdh1] adding template [.monitoring-logstash] for index patterns [.monitoring-logstash-7-*]

[2020-04-01T11:34:28,935][INFO ][o.e.c.m.MetaDataIndexTemplateService] [cdh1] adding template [.monitoring-es] for index patterns [.monitoring-es-7-*]

[2020-04-01T11:34:28,962][INFO ][o.e.c.m.MetaDataIndexTemplateService] [cdh1] adding template [.monitoring-beats] for index patterns [.monitoring-beats-7-*]

[2020-04-01T11:34:28,986][INFO ][o.e.c.m.MetaDataIndexTemplateService] [cdh1] adding template [.monitoring-alerts-7] for index patterns [.monitoring-alerts-7]

[2020-04-01T11:34:29,006][INFO ][o.e.c.m.MetaDataIndexTemplateService] [cdh1] adding template [.monitoring-kibana] for index patterns [.monitoring-kibana-7-*]

[2020-04-01T11:34:29,081][INFO ][o.e.l.LicenseService ] [cdh1] license [c5c4dee7-2304-43b7-93a5-f1406ba877de] mode [basic] - valid

[2020-04-01T11:34:29,086][INFO ][o.e.x.i.a.TransportPutLifecycleAction] [cdh1] adding index lifecycle policy [watch-history-ilm-policy]

Once the other nodes in the ES cluster have finished starting, start the Kibana service again.
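Waiting for the remaining nodes can be automated by polling the cluster health before Kibana is started. The following is a sketch under stated assumptions: it expects a dict shaped like the /_cluster/health response (fields number_of_nodes and status), the caller supplies fetch_health, and both function names are hypothetical.

```python
import time
from typing import Callable


def cluster_ready(health: dict, expected_nodes: int) -> bool:
    """True when enough nodes have joined and the cluster status is not red."""
    return (
        health.get("number_of_nodes", 0) >= expected_nodes
        and health.get("status") in ("green", "yellow")
    )


def wait_for_cluster(
    fetch_health: Callable[[], dict],
    expected_nodes: int,
    attempts: int = 30,
    delay: float = 2.0,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Poll fetch_health until the cluster is ready or attempts run out."""
    for attempt in range(attempts):
        try:
            if cluster_ready(fetch_health(), expected_nodes):
                return True
        except OSError:
            pass  # node unreachable or HTTP error (no master yet); retry
        if attempt < attempts - 1:
            sleep(delay)
    return False
```

Here fetch_health could be a small function that requests http://cdh1:9200/_cluster/health with urllib.request.urlopen and parses the JSON body, with expected_nodes set to 4 for this cluster. Errors raised by urllib derive from OSError, so a node that is down or still has no master is simply retried.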

systemctl start kibana

The Kibana log now shows no errors:

{"type":"log","@timestamp":"2020-04-01T03:35:50Z","tags":["reporting","browser-driver","warning"],"pid":15307,"message":"Enabling the Chromium sandbox provides an additional layer of protection."}

{"type":"log","@timestamp":"2020-04-01T03:35:50Z","tags":["reporting","warning"],"pid":15307,"message":"Generating a random key for xpack.reporting.encryptionKey. To prevent pending reports from failing on restart, please set xpack.reporting.encryptionKey in kibana.yml"}

{"type":"log","@timestamp":"2020-04-01T03:35:50Z","tags":["status","plugin:[email protected]","info"],"pid":15307,"state":"green","message":"Status changed from uninitialized to green - Ready","prevState":"uninitialized","prevMsg":"uninitialized"}

{"type":"log","@timestamp":"2020-04-01T03:35:50Z","tags":["info","task_manager"],"pid":15307,"message":"Installing .kibana_task_manager index template version: 7010099."}

{"type":"log","@timestamp":"2020-04-01T03:35:50Z","tags":["info","task_manager"],"pid":15307,"message":"Installed .kibana_task_manager index template: version 7010099 (API version 1)"}

{"type":"log","@timestamp":"2020-04-01T03:35:51Z","tags":["info","migrations"],"pid":15307,"message":"Creating index .kibana_1."}

{"type":"log","@timestamp":"2020-04-01T03:35:52Z","tags":["info","migrations"],"pid":15307,"message":"Pointing alias .kibana to .kibana_1."}

{"type":"log","@timestamp":"2020-04-01T03:35:52Z","tags":["info","migrations"],"pid":15307,"message":"Finished in 496ms."}

{"type":"log","@timestamp":"2020-04-01T03:35:52Z","tags":["listening","info"],"pid":15307,"message":"Server running at http://cdh1:5601"}

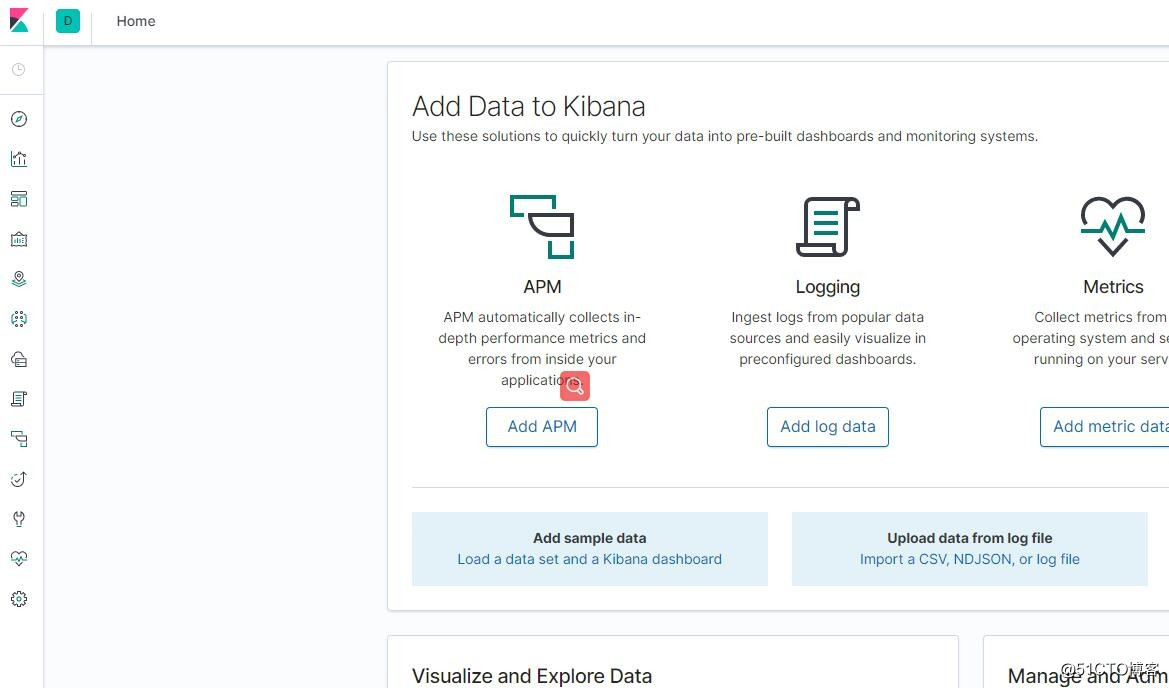

{"type":"log","@timestamp":"2020-04-01T03:35:52Z","tags":["status","plugin:[email protected]","info"],"pid":15307,"state":"green","message":"Status changed from yellow to green - Ready","prevState":"yellow","prevMsg":"Waiting for Elasticsearch"}

Opening http://cdh1:5601 in a browser now loads the Kibana interface.