十、MapReduce综合实战

综合实战:环境大数据

案列目的

1.学会分析环境数据文件;

2.学会编写解析环境数据文件并进行统计的代码;

3.学会进行递归MapReduce。

案例要求

要求实验结束时,每位学生均已在master服务器上运行从北京2016年1月到6月这半年间的历史天气和空气质量数据文件中分析出的环境统计结果,包含月平均气温、空气质量分布情况等。

实现原理

近年来,由于雾霾问题的持续发酵,越来越多的人开始关注城市相关的环境数据,包括空气质量数据、天气数据等等。

如果每小时记录一次城市的天气实况和空气质量实况信息,则每个城市每天都会产生24条环境数据,全国所有2500多个城市如果均如此进行记录,那每天产生的数据量将达到6万多条,每年则会产生2190万条记录,已经可以称得上环境大数据。

对于这些原始监测数据,我们可以根据时间的维度来进行统计,从而得出与该城市相关的日度及月度平均气温、空气质量优良及污染天数等等,从而为研究空气污染物扩散条件提供有力的数据支持。

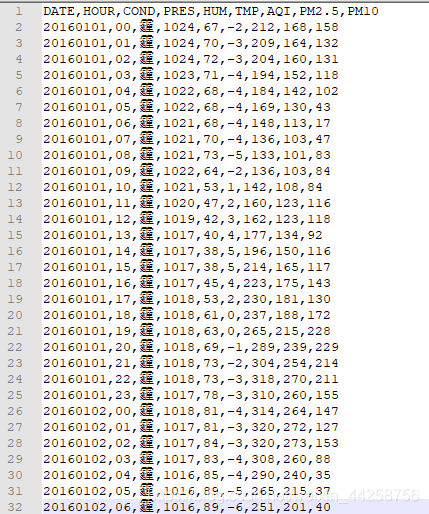

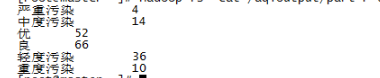

本实验中选取了北京2016年1月到6月这半年间的每小时天气和空气质量数据(未取到数据的字段填充“N/A”),利用MapReduce来统计月度平均气温和半年内空气质量为优、良、轻度污染、中度污染、重度污染和严重污染的天数。

实验数据如下

第一题:编写月平均气温统计程序

import java.io.IOException;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.Mapper;

import org.apache.hadoop.mapreduce.Reducer;

import org.apache.hadoop.mapreduce.lib.input.TextInputFormat;

import org.apache.hadoop.mapreduce.lib.output.TextOutputFormat;

import org.apache.hadoop.mapreduce.lib.partition.HashPartitioner;

public class TmpStat

{

public static class StatMapper extends Mapper<Object, Text, Text, IntWritable>

{

private IntWritable intValue = new IntWritable();

private Text dateKey = new Text();

public void map(Object key, Text value, Context context)

throws IOException, InterruptedException

{

String[] items = value.toString().split(",");

String date = items[0];

String tmp = items[5];

if(!"DATE".equals(date) && !"N/A".equals(tmp))

{//排除第一行说明以及未取到数据的行

dateKey.set(date.substring(0, 6));

intValue.set(Integer.parseInt(tmp));

context.write(dateKey, intValue);

}

}

}

public static class StatReducer extends Reducer<Text, IntWritable, Text, IntWritable>

{

private IntWritable result = new IntWritable();

public void reduce(Text key, Iterable<IntWritable> values, Context context)

throws IOException, InterruptedException

{

int tmp_sum = 0;

int count = 0;

for(IntWritable val : values)

{

tmp_sum += val.get();

count++;

}

int tmp_avg = tmp_sum/count;

result.set(tmp_avg);

context.write(key, result);

}

}

public static void main(String args[])

throws IOException, ClassNotFoundException, InterruptedException

{

Configuration conf = new Configuration();

Job job = new Job(conf, "MonthlyAvgTmpStat");

job.setInputFormatClass(TextInputFormat.class);

TextInputFormat.setInputPaths(job, args[0]);

job.setJarByClass(TmpStat.class);

job.setMapperClass(StatMapper.class);

job.setMapOutputKeyClass(Text.class);

job.setMapOutputValueClass(IntWritable.class);

job.setPartitionerClass(HashPartitioner.class);

job.setReducerClass(StatReducer.class);

job.setNumReduceTasks(Integer.parseInt(args[2]));

job.setOutputKeyClass(Text.class);

job.setOutputValueClass(IntWritable.class);

job.setOutputFormatClass(TextOutputFormat.class);

TextOutputFormat.setOutputPath(job, new Path(args[1]));

System.exit(job.waitForCompletion(true) ? 0 : 1);

}

}

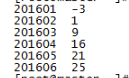

运行结果:

第二题:编写每日空气质量统计程序

import java.io.IOException;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.Mapper;

import org.apache.hadoop.mapreduce.Reducer;

import org.apache.hadoop.mapreduce.lib.input.TextInputFormat;

import org.apache.hadoop.mapreduce.lib.output.TextOutputFormat;

import org.apache.hadoop.mapreduce.lib.partition.HashPartitioner;

public class AqiStatDaily

{

public static class StatMapper extends Mapper<Object, Text, Text, IntWritable>

{

private IntWritable intValue = new IntWritable();

private Text dateKey = new Text();

public void map(Object key, Text value, Context context)

throws IOException, InterruptedException

{

String[] items = value.toString().split(",");

String date = items[0];

String aqi = items[6];

if(!"DATE".equals(date) && !"N/A".equals(aqi))

{

dateKey.set(date);

intValue.set(Integer.parseInt(aqi));

context.write(dateKey, intValue);

}

}

}

public static class StatReducer extends Reducer<Text, IntWritable, Text, IntWritable>

{

private IntWritable result = new IntWritable();

public void reduce(Text key, Iterable<IntWritable> values, Context context)

throws IOException, InterruptedException

{

int aqi_sum = 0;

int count = 0;

for(IntWritable val : values)

{

aqi_sum += val.get();

count++;

}

int aqi_avg = aqi_sum/count;

result.set(aqi_avg);

context.write(key, result);

}

}

public static void main(String args[])

throws IOException, ClassNotFoundException, InterruptedException

{

Configuration conf = new Configuration();

Job job = new Job(conf, "AqiStatDaily");

job.setInputFormatClass(TextInputFormat.class);

TextInputFormat.setInputPaths(job, args[0]);

job.setJarByClass(AqiStatDaily.class);

job.setMapperClass(StatMapper.class);

job.setMapOutputKeyClass(Text.class);

job.setMapOutputValueClass(IntWritable.class);

job.setPartitionerClass(HashPartitioner.class);

job.setReducerClass(StatReducer.class);

job.setNumReduceTasks(Integer.parseInt(args[2]));

job.setOutputKeyClass(Text.class);

job.setOutputValueClass(IntWritable.class);

job.setOutputFormatClass(TextOutputFormat.class);

TextOutputFormat.setOutputPath(job, new Path(args[1]));

System.exit(job.waitForCompletion(true) ? 0 : 1);

}

}

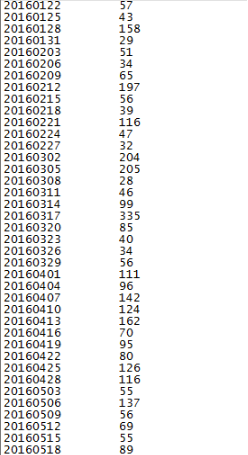

运行结果:

第三题: 编写各空气质量天数统计程序

import java.io.IOException;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.Mapper;

import org.apache.hadoop.mapreduce.Reducer;

import org.apache.hadoop.mapreduce.lib.input.TextInputFormat;

import org.apache.hadoop.mapreduce.lib.output.TextOutputFormat;

import org.apache.hadoop.mapreduce.lib.partition.HashPartitioner;

public class AqiStat

{

public static final String GOOD = "优";

public static final String MODERATE = "良";

public static final String LIGHTLY_POLLUTED = "轻度污染";

public static final String MODERATELY_POLLUTED = "中度污染";

public static final String HEAVILY_POLLUTED = "重度污染";

public static final String SEVERELY_POLLUTED = "严重污染";

public static class StatMapper extends Mapper<Object, Text, Text, IntWritable>

{

private final static IntWritable one = new IntWritable(1);

private Text cond = new Text();

// map方法,根据AQI值,将对应空气质量的天数加1

public void map(Object key, Text value, Context context)

throws IOException, InterruptedException

{

String[] items = value.toString().split("\t");

int aqi = Integer.parseInt(items[1]);

if(aqi <= 50)

{

// 优

cond.set(GOOD);

}

else if(aqi <= 100)

{

// 良

cond.set(MODERATE);

}

else if(aqi <= 150)

{

// 轻度污染

cond.set(LIGHTLY_POLLUTED);

}

else if(aqi <= 200)

{

// 中度污染

cond.set(MODERATELY_POLLUTED);

}

else if(aqi <= 300)

{

// 重度污染

cond.set(HEAVILY_POLLUTED);

}

else

{

// 严重污染

cond.set(SEVERELY_POLLUTED);

}

context.write(cond, one);

}

}

// 定义reduce类,对相同的空气质量状况,把它们<K,VList>中VList值全部相加

public static class StatReducer extends Reducer<Text, IntWritable, Text, IntWritable>

{

private IntWritable result = new IntWritable();

public void reduce(Text key, Iterable<IntWritable> values,Context context)

throws IOException, InterruptedException

{

int sum = 0;

for (IntWritable val : values)

{

sum += val.get();

}

result.set(sum);

context.write(key, result);

}

}

public static void main(String args[])

throws IOException, ClassNotFoundException, InterruptedException

{

Configuration conf = new Configuration();

Job job = new Job(conf, "AqiStat");

job.setInputFormatClass(TextInputFormat.class);

TextInputFormat.setInputPaths(job, args[0]);

job.setJarByClass(AqiStat.class);

job.setMapperClass(StatMapper.class);

job.setCombinerClass(StatReducer.class);

job.setMapOutputKeyClass(Text.class);

job.setMapOutputValueClass(IntWritable.class);

job.setPartitionerClass(HashPartitioner.class);

job.setReducerClass(StatReducer.class);

job.setNumReduceTasks(Integer.parseInt(args[2]));

job.setOutputKeyClass(Text.class);

job.setOutputValueClass(IntWritable.class);

job.setOutputFormatClass(TextOutputFormat.class);

TextOutputFormat.setOutputPath(job, new Path(args[1]));

System.exit(job.waitForCompletion(true) ? 0 : 1);

}

}

运行结果:

有什么不懂的,多多问问博主呦!!!