1. What is ImagesPipeline?

ImagesPipeline is a built-in Scrapy item pipeline class for handling images: during a crawl it downloads the images referenced by scraped items to local storage.

2. Advantages of ImagesPipeline:

- Converts downloaded images to a common format (JPEG) and color mode (RGB)

- Avoid repeated downloads

- Thumbnail generation

- Image size filtering (images below a minimum width or height can be dropped)

- Asynchronous download
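
Most of these features are switched on from settings.py. The fragment below is a minimal sketch: the setting names are the ones documented by Scrapy, but the concrete values (90 days, the thumbnail sizes, the 110-pixel minimum) are illustrative assumptions and are not used by the project in this article. Note that ImagesPipeline needs the Pillow library installed.

```python
# settings.py (fragment) - all values below are illustrative assumptions

# Avoid repeated downloads: skip images fetched within the last 90 days
IMAGES_EXPIRES = 90

# Thumbnail generation: create two thumbnails for every downloaded image
IMAGES_THUMBS = {
    "small": (50, 50),
    "big": (270, 270),
}

# Size filter: drop images smaller than 110x110 pixels
IMAGES_MIN_HEIGHT = 110
IMAGES_MIN_WIDTH = 110
```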

3. ImagesPipeline workflow

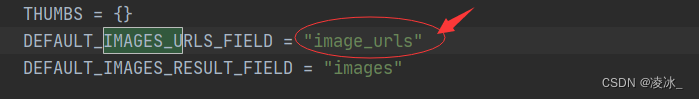

- The spider scrapes an item and puts the URLs of its images into the image_urls field.

- The item returned from the spider is passed to the item pipeline.

- When the item reaches the ImagesPipeline, the URLs in image_urls are scheduled and downloaded by the Scrapy scheduler and downloader.

- Once the downloads complete successfully, the images field is filled with information about each image, such as its download path, source URL, and checksum.
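
The last step can be pictured with a small standard-library sketch that does not use Scrapy at all. It mimics the default naming scheme (full/ plus the SHA-1 hash of the URL, the same expression that appears in the commented-out override later in this article) and builds a dict shaped like one entry of the images field. The URL, the fake image bytes, the helper names, and the use of MD5 for the checksum are assumptions made for illustration.

```python
import hashlib


def default_image_path(url: str) -> str:
    """Mimic the default naming scheme: full/<SHA-1 of the URL>.jpg."""
    return f"full/{hashlib.sha1(url.encode('utf-8')).hexdigest()}.jpg"


def simulate_result(url: str, body: bytes) -> dict:
    """Build a dict shaped like one entry of the item's `images` field."""
    return {
        "url": url,
        "path": default_image_path(url),
        # Assumption: the checksum is an MD5 hash of the image contents
        "checksum": hashlib.md5(body).hexdigest(),
    }


# Hypothetical URL and fake image bytes, for illustration only
item = {"image_urls": ["http://example.com/a.jpg"]}
item["images"] = [
    simulate_result(u, b"fake image bytes") for u in item["image_urls"]
]
print(item["images"][0]["path"])
```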

4. Use ImagesPipeline to download all the pictures of beautiful women on a page

(1) Web page analysis

Details page information

(2) Create project

scrapy startproject Uis

cd Uis

scrapy genspider -t crawl ai_img xx.com

(3) Modify the settings.py file

USER_AGENT = "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/113.0.0.0 Safari/537.36"

ROBOTSTXT_OBEY = False

DOWNLOAD_DELAY = 1

(4) Write the spider file ai_img.py

First check the ImagesPipeline source file: by default it reads the image URLs from the item's image_urls field.

    import scrapy
    from scrapy.linkextractors import LinkExtractor
    from scrapy.spiders import CrawlSpider, Rule

    from ..items import UisItem


    class AiImgSpider(CrawlSpider):
        name = "ai_img"
        allowed_domains = ["netbian.com"]
        start_urls = ["http://www.netbian.com/mei/index.htm"]  # start URL

        rules = (
            # Match the detail pages and parse each one
            Rule(
                LinkExtractor(allow=r"^http://www\.netbian\.com/desk/.*$"),
                callback="parse_item",
                follow=False,
            ),
        )

        def parse_item(self, response):
            # Create the item object
            item = UisItem()
            # Image URL (a string) that will be handed to the pipeline
            url_ = response.xpath('//div[@class="pic"]//p/a/img/@src').get()
            # Image title
            title_ = response.xpath("//h1/text()").get()
            # Note: image_urls must be a list
            item["image_urls"] = [url_]
            item["title_"] = title_
            return item

(5) Write the items.py file

    import scrapy


    class UisItem(scrapy.Item):
        # image_urls is the default field read by ImagesPipeline (see its source)
        image_urls = scrapy.Field()
        title_ = scrapy.Field()

(6) Write the pipeline in pipelines.py

First look at the ImagesPipeline source file, paying attention to two things:

1) The default save folder (set in file_path)

2) How the item object is obtained (get_media_requests)
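
The pipeline in this step builds file names from the page title, removing only spaces and commas. Titles can also contain characters such as / or ? that are not valid in file names, or the title may be missing entirely. Below is a minimal sketch of a stricter cleanup, assuming a hypothetical helper named safe_filename; it is not part of Scrapy or of the original project.

```python
import re


def safe_filename(title, fallback="untitled"):
    """Turn a page title into a string that is safe to use as a file name."""
    # Drop whitespace and commas, similar to what the pipeline in this step does
    name = re.sub(r"[\s,]+", "", title or "")
    # Replace characters that are invalid in file names on common systems
    name = re.sub(r'[\\/:*?"<>|]', "_", name)
    # Fall back to a fixed name when nothing usable is left
    return name or fallback


print(safe_filename("sunset, over / the sea?"))  # prints "sunsetover_thesea_"
```

Inside file_path, such a helper could replace the chained replace() calls, so that a missing or unusual title does not raise an error or produce an unexpected path.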

    # Define your item pipelines here
    #
    # Don't forget to add your pipeline to the ITEM_PIPELINES setting
    # See: https://docs.scrapy.org/en/latest/topics/item-pipeline.html

    import hashlib

    # useful for handling different item types with a single interface
    from itemadapter import ItemAdapter
    from scrapy import Request
    from scrapy.pipelines.images import ImagesPipeline
    from scrapy.utils.python import to_bytes


    # Inherit from ImagesPipeline
    class UisPipeline(ImagesPipeline):
        # Override 1: change only the default folder
        # def file_path(self, request, response=None, info=None, *, item=None):
        #     image_guid = hashlib.sha1(to_bytes(request.url)).hexdigest()
        #     # Change the default folder path
        #     return f"desk/{image_guid}.jpg"

        # Override 2: change both the folder and the file name
        def file_path(self, request, response=None, info=None, *, item=None):
            # Get the item object passed along in the request meta
            item_ = request.meta.get("db")
            # Build the image name from the title
            image_guid = item_["title_"].replace(" ", "").replace(",", "")
            print(image_guid)
            # Change the default folder path
            return f"my/{image_guid}.jpg"

        # Override: attach the item (which carries the image title) to each request
        def get_media_requests(self, item, info):
            urls = ItemAdapter(item).get(self.images_urls_field, [])
            # Pass the item object along with the request
            return [Request(u, meta={"db": item}) for u in urls]

(7) Edit settings.py and enable the image pipeline

    ITEM_PIPELINES = {
        # Ordinary pipeline
        # "Uis.pipelines.UisPipeline": 300,
        # "scrapy.pipelines.images.ImagesPipeline": 301,  # enable the built-in image pipeline
        "Uis.pipelines.UisPipeline": 302,  # enable the custom image pipeline
    }

    # Path where downloaded images are saved
    IMAGES_STORE = "./"

(8) Run: scrapy crawl ai_img

(9) Results display
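
One quick way to check the result is to list the files the pipeline wrote. The sketch below assumes the settings used in this article (IMAGES_STORE set to "./" and the my/ folder returned by file_path); the helper name list_downloaded is hypothetical.

```python
from pathlib import Path


def list_downloaded(store="./", folder="my"):
    """Return the sorted names of .jpg files saved under <store>/<folder>."""
    target = Path(store) / folder
    if not target.is_dir():
        # Nothing has been downloaded yet, or the folder name differs
        return []
    return sorted(p.name for p in target.glob("*.jpg"))


# Run from the directory where `scrapy crawl ai_img` was executed
for name in list_downloaded():
    print(name)
```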