
With the widespread adoption of the Internet and terminal devices, live broadcasting has become increasingly common in daily life, and more and more people interact with anchors during live broadcasts as a form of entertainment. However, frequent stuttering on some live broadcast platforms and monotonous gift special effects significantly degrade the viewing experience. LiveVideoStack invited Jiang Min from Tencent Cloud to introduce how Tencent Cloud applies cloud rendering in live broadcast scenarios to deliver a better experience.

Text/Jiang Min

Editor/LiveVideoStack

Hello everyone, I am Jiang Min from Tencent Cloud. The topic I will share today is a technical exploration of combining real-time cloud rendering with live broadcast scenarios. I joined the Tencent Cloud rendering team in 2020. The Tencent Cloud rendering platform grew out of the original cloud gaming platform and now supports 3D cloud applications, virtual live broadcast, cloud computers, and other related functions. Today's talk has two parts: an introduction to cloud rendering, and an exploration of live broadcast scenarios combined with cloud rendering.

-01-

Introduction to cloud rendering

1.1| What is cloud rendering

Cloud rendering means deploying software or games in the cloud, where the images are also rendered. It supports real-time user operation, provides SDKs for all client platforms (Web, Android, iOS), and gives users an experience close to local operation in latency, with high image quality. The figure shows the main cloud rendering process. When a user operates in a web page, mini program, or app, the input signaling is sent to the cloud rendering instance. The instance can run 3D software, games, or other applications. The application or game receives the instructions, responds accordingly, and generates the corresponding frames. These frames are captured, encoded, and sent to the cloud rendering client.

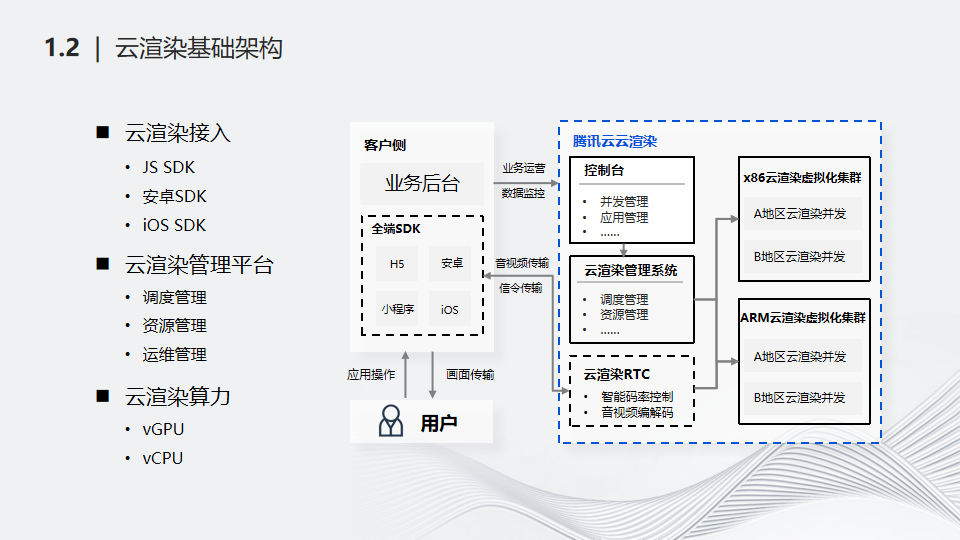

1.2| Cloud rendering infrastructure

Let’s look at the infrastructure of cloud rendering. Functionally, the cloud rendering architecture can be divided into three layers: the customer access layer, the cloud rendering logic processing layer, and the cloud rendering computing power layer.

The top layer is the customer access layer. This is where cloud rendering customers integrate the cloud rendering client SDK and call the APIs for cloud rendering instance management, resource management, and related functions.

The middle layer is the cloud rendering logic processing layer. Its main functions are installing and running customer applications, resource management, concurrency scheduling, and cloud rendering RTC transmission.

The bottom layer is the cloud rendering computing power layer, which provides GPU resources, CPU resources, network resources, edge node resources, and so on.

-02-

Exploration of live broadcast scenes combined with cloud rendering

The following sections walk through specific scenarios to introduce the directions and technical explorations of cloud rendering in live broadcasting.

2.1| Danmaku Game Live Broadcast

First, let’s look at danmaku (barrage) game live broadcasts, which have recently become popular. The anchor runs a danmaku mini-game in the live broadcast room, and the audience interacts with what is on screen by posting comments or sending gifts. Danmaku games can increase both the popularity of a live broadcast room and the revenue of a session. Compared with traditional live broadcasts, their biggest feature is stronger interactivity and a stronger sense of audience participation. For example, viewers can decide the outcome of a game through their comments or gifts. Our survey found that all major domestic live broadcast platforms now support danmaku game live broadcasts.

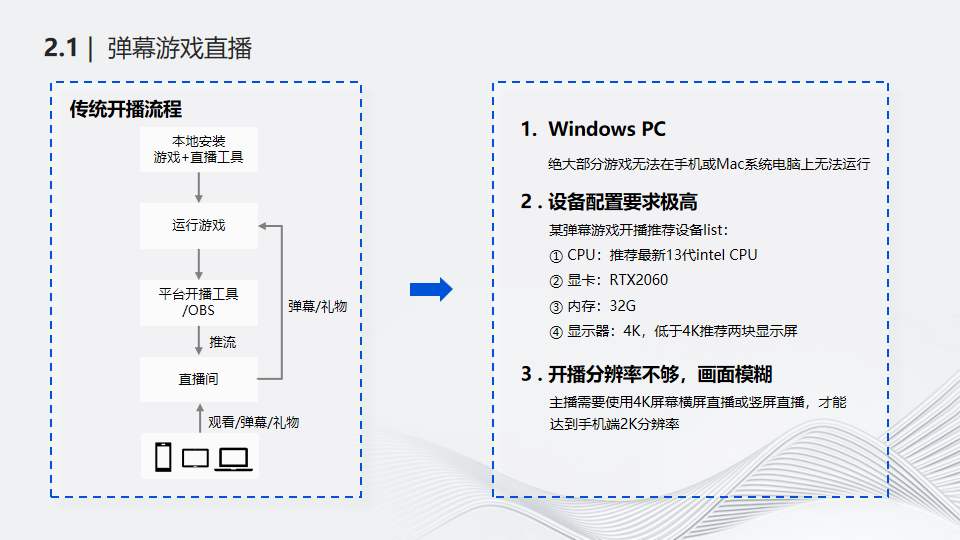

Let’s look at the traditional broadcast process. First, the anchor installs the game and a broadcast tool on a PC. The broadcast tool may be provided by the live broadcast platform or be open source software such as OBS. Next, the anchor runs the game and the broadcast tool, adjusts the tool's settings, and pushes the game screen to the live broadcast backend. Finally, the anchor and the players enter the live broadcast room, and the players send comments or buy gifts in the room to take part in the game.

This process exposes three problems that need to be solved:

(1) Windows PC requirements. Most danmaku games are developed for Windows and cannot run on mobile phones or macOS computers. In addition, in some extreme scenarios, mobile phone performance cannot meet the requirements of danmaku games.

(2) High hardware performance requirements. Viewers of danmaku game live broadcasts interact in real time by sending comments and purchasing gifts. The interactive content must be computed and rendered in real time while still delivering a smooth, high-quality gaming and viewing experience, which mobile phones and ordinary computers cannot provide. The right side of the picture above shows the broadcast equipment recommended by one danmaku game developer: ① processor: the latest 13th-generation Intel CPU; ② graphics card: performance no lower than an RTX 2060; ③ memory: no less than 32 GB; ④ monitor: two 4K screens. By moving the computation and rendering of danmaku games to the cloud and relying on the stronger computing and graphics capabilities of cloud servers, the limits of local terminal performance no longer matter. At present, most danmaku games have already moved to the cloud for processing and rendering.

(3) Insufficient broadcast resolution and a blurry picture. Viewers mainly enter the live broadcast room on mobile phones, so danmaku games are developed for portrait (vertical) screens. Mobile phone resolution can reach 2K, so to give the audience a good viewing experience, the anchor needs to broadcast from the highest-resolution monitor available, ideally 4K.

In response to the above problems, Tencent Cloud has provided three targeted solutions:

The first solution is broadcasting with conventional lightweight cloud rendering. It is based on the Tencent Cloud rendering SaaS solution: customers do not need to integrate any cloud rendering API or SDK and simply access the cloud rendering instance through a web browser. The picture is streamed to the local PC, and the anchor then uses a broadcast tool to start broadcasting as in the ordinary process. Because the danmaku game runs in the cloud, this solves the hardware performance problem. The anchor only needs to estimate in advance how much computing power the game and the live broadcast require, and then purchase machines and concurrency with the corresponding computing power. However, the game screen is still captured on the anchor's PC, so the resolution problem is not effectively solved. In addition, the anchor may broadcast multiple games on multiple live broadcast platforms, and different games and platforms require different configurations, so neither the broadcasting environment nor the broadcasting process is standardized. These problems are addressed by the deep integration solution.

The second solution is broadcasting with deeply integrated cloud rendering. The live broadcast platform integrates the cloud rendering SDK into its own broadcast tool, so that the tool can obtain the audio and video data captured and encoded by cloud rendering and push the stream to the live broadcast room either directly or after further optimization. This solves both the hardware performance problem and the resolution problem of the anchor's PC. Because the anchor only needs to open the platform's broadcast assistant to start broadcasting, it also standardizes the broadcasting process. The cost is that the platform must integrate the cloud rendering SDK and related APIs, which requires some development work. Some platforms are already doing this.

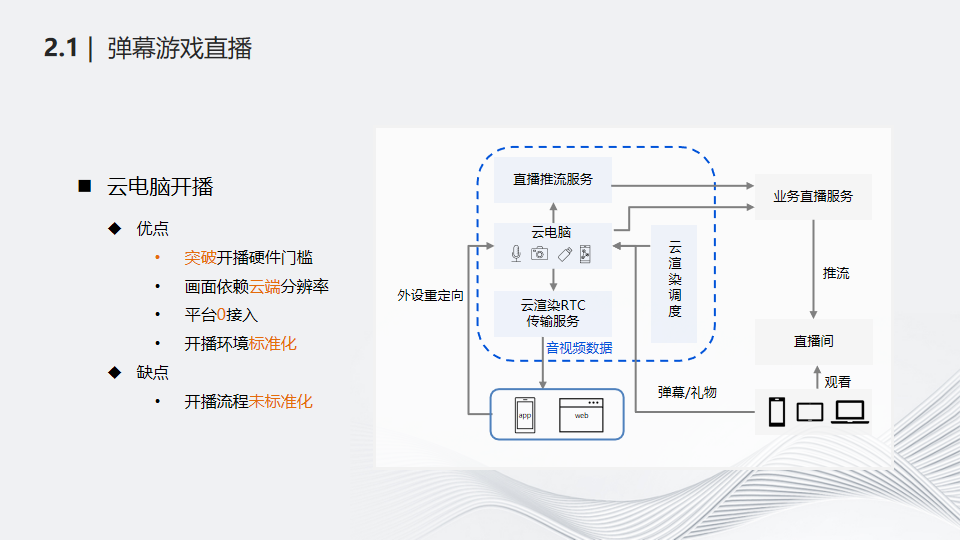

The last solution is broadcasting from a cloud computer. A cloud computer streams the entire desktop to the anchor, not just the game screen, and redirects the local terminal's microphone, camera, and other hardware devices to the cloud computer. Before broadcasting, the anchor installs the danmaku game, the broadcast assistant, and other related software on the cloud computer, and then starts the broadcast there. All operations are performed on the cloud computer. Because the game runs in the cloud, the hardware performance problem is solved; and because the cloud computer pushes the audio and video data directly to the live broadcast platform, the resolution problem of the anchor's PC is solved as well. The platform can also build cloud computer images tailored to each scenario. The anchor selects the required image to create the broadcast environment before starting, so the whole broadcasting process is relatively standardized. This solution is very lightweight for the platform to adopt, with almost no additional development cost.

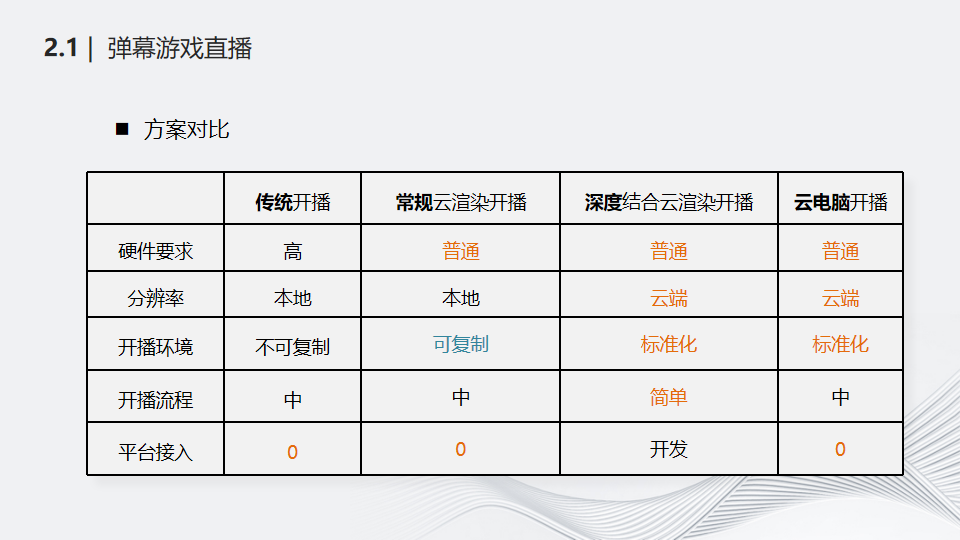

Finally, let’s compare the three solutions with traditional broadcasting in five aspects: hardware requirements, resolution, broadcasting environment, broadcasting process, and platform integration.

2.2| Virtual special effects

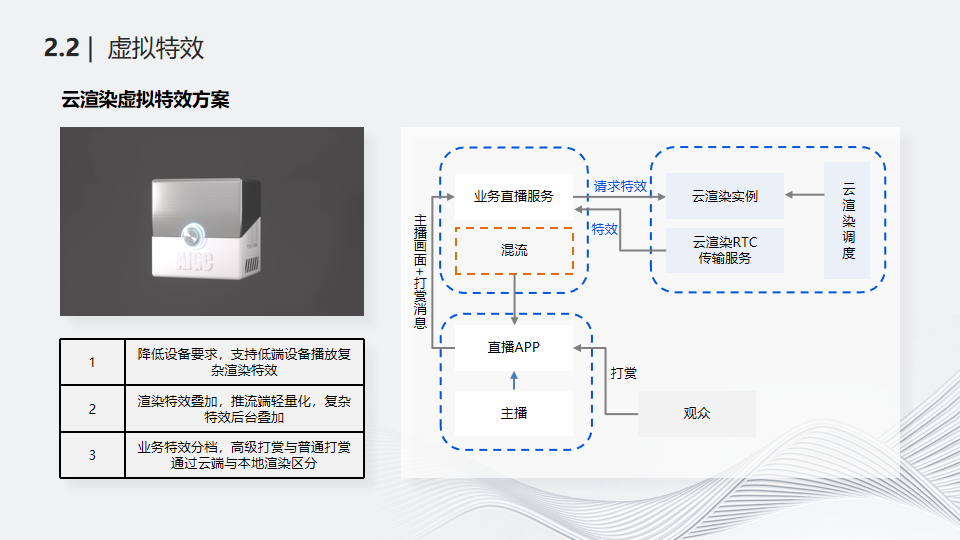

Next, let’s look at the virtual special effects scenario. Traditional gift giving basically follows the process shown in the figure above: the user purchases a gift, a message is sent to the live broadcast app, and the gift effect is rendered locally on the viewer's terminal. Because the effects are rendered locally, the performance differences between terminals must be taken into account, so the effects are relatively simple and lack variety. Cloud-rendered virtual special effects aim to achieve the following goals:

Increase the diversity of special effects and make them more visually impressive.

Mask terminal performance differences, so that every special effect can be played on any terminal.

Personalize special effects, so that different users can get different effects.

As shown in the picture above, we move the rendering of virtual special effects from the viewer's terminal to the cloud, which masks the performance differences between terminals and makes more complex effects possible. The right side of the figure shows the basic process. A viewer in the live broadcast room gives a reward in the app, and the reward message is sent to the live broadcast platform's service. The live broadcast service forwards the special effects request to the cloud rendering service, and the effect is rendered on a cloud rendering instance. The instance captures and encodes the rendered frames and forwards the encoded stream to the live broadcast platform, which mixes the special effects with the live video stream and pushes the mixed stream to the live broadcast room.

When we started designing virtual special effects, we thought about how to make them personalized for each user. The picture above shows how AIGC capabilities are used to achieve this. The user provides a prompt, and the backend runs text moderation, prompt optimization, text-to-image generation, and image moderation, then generates the special effect for the corresponding scene. Platforms can also upload their own models and combine them with the cloud rendering service to produce effects that better fit their business.
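The staged flow described above can be sketched as a small pipeline that stops as soon as a moderation stage rejects the content. This is only an illustration: the function `run_effect_pipeline`, its parameters, and the returned dictionary are hypothetical names, and the four stage callables stand in for whatever moderation and generation services a platform actually uses.

```python
def run_effect_pipeline(prompt, review_text, optimize_prompt, draw_image, review_image):
    """Run the stages in order and stop early when a review stage rejects.

    All four stage arguments are caller-supplied callables (placeholders here):
    review_text(str) -> bool, optimize_prompt(str) -> str,
    draw_image(str) -> image object, review_image(image) -> bool.
    """
    if not review_text(prompt):
        return {"status": "rejected", "stage": "text_review"}
    refined = optimize_prompt(prompt)
    image = draw_image(refined)
    if not review_image(image):
        return {"status": "rejected", "stage": "image_review"}
    # The approved image would then be handed to the effect renderer.
    return {"status": "ok", "prompt": refined, "image": image}
```

Passing the stages in as callables keeps the sketch independent of any particular model, which also matches the point that platforms can plug in their own models.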

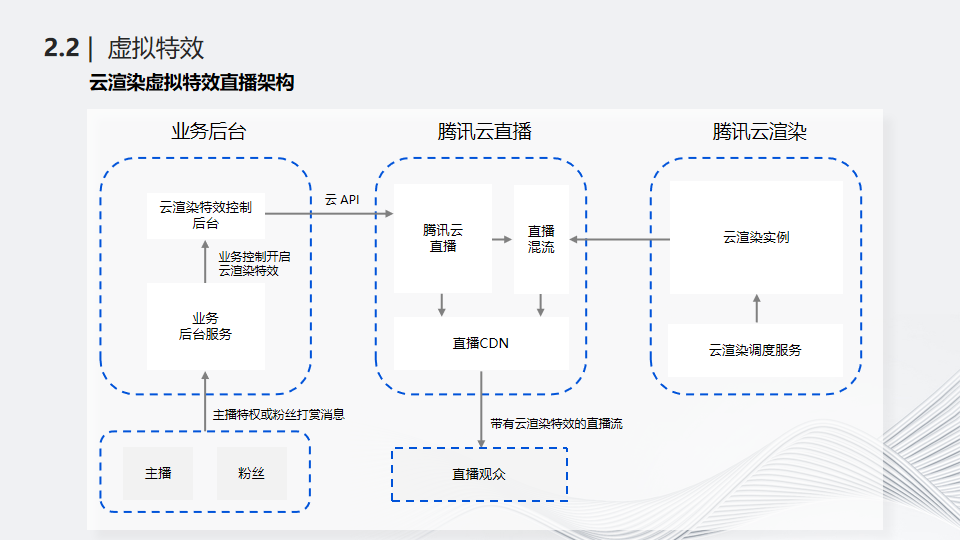

The figure above uses Tencent Cloud Live Broadcast as an example to show how cloud-rendered virtual special effects are combined with a live broadcast system. When an anchor or a fan gives a reward, a message is sent to the business backend, which passes the special effects request to the cloud rendering system. The cloud rendering system sends the rendered effect data to the Tencent Cloud live broadcast system, where it is mixed with the live stream, and the mixed stream is finally pushed to the live broadcast room.

2.3| Multi-person interactive live broadcast on the same screen

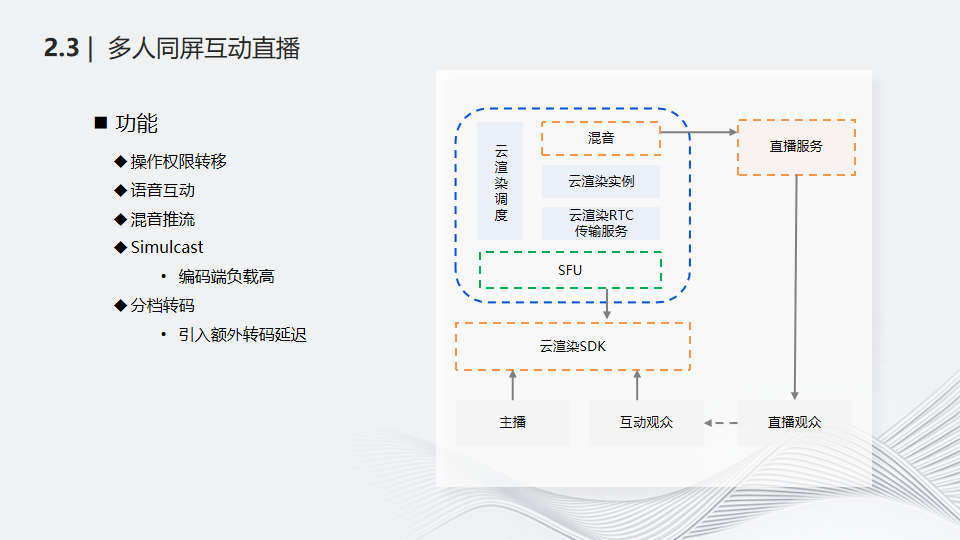

Next, let’s look at the scenario of multi-person interaction on the same screen. At present, live broadcast interaction is mainly based on video connection, and the forms of interaction are limited. Multi-person interaction on the same screen adds interactive gameplay to live video applications, while the anchor can still interact with the audience in real time by voice or video during the broadcast.

First, let’s watch a video to get an intuitive feel for multi-person interactive live broadcast on the same screen. The video shows that anchors and viewers can transfer operating permissions to each other and switch roles.

The right side of the picture above shows the basic process of multi-person interactive live broadcast on the same screen. A viewer enters the live broadcast room and applies to the anchor for an interactive role. Once the anchor approves, the viewer is upgraded to an interactive viewer who can take part in the game. Interactive viewers operate their own game character locally, and the cloud rendering SDK passes their operation instructions to the cloud rendering instance. The game running on the instance receives these instructions and responds accordingly. Throughout this process, the cloud rendering system needs to support permission transfer and role switching, and must accurately map each viewer's operations to the corresponding game character. At the same time, ensuring a high-quality, low-latency experience and raising the limit on the number of interacting users are problems that urgently need to be solved.
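The apply, approve, transfer, and input-mapping steps above can be sketched as a small in-memory model. This is a minimal sketch under assumptions, not the cloud rendering SDK: the `InteractiveRoom` class and all of its method names are hypothetical.

```python
class InteractiveRoom:
    """Tracks which users may control a character in the cloud-rendered game."""

    def __init__(self, anchor_id):
        self.anchor_id = anchor_id
        self.pending = set()      # viewers who applied for an interactive role
        self.controllers = {}     # user id -> game character id

    def apply(self, viewer_id):
        """A viewer asks the anchor for an interactive role."""
        self.pending.add(viewer_id)

    def approve(self, approver_id, viewer_id, character_id):
        """Only the anchor can upgrade a pending viewer to an interactive viewer."""
        if approver_id != self.anchor_id or viewer_id not in self.pending:
            return False
        self.pending.discard(viewer_id)
        self.controllers[viewer_id] = character_id
        return True

    def transfer(self, from_id, to_id):
        """Hand control of a character from one user to another."""
        if from_id not in self.controllers:
            return False
        self.controllers[to_id] = self.controllers.pop(from_id)
        return True

    def route_input(self, user_id, command):
        """Map an operation to the user's character, or drop it if they have no role."""
        character_id = self.controllers.get(user_id)
        if character_id is None:
            return None
        return (character_id, command)
```

Keeping the user-to-character mapping in one place is what lets the instance reject input from ordinary viewers and attribute each operation to the right character after a permission transfer.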

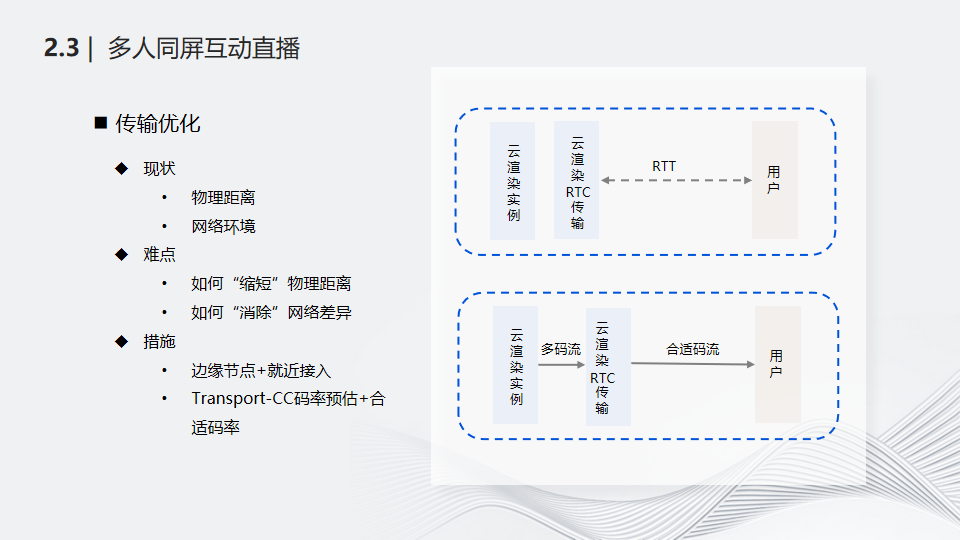

First, how do we ensure a smooth gaming experience in multi-person interactive live broadcast on the same screen? The key is to solve the transmission problem. Two main factors affect transmission: physical distance and network environment.

Physical distance is simply the real-world distance between two places. The longer the distance, the harder it is to guarantee transmission quality. In addition, even when two users are in the same location, transmission quality can differ greatly depending on their network environments. Therefore, "shortening" the physical distance and "eliminating" network differences are the keys to solving the problem.

"Shortening" the physical distance is achieved by adding edge nodes and connecting users to a nearby node. If the transmission delay to a nearby node turns out to be high, this is saved as historical information and used as a reference for the next scheduling decision.

"Eliminating" network differences is achieved by selecting an appropriate stream for transmission. Specifically, when a new user connects, the stream with the highest bitrate is delivered for t1 seconds. After t1 seconds, the system uses an estimation algorithm to evaluate the bitrate b that the user's network can sustain and compares it with the bitrates of the streams the system encodes (for example, three streams with bitrates b1, b2, and b3 from low to high) to decide whether to switch streams. It selects the stream whose bitrate is closest to b and lower than b.

Bitrate switching falls into two cases:

① When switching from a high-bitrate stream to a low-bitrate stream, the new stream is delivered continuously for t2 seconds, and then the system switches back to the high-bitrate stream to check whether the user's network has improved. This is retried up to n times, with the interval between retries gradually increasing.

② When switching from a low-bitrate stream to a high-bitrate stream, the new stream is delivered continuously for t3 seconds. If, during that period, the rate of change of the estimated bitrate exceeds the set threshold T, the system stops holding this stream and recalculates which stream to forward based on the actual bitrate.
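The two mechanisms in this section, nearby access with a latency history and stream selection by estimated bitrate, can be sketched as follows. This is a minimal sketch under stated assumptions, not Tencent Cloud's implementation: `EdgeNode`, `pick_edge_node`, `select_stream`, `probe_intervals`, and `should_stop_hold` are hypothetical names, and the 100 ms limit, the fallback to the lowest stream, the doubling of retry intervals, and the relative-change formula are all choices made for the example.

```python
from dataclasses import dataclass, field
from statistics import mean


@dataclass
class EdgeNode:
    name: str
    distance_km: float  # hypothetical distance between the user and the node
    latency_history_ms: list = field(default_factory=list)  # past measured delays


def pick_edge_node(nodes, max_latency_ms=100.0):
    """Prefer the nearest node, skipping nodes whose history shows high delay."""
    healthy = [
        n for n in nodes
        if not n.latency_history_ms or mean(n.latency_history_ms) <= max_latency_ms
    ]
    candidates = healthy or list(nodes)  # fall back if every node looks bad
    return min(candidates, key=lambda n: n.distance_km)


def select_stream(b, ladder):
    """Pick the encoded bitrate that is closest to b and lower than b."""
    below = [rate for rate in ladder if rate < b]
    # Assumption: when no stream is lower than b, use the lowest stream.
    return max(below) if below else min(ladder)


def probe_intervals(t2, n, growth=2.0):
    """Hold times before each of the n retries of the higher-bitrate stream.

    The source only says the interval increases; doubling is an assumption.
    """
    return [t2 * growth ** i for i in range(n)]


def should_stop_hold(previous_estimate, current_estimate, threshold):
    """True when the relative change of the estimate exceeds the threshold T."""
    change = abs(current_estimate - previous_estimate) / previous_estimate
    return change > threshold
```

For example, with encoded streams of 1, 2.5, and 5 Mbps and an estimate of 3 Mbps, `select_stream(3_000_000, [1_000_000, 2_500_000, 5_000_000])` returns the 2.5 Mbps stream.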

Based on the above, the multi-person interactive live broadcast scenario introduces multiple bitrates and edge nodes, and the overall architecture is upgraded as shown above. When viewers connect, they may not connect directly to the cloud rendering instance but to a data pass-through service instead. In addition, co-streaming (lianmai) requires stream mixing, and the live broadcast service can also provide features such as segmented transcoding as needed.

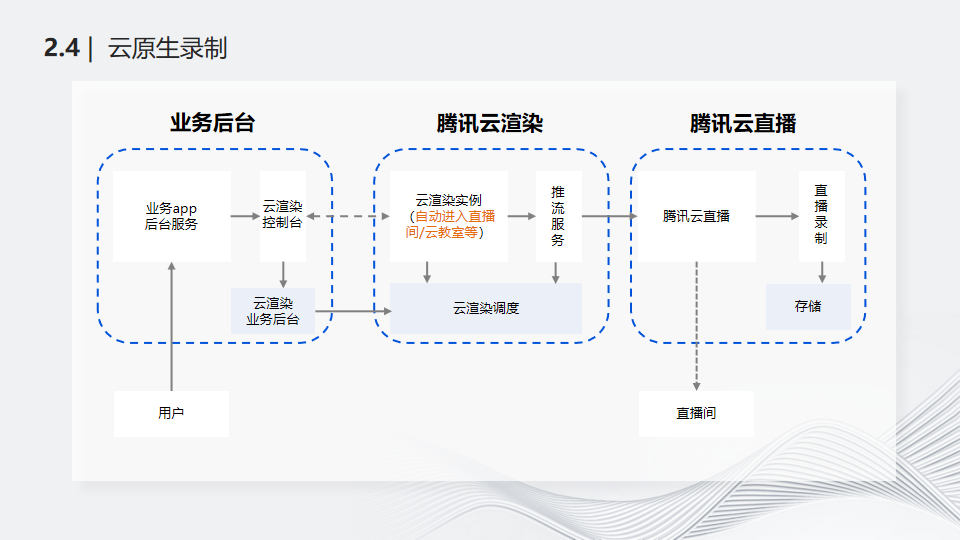

2.4| Cloud native recording

Next, let’s look at the cloud native recording scenario. First, consider the difficulties of conventional video recording and manual mobile phone recording.

Conventional video recording refers to the recording function built into the live broadcast system's backend. It cannot record exactly what the viewer sees: for example, it cannot capture audio and video content rendered locally by the app, such as danmaku comments and gift effects.

Manual mobile phone recording has several problems. First, it cannot accumulate material at scale or automatically. Second, it consumes the phone's bandwidth, which affects the viewing or gaming experience. Finally, it requires asking the user for recording permission, and the success rate is low.

In the cloud native recording solution, the customer calls a cloud rendering instance through the API and has it enter the live broadcast room as a viewer and record all of the room's audio and video. The instance may run an Android app or be a cloud computer. For cloud native recording, the live broadcast platform needs to provide login-free access or a special account for entering the room, and must make sure the instance's screen can be captured, for example by keeping the screen from turning off on Windows. Interestingly, we found that some customers push the recorded video directly to the live broadcast backend as the video source for a live broadcast.

2.5| Self-service resource management

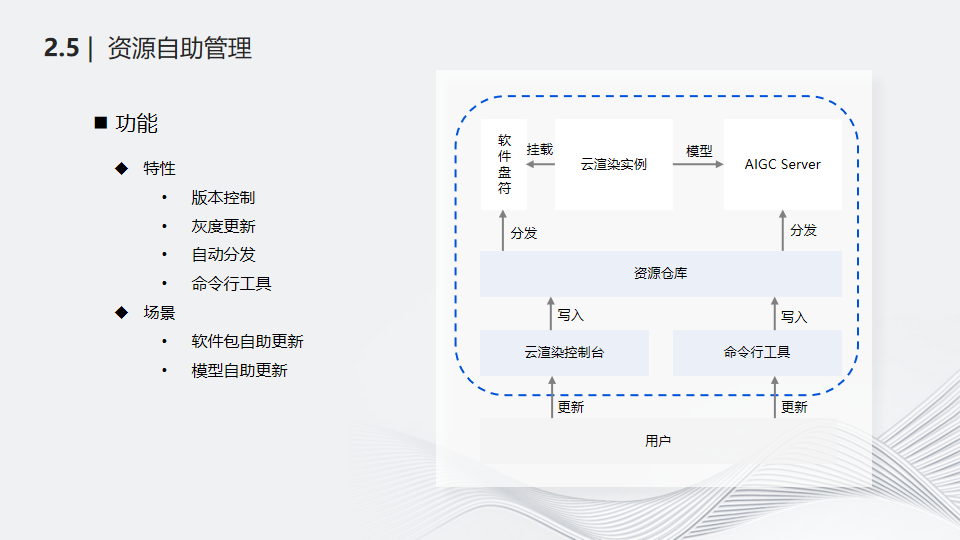

Finally, whether for danmaku games, virtual special effects, or multi-person interactive live broadcast on the same screen, the resources all run on cloud rendering instances. If the cloud rendering team had to manage all of these resources, the workload would be huge. We therefore developed a self-service resource management platform with the following functions: version control, grayscale updates (updating specified instances or a specified proportion of instances), automatic distribution, and command line tools (which can be combined with the customer's pipelines for automated resource management). Its main usage scenarios are self-service updates of software packages and of models.
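A grayscale update that targets either specified instances or a proportion of instances can be sketched as below. This is an illustrative assumption about how such a selection might work, not the platform's actual logic: the function `in_gray_group`, its parameters, and the hash-bucket approach are all hypothetical.

```python
import hashlib


def in_gray_group(instance_id, percent, pinned=frozenset()):
    """Decide whether an instance should receive the new version.

    An instance is updated if it is explicitly listed in `pinned`, or if a
    stable hash of its id falls inside the requested percentage (0 to 100).
    """
    if instance_id in pinned:
        return True
    digest = hashlib.sha256(instance_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100  # stable bucket in 0..99
    return bucket < percent
```

Hashing the instance id keeps the selection stable across runs, so raising the percentage only adds instances to the updated group and never removes ones that were already updated.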

Thank you all!

