5.3 The basics of caches

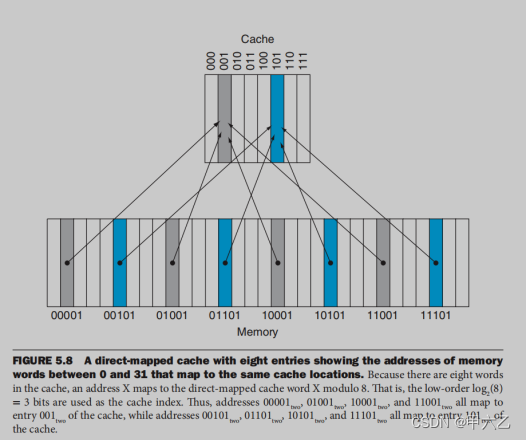

Direct-mapped cache

There are usually three cache mapping methods: direct mapped, set associative, and fully associative. Direct mapping is introduced here.

Direct mapping means that each memory location can be placed in exactly one fixed location in the cache.

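The location in the cache is calculated from the address as follows:

(block address) modulo (number of blocks in the cache)

Because the unit of storage in the cache is the block (that is, the cache line), the block address is used above. When the number of blocks is a power of two, the modulo simply selects the low-order bits of the block address, so those bits determine which cache line holds the block.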

Multiple memory locations can map to the same location in the cache, so the cache must be able to tell which memory location a line currently holds. The field used for this comparison is the tag, which is generally the high-order bits of the address (the bits not used as the index).
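As a minimal sketch of this mapping, the function below computes the cache index as the block address modulo the number of blocks, and the tag as the remaining high-order part. The function name and the parameters `block_bytes` and `num_blocks` are illustrative assumptions, not taken from the text.

```python
def direct_mapped_slot(byte_address, block_bytes, num_blocks):
    """Return (index, tag) for a direct-mapped cache.

    block_bytes and num_blocks are hypothetical parameters chosen for
    illustration; both are assumed to be powers of two.
    """
    block_address = byte_address // block_bytes  # drop the byte offset
    index = block_address % num_blocks           # low bits select the cache line
    tag = block_address // num_blocks            # high bits identify the block
    return index, tag


# Two addresses that are num_blocks * block_bytes apart land on the
# same cache line and can only be told apart by their tags.
a = direct_mapped_slot(0x0040, block_bytes=16, num_blocks=8)
b = direct_mapped_slot(0x00C0, block_bytes=16, num_blocks=8)
print(a, b)
```

Both addresses select the same index, which is why the tag comparison is needed before the cached data can be trusted.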

Valid bit

Each cache line has a valid bit that indicates whether the line holds valid data.

The cache acts as a prediction that the requested data is present, and on modern computers its hit rate is often above 95%.

Each cache line stores:

- Data(block)

- Tag

- Valid bit

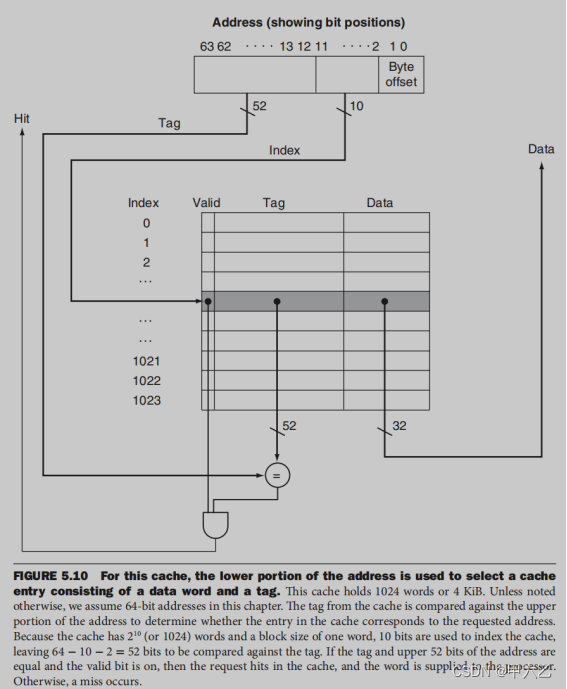

The following shows how an address is mapped in a direct-mapped cache. The address is divided into three parts:

- Tag: compared with the value of the tag field stored in the selected cache entry

- Index: selects the block (cache line)

- Offset: selects the word and byte within the block

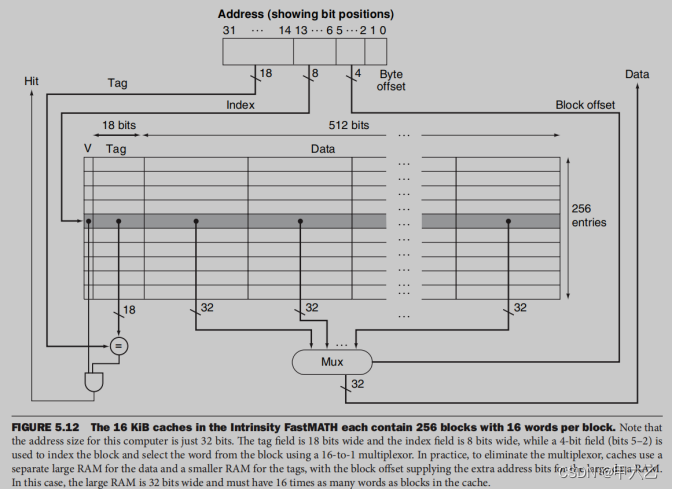

The cache in the above figure has the following parameters:

- 64-bit addresses

- A direct-mapped cache

- The cache size is 2^n blocks, so n bits are used for the index

- The block size is 2^m words (2^(m+2) bytes), so m bits are used for the word within the block, and two bits are used for the byte part of the address

The size of the tag field is

64 - (n + m + 2) bits.
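The field layout described above can be sketched as a bit-slicing function. This is an illustration under the stated parameters (2^n blocks, 2^m words per block, 4-byte words); the function name and the sample address are hypothetical.

```python
def split_address(addr, n, m, addr_bits=64):
    """Split a byte address into (tag, index, word_in_block, byte_in_word).

    n = log2(number of blocks), m = log2(words per block).
    Words are assumed to be 4 bytes, so the byte part is 2 bits wide.
    """
    tag_bits = addr_bits - (n + m + 2)
    byte_in_word = addr & 0b11                        # lowest 2 bits
    word_in_block = (addr >> 2) & ((1 << m) - 1)      # next m bits
    index = (addr >> (m + 2)) & ((1 << n) - 1)        # next n bits
    tag = (addr >> (n + m + 2)) & ((1 << tag_bits) - 1)  # remaining high bits
    return tag, index, word_in_block, byte_in_word


# Example with 2^10 blocks of 2^2 words each (arbitrary sample address).
tag, index, word, byte = split_address(0x12345678, n=10, m=2)
print(hex(tag), hex(index), word, byte)
```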

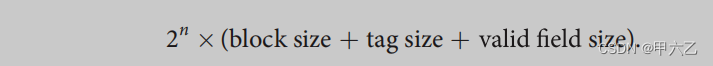

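The total number of bits in a direct-mapped cache is

2^n × (block size + tag size + valid field size)

With 2^m words of 32 bits per block and a 64-bit address, this is 2^n × (2^m × 32 + (64 - n - m - 2) + 1) = 2^n × (2^m × 32 + 63 - n - m).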
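As a worked example, the total is the number of blocks times the per-block storage: data bits, tag bits, and one valid bit. The sketch below assumes 32-bit words and a 64-bit address as in the parameters above; the function name and the sample configuration (16 KiB of data in 4-word blocks) are illustrative.

```python
def total_cache_bits(n, m, addr_bits=64):
    """Total storage bits of a direct-mapped cache with 2^n blocks
    of 2^m 32-bit words each, including tag and valid bits."""
    data_bits = (2 ** m) * 32            # data stored per block
    tag_bits = addr_bits - (n + m + 2)   # tag stored per block
    valid_bits = 1
    return (2 ** n) * (data_bits + tag_bits + valid_bits)


# 16 KiB of data with 4-word blocks: 2^10 blocks, so n = 10 and m = 2.
bits = total_cache_bits(n=10, m=2)
print(bits, "bits =", bits / 8 / 1024, "KiB")
```

In this configuration the tag and valid bits add noticeably to the 16 KiB of data, which is why cache sizes are usually quoted as data capacity only.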

Hit rate and miss rate

Hit rate: the fraction of memory accesses found in a level of the memory hierarchy.

Miss rate: the fraction of memory accesses not found in a level of the memory hierarchy (1 - hit rate).

Miss penalty

Miss penalty: the time required to fetch a block into a level of the memory hierarchy from the lower level, including the time to access the block, transmit it from one level to the other, insert it in the level that experienced the miss, and then pass the block to the requestor.

Hit time

Hit time: the time required to access a level of the memory hierarchy, including the time needed to determine whether the access is a hit or a miss.

Relationship of hit rate, penalty and block size

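Up to a point, a larger cache line (block) size increases the hit rate, because it exploits spatial locality. It also increases the penalty when a miss occurs, because it takes more time to move a larger block from the lower level of the memory hierarchy to the higher level. If blocks become too large relative to the cache size, the hit rate can fall again, because fewer blocks fit in the cache.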
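One common way to weigh these quantities against each other is the average memory access time, hit time + miss rate × miss penalty. The sketch below uses that formula; the two configurations and all their numbers are invented purely for illustration and are not measurements.

```python
def average_access_time(hit_time, miss_rate, miss_penalty):
    """Average memory access time = hit time + miss rate * miss penalty.
    All times are in the same unit (cycles here)."""
    return hit_time + miss_rate * miss_penalty


# Hypothetical numbers: the larger block misses less often but each
# miss costs more, so the average can still come out worse.
small_block = average_access_time(hit_time=1, miss_rate=0.05, miss_penalty=40)
large_block = average_access_time(hit_time=1, miss_rate=0.03, miss_penalty=80)
print(small_block, large_block)
```

With these made-up values the larger block has the better hit rate but the higher average access time, which is the trade-off described above.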

Penalty reduction techniques

Early restart

Resume execution as soon as the requested word of the block is returned, rather than waiting for the entire block.

Requested word first or critical word first

The requested word is transferred from the memory to the cache first. The remainder of the block is then transferred, starting with the address after the requested word and wrapping around to the beginning of the block.
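The wrap-around transfer order can be sketched with modular arithmetic. The function name and the 8-word block are illustrative assumptions.

```python
def critical_word_first_order(requested_word, words_per_block):
    """Order in which the words of a block are transferred when the
    requested word goes first and the rest follow with wrap-around."""
    return [(requested_word + i) % words_per_block
            for i in range(words_per_block)]


# Requesting word 5 of an 8-word block.
order = critical_word_first_order(5, 8)
print(order)
```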

Cache miss

When a cache miss occurs, an in-order processor stalls the pipeline and waits for the miss to be handled, that is, for the corresponding block to be moved from memory into the cache.

An out-of-order processor can continue executing instructions that do not depend on the missing data while the miss is being handled.

An instruction cache miss is handled as follows; a data cache miss is handled in a similar way:

1. Send the original PC value to the memory.

2. Instruct main memory to perform a read and wait for the memory to complete its access.

3. Write the cache entry, putting the data from memory in the data portion of the entry, writing the upper bits of the address (from the ALU) into the tag field, and turning the valid bit on.

4. Restart the instruction execution at the first step, which will refetch the instruction, this time finding it in the cache.
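The steps above can be sketched as a small software model of a direct-mapped cache. This is a simplified illustration, not a description of real hardware: the class name, its fields, and the use of a `bytes` object as main memory are all assumptions, and the restart in step 4 is modeled by simply reading from the freshly filled line.

```python
class DirectMappedCache:
    """Toy read-only direct-mapped cache in front of a bytes-like memory."""

    def __init__(self, num_blocks, block_bytes, memory):
        self.num_blocks = num_blocks
        self.block_bytes = block_bytes
        self.memory = memory                  # stands in for main memory
        self.valid = [False] * num_blocks
        self.tags = [0] * num_blocks
        self.data = [bytes(block_bytes)] * num_blocks
        self.hits = 0
        self.misses = 0

    def read_byte(self, addr):
        block_addr = addr // self.block_bytes
        index = block_addr % self.num_blocks
        tag = block_addr // self.num_blocks
        if self.valid[index] and self.tags[index] == tag:
            self.hits += 1
        else:
            self.misses += 1
            # Steps 1-2: send the address to memory and read the block.
            start = block_addr * self.block_bytes
            block = bytes(self.memory[start:start + self.block_bytes])
            # Step 3: fill the data, write the tag, turn the valid bit on.
            self.data[index] = block
            self.tags[index] = tag
            self.valid[index] = True
        # Step 4 (modeled): the access now finds its data in the cache.
        return self.data[index][addr % self.block_bytes]


memory = bytes(range(256))
cache = DirectMappedCache(num_blocks=4, block_bytes=16, memory=memory)
values = [cache.read_byte(a) for a in (0x21, 0x22, 0x61, 0x21)]
print(values, cache.hits, cache.misses)
```

Addresses 0x21 and 0x61 map to the same line with different tags, so the last access misses again even though 0x21 was cached earlier.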

Write through and write back

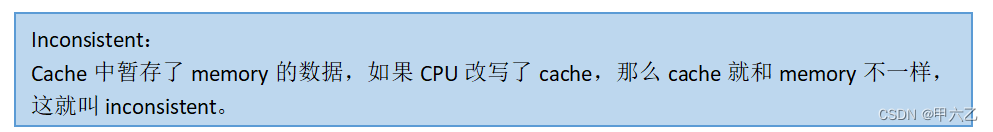

Write through and write back are the two commonly used cache write policies.

Write through

Write through means that every time the CPU modifies a word in the cache, it also writes that word to memory, so that the cache and memory stay consistent.

Only the modified word is written to memory, not the entire cache line.

With the write-through policy, every store generates a memory write access, which is relatively slow and reduces performance.

Write buffer

A write buffer removes the need to wait for the memory access to finish on every store under the write-through policy. The CPU writes the data into both the cache and the write buffer and then continues executing the program. When an entry in the write buffer has been written to memory, that entry is released. If the write buffer is full, the CPU must stall until an entry becomes free, and only then can it place the data in the write buffer and continue.

There are two situations in which the write buffer fills up:

- If the CPU generates stores faster than the write buffer can drain them to memory, the write buffer will always be full, and no amount of buffering helps.

- During a long burst of writes, the write buffer can fill up even if the average store rate is sustainable. In this case, the buffer can be made deeper so that it holds more than a single cache line entry.
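Both situations can be illustrated with a rough timing model. The sketch below is an assumption-laden simplification: the function name, the fixed store interval, and the fixed drain time per entry are all hypothetical, and real memory systems behave less regularly.

```python
from collections import deque


def write_buffer_stalls(num_stores, store_interval, drain_cycles, depth):
    """Return CPU stall cycles caused by a full write buffer.

    The CPU issues one store every store_interval cycles; memory
    retires one buffered store every drain_cycles cycles.
    """
    pending = deque()   # completion times of stores still in the buffer
    time = 0
    stalls = 0
    memory_free_at = 0  # when memory can start the next write
    for _ in range(num_stores):
        time += store_interval
        # Release entries whose memory write has already completed.
        while pending and pending[0] <= time:
            pending.popleft()
        if len(pending) == depth:
            # Buffer full: stall until the oldest entry has been written.
            stalls += pending[0] - time
            time = pending.popleft()
        start = max(time, memory_free_at)
        memory_free_at = start + drain_cycles
        pending.append(memory_free_at)
    return stalls


# Stores slower than the drain rate: never stalls.
print(write_buffer_stalls(100, store_interval=10, drain_cycles=4, depth=1))
# Sustained stores faster than the drain rate: stalls even with depth 4.
print(write_buffer_stalls(100, store_interval=2, drain_cycles=4, depth=4))
# Short burst of 8 stores: a deeper buffer absorbs it.
print(write_buffer_stalls(8, store_interval=2, drain_cycles=4, depth=8))
```

In this model a deeper buffer only helps with bursts; when stores are sustained faster than memory can accept them, the stalls return regardless of depth.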

Write back

With write back, a store updates only the block in the cache. The modified (dirty) cache line is written back to memory only when it is about to be replaced by another block.

Write back is harder to implement than write through, especially in multi-core processors, where all cores must see a consistent view of memory.

Write allocate and no write allocate

Write allocate:

When a write misses in the cache, the block is first read from memory into the cache, and the write is then performed on the cached block. Under the write-through policy, the written data must also be written to memory.

No write allocate:

When a write misses in the cache, the data is written directly to memory and the block is not brought into the cache.

Replacing a cache line

In a write-through cache, the line can simply be replaced, because the cache and the memory block hold the same data.

In a write-back cache, it is necessary to check whether the cache line is dirty. If it is, the line must be written back to memory before it is replaced.

A write-back cache can also use a write buffer: the line being replaced is moved into the write buffer (which holds one cache line), and the new data is then read from memory and written into the cache while the buffered line is written back.
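The difference between the two policies on replacement can be sketched by counting the writes that reach memory. This toy model assumes a direct-mapped, write-allocate cache; the function name and the store trace are invented for illustration. Note that a write-through memory write transfers one word, while a write-back memory write transfers a whole line.

```python
def count_memory_writes(store_addresses, num_blocks, block_bytes, policy):
    """Count writes reaching memory for a direct-mapped, write-allocate
    cache. policy is "write_through" or "write_back"."""
    valid = [False] * num_blocks
    dirty = [False] * num_blocks
    tags = [0] * num_blocks
    memory_writes = 0
    for addr in store_addresses:
        block_addr = addr // block_bytes
        index = block_addr % num_blocks
        tag = block_addr // num_blocks
        if not (valid[index] and tags[index] == tag):
            # Write miss: the old line is replaced. A dirty line must
            # be written back to memory first.
            if valid[index] and dirty[index]:
                memory_writes += 1
            valid[index], tags[index], dirty[index] = True, tag, False
        if policy == "write_through":
            memory_writes += 1       # every store also goes to memory
        else:
            dirty[index] = True      # defer the write until replacement
    return memory_writes


# Four stores to one line, then two conflicting stores that evict it.
stores = [0, 4, 8, 12, 64, 0]
wt = count_memory_writes(stores, 4, 16, "write_through")
wb = count_memory_writes(stores, 4, 16, "write_back")
print(wt, wb)
```

The model does not flush the line that is still dirty at the end of the trace, so the write-back count covers evictions only.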

Cache Example

For the following cache, the cache line size is 16 words, that is, 64 bytes. The cache size is 16 KB.

Offset: a 64-byte line needs 6 offset bits. The lower 2 bits select the byte within a word and can be ignored for word-aligned accesses; bits 5 to 2 select which of the 16 words is accessed.

Index: selects the cache line. 16 KB / 64 bytes = 2^8 lines, so the index is 8 bits.

Tag: the highest 18 bits are used as the tag for comparison (with a 32-bit address, 32 - 8 - 6 = 18).
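The field widths in this example can be checked with a short calculation. The sketch assumes a 32-bit address, which is implied by the 18-bit tag but not stated explicitly; the function names and the sample address are hypothetical.

```python
def field_widths(cache_bytes, line_bytes, addr_bits):
    """Return (tag_bits, index_bits, offset_bits) for a direct-mapped
    cache. Sizes are assumed to be powers of two."""
    num_lines = cache_bytes // line_bytes
    offset_bits = line_bytes.bit_length() - 1   # log2(line_bytes)
    index_bits = num_lines.bit_length() - 1     # log2(num_lines)
    tag_bits = addr_bits - index_bits - offset_bits
    return tag_bits, index_bits, offset_bits


def decode(addr, index_bits, offset_bits):
    """Split an address into (tag, index, word_in_line)."""
    word_in_line = (addr >> 2) & ((1 << (offset_bits - 2)) - 1)
    index = (addr >> offset_bits) & ((1 << index_bits) - 1)
    tag = addr >> (offset_bits + index_bits)
    return tag, index, word_in_line


# 16 KB cache, 64-byte lines, assumed 32-bit address.
tag_bits, index_bits, offset_bits = field_widths(16 * 1024, 64, 32)
print(tag_bits, index_bits, offset_bits)
print(decode(0x12345678, index_bits, offset_bits))
```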