Chit-chat

I'll skip this part here. If you're interested, read it on my self-hosted blog: www.jojo-m.cn

Code

    import os
    import time

    import requests
    from lxml import etree

    header = {
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/79.0.3945.130 Safari/537.36'
    }

    # Root folder for the downloaded albums
    SAVE_ROOT = './spider/vmgirls/Down/vmgirls/'


    def getPage(url):
        # Fetch a page and return its HTML
        response = requests.get(url, headers=header, timeout=10)
        return response.text


    def Download(url):
        # Example post URLs:
        # url = 'https://www.xxxx.com/9456.html'
        # url = 'https://www.xxxx.com/13487.html'
        html = etree.HTML(getPage(url))

        # Not used here:
        # names = html.xpath('//*[@id="app"]/div/div/div[1]/dl/dd/a/@title')
        # times = html.xpath('//p[@class="releasetime"]/text()')

        # Create a folder named after the post title
        dir_name = html.xpath('//h1[@class="post-title h3"]/text()')
        if not dir_name:
            return  # no post title found on this page; skip it
        save_dir = os.path.join(SAVE_ROOT, dir_name[0].strip())
        os.makedirs(save_dir, exist_ok=True)

        # Download the images: in some posts each <img> is wrapped in an <a>, in others it is not
        imgs = html.xpath('//div[@class="post-content"]/div/p/a/img/@data-src')
        if not imgs:
            imgs = html.xpath('//div[@class="post-content"]/div/p/img/@data-src')

        for img in imgs:
            time.sleep(1)  # pause between downloads to go easy on the server
            file_name = img.split('/')[-1]
            print(file_name)
            response = requests.get(img, headers=header, timeout=10)
            with open(os.path.join(save_dir, file_name), 'wb') as f:
                f.write(response.content)

    # num = 9017  (example post number)

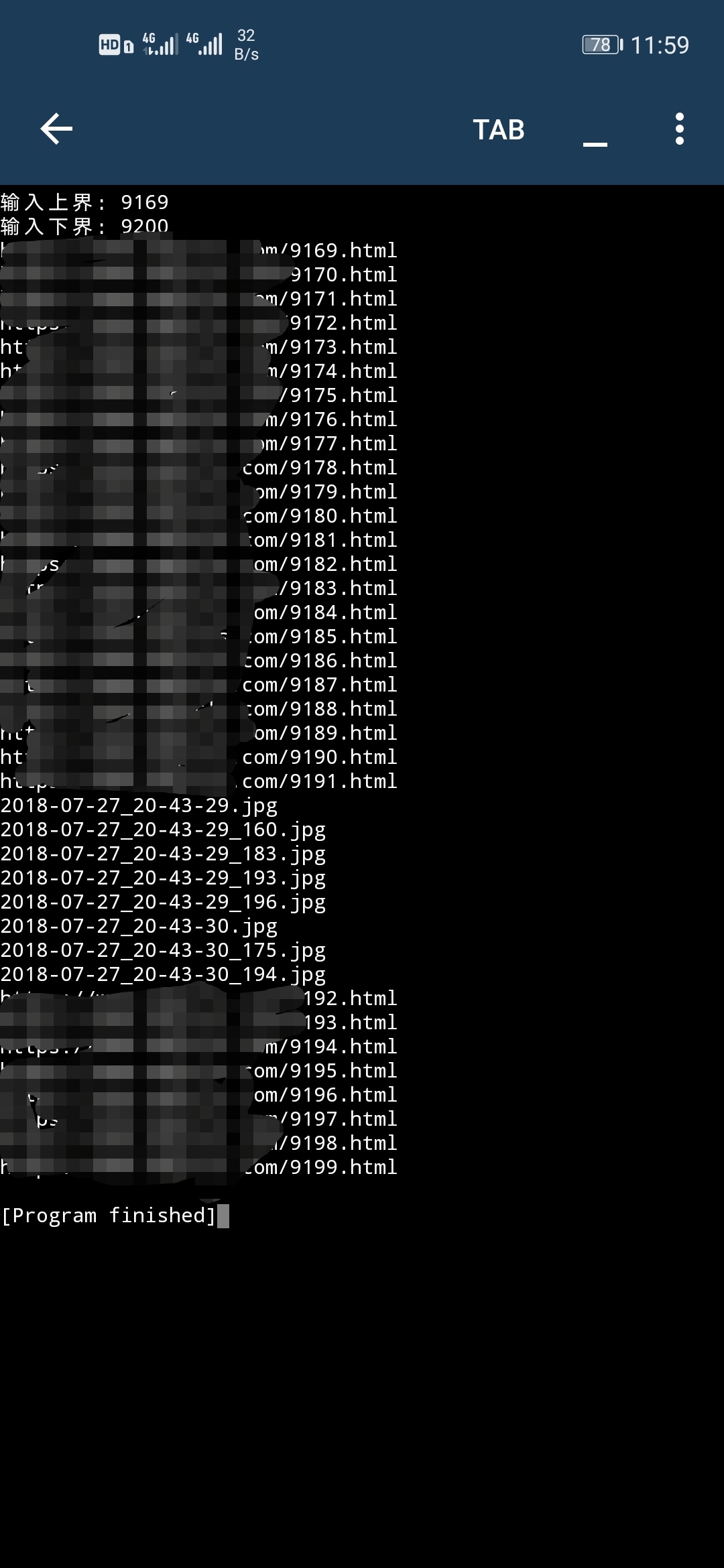

    start = int(input("Start post number (lower bound): "))
    end = int(input("End post number (upper bound, exclusive): "))

    for num in range(start, end):
        time.sleep(1)  # pause between posts
        url = 'https://www.xxxx.com/' + str(num) + '.html'
        print(url)
        # Only download posts that exist (HTTP 200)
        if requests.get(url, headers=header, timeout=10).status_code == 200:
            Download(url)
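The two XPath queries in Download implement a fallback. They first look for images wrapped in an `<a>` and, if there are none, fall back to bare `<img>` tags. Since the site's markup isn't shown here, one way to sanity-check that logic offline is to run it on a hand-written snippet.

The sketch below is a hypothetical stdlib-only stand-in. It swaps lxml/XPath for `html.parser` so it runs without third-party packages. `PostImageParser` and `extract_image_urls` are illustrative names. It is also looser than the XPath: it accepts any `<img>` under `div.post-content`, not only those on the exact `div/p/...` path.

```python
from html.parser import HTMLParser


class PostImageParser(HTMLParser):
    """Collect data-src values of <img> tags inside <div class="post-content">.

    Hypothetical helper: assumes markup similar to what the XPath queries target.
    """

    def __init__(self):
        super().__init__()
        self.depth = 0        # > 0 while inside div.post-content (counts nested divs)
        self.in_link = False  # True while inside an <a> tag
        self.linked = []      # data-src of <img> wrapped in <a>
        self.bare = []        # data-src of <img> not wrapped in <a>

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == 'div':
            if self.depth:
                self.depth += 1
            elif attrs.get('class') == 'post-content':  # exact match, like @class="..."
                self.depth = 1
        elif self.depth and tag == 'a':
            self.in_link = True
        elif self.depth and tag == 'img' and attrs.get('data-src'):
            (self.linked if self.in_link else self.bare).append(attrs['data-src'])

    def handle_endtag(self, tag):
        if tag == 'div' and self.depth:
            self.depth -= 1
        elif tag == 'a':
            self.in_link = False


def extract_image_urls(page_html):
    parser = PostImageParser()
    parser.feed(page_html)
    # Same fallback as the script: prefer linked images, otherwise use bare ones
    return parser.linked or parser.bare
```

Assuming a page follows the structure above, feeding it the HTML from getPage(url) should give the same list that `imgs` holds in Download. The file name is still taken with `url.split('/')[-1]`.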

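This site appears to be run by an individual and seems to get quite a lot of visitors. So I put time.sleep(1) in two places, before each post request and before each image download, to avoid putting too much load on its server and knocking it offline.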
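A fixed `time.sleep(1)` makes every request wait a full second, even when parsing or downloading has already taken longer than that. Here is a minimal sketch of an alternative, assuming a one-second minimum gap between requests is the goal. It uses a hypothetical `Throttle` helper (not part of the original script) that sleeps only for whatever part of the interval has not yet elapsed.

```python
import time


class Throttle:
    """Enforce a minimum interval between consecutive calls to wait().

    Hypothetical helper: sleeps only for the remaining part of the interval.
    """

    def __init__(self, min_interval=1.0):
        self.min_interval = min_interval
        self._last = None  # monotonic timestamp of the previous request

    def wait(self):
        now = time.monotonic()
        if self._last is not None:
            remaining = self.min_interval - (now - self._last)
            if remaining > 0:
                time.sleep(remaining)
        self._last = time.monotonic()
```

To use it, create one instance, e.g. `throttle = Throttle(1.0)`, and call `throttle.wait()` right before each `requests.get(...)` in place of `time.sleep(1)`. Sharing that single instance between the post checks and the image downloads keeps the whole script at no more than one request per second.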

Also, if you install the Pydroid 3 app on your phone, the script runs there too (so you can look at the pretty girls right on your phone, lol).