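Continuing from the previous section, this section explains how to deploy a redis replication read/write-splitting architecture in a project.

4.5. Deploying a redis replication read/write-splitting architecture in a project

The previous sections laid the groundwork for redis replication, covering the principles of master-slave replication and read/write splitting. How do we actually use them, and how do we set up a master-slave, read/write-splitting environment?

With one master and one slave, write to the master and read from the slave. If the value can be read back, the master-slave architecture has been set up successfully.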

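4.5.1. Enabling replication and deploying the slave node

To configure master-slave replication, note that redis runs as a master by default, so only the slave needs to be configured. There are two ways to do it:

1. Edit the configuration file (permanent, but the service must be restarted for the change to take effect)

In the redis.conf configuration file (per the earlier redis installation section, the configuration file is redis_6379), set the master's ip:port to enable master-slave replication.

slaveof 192.168.92.120 6379

2. Command-line configuration (takes effect immediately without restarting the service, but is lost once the service restarts), using the slaveof command

Set the slave from the command line: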

192.168.92.120:6379> slaveof 192.168.4.51 6351

4.5.2. Enforcing read/write splitting

Read/write splitting is built on top of the master-slave replication architecture.

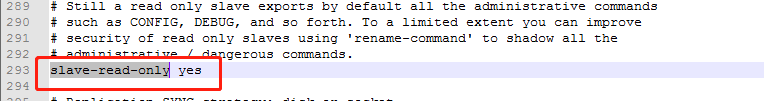

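A redis slave node is read-only by default, controlled by the slave-read-only setting.

A read-only redis slave node rejects all write operations, which enforces a read/write-splitting architecture.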
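
The routing idea behind read/write splitting can be sketched in a few lines of Python. This is only an illustration under stated assumptions: FakeNode is a hypothetical in-memory stand-in rather than a real redis client, the WRITE_COMMANDS set is deliberately incomplete, and replication is simulated by copying the master's data to the slaves.

```python
from itertools import cycle

# Commands treated as writes in this sketch; a real client would need a fuller list.
WRITE_COMMANDS = {"SET", "DEL", "INCR", "LPUSH", "RPUSH", "SADD", "MSET"}


class FakeNode:
    """Hypothetical in-memory stand-in for a redis connection (not a real client)."""

    def __init__(self, name, read_only=False):
        self.name = name
        self.read_only = read_only
        self.data = {}

    def execute(self, command, *args):
        command = command.upper()
        if command in WRITE_COMMANDS and self.read_only:
            # Mirrors a slave running with slave-read-only enabled.
            raise PermissionError(f"{self.name}: write rejected on read-only slave")
        if command == "SET":
            key, value = args
            self.data[key] = value
            return "OK"
        if command == "GET":
            return self.data.get(args[0])
        raise ValueError(f"unsupported command in this sketch: {command}")


class ReadWriteRouter:
    """Sends writes to the master and spreads reads over the slaves round-robin."""

    def __init__(self, master, slaves):
        self.master = master
        self.slaves = list(slaves)
        self._next_slave = cycle(self.slaves)

    def execute(self, command, *args):
        if command.upper() in WRITE_COMMANDS:
            result = self.master.execute(command, *args)
            # Stand-in for replication: copy the master's data to every slave.
            for slave in self.slaves:
                slave.data = dict(self.master.data)
            return result
        return next(self._next_slave).execute(command, *args)


master = FakeNode("cache01")
slave = FakeNode("cache02", read_only=True)
router = ReadWriteRouter(master, [slave])
router.execute("SET", "k1", "value1")
print(router.execute("GET", "k1"))
```

The application only talks to the router, so adding another slave later means passing one more node in the slaves list.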

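4.5.3. Cluster security authentication

Enable authentication on the master with requirepass:

requirepass futurecloud

In the slave's configuration file, set the password used to connect to the master with masterauth. It must match the requirepass value on the master:

masterauth futurecloud

The slaveof configuration in redis.conf looks like this: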

slaveof 192.168.92.120 6379

# If the master is password protected (using the "requirepass" configuration

# directive below) it is possible to tell the slave to authenticate before

# starting the replication synchronization process, otherwise the master will

# refuse the slave request.

#

masterauth futurecloud

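4.5.4. Testing the read/write-splitting architecture

First start the master node, the redis instance on cache01.

Then start the slave node, the redis instance on cache02.

When I debugged this just now, the redis slave node kept reporting that it could not connect to port 6379 on the master.

When building a cluster for production, do not forget to change one setting: bind.

bind 127.0.0.1 -> local development and debugging mode; port 6379 is reachable only from 127.0.0.1 on the local machine

In the redis.conf of every node, change bind:

bind 127.0.0.1 -> bind the node's own IP address

Open port 6379 on every node: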

iptables -A INPUT -p tcp --dport 6379 -j ACCEPT

redis-cli -h ipaddr

info replication
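
When checking the slave, the fields of interest in the info replication output are role and master_link_status. The following Python sketch shows one way to parse that output and test whether the link to the master is up. The SAMPLE text is an illustrative example, not output captured from the servers in this article, and the helper names parse_info and replication_ok are made up for this sketch.

```python
def parse_info(text):
    """Parse the 'key:value' lines of INFO output into a dict, skipping '# Section' headers."""
    fields = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        key, _, value = line.partition(":")
        fields[key] = value
    return fields


def replication_ok(fields):
    """In this sketch a slave counts as healthy when its link to the master is up."""
    return fields.get("role") == "slave" and fields.get("master_link_status") == "up"


# Illustrative sample, not captured from the servers in this article.
SAMPLE = """# Replication
role:slave
master_host:192.168.92.120
master_port:6379
master_link_status:up
"""

info = parse_info(SAMPLE)
print(info["master_host"], replication_ok(info))
```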

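Write on the master and read on the slave. As shown below, with cache02 configured as the redis slave, start redis on cache02. The redis instance on cache01 acts as the master and automatically replicates its data to cache02: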

[root@cache02 redis-4.0.1]# redis-cli -a futurecloud shutdown

[root@cache02 redis-4.0.1]# service redis start

Starting Redis server...

[root@cache02 redis-4.0.1]# 7720:C 24 Apr 23:02:11.953 # oO0OoO0OoO0Oo Redis is starting oO0OoO0OoO0Oo

7720:C 24 Apr 23:02:11.953 # Redis version=4.0.1, bits=64, commit=00000000, modified=0, pid=7720, just started

7720:C 24 Apr 23:02:11.953 # Configuration loaded

[root@cache02 redis-4.0.1]#

[root@cache02 redis-4.0.1]# ps -ef|grep redis

root 7721 1 0 23:02 ? 00:00:00 /usr/local/redis-4.0.1/bin/redis-server 0.0.0.0:6379

root 7728 2576 0 23:02 pts/0 00:00:00 grep --color=auto redis

[root@cache02 redis-4.0.1]#

[root@cache02 redis-4.0.1]#

[root@cache02 redis-4.0.1]# redis-cli -a futurecloud

127.0.0.1:6379> get k1

"value1"

127.0.0.1:6379> get k2

"value2"

127.0.0.1:6379> get k3

"value3"

127.0.0.1:6379> get k4

"v4"

127.0.0.1:6379>

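4.5.5. QPS load testing and horizontal scaling of the redis replication architecture

Load test the redis setup built above to measure the performance and QPS of the redis replication deployment.

We use redis-benchmark, the load-testing tool that ships with redis.

1. Load test the redis read/write-splitting architecture: single-instance write QPS plus single-instance read QPS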

redis-3.2.8/src

./redis-benchmark -h 192.168.92.120

-c <clients> Number of parallel connections (default 50)

-n <requests> Total number of requests (default 100000)

-d <size> Data size of SET/GET value in bytes (default 2)

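Choose the parameters to match the peak traffic of your own business. At peak, the instantaneous maximum number of users commonly reaches 100,000 or more.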

-c 100000,-n 10000000,-d 50

====== PING_INLINE ======

100000 requests completed in 1.28 seconds

50 parallel clients

3 bytes payload

keep alive: 1

99.78% <= 1 milliseconds

99.93% <= 2 milliseconds

99.97% <= 3 milliseconds

100.00% <= 3 milliseconds

78308.54 requests per second

====== PING_BULK ======

100000 requests completed in 1.30 seconds

50 parallel clients

3 bytes payload

keep alive: 1

99.87% <= 1 milliseconds

100.00% <= 1 milliseconds

76804.91 requests per second

====== SET ======

100000 requests completed in 2.50 seconds

50 parallel clients

3 bytes payload

keep alive: 1

5.95% <= 1 milliseconds

99.63% <= 2 milliseconds

99.93% <= 3 milliseconds

99.99% <= 4 milliseconds

100.00% <= 4 milliseconds

40032.03 requests per second

====== GET ======

100000 requests completed in 1.30 seconds

50 parallel clients

3 bytes payload

keep alive: 1

99.73% <= 1 milliseconds

100.00% <= 2 milliseconds

100.00% <= 2 milliseconds

76628.35 requests per second

====== INCR ======

100000 requests completed in 1.90 seconds

50 parallel clients

3 bytes payload

keep alive: 1

80.92% <= 1 milliseconds

99.81% <= 2 milliseconds

99.95% <= 3 milliseconds

99.96% <= 4 milliseconds

99.97% <= 5 milliseconds

100.00% <= 6 milliseconds

52548.61 requests per second

====== LPUSH ======

100000 requests completed in 2.58 seconds

50 parallel clients

3 bytes payload

keep alive: 1

3.76% <= 1 milliseconds

99.61% <= 2 milliseconds

99.93% <= 3 milliseconds

100.00% <= 3 milliseconds

38684.72 requests per second

====== RPUSH ======

100000 requests completed in 2.47 seconds

50 parallel clients

3 bytes payload

keep alive: 1

6.87% <= 1 milliseconds

99.69% <= 2 milliseconds

99.87% <= 3 milliseconds

99.99% <= 4 milliseconds

100.00% <= 4 milliseconds

40469.45 requests per second

====== LPOP ======

100000 requests completed in 2.26 seconds

50 parallel clients

3 bytes payload

keep alive: 1

28.39% <= 1 milliseconds

99.83% <= 2 milliseconds

100.00% <= 2 milliseconds

44306.60 requests per second

====== RPOP ======

100000 requests completed in 2.18 seconds

50 parallel clients

3 bytes payload

keep alive: 1

36.08% <= 1 milliseconds

99.75% <= 2 milliseconds

100.00% <= 2 milliseconds

45871.56 requests per second

====== SADD ======

100000 requests completed in 1.23 seconds

50 parallel clients

3 bytes payload

keep alive: 1

99.94% <= 1 milliseconds

100.00% <= 2 milliseconds

100.00% <= 2 milliseconds

81168.83 requests per second

====== SPOP ======

100000 requests completed in 1.28 seconds

50 parallel clients

3 bytes payload

keep alive: 1

99.80% <= 1 milliseconds

99.96% <= 2 milliseconds

99.96% <= 3 milliseconds

99.97% <= 5 milliseconds

100.00% <= 5 milliseconds

78369.91 requests per second

====== LPUSH (needed to benchmark LRANGE) ======

100000 requests completed in 2.47 seconds

50 parallel clients

3 bytes payload

keep alive: 1

15.29% <= 1 milliseconds

99.64% <= 2 milliseconds

99.94% <= 3 milliseconds

100.00% <= 3 milliseconds

40420.37 requests per second

====== LRANGE_100 (first 100 elements) ======

100000 requests completed in 3.69 seconds

50 parallel clients

3 bytes payload

keep alive: 1

30.86% <= 1 milliseconds

96.99% <= 2 milliseconds

99.94% <= 3 milliseconds

99.99% <= 4 milliseconds

100.00% <= 4 milliseconds

27085.59 requests per second

====== LRANGE_300 (first 300 elements) ======

100000 requests completed in 10.22 seconds

50 parallel clients

3 bytes payload

keep alive: 1

0.03% <= 1 milliseconds

5.90% <= 2 milliseconds

90.68% <= 3 milliseconds

95.46% <= 4 milliseconds

97.67% <= 5 milliseconds

99.12% <= 6 milliseconds

99.98% <= 7 milliseconds

100.00% <= 7 milliseconds

9784.74 requests per second

====== LRANGE_500 (first 450 elements) ======

100000 requests completed in 14.71 seconds

50 parallel clients

3 bytes payload

keep alive: 1

0.00% <= 1 milliseconds

0.07% <= 2 milliseconds

1.59% <= 3 milliseconds

89.26% <= 4 milliseconds

97.90% <= 5 milliseconds

99.24% <= 6 milliseconds

99.73% <= 7 milliseconds

99.89% <= 8 milliseconds

99.96% <= 9 milliseconds

99.99% <= 10 milliseconds

100.00% <= 10 milliseconds

6799.48 requests per second

====== LRANGE_600 (first 600 elements) ======

100000 requests completed in 18.56 seconds

50 parallel clients

3 bytes payload

keep alive: 1

0.00% <= 2 milliseconds

0.23% <= 3 milliseconds

1.75% <= 4 milliseconds

91.17% <= 5 milliseconds

98.16% <= 6 milliseconds

99.04% <= 7 milliseconds

99.83% <= 8 milliseconds

99.95% <= 9 milliseconds

99.98% <= 10 milliseconds

100.00% <= 10 milliseconds

5387.35 requests per second

====== MSET (10 keys) ======

100000 requests completed in 4.02 seconds

50 parallel clients

3 bytes payload

keep alive: 1

0.01% <= 1 milliseconds

53.22% <= 2 milliseconds

99.12% <= 3 milliseconds

99.55% <= 4 milliseconds

99.70% <= 5 milliseconds

99.90% <= 6 milliseconds

99.95% <= 7 milliseconds

100.00% <= 8 milliseconds

24869.44 requests per second

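On a dedicated cluster built from machines with 4 cores and 4 GB of memory, a single redis node handles roughly 50,000 read QPS. With two redis slave nodes and all read requests directed to those two machines, the cluster as a whole sustains 100,000+ read QPS.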
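
The capacity estimate above is simple arithmetic on the benchmark figures. As a rough Python sketch, the per-test "requests per second" lines can be pulled out of redis-benchmark output and then multiplied by the number of slaves. The helper names are made up for this sketch, the SAMPLE text is an abridged excerpt of the output shown earlier, and the linear-scaling assumption (reads spread evenly, no shared bottleneck) is an approximation rather than a guarantee.

```python
import re


def parse_benchmark(text):
    """Map each test name to its reported 'requests per second' figure."""
    results = {}
    current = None
    for line in text.splitlines():
        line = line.strip()
        header = re.fullmatch(r"=+ (.+?) =+", line)
        if header:
            current = header.group(1)
            continue
        rate = re.fullmatch(r"([\d.]+) requests per second", line)
        if rate and current is not None:
            results[current] = float(rate.group(1))
    return results


def cluster_read_qps(per_node_qps, slave_count):
    """Assumes reads spread evenly and throughput scales linearly with slave count."""
    return per_node_qps * slave_count


# Abridged excerpt of the benchmark output shown earlier.
SAMPLE = """====== SET ======
100000 requests completed in 2.50 seconds
40032.03 requests per second
====== GET ======
100000 requests completed in 1.30 seconds
76628.35 requests per second
"""

rates = parse_benchmark(SAMPLE)
print(rates["GET"])
print(cluster_read_qps(50000, 2))
```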

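4.6. How redis replication achieves high availability

What is 99.99% high availability?

The system architecture must guarantee 99.99% availability.

Formula for availability: system uptime / (system uptime + system downtime)

For example, if over the 365 days of a year the system can serve requests 99.99% of the time, it is considered highly available.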
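
To make the 99.99% figure concrete, availability is uptime divided by total time (uptime plus downtime), so a 99.99% target over a 365-day year leaves about 52.56 minutes of downtime. A small Python sketch of that arithmetic, with function names chosen only for this illustration:

```python
def availability(uptime_seconds, downtime_seconds):
    """Availability = uptime / (uptime + downtime)."""
    total = uptime_seconds + downtime_seconds
    return uptime_seconds / total


def allowed_downtime_minutes(target, days=365):
    """Downtime budget in minutes for a given availability target over `days` days."""
    return (1 - target) * days * 24 * 60


print(round(allowed_downtime_minutes(0.9999), 2))  # 52.56
```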

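In a redis replication architecture, once the master node goes down, writes can no longer be served. With no new data, nothing can be synchronized to the slave nodes, so reads can no longer be served properly either. A large volume of requests then falls through to the database, causing a cache avalanche, and in severe cases the whole system is paralyzed.

Using a sentinel cluster to make redis replication highly available is covered in the next article.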