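If this is your kind of hobby, you probably browse pictures of girls from time to time. Small sites have been crashing and disappearing a lot lately, so why not find a way to keep a local copy and save the photos for good?

First, pick a small site you want to archive, and then we can get started!

The complete code is at the end of the post!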

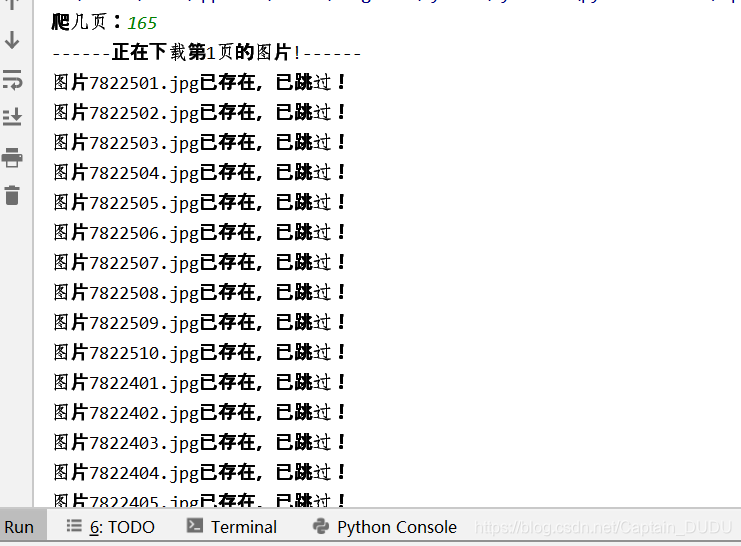

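This update adds a history module, so you can pause the download at any time. It also uses the os library to generate paths automatically: once a folder holds 490 images, a new folder is created, which makes it easy to import everything into Baidu Cloud in one go. Switching folders is fully automatic.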

The history module

    def history(link):
        # Skip images already listed in history.txt; otherwise record and download.
        global picnamelist
        global name
        # File name = title name + last path segment of the image URL.
        pic_name = name + link[link.rfind('/') + 1:]
        path_out = os.getcwd()
        h_path = filenames(path_out)  # current NewPicsN folder
        path_name = h_path + '/' + pic_name
        # 'a+' creates the file if it is missing; seek(0) lets us read from the start.
        with open('history.txt', 'a+', encoding='utf8') as f:
            f.seek(0, 0)
            picnamelist = f.readlines()
            if pic_name + '\n' not in picnamelist:
                f.write(pic_name + '\n')
                download_img(link, path_name)
            else:
                print('Image %s already exists, skipped!' % pic_name)

The automatic folder creation module

    def filenames(path_root):
        # Return the folder for the current image, creating it if needed.
        global picCount
        root_path = path_root
        os.chdir(root_path)
        # A new folder every 490 images: NewPics0, NewPics1, ...
        root_wwj = "NewPics" + str(picCount // 490)
        if not os.path.exists(root_path + "/" + root_wwj):
            os.mkdir(root_wwj)
        return root_wwj
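
The folder rule above (integer-divide the running image count by 490) can also be sketched with os.makedirs, which avoids the separate existence check. This is only a minimal sketch: `folder_for` and `IMAGES_PER_FOLDER` are hypothetical names that do not appear in the original script.

```python
import os
import tempfile

IMAGES_PER_FOLDER = 490  # folder size used in the post


def folder_for(count, root, prefix="NewPics"):
    """Return (and create if needed) the folder for the image with this running count."""
    folder = os.path.join(root, prefix + str(count // IMAGES_PER_FOLDER))
    # exist_ok=True makes the call safe when the folder is already there.
    os.makedirs(folder, exist_ok=True)
    return folder
```

Counts 0 to 489 land in NewPics0, and count 490 opens NewPics1. Because the count starts again from 0 on every run, a resumed download begins filling NewPics0 again.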

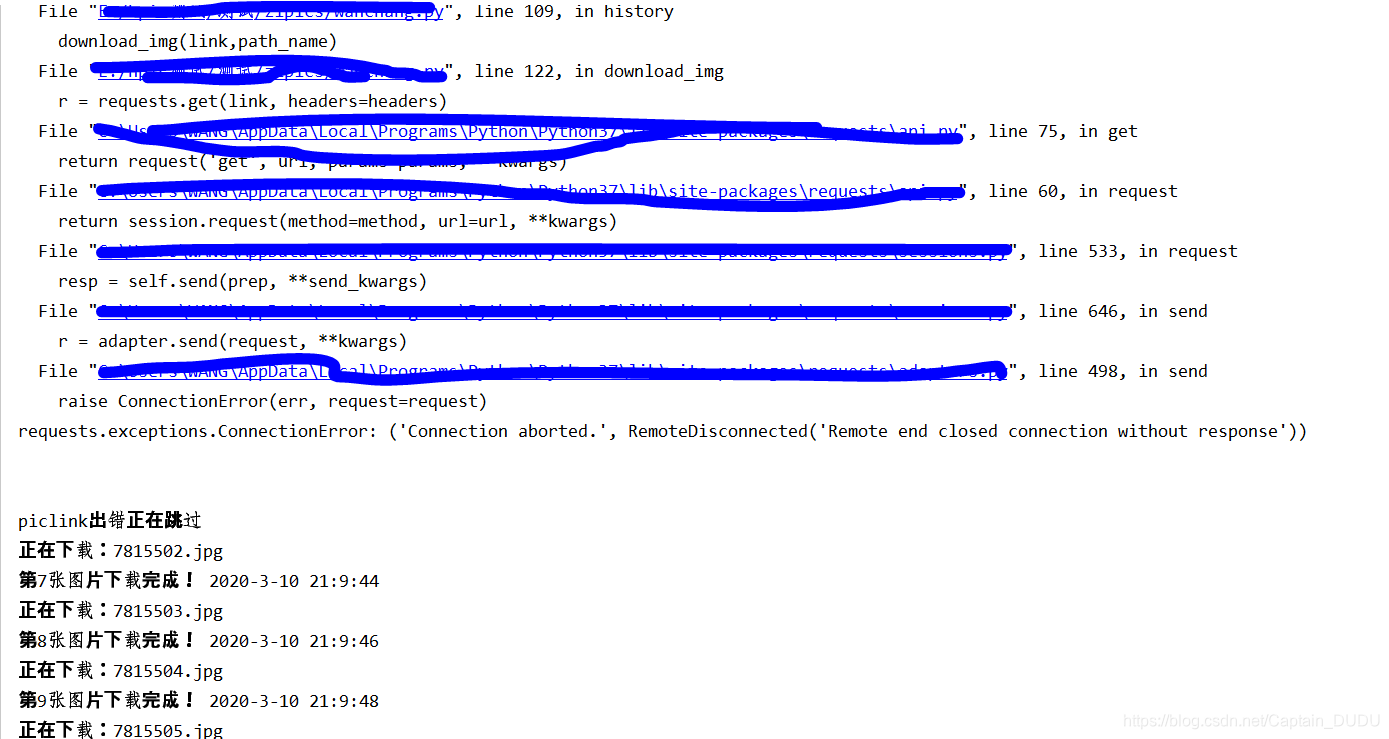

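An exception-silencing module has also been added: when the site complains that requests are too frequent, the script automatically waits 10 seconds and then opens a new connection.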
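
The listings in this post log exceptions and carry on; the 10-second wait itself does not appear in them. One possible way to do the wait-and-retry is sketched here. `call_with_retry` and its parameters are hypothetical names, and the `sleep` argument exists only so the wait can be replaced in tests.

```python
import time


def call_with_retry(action, retries=3, wait_seconds=10, sleep=time.sleep):
    """Call action(); on an exception, wait and try again, up to `retries` attempts."""
    if retries < 1:
        raise ValueError("retries must be at least 1")
    last_error = None
    for attempt in range(1, retries + 1):
        try:
            return action()
        except Exception as error:  # broad on purpose, like the handlers in the post
            last_error = error
            if attempt < retries:
                sleep(wait_seconds)  # give the site time before reconnecting
    raise last_error
```

With requests, a call might look like `call_with_retry(lambda: requests.get(url, headers=headers))`.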

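All right, on to the main topic.

Step 1

First we write a function that builds the site's URLs. A URL is usually made up of a page number and other elements, so we split those parts out. The site I found has an image section under its home page; the image section contains listing pages of titles, and each title holds roughly 10 photos.

So the steps are:

Step 1: go to the title listing pages of the image section. Their URLs follow this pattern:

Page 1: www.xxxx…/tp.html

Page 2: www.xxxx…/tp-2.html

Page 3: www.xxxx…/tp-3.html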

…

…

    def getHTML(pages):
        for i in range(1, pages + 1):
            url = 'https://'
            # Page 1 has no number; later pages end in -2.html, -3.html, ...
            if i > 1:
                url = url + '-' + str(i) + '.html'
            else:
                url = url + '.html'
            print('------Downloading images from page %d!------' % i)
            htmlTex(url)
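
The URL rule can be separated from the download loop, which makes it easy to check on its own. A minimal sketch, assuming the pattern shown above (no suffix on page 1, `-N` from page 2 on); `page_url` and `page_urls` are hypothetical helper names, and the base address in the usage note is a placeholder.

```python
def page_url(base, page):
    """Build the listing URL for a 1-based page number."""
    if page < 1:
        raise ValueError("page numbers start at 1")
    suffix = "" if page == 1 else "-" + str(page)
    return base + suffix + ".html"


def page_urls(base, pages):
    """Return the listing URLs for pages 1..pages."""
    return [page_url(base, i) for i in range(1, pages + 1)]
```

For example, `page_urls("https://example.com/tp", 3)` yields the `tp.html`, `tp-2.html` and `tp-3.html` addresses.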

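Step 2

We now have our links, one for each listing page. From each listing page we need the links that lead into the titles, and each title contains a set of images. We will extract the title links with XPath in a moment.

First we disguise the request with a header. Here I chose to pose as a mobile browser:

    headers = {
        'User-Agent': 'Mozilla/5.0 (Linux; Android 6.0; Nexus 5 Build/MRA58N) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/76.0.3809.132 Mobile Safari/537.36'
    }

Then requests.get(url, headers=headers) fetches the page's text content, which is passed to the next function to extract the links that lead into the titles.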

    def htmlTex(url):
        # Fetch one listing page and hand its HTML to getLinks.
        headers = {
            'User-Agent': 'Mozilla/5.0 (Linux; Android 6.0; Nexus 5 Build/MRA58N) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/76.0.3809.132 Mobile Safari/537.36'
        }
        r = requests.get(url, headers=headers)
        r.encoding = 'utf8'
        htmlTXT = r.text
        getLinks(htmlTXT)
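
The same headers dictionary is repeated in several functions of the script. One way to define it once, and to add a timeout and a status check while doing so, is sketched below. `fetch_text` and `MOBILE_HEADERS` are hypothetical names; the `get` argument is meant to be `requests.get`, passed in so the helper can be tried without network access.

```python
MOBILE_HEADERS = {
    "User-Agent": "Mozilla/5.0 (Linux; Android 6.0; Nexus 5 Build/MRA58N) "
                  "AppleWebKit/537.36 (KHTML, like Gecko) "
                  "Chrome/76.0.3809.132 Mobile Safari/537.36"
}


def fetch_text(url, get, timeout=10):
    """Fetch url with the shared mobile headers and return the page text."""
    response = get(url, headers=MOBILE_HEADERS, timeout=timeout)
    # Raise on 4xx/5xx responses instead of parsing an error page.
    response.raise_for_status()
    response.encoding = "utf8"
    return response.text
```

Usage would be `fetch_text(url, requests.get)`. The 10-second timeout is an assumption, not a value taken from the original script.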

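Step 3

The getLinks function receives the HTML to parse and uses the XPath path to extract the title links.

Requesting each title link in turn returns the text of the page that holds that title's images, and this text is passed to the getPicLINK function.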

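    def getLinks(txt):
        global name
        content = txt
        # Strip comment markers so commented-out markup is parsed too.
        content = content.replace('<!--', '').replace('-->', '')
        LISTtree = html.etree.HTML(content)
        links = LISTtree.xpath('//div[@class="text-list-html"]/div/ul/li/a/@href')
        for link in links:
            # Title name = file name of the link without its extension.
            name = link[link.rfind('/') + 1:link.rfind('.')]
            url = 'https:' + link
            try:
                headers = {
                    'User-Agent': 'Mozilla/5.0 (Linux; Android 6.0; Nexus 5 Build/MRA58N) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/76.0.3809.132 Mobile Safari/537.36'
                }
                r = requests.get(url, headers=headers)
                r.encoding = 'utf8'
                htmlTXT = r.text
                getPicLINK(htmlTXT)
            except Exception as e:
                # Same handler as in the complete code: log the traceback and move on.
                with open("errorLog.txt", "a+", encoding="utf8") as f1:
                    f1.write(getNowTime() + "-- " + traceback.format_exc() + '\n')
                print('Error in getLinks')
                continue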
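
The two string operations in getLinks (taking the title name out of a link and turning the link into a full URL) can also be done with the standard library. This is a sketch under assumptions: `title_name` and `absolute_url` are hypothetical helpers, and the example.com addresses in the usage note are placeholders.

```python
import posixpath
from urllib.parse import urljoin, urlsplit


def title_name(link):
    """Return the link's file name without its extension."""
    filename = posixpath.basename(urlsplit(link).path)
    return posixpath.splitext(filename)[0]


def absolute_url(page_url, link):
    """Resolve a link found on page_url into a full URL."""
    # urljoin handles both site-relative links (/a/b.html)
    # and protocol-relative links (//host/a/b.html).
    return urljoin(page_url, link)
```

For instance, `title_name("/tupian/12345.html")` gives `"12345"`, and `absolute_url("https://www.example.com/list.html", "/tupian/12345.html")` resolves against the listing page's host.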

getPicLINK receives the text of the image page, extracts the image URLs with XPath, and then downloads them.

    def getPicLINK(txt):
        content = txt
        content = content.replace('<!--', '').replace('-->', '')
        LISTtree = html.etree.HTML(content)
        # The images are lazy-loaded, so the real URL is in data-original, not src.
        link_list1 = LISTtree.xpath('//main/div[@class="content"]/img[@class="videopic lazy"]/@data-original')
        for link in link_list1:
            try:
                history(link)
            except Exception as e:
                with open("errorLog.txt", "a+", encoding="utf8") as f1:
                    f1.write(getNowTime() + "-- " + traceback.format_exc() + '\n')
                print(getNowTime() + "-- " + traceback.format_exc() + '\n')
                print("Error in piclink, skipping")
                continue
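
To see what the comment stripping and the data-original attribute do, here is a small standalone sketch. It swaps lxml and XPath for the standard library's html.parser so it runs without extra packages, and it collects every img tag with a data-original attribute rather than matching the exact XPath path and class. `LazyImageParser` and `lazy_image_links` are hypothetical names.

```python
from html.parser import HTMLParser


class LazyImageParser(HTMLParser):
    """Collect the data-original attribute of every <img> tag."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        if "data-original" in attributes:
            self.links.append(attributes["data-original"])


def lazy_image_links(page_text):
    # Same trick as in the post: drop the comment markers so that
    # commented-out markup is parsed like ordinary tags.
    content = page_text.replace("<!--", "").replace("-->", "")
    parser = LazyImageParser()
    parser.feed(content)
    parser.close()
    return parser.links
```

Images whose markup sits inside an HTML comment are picked up as well, because the markers are removed before parsing.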

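The history function checks whether an image already exists. It works by writing each image's name to a txt file at download time, and reading the names in that file before each download to decide whether the image is already there.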

    def history(link):
        global picnamelist
        global name
        pic_name = name + link[link.rfind('/') + 1:]
        path_name = 'pics2/' + pic_name
        # 'a+' creates the file if it is missing; seek(0) lets us read from the start.
        with open('pics2/history.txt', 'a+', encoding='utf8') as f:
            f.seek(0, 0)
            picnamelist = f.readlines()
            if pic_name + '\n' not in picnamelist:
                f.write(pic_name + '\n')
                download_img(link, path_name)
            else:
                print('Image %s already exists, skipped!' % pic_name)
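
The function above re-reads the whole history file for every image. A possible variation loads the names into a set once and appends new names as they come in. This is a sketch, not the script's own approach: `DownloadHistory` is a hypothetical class, and it is meant to be told about a name only after the download has succeeded, whereas the function above writes the name first.

```python
import os
import tempfile


class DownloadHistory:
    """Remember downloaded image names in a text file, one name per line."""

    def __init__(self, path):
        self.path = path
        self.names = set()
        if os.path.exists(path):
            with open(path, encoding="utf8") as f:
                self.names = {line.rstrip("\n") for line in f}

    def seen(self, name):
        return name in self.names

    def add(self, name):
        if name in self.names:
            return
        # Append mode keeps earlier entries, so a paused run can be resumed.
        with open(self.path, "a", encoding="utf8") as f:
            f.write(name + "\n")
        self.names.add(name)
```

A set lookup stays fast however long the history grows, while scanning the list from readlines slows down with every image.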

The download function:

    picCount = 0

    def download_img(link, picName):
        global picCount
        pic_name = picName
        headers = {
            'User-Agent': 'Mozilla/5.0 (Linux; Android 6.0; Nexus 5 Build/MRA58N) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/76.0.3809.132 Mobile Safari/537.36'
        }
        r = requests.get(link, headers=headers)
        print("Downloading: %s" % pic_name[pic_name.rfind('/') + 1:])
        # Write the raw response bytes to the image file.
        with open(pic_name, 'wb') as f:
            f.write(r.content)
        picCount = picCount + 1
        print("Image %d downloaded! %s" % (picCount, getNowTime()))
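
Since the download can be paused at any time, a write may be interrupted halfway and leave a truncated image behind. One common precaution, sketched here as an assumption rather than something the original script does, is to write to a temporary file and rename it only once the write has finished. `save_bytes` is a hypothetical helper.

```python
import os
import tempfile


def save_bytes(path, data):
    """Write data to path via a temporary .part file, then rename it into place."""
    part_path = path + ".part"
    with open(part_path, "wb") as f:
        f.write(data)
    # os.replace overwrites an existing target; on POSIX the rename is atomic.
    os.replace(part_path, path)
```

In download_img this would take the place of the open/write pair, for example `save_bytes(pic_name, r.content)`.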


Many people have asked for the code, so here it all is:


    import requests
    from lxml import html
    import time
    import traceback
    import os

    i = 0
    name = ''
    picnamelist = []
    url_h = 'https://www.zie2.com'
    key_v = '/tupian/list-%E5%81%B7%E6%8B%8D%E8%87%AA%E6%8B%8D'

    def getNowTime():
        # Read the clock once so that every field comes from the same instant.
        now = time.localtime()
        return (str(now.tm_year) + "-" + str(now.tm_mon) + "-" + str(now.tm_mday) + " "
                + str(now.tm_hour) + ":" + str(now.tm_min) + ":" + str(now.tm_sec))

    def getHTML(pages):
        global url_h
        global key_v
        for i in range(1, pages + 1):
            url = url_h + key_v
            # Page 1 has no number; later pages end in -2.html, -3.html, ...
            if i > 1:
                url = url + '-' + str(i) + '.html'
            else:
                url = url + '.html'
            print('------Downloading images from page %d!------' % i)
            htmlTex(url)

    def htmlTex(url):
        headers = {
            'User-Agent': 'Mozilla/5.0 (Linux; Android 6.0; Nexus 5 Build/MRA58N) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/76.0.3809.132 Mobile Safari/537.36'
        }
        r = requests.get(url, headers=headers)
        r.encoding = 'utf8'
        htmlTXT = r.text
        getLinks(htmlTXT)

    def getLinks(txt):
        global name
        global url_h
        content = txt
        # Strip comment markers so commented-out markup is parsed too.
        content = content.replace('<!--', '').replace('-->', '')
        LISTtree = html.etree.HTML(content)
        links = LISTtree.xpath('//div[@class="text-list-html"]/div/ul/li/a/@href')
        for link in links:
            # Title name = file name of the link without its extension.
            name = link[link.rfind('/') + 1:link.rfind('.')]
            url = url_h + link
            try:
                headers = {
                    'User-Agent': 'Mozilla/5.0 (Linux; Android 6.0; Nexus 5 Build/MRA58N) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/76.0.3809.132 Mobile Safari/537.36'
                }
                r = requests.get(url, headers=headers)
                r.encoding = 'utf8'
                # print(r.text)
                htmlTXT = r.text
                getPicLINK(htmlTXT)
            except Exception as e:
                with open("errorLog.txt", "a+", encoding="utf8") as f1:
                    f1.write(getNowTime() + "-- " + traceback.format_exc() + '\n')
                print(getNowTime() + "-- " + traceback.format_exc() + '\n')
                print('Error in getLinks')
                continue

    def getPicLINK(txt):
        # //div[@class="box list channel max-border list-text-my"]/ul/li[@class]/a/@href
        # with open(File_name,encoding="utf8") as f:
        # //main/div[@class="content"]/img[@class="videopic lazy"]/@src
        content = txt
        content = content.replace('<!--', '').replace('-->', '')
        LISTtree = html.etree.HTML(content)
        # The images are lazy-loaded, so the real URL is in data-original, not src.
        link_list1 = LISTtree.xpath('//main/div[@class="content"]/img[@class="videopic lazy"]/@data-original')
        for link in link_list1:
            try:
                history(link)
            except Exception as e:
                with open("errorLog.txt", "a+", encoding="utf8") as f1:
                    f1.write(getNowTime() + "-- " + traceback.format_exc() + '\n')
                print(getNowTime() + "-- " + traceback.format_exc() + '\n')
                print("Error in piclink, skipping")
                continue

    def history(link):
        global picnamelist
        global name
        # File name = title name + last path segment of the image URL.
        pic_name = name + link[link.rfind('/') + 1:]
        path_out = os.getcwd()
        h_path = filenames(path_out)  # current NewPicsN folder
        #p_path=pic_names(path_out,h_path)
        path_name = h_path + '/' + pic_name
        # 'a+' creates the file if it is missing; seek(0) lets us read from the start.
        with open('history.txt', 'a+', encoding='utf8') as f:
            f.seek(0, 0)
            picnamelist = f.readlines()
            if pic_name + '\n' not in picnamelist:
                f.write(pic_name + '\n')
                download_img(link, path_name)
            else:
                print('Image %s already exists, skipped!' % pic_name)

    picCount = 0

    def download_img(link, picName):
        global picCount
        pic_name = picName
        headers = {
            'User-Agent': 'Mozilla/5.0 (Linux; Android 6.0; Nexus 5 Build/MRA58N) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/76.0.3809.132 Mobile Safari/537.36'
        }
        r = requests.get(link, headers=headers)
        print("Downloading: %s" % pic_name[pic_name.rfind('/') + 1:])
        # Write the raw response bytes to the image file.
        with open(pic_name, 'wb') as f:
            f.write(r.content)
        picCount = picCount + 1
        print("Image %d downloaded! %s" % (picCount, getNowTime()))

    def filenames(path_root):
        # Return the folder for the current image, creating it if needed.
        global picCount
        root_path = path_root
        os.chdir(root_path)
        # A new folder every 490 images: NewPics0, NewPics1, ...
        root_wwj = "NewPics" + str(picCount // 490)
        if not os.path.exists(root_path + "/" + root_wwj):
            os.mkdir(root_wwj)
        return root_wwj

    # def pic_names(path,h_path):
    #     global picCount
    #     os.chdir(h_path)
    #     pic_name_path=picCount//499
    #     if os.path.exists(path+"/"+h_path+'/'+pic_name_path)==False:
    #         os.mkdir(pic_name_path)
    #     os.chdir(path)
    #     return pic_name_path

    if __name__ == '__main__':
        pages = int(input('How many pages to crawl: '))
        getHTML(pages)
        print("Done")
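
The script writes its error log by hand, combining getNowTime() with traceback.format_exc() in each handler. The standard logging module can produce the same kind of timestamped traceback entry with less code. This is an alternative sketch, not the script's own method; `make_error_logger` and the logger name `crawler_errors` are hypothetical.

```python
import logging
import os
import tempfile


def make_error_logger(log_path):
    """Return a logger that appends timestamped errors to log_path."""
    logger = logging.getLogger("crawler_errors")
    logger.setLevel(logging.ERROR)
    if not logger.handlers:  # avoid adding a second handler on repeated calls
        handler = logging.FileHandler(log_path, encoding="utf8")
        handler.setFormatter(logging.Formatter("%(asctime)s -- %(message)s"))
        logger.addHandler(handler)
    return logger
```

Inside an except block, `logger.exception("Error in getLinks")` writes the message followed by the current traceback, which covers what the manual open/write lines do.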