Notes on Installing and Deploying Kubernetes in Lab and Production Environments

https://blog.csdn.net/qq180782842/article/details/88535409

The following records the kubeadm installation steps for a Kubernetes lab environment and a production environment:

Kubernetes production environment installation

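The production environment can already reach the overseas package repositories and image registries, so its steps are more streamlined: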

Base environment

sudo hostname xxx

sudo vi /etc/hostname

sudo vi /etc/hosts

sudo swapoff -a
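swapoff -a only lasts until the next reboot. The sketch below keeps swap disabled across reboots, assuming swap is configured through /etc/fstab. The helper name comment_out_swap is hypothetical. It comments out every non-comment line whose filesystem type is swap and keeps a .bak copy:

```bash
#!/usr/bin/env bash
# Hypothetical helper: comment out swap entries in an fstab-style file so that
# swap stays disabled after a reboot. $1: path to the file (e.g. /etc/fstab).
comment_out_swap() {
  local fstab="$1"
  cp "$fstab" "$fstab.bak" || return 1
  # Rewrite the original file from the backup (keeps its inode and permissions).
  # Lines that are not comments and whose 3rd field is "swap" get a leading '#'.
  awk '!/^[ \t]*#/ && $3 == "swap" { $0 = "#" $0 } { print }' \
    "$fstab.bak" > "$fstab"
}
```

Usage on a node: sudo bash -c "$(declare -f comment_out_swap); comment_out_swap /etc/fstab".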

docker

skipped

Download Kubernetes (a specific version, e.g. 1.14.5, can be pinned)

sudo apt-get update && sudo apt-get install -y apt-transport-https curl

curl -s https://packages.cloud.google.com/apt/doc/apt-key.gpg | sudo apt-key add -

cat <<EOF | sudo tee /etc/apt/sources.list.d/kubernetes.list
deb https://apt.kubernetes.io/ kubernetes-xenial main
EOF

sudo apt-get update

sudo apt-get install -y kubelet kubeadm kubectl

or

sudo apt-get install -y kubelet=1.14.5-00 kubectl=1.14.5-00 kubeadm=1.14.5-00

sudo apt-mark hold kubelet kubeadm kubectl

Run kubeadm init

Newer Kubernetes versions, e.g. v1.16:

sudo kubeadm init --pod-network-cidr=xxx.xxx.xxx.xxx/16 \
    --control-plane-endpoint "LOAD_BALANCER_DNS:LOAD_BALANCER_PORT" --upload-certs

Older Kubernetes versions, e.g. v1.14:

sudo kubeadm init --config=kubeadm-config.yaml --experimental-upload-certs

kubeadm-config.yaml

apiVersion: kubeadm.k8s.io/v1beta1
kind: ClusterConfiguration
kubernetesVersion: stable
apiServer:
  certSANs:
  - "xxx.xxx.xxx.xxx"
controlPlaneEndpoint: "xxx.xxx.xxx.xxx:6443"
networking:
  podSubnet: "xxx.xxx.xxx.xxx/16"
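A small heredoc template can fill in the placeholders above. The sketch uses a hypothetical render_kubeadm_config helper that takes the control-plane endpoint address and the pod CIDR. It assumes the API server port is 6443, as in the example:

```bash
#!/usr/bin/env bash
# Hypothetical helper: print a kubeadm v1beta1 ClusterConfiguration.
# $1: load balancer / control-plane endpoint address (IP or DNS name)
# $2: pod network CIDR required by the chosen CNI
render_kubeadm_config() {
  local endpoint="$1" pod_cidr="$2"
  cat <<EOF
apiVersion: kubeadm.k8s.io/v1beta1
kind: ClusterConfiguration
kubernetesVersion: stable
apiServer:
  certSANs:
  - "${endpoint}"
controlPlaneEndpoint: "${endpoint}:6443"
networking:
  podSubnet: "${pod_cidr}"
EOF
}
```

Usage: render_kubeadm_config 10.0.0.200 192.168.0.0/16 > kubeadm-config.yaml, then sudo kubeadm init --config=kubeadm-config.yaml --experimental-upload-certs.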

After kubeadm init

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

kubeadm join

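For control-plane nodes, the xxx.xxx.xxx.xxx:6443 passed to kubeadm join can be the IP/address of the load balancer or of a master node. It must equal controlPlaneEndpoint in kubeadm-config.yaml (older versions) or the value given to kubeadm init --control-plane-endpoint (newer versions).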

For worker nodes, if a worker should talk only to one particular master, set the xxx.xxx.xxx.xxx:6443 after kubeadm join to that master's address. It does not have to be the LVS (load balancer) address.

A disaster-recovery setup for Kubernetes spanning multiple data centers can be built by extending the two points above.
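The control-plane rule can be checked before running kubeadm join. The sketch below is rough and assumes the kubeadm-config.yaml layout used here, with controlPlaneEndpoint on a single top-level line. check_join_endpoint is a hypothetical name. On a running cluster, kubeadm token create --print-join-command prints a ready-made join command.

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeed only if the endpoint used for "kubeadm join"
# equals the controlPlaneEndpoint recorded in a kubeadm config file.
# $1: path to kubeadm-config.yaml   $2: join endpoint (host:port)
check_join_endpoint() {
  local config="$1" join_endpoint="$2" configured
  # Extract the top-level controlPlaneEndpoint value, dropping the quotes.
  configured=$(sed -n -E 's/^controlPlaneEndpoint:[[:space:]]*"?([^"]*)"?[[:space:]]*$/\1/p' "$config")
  if [ -z "$configured" ]; then
    echo "controlPlaneEndpoint is empty or missing in $config" >&2
    return 1
  fi
  if [ "$configured" != "$join_endpoint" ]; then
    echo "join endpoint $join_endpoint != controlPlaneEndpoint $configured" >&2
    return 1
  fi
}
```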

CNI and Ingress Controller

kubectl apply -f https://docs.projectcalico.org/v3.8/manifests/canal.yaml

kubectl apply -f https://raw.githubusercontent.com/kubernetes/ingress-nginx/master/deploy/static/mandatory.yaml

Optional: change node roles and make the master schedulable

kubectl taint node xxx node-role.kubernetes.io/master:NoSchedule-

kubectl label node xxx node-role.kubernetes.io/worker=worker

Kubernetes lab environment installation

Kubernetes: 1.13.4

Ubuntu version: 16.04

HA topology: stacked etcd

CNI: Calico 3.6.1

| Role | Hosts | Count |
| --- | --- | --- |
| Control plane | 10.0.0.129, 10.0.0.130, 10.0.0.133 | 3 |
| Node | 10.0.0.131-132 | 2 |
| HAProxy + Keepalived | 10.0.0.134-135 (VIP: 10.0.0.200) | 2 |

The /etc/hosts file on every node is as follows:

127.0.0.1 localhost
10.0.0.133 master1
10.0.0.129 master2
10.0.0.130 master3
10.0.0.131 node1
10.0.0.132 node2
10.0.0.135 lb1
10.0.0.134 lb2
10.0.0.200 cluster.kube.com
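Duplicate hostnames break kubeadm join, and a hosts file that maps one name to two addresses is an easy way to end up there. The sketch below is a quick duplicate check. find_duplicate_hostnames is a hypothetical helper. It assumes loopback lines (127.x, ::1) can be skipped, because Ubuntu maps localhost on both of them by default:

```bash
#!/usr/bin/env bash
# Hypothetical helper: print every hostname/alias that appears on more than one
# non-loopback line of an /etc/hosts-style file. Exit 1 if any duplicate exists.
find_duplicate_hostnames() {
  awk '
    { sub(/#.*/, "") }                       # drop comments
    $1 ~ /^127\./ || $1 == "::1" { next }    # ignore loopback entries
    { for (i = 2; i <= NF; i++) count[$i]++ }
    END {
      dup = 0
      for (name in count) if (count[name] > 1) { print name; dup = 1 }
      exit dup
    }
  ' "$1"
}
```

Usage: find_duplicate_hostnames /etc/hosts || echo "fix duplicate hostnames before kubeadm join".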

Key configuration points

Install kubeadm, kubelet and kubectl on every k8s node

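Error 1: the following command fails in the lab environment (packages.cloud.google.com cannot be reached):

curl -s https://packages.cloud.google.com/apt/doc/apt-key.gpg | apt-key add -

Fix: open the URL above in a browser, copy apt-key.gpg to the VM, then run apt-key add apt-key.gpg.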

Error 2: the Google repository configured below cannot be reached either:

cat <<EOF >/etc/apt/sources.list.d/kubernetes.list
deb https://apt.kubernetes.io/ kubernetes-xenial main
EOF

Fix: use a domestic (China) mirror instead:

cat <<EOF >/etc/apt/sources.list.d/kubernetes.list
deb http://mirrors.ustc.edu.cn/kubernetes/apt kubernetes-xenial main
EOF
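The mirror fix comes down to a single line in an apt sources file. The sketch below uses a hypothetical write_k8s_apt_source helper that takes the target path, so it can be tried on a scratch file first. It assumes the USTC mirror URL above as the default. The real /etc/apt/sources.list.d/kubernetes.list needs root.

```bash
#!/usr/bin/env bash
# Hypothetical helper: write the apt source entry for the Kubernetes packages.
# $1: destination file   $2: mirror base URL (defaults to the USTC mirror)
write_k8s_apt_source() {
  local dest="$1"
  local mirror="${2:-http://mirrors.ustc.edu.cn/kubernetes/apt}"
  printf 'deb %s kubernetes-xenial main\n' "$mirror" > "$dest"
}
```

Usage as root: write_k8s_apt_source /etc/apt/sources.list.d/kubernetes.list && apt-get update.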

Install Docker on every k8s node

apt-get purge docker lxc-docker docker-engine docker.io

apt-get install curl apt-transport-https ca-certificates software-properties-common

curl -fsSL https://mirrors.ustc.edu.cn/docker-ce/linux/ubuntu/gpg | sudo apt-key add -

sudo add-apt-repository "deb [arch=amd64] https://mirrors.ustc.edu.cn/docker-ce/linux/ubuntu $(lsb_release -cs) stable"

apt-get update

apt install docker-ce

Install and deploy Keepalived and HAProxy

keepalived:

apt-get install keepalived

vi /etc/keepalived/keepalived.conf

Primary server:

vrrp_instance VI_1 {
    state MASTER
    interface ens33
    virtual_router_id 51
    priority 100
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        10.0.0.200
    }
}

Backup server:

vrrp_instance VI_1 {
    state BACKUP
    interface ens33
    virtual_router_id 51
    priority 90
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        10.0.0.200
    }
}
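The two keepalived files differ only in state and priority. virtual_router_id and the authentication block must be identical on both nodes. The sketch below uses a hypothetical render_keepalived_conf helper that generates either file. It assumes interface ens33 and the VIP 10.0.0.200 from this setup as defaults:

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the VRRP instance for the API server VIP.
# $1: MASTER or BACKUP   $2: priority (the highest live node holds the VIP)
# $3: interface (default ens33)   $4: virtual IP (default 10.0.0.200)
render_keepalived_conf() {
  local state="$1" priority="$2"
  local iface="${3:-ens33}" vip="${4:-10.0.0.200}"
  case "$state" in
    MASTER|BACKUP) ;;
    *) echo "state must be MASTER or BACKUP" >&2; return 1 ;;
  esac
  cat <<EOF
vrrp_instance VI_1 {
    state ${state}
    interface ${iface}
    virtual_router_id 51
    priority ${priority}
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        ${vip}
    }
}
EOF
}
```

Usage: render_keepalived_conf MASTER 100 on lb1 and render_keepalived_conf BACKUP 90 on lb2, each redirected into /etc/keepalived/keepalived.conf.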

On the primary server, run systemctl stop networking and check whether the VIP moves to the backup server.
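During the failover test, it helps to check which node currently holds the VIP. The sketch below is a hypothetical vip_present helper that reads the one-line-per-address output of ip -o -4 addr show (iproute2) on stdin. It assumes the address sits in the fourth field as ADDR/PREFIX:

```bash
#!/usr/bin/env bash
# Hypothetical helper: read "ip -o -4 addr show" output on stdin and exit 0 if
# the given IPv4 address is assigned to some interface, 1 otherwise.
vip_present() {
  local vip="$1"
  # Field 4 looks like "10.0.0.200/32"; compare only the part before the slash.
  awk -v vip="$vip" '{ split($4, a, "/"); if (a[1] == vip) found = 1 } END { exit !found }'
}
```

Usage on lb1 and lb2: ip -o -4 addr show | vip_present 10.0.0.200 && echo "VIP is on this node".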

HAProxy (/etc/haproxy/haproxy.cfg):

global
    chroot /var/lib/haproxy
    daemon
    group haproxy
    user haproxy
    log 127.0.0.1:514 local0 warning
    pidfile /var/lib/haproxy.pid
    maxconn 20000
    spread-checks 3
    nbproc 8

defaults
    log global
    mode tcp
    retries 3
    option redispatch

listen https-apiserver
    bind 10.0.0.200:6443
    mode tcp
    balance roundrobin
    timeout connect 3s
    timeout server 15s
    timeout client 15s
    timeout check 10s
    server apiserver01 10.0.0.133:6443 check port 6443 inter 5000 fall 5
    server apiserver02 10.0.0.129:6443 check port 6443 inter 5000 fall 5
    server apiserver03 10.0.0.130:6443 check port 6443 inter 5000 fall 5

listen http-apiserver
    bind 10.0.0.200:8080
    mode tcp
    balance roundrobin
    timeout connect 3s
    timeout server 15s
    timeout client 15s
    timeout check 10s
    server apiserver01 10.0.0.133:8080 check port 8080 inter 5000 fall 5
    server apiserver02 10.0.0.129:8080 check port 8080 inter 5000 fall 5
    server apiserver03 10.0.0.130:8080 check port 8080 inter 5000 fall 5

listen stats  # Define a listen section called "stats"
    bind 10.0.0.200:9000
    mode http
    timeout connect 3s
    timeout server 15s
    timeout client 15s
    timeout check 10s
    stats enable
    stats hide-version
    stats realm Haproxy\ Statistics
    stats uri /haproxy_stats
    stats auth xxx:xxxx

HAProxy's forwarding status can be viewed at http://10.0.0.200:9000/haproxy_stats.
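Every server line in a listen section needs a unique name, and copying lines by hand makes that easy to get wrong. The sketch below uses a hypothetical render_haproxy_listen helper that numbers the backends automatically. It assumes the TCP mode, timeouts and health-check settings used in the configuration above:

```bash
#!/usr/bin/env bash
# Hypothetical helper: print an HAProxy "listen" section that round-robins TCP
# traffic across the given API servers, naming them apiserver01, apiserver02, ...
# $1: section name  $2: bind address:port  $3: backend port  $4...: backend IPs
render_haproxy_listen() {
  local name="$1" bind="$2" port="$3"
  shift 3
  printf 'listen %s\n' "$name"
  printf '    bind %s\n    mode tcp\n    balance roundrobin\n' "$bind"
  printf '    timeout connect 3s\n    timeout server 15s\n    timeout client 15s\n    timeout check 10s\n'
  local i=1 ip
  for ip in "$@"; do
    printf '    server apiserver%02d %s:%s check port %s inter 5000 fall 5\n' \
      "$i" "$ip" "$port" "$port"
    i=$((i + 1))
  done
}
```

Usage: render_haproxy_listen https-apiserver 10.0.0.200:6443 6443 10.0.0.133 10.0.0.129 10.0.0.130.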

Install the Kubernetes components:

Follow the official documentation, keeping the following points in mind:

Disable swap on every node

swapoff -a

Create the first master node with kubeadm

# Pull each image from the Docker Hub mirror, retag it as k8s.gcr.io, then drop the mirror tag
images=(kube-apiserver:v1.13.4 kube-controller-manager:v1.13.4 kube-scheduler:v1.13.4 kube-proxy:v1.13.4 pause:3.1 etcd:3.2.24 coredns:1.2.6)
for imageName in "${images[@]}"; do
    docker pull "mirrorgooglecontainers/$imageName"
    docker tag "mirrorgooglecontainers/$imageName" "k8s.gcr.io/$imageName"
    docker rmi "mirrorgooglecontainers/$imageName"
done
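The output of kubeadm config images list can drive the mirror-and-retag loop instead of a hard-coded image list. The sketch below is a hypothetical mirror_pull_cmds helper. Like the loop above, it assumes the mirrorgooglecontainers mirror carries every listed image under the same name:tag, which may not hold for every image. It only prints the docker commands, so they can be reviewed before being piped to sh:

```bash
#!/usr/bin/env bash
# Hypothetical helper: read image references such as
# "k8s.gcr.io/kube-apiserver:v1.13.4" on stdin and print the docker commands
# that pull each one from a mirror, retag it under its original name, and
# remove the mirror tag. $1: mirror repository (default mirrorgooglecontainers)
mirror_pull_cmds() {
  local mirror="${1:-mirrorgooglecontainers}" ref name
  while read -r ref; do
    [ -n "$ref" ] || continue          # skip blank lines
    name="${ref##*/}"                  # strip the registry prefix -> name:tag
    echo "docker pull ${mirror}/${name}"
    echo "docker tag ${mirror}/${name} ${ref}"
    echo "docker rmi ${mirror}/${name}"
  done
}
```

Usage: kubeadm config images list --kubernetes-version v1.13.4 | mirror_pull_cmds | sh.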

kubeadm-config.yaml

apiVersion: kubeadm.k8s.io/v1beta1
kind: ClusterConfiguration
kubernetesVersion: v1.13.4  # pinned to the pre-pulled images; "stable" is resolved over the Internet
apiServer:
  certSANs:
  - "cluster.kube.com"
controlPlaneEndpoint: "cluster.kube.com:6443"
networking:
  podSubnet: "192.168.0.0/16"

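The kubeadm init --config=kubeadm-config.yaml command must include the --config= flag. Running it without the flag, as in

kubeadm init kubeadm-config.yaml

generates a ClusterConfiguration with default values instead. controlPlaneEndpoint is then empty, and the remaining master nodes cannot join the control plane.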

Join the remaining master nodes with kubeadm

kubeadm join cluster.kube.com:6443 --token 9crxk1.o5iyfhprrzyan598 --discovery-token-ca-cert-hash sha256:c6967ebf094d33220721cffec0db1cb53028ac7dad0c6cdb609d6ec488cbfe6b --experimental-control-plane
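In v1.13, the version used in this lab, kubeadm join --experimental-control-plane expects the shared CA and service-account keys to be present on the joining master already. Automatic distribution through --experimental-upload-certs arrived in v1.14. The sketch below prints the copy commands as a dry run, modeled on the copy loop in the v1.13 HA guide. The SSH user and the helper name cert_copy_cmds are assumptions. On each target host, the files then have to be moved into /etc/kubernetes/pki: etcd-ca.crt and etcd-ca.key go to /etc/kubernetes/pki/etcd/ca.crt and ca.key, and admin.conf goes to /etc/kubernetes.

```bash
#!/usr/bin/env bash
# Hypothetical helper (dry run): print scp commands that copy the shared
# control-plane certificates and keys from the first master to other masters.
# $1: SSH user on the targets   $2...: IPs of the masters that will join
cert_copy_cmds() {
  local user="$1" host f
  shift
  for host in "$@"; do
    for f in ca.crt ca.key sa.key sa.pub front-proxy-ca.crt front-proxy-ca.key; do
      echo "scp /etc/kubernetes/pki/${f} ${user}@${host}:"
    done
    # The etcd CA is renamed so it does not collide with the cluster CA files.
    echo "scp /etc/kubernetes/pki/etcd/ca.crt ${user}@${host}:etcd-ca.crt"
    echo "scp /etc/kubernetes/pki/etcd/ca.key ${user}@${host}:etcd-ca.key"
    echo "scp /etc/kubernetes/admin.conf ${user}@${host}:"
  done
}
```

Usage on master1 (10.0.0.133): cert_copy_cmds ubuntu 10.0.0.129 10.0.0.130 | sh.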

Join the worker nodes with kubeadm

kubeadm join cluster.kube.com:6443 --token 9crxk1.o5iyfhprrzyan598 --discovery-token-ca-cert-hash sha256:c6967ebf094d33220721cffec0db1cb53028ac7dad0c6cdb609d6ec488cbfe6b

Notes on the installation process:

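Before kubeadm init, the kubeadm config subcommands can be used to inspect the various configurations, e.g. kubeadm config print init-defaults.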

If kubeadm reports an ERROR during the automated installation, reset the node with kubeadm reset.

Of the two Kubernetes HA topologies (stacked etcd and external etcd), kubeadm reset does not reset etcd in the external etcd topology.

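Running the load balancer on dedicated nodes is configured the same way as co-locating it with the master nodes. Keepalived's built-in load-balancing module gives the same result, but HAProxy's layer-7 forwarding performs better.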

In kubeadm-config.yaml, set podSubnet in advance to whatever the chosen CNI requires (192.168.0.0/16 for Calico in this setup).

Hostnames must be unique across nodes. Configure /etc/hosts and /etc/hostname correctly; otherwise kubeadm join fails with errors that are hard to troubleshoot.

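Each master node should have the required images pulled before running kubeadm init or kubeadm join. Use kubeadm config images list to see the image names and versions. Worker nodes only need the pause and kube-proxy images.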
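For worker nodes, the same image list can be narrowed to the two images named above. The sketch below is a hypothetical worker_images filter over kubeadm config images list output. It assumes image names end in kube-proxy:TAG or pause:TAG, with or without a registry prefix:

```bash
#!/usr/bin/env bash
# Hypothetical helper: keep only the images a worker node needs (kube-proxy and
# pause) from "kubeadm config images list" output read on stdin.
worker_images() {
  grep -E '(^|/)(kube-proxy|pause):'
}
```

Usage: kubeadm config images list --kubernetes-version v1.13.4 | worker_images.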

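If Calico is the CNI, choose the Calico manifest that matches the cluster size. When using Typha, make sure the calico-typha Deployment has more than one replica. Otherwise, node routing tables can fall out of sync or become inconsistent.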

Once the cluster is up, stop the Docker service on a node to make it NotReady. Then watch how the routes are updated to check that the CNI works correctly.
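While Docker is stopped on a node, that node should show up as NotReady. The sketch below is a hypothetical notready_nodes filter. It assumes the default kubectl get nodes table layout (NAME STATUS ROLES AGE VERSION) and prints every node whose status is not Ready. A cordoned node shows Ready,SchedulingDisabled and still counts as Ready.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read "kubectl get nodes" output on stdin and print the
# names of nodes whose STATUS does not start with "Ready" (e.g. NotReady, Unknown).
notready_nodes() {
  # Skip the header; take the part of STATUS before any "," suffix.
  awk 'NR > 1 { split($2, s, ","); if (s[1] != "Ready") print $1 }'
}
```

Usage: kubectl get nodes | notready_nodes.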

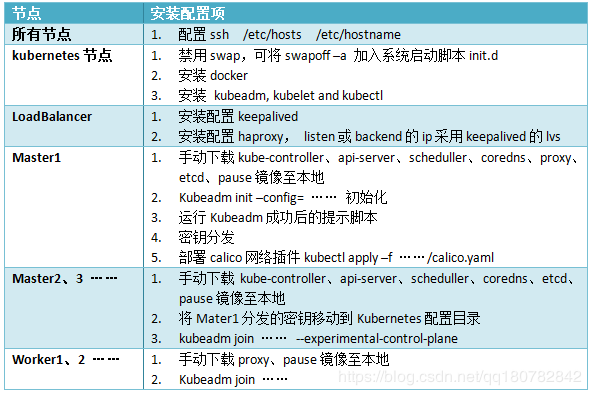

The overall installation and deployment roadmap is as follows: