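1. How it works

For WebRTC P2P audio/video, it does not matter whether a video stream is local or remote: its source is always a camera and its destination is always a screen (different platforms need different view widgets to display it). Once that is clear, the rest follows: every frame is passed to a VideoRenderer, and the renderer draws it onto the view.

VideoRenderer defines the following interface: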

public static interface Callbacks {
    void renderFrame(org.webrtc.VideoRenderer.I420Frame i420Frame);
}

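The underlying idea is the same as in 《webrtc系列——截取图像》 (the post in this WebRTC series on capturing a still image). The difference is that this time I use the VideoFileRenderer class, which implements the VideoRenderer.Callbacks interface and therefore provides renderFrame.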

Its main members are:

// Constructor: opens the output file and starts the render thread
public VideoFileRenderer(java.lang.String outputFile, int outputFileWidth, int outputFileHeight, org.webrtc.EglBase.Context sharedContext) throws java.io.IOException { /* compiled code */ }

// Callback invoked for every frame
public void renderFrame(org.webrtc.VideoRenderer.I420Frame frame) { /* compiled code */ }

// The function that actually writes the frame to the video file
private void renderFrameOnRenderThread(org.webrtc.VideoRenderer.I420Frame frame) { /* compiled code */ }

// Releases resources
public void release() { /* compiled code */ }

public static native void nativeI420Scale(java.nio.ByteBuffer byteBuffer, int i, java.nio.ByteBuffer byteBuffer1, int i1, java.nio.ByteBuffer byteBuffer2, int i2, int i3, int i4, java.nio.ByteBuffer byteBuffer3, int i5, int i6);
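The split between renderFrame (which only queues work) and renderFrameOnRenderThread (which does the file I/O), plus a release() that waits for the queue to drain, can be illustrated without WebRTC or Android. The following is a minimal sketch under stated assumptions: a plain single-thread ExecutorService stands in for Android's HandlerThread, frames are plain byte arrays, and FrameFileSink with all of its method names is hypothetical, not part of the WebRTC API.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.OutputStream;
import java.nio.charset.StandardCharsets;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

/** Hypothetical stand-in for VideoFileRenderer: all I/O runs on one worker thread. */
final class FrameFileSink {
  private final OutputStream out;
  // Assumption: a single-thread executor plays the role of the HandlerThread.
  private final ExecutorService renderThread = Executors.newSingleThreadExecutor();

  FrameFileSink(OutputStream out) {
    this.out = out;
  }

  /** May be called from any thread; it only enqueues the write. */
  void renderFrame(final byte[] frame) {
    renderThread.execute(() -> writeOnRenderThread(frame));
  }

  /** Runs on the worker thread: writes the frame marker, then the frame bytes. */
  private void writeOnRenderThread(byte[] frame) {
    try {
      out.write("FRAME\n".getBytes(StandardCharsets.US_ASCII));
      out.write(frame);
    } catch (IOException e) {
      throw new RuntimeException(e);
    }
  }

  /** Queues the close behind all pending frames and blocks until it has run. */
  void release() {
    final CountDownLatch done = new CountDownLatch(1);
    renderThread.execute(() -> {
      try {
        out.close();
      } catch (IOException e) {
        // Nothing useful to do in this sketch.
      } finally {
        done.countDown();
      }
    });
    try {
      done.await();
    } catch (InterruptedException e) {
      Thread.currentThread().interrupt();
    }
    renderThread.shutdown();
  }

  /** Writes three 4-byte dummy frames and returns the total number of bytes written. */
  static int demo() {
    ByteArrayOutputStream buffer = new ByteArrayOutputStream();
    FrameFileSink sink = new FrameFileSink(buffer);
    for (int i = 0; i < 3; i++) {
      sink.renderFrame(new byte[4]);
    }
    sink.release();
    return buffer.size();
  }

  public static void main(String[] args) {
    System.out.println(demo()); // 3 frames * (6 marker bytes + 4 data bytes) = 30
  }
}
```

Because the close is queued on the same thread as the writes, every frame submitted before release() is written before the stream is closed.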

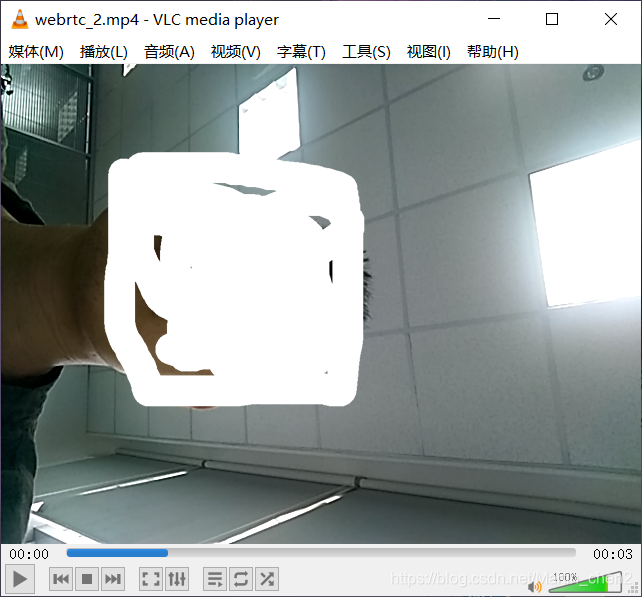

2. Result

The image above is a screenshot from a 3-second video.

3. Implementation

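// Implements the VideoRenderer.Callbacks interface
private static class ProxyRenderer implements VideoRenderer.Callbacks {
  // Number of frames to save before the file is closed
  private static final int MAX_FRAMES = 100;

  private VideoRenderer.Callbacks target;
  private int sum = 0;

  // Static, as in the original: every ProxyRenderer instance shares this one file.
  // The constructor throws IOException, so it cannot be called from a plain field initializer.
  private static final VideoFileRenderer outFile;
  static {
    try {
      outFile = new VideoFileRenderer(
          "/storage/emulated/0/1/webrtc_2.mp4", 640, 480, rootEglBase.getEglBaseContext());
    } catch (IOException e) {
      throw new RuntimeException(e);
    }
  }

  @Override
  synchronized public void renderFrame(VideoRenderer.I420Frame frame) {
    if (target == null) {
      Logging.d(TAG, "Dropping frame in proxy because target is null.");
      VideoRenderer.renderFrameDone(frame);
      return;
    }
    if (sum >= MAX_FRAMES) {
      // Recording has finished: hand the frame straight to the on-screen renderer.
      target.renderFrame(frame);
      return;
    }
    // Copy that shares the pixel buffers. The last argument is the native frame
    // pointer (a long), so it must be 0 rather than NULL.
    VideoRenderer.I420Frame frame2 = new VideoRenderer.I420Frame(frame.width, frame.height,
        frame.rotationDegree, frame.yuvStrides, frame.yuvPlanes, 0);
    target.renderFrame(frame2);
    // VideoFileRenderer calls renderFrameDone(frame) itself once the frame is written.
    outFile.renderFrame(frame);

    // Stop after saving 100 frames
    if (++sum == MAX_FRAMES) {
      // Release resources
      outFile.release();
    }
  }

  synchronized public void setTarget(VideoRenderer.Callbacks target) {
    this.target = target;
  }
}

4. Notes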

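If release() is not called, no video file is produced. release() is what closes the output file and stops the render thread.

The generated file is not compressed (it is raw YUV4MPEG2 data, despite the .mp4 name used above), so it is large, and I could only play it with the VLC player.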
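To make "large" concrete: the old VideoFileRenderer (source in section 5) writes a one-line text header and then, for every frame, the marker "FRAME\n" followed by width * height * 3 / 2 bytes of raw I420 data. The sketch below estimates the resulting file size; Y4mSize and its method names are hypothetical helpers for illustration, and the header string is copied from the old source.

```java
import java.nio.charset.StandardCharsets;

/** Hypothetical helper that estimates the size of a file written by the old VideoFileRenderer. */
final class Y4mSize {
  /** The file header, as built in the old VideoFileRenderer constructor. */
  static String header(int width, int height) {
    return "YUV4MPEG2 C420 W" + width + " H" + height + " Ip F30:1 A1:1\n";
  }

  /** Bytes of one I420 frame: a full-size Y plane plus quarter-size U and V planes. */
  static long frameBytes(int width, int height) {
    return (long) width * height * 3 / 2;
  }

  /** Header + frames * (marker + pixel data). */
  static long fileBytes(int width, int height, int frames) {
    long headerBytes = header(width, height).getBytes(StandardCharsets.US_ASCII).length;
    long markerBytes = "FRAME\n".length();
    return headerBytes + frames * (markerBytes + frameBytes(width, height));
  }

  public static void main(String[] args) {
    System.out.println(frameBytes(640, 480));     // 460800
    System.out.println(fileBytes(640, 480, 100)); // 46080639
  }
}
```

So 100 frames at 640x480 already take roughly 46 MB. The header always declares 30 fps (F30:1), so a player treats those 100 frames as a little over 3 seconds of video regardless of the rate at which they were actually captured.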

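5. Going further

Below is the source of this class from the old WebRTC version that I am using. Newer versions have changed considerably.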

/**
 * Can be used to save the video frames to file.
 */
public class VideoFileRenderer implements VideoRenderer.Callbacks {
  private static final String TAG = "VideoFileRenderer";

  private final YuvConverter yuvConverter;
  private final HandlerThread renderThread;
  private final Object handlerLock = new Object();
  private final Handler renderThreadHandler;
  private final FileOutputStream videoOutFile;
  private final int outputFileWidth;
  private final int outputFileHeight;
  private final int outputFrameSize;
  private final ByteBuffer outputFrameBuffer;

  public VideoFileRenderer(String outputFile, int outputFileWidth, int outputFileHeight,
      EglBase.Context sharedContext) throws IOException {
    if ((outputFileWidth % 2) == 1 || (outputFileHeight % 2) == 1) {
      throw new IllegalArgumentException("Does not support uneven width or height");
    }
    yuvConverter = new YuvConverter(sharedContext);

    this.outputFileWidth = outputFileWidth;
    this.outputFileHeight = outputFileHeight;

    outputFrameSize = outputFileWidth * outputFileHeight * 3 / 2;
    outputFrameBuffer = ByteBuffer.allocateDirect(outputFrameSize);

    videoOutFile = new FileOutputStream(outputFile);
    videoOutFile.write(
        ("YUV4MPEG2 C420 W" + outputFileWidth + " H" + outputFileHeight + " Ip F30:1 A1:1\n")
            .getBytes());

    renderThread = new HandlerThread(TAG);
    renderThread.start();
    renderThreadHandler = new Handler(renderThread.getLooper());
  }

  @Override
  public void renderFrame(final VideoRenderer.I420Frame frame) {
    renderThreadHandler.post(new Runnable() {
      @Override
      public void run() {
        renderFrameOnRenderThread(frame);
      }
    });
  }

  private void renderFrameOnRenderThread(VideoRenderer.I420Frame frame) {
    final float frameAspectRatio = (float) frame.rotatedWidth() / (float) frame.rotatedHeight();

    final float[] rotatedSamplingMatrix =
        RendererCommon.rotateTextureMatrix(frame.samplingMatrix, frame.rotationDegree);
    final float[] layoutMatrix = RendererCommon.getLayoutMatrix(
        false, frameAspectRatio, (float) outputFileWidth / outputFileHeight);
    final float[] texMatrix = RendererCommon.multiplyMatrices(rotatedSamplingMatrix, layoutMatrix);

    try {
      videoOutFile.write("FRAME\n".getBytes());
      if (!frame.yuvFrame) {
        yuvConverter.convert(outputFrameBuffer, outputFileWidth, outputFileHeight, outputFileWidth,
            frame.textureId, texMatrix);

        int stride = outputFileWidth;
        byte[] data = outputFrameBuffer.array();
        int offset = outputFrameBuffer.arrayOffset();

        // Write Y
        videoOutFile.write(data, offset, outputFileWidth * outputFileHeight);

        // Write U
        for (int r = outputFileHeight; r < outputFileHeight * 3 / 2; ++r) {
          videoOutFile.write(data, offset + r * stride, stride / 2);
        }

        // Write V
        for (int r = outputFileHeight; r < outputFileHeight * 3 / 2; ++r) {
          videoOutFile.write(data, offset + r * stride + stride / 2, stride / 2);
        }
      } else {
        nativeI420Scale(frame.yuvPlanes[0], frame.yuvStrides[0], frame.yuvPlanes[1],
            frame.yuvStrides[1], frame.yuvPlanes[2], frame.yuvStrides[2], frame.width, frame.height,
            outputFrameBuffer, outputFileWidth, outputFileHeight);
        videoOutFile.write(
            outputFrameBuffer.array(), outputFrameBuffer.arrayOffset(), outputFrameSize);
      }
    } catch (IOException e) {
      Logging.e(TAG, "Failed to write to file for video out");
      throw new RuntimeException(e);
    } finally {
      VideoRenderer.renderFrameDone(frame);
    }
  }

  public void release() {
    final CountDownLatch cleanupBarrier = new CountDownLatch(1);
    renderThreadHandler.post(new Runnable() {
      @Override
      public void run() {
        try {
          videoOutFile.close();
        } catch (IOException e) {
          Logging.d(TAG, "Error closing output video file");
        }
        cleanupBarrier.countDown();
      }
    });
    ThreadUtils.awaitUninterruptibly(cleanupBarrier);
    renderThread.quit();
  }

  public static native void nativeI420Scale(ByteBuffer srcY, int strideY, ByteBuffer srcU,
      int strideU, ByteBuffer srcV, int strideV, int width, int height, ByteBuffer dst,
      int dstWidth, int dstHeight);
}

References:

https://www.jianshu.com/p/5902d4953ed9

https://www.jianshu.com/p/e3d7d71522ee