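What the program does

Given a set of points, fit a regression line. The data is available at the Baidu Cloud link at the end of this post.

1. Read the CSV file as a NumPy array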

    import numpy as np

    points = np.genfromtxt("data.csv", delimiter=",")
    print(points)

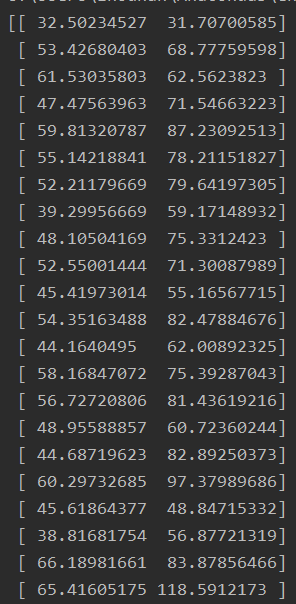

Print points to see what the NumPy array looks like:

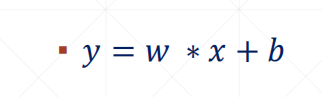
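As a rough illustration of what `np.genfromtxt` returns, the sketch below parses a tiny in-memory CSV instead of `data.csv`. The three sample rows and the names `sample_csv` and `sample_points` are made up for this example and are not taken from the real data set.

```python
import io
import numpy as np

# Hypothetical two-column sample standing in for data.csv (one "x,y" pair per row).
sample_csv = io.StringIO("1.0,2.0\n2.0,4.1\n3.0,6.2\n")

# genfromtxt accepts a file-like object as well as a file name.
sample_points = np.genfromtxt(sample_csv, delimiter=",")

print(sample_points.shape)   # (number of rows, number of columns)
print(sample_points[0, 0])   # x coordinate of the first point
print(sample_points[0, 1])   # y coordinate of the first point
```

Each row of the resulting 2-D array is one point, which is why the later steps index it as `points[i, 0]` and `points[i, 1]`.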

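2. Initialize the line's parameters w and b. The line has the form y = wx + b, as shown in the figure. Both w and b are initialized to 0.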


    initial_b = 0  # initial y-intercept guess
    initial_w = 0  # initial slope guess

3. Compute the loss function

    def compute_error_for_line_given_points(b, w, points):
        totalError = 0
        for i in range(0, len(points)):
            x = points[i, 0]
            y = points[i, 1]
            # accumulate the squared error for this point
            totalError += (y - (w * x + b)) ** 2
        # average loss over all points
        return totalError / float(len(points))

Here, these two lines use NumPy's indexing syntax:

    x = points[i, 0]  # same as points[i][0]: the x coordinate of the i-th point
    y = points[i, 1]  # same as points[i][1]: the y coordinate of the i-th point

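This is the loss function we construct: the sum of squared errors, divided by N. The line below accumulates the squared error for each point; the division by N happens in the return statement.

    totalError += (y - (w * x + b)) ** 2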

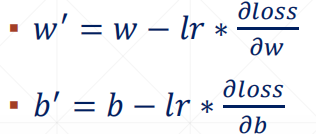
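The same mean squared error can also be written without an explicit loop. The following is a minimal vectorized sketch, not the code from this post; the function name `compute_error_vectorized` and the array `demo_points` are assumptions introduced for illustration.

```python
import numpy as np

def compute_error_vectorized(b, w, points):
    """Mean squared error of the line y = w*x + b over all points (no Python loop)."""
    x = points[:, 0]  # all x coordinates at once
    y = points[:, 1]  # all y coordinates at once
    return float(np.mean((y - (w * x + b)) ** 2))

# Hypothetical points lying exactly on y = 2x + 1.
demo_points = np.array([[1.0, 3.0], [2.0, 5.0], [3.0, 7.0]])

print(compute_error_vectorized(1.0, 2.0, demo_points))  # loss for the exact line
print(compute_error_vectorized(0.0, 0.0, demo_points))  # loss for the initial guess w = b = 0
```

For the exact line the loss is zero, and for the initial guess it equals the mean of the squared y values, which gives a quick sanity check on the formula.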

4. Update the values of b and w using gradient descent, as shown in the figure, where w' denotes the new value of w

    def gradient_descent_runner(points, starting_b, starting_w, learning_rate, num_iterations):
        b = starting_b
        w = starting_w
        # repeat the update step num_iterations times
        for i in range(num_iterations):
            b, w = step_gradient(b, w, np.array(points), learning_rate)
        return [b, w]

Take the partial derivatives of the loss function with respect to b and w:

    b_gradient += (2/N) * ((w_current * x + b_current) - y)
    w_gradient += (2/N) * x * ((w_current * x + b_current) - y)
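For reference, the two accumulation lines follow from differentiating the mean squared error term by term. The derivation below is a sketch in notation chosen here: x_i and y_i are the coordinates of the i-th point, N is the number of points, and \eta stands for the learning rate (learningRate in the code); the symbol \eta is an assumption of this note, not something defined in the post.

```latex
L(w, b) = \frac{1}{N} \sum_{i=1}^{N} \bigl( y_i - (w x_i + b) \bigr)^2

\frac{\partial L}{\partial b} = \frac{2}{N} \sum_{i=1}^{N} \bigl( (w x_i + b) - y_i \bigr)

\frac{\partial L}{\partial w} = \frac{2}{N} \sum_{i=1}^{N} x_i \bigl( (w x_i + b) - y_i \bigr)

b' = b - \eta \, \frac{\partial L}{\partial b}, \qquad
w' = w - \eta \, \frac{\partial L}{\partial w}
```

The sign flips from (y_i - (w x_i + b)) to ((w x_i + b) - y_i) because the inner derivative of the residual with respect to b is -1, and with respect to w is -x_i.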

    def step_gradient(b_current, w_current, points, learningRate):
        b_gradient = 0
        w_gradient = 0
        N = float(len(points))
        for i in range(0, len(points)):
            x = points[i, 0]
            y = points[i, 1]
            # grad_b = (2/N) * (wx + b - y), summed over all points
            b_gradient += (2/N) * ((w_current * x + b_current) - y)
            # grad_w = (2/N) * (wx + b - y) * x, summed over all points
            w_gradient += (2/N) * x * ((w_current * x + b_current) - y)
        # update b and w by stepping against the gradient
        new_b = b_current - (learningRate * b_gradient)
        new_w = w_current - (learningRate * w_gradient)
        return [new_b, new_w]

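These two lines are the update itself: each parameter moves against its gradient, scaled by the learning rate.

    new_b = b_current - (learningRate * b_gradient)
    new_w = w_current - (learningRate * w_gradient)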
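To see the four steps working together without downloading `data.csv`, here is a self-contained sketch on synthetic data. It is a compact vectorized variant, not the loop-based code above: the helper names `mse` and `step`, the noise-free line y = 1.5x + 0.5, the learning rate 0.01, and the 5000 iterations are all assumptions chosen for this illustration, and different data would likely need a different learning rate.

```python
import numpy as np

def mse(b, w, points):
    """Mean squared error of y = w*x + b over all points."""
    x, y = points[:, 0], points[:, 1]
    return float(np.mean((y - (w * x + b)) ** 2))

def step(b, w, points, learning_rate):
    """One gradient descent update of (b, w), using the same gradients as step_gradient."""
    x, y = points[:, 0], points[:, 1]
    residual = (w * x + b) - y
    b_gradient = 2.0 * np.mean(residual)      # dL/db
    w_gradient = 2.0 * np.mean(x * residual)  # dL/dw
    return b - learning_rate * b_gradient, w - learning_rate * w_gradient

# Hypothetical noise-free data on the line y = 1.5x + 0.5.
x = np.linspace(0.0, 10.0, 50)
points = np.column_stack([x, 1.5 * x + 0.5])

b, w = 0.0, 0.0                  # same initialization as in step 2
start_loss = mse(b, w, points)
for _ in range(5000):
    b, w = step(b, w, points, learning_rate=0.01)
end_loss = mse(b, w, points)

print("loss before:", start_loss)
print("loss after: ", end_loss)
print("fitted b, w:", b, w)
```

With these settings the loss falls sharply and the fitted parameters approach the slope and intercept used to generate the data. A learning rate that is too large makes the updates diverge instead, so it is worth printing the loss while tuning.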

The complete code and data are packaged on Baidu Cloud:

Link: https://pan.baidu.com/s/1vWlsyR9BykcXVM1BYIQJuw

Extraction code: pj2z