HLog write flow

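If the property hbase.wal.provider=multiwal is configured, a single RegionServer (RS) has multiple HLogs. This parallelization is done by partitioning incoming edits by their Region, so it cannot improve the throughput of a single region. Exactly how many WALs the edits are split across still needs further investigation.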
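A minimal sketch of why region-based partitioning cannot speed up a single region, assuming a fixed number of groups and grouping by a hash of the encoded region name. RegionGroupingSketch, numGroups and the hash-mod rule are hypothetical illustrations, not HBase's actual grouping strategy. Because every edit of one region maps to the same group, that region's writes still go through a single WAL.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical sketch: route each edit to a WAL group chosen only by its region.
class RegionGroupingSketch {
    private final int numGroups;                                  // assumed fixed group count
    private final Map<Integer, List<String>> groups = new HashMap<>();

    RegionGroupingSketch(int numGroups) {
        if (numGroups <= 0) throw new IllegalArgumentException("numGroups must be > 0");
        this.numGroups = numGroups;
    }

    // Same region name -> same group, so one region never spreads across WALs.
    int groupOf(String encodedRegionName) {
        return Math.floorMod(encodedRegionName.hashCode(), numGroups);
    }

    void append(String encodedRegionName, String edit) {
        groups.computeIfAbsent(groupOf(encodedRegionName), g -> new ArrayList<>())
              .add(encodedRegionName + ":" + edit);
    }

    List<String> walOfGroup(int group) {
        return groups.getOrDefault(group, List.of());
    }

    public static void main(String[] args) {
        RegionGroupingSketch wals = new RegionGroupingSketch(2);
        wals.append("019c5fa7b9981b70019ac8822453df8f", "put row1");
        wals.append("019c5fa7b9981b70019ac8822453df8f", "put row2");
        wals.append("another-region", "put row3");
        for (int g = 0; g < 2; g++) {
            System.out.println("group " + g + ": " + wals.walOfGroup(g));
        }
    }
}
```

Different regions may land in the same group or in different groups, but two edits of the same region never end up in different WALs.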

HLog format

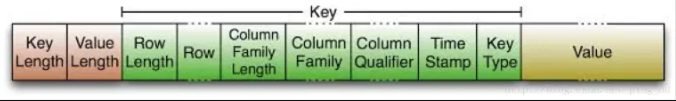

A complete WAL record consists of two parts: a WALKey and the KeyValues.
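To make the key/value split concrete, here is a simplified Java model of one WAL record. It assumes only the fields visible in the hbase wal JSON output in this section (region, table and sequence for the key; row, family, qualifier, timestamp and value per cell). All type names are hypothetical; the real classes are WALKeyImpl and WALEdit, which carry more fields. Records require Java 16 or later.

```java
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

// Hypothetical, simplified model of one WAL record: a key plus an edit holding cells.
// Names mirror the JSON fields printed by "hbase wal"; they are NOT HBase's real classes.
record WalKeySketch(String encodedRegionName, String table, long sequence) {}

record CellSketch(String row, String family, String qualifier, long timestamp, byte[] value) {}

final class WalEditSketch {
    private final List<CellSketch> cells = new ArrayList<>();   // like WALEdit's list of cells

    WalEditSketch add(CellSketch cell) {
        cells.add(cell);
        return this;
    }

    List<CellSketch> cells() { return cells; }
}

record WalEntrySketch(WalKeySketch key, WalEditSketch edit) {}

class WalEntryDemo {
    public static void main(String[] args) {
        // Values taken from the record with sequence 27377 in the dump.
        String row = "e333b4c5-58f1-4716-9593-b73a7ed3b30c";
        byte[] v = row.getBytes(StandardCharsets.UTF_8);
        WalEntrySketch entry = new WalEntrySketch(
            new WalKeySketch("019c5fa7b9981b70019ac8822453df8f", "default:USER", 27377),
            new WalEditSketch()
                .add(new CellSketch(row, "0", "NAME", 1567081811760L, v))
                .add(new CellSketch(row, "0", "PASSWD", 1567081811760L, v)));
        System.out.println(entry.key() + " cells=" + entry.edit().cells().size());
    }
}
```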

WALKeyImpl:

KeyValue:

A WAL file decoded with the hbase wal command:

```text
[Writer Classes: ProtobufLogWriter AsyncProtobufLogWriter
Cell Codec Class: org.apache.hadoop.hbase.regionserver.wal.IndexedWALEditCodec
{"sequence":27376,"region":"019c5fa7b9981b70019ac8822453df8f","actions":[{"qualifier":"NAME","vlen":36,"row":"04461088-3248-40f0-a0e7-bba03ff31e01","family":"0","value":"04461088-3248-40f0-a0e7-bba03ff31e01","timestamp":1567081811760,"total_size_sum":152},{"qualifier":"PASSWD","vlen":36,"row":"04461088-3248-40f0-a0e7-bba03ff31e01","family":"0","value":"04461088-3248-40f0-a0e7-bba03ff31e01","timestamp":1567081811760,"total_size_sum":152},{"qualifier":"AGE","vlen":4,"row":"04461088-3248-40f0-a0e7-bba03ff31e01","family":"0","value":"\\x00\\x00\\x1C\\x8E","timestamp":1567081811760,"total_size_sum":112}],"table":{"name":"VVNFUg==","nameAsString":"USER","namespace":"ZGVmYXVsdA==","namespaceAsString":"default","qualifier":"VVNFUg==","qualifierAsString":"USER","systemTable":false,"nameWithNamespaceInclAsString":"default:USER"}}edit heap size: 456
position: 416
,{
  "sequence": 27377,
  "region": "019c5fa7b9981b70019ac8822453df8f",
  "actions": [
    {
      "qualifier": "NAME",
      "vlen": 36,
      "row": "e333b4c5-58f1-4716-9593-b73a7ed3b30c",
      "family": "0",
      "value": "e333b4c5-58f1-4716-9593-b73a7ed3b30c",
      "timestamp": 1567081811760,
      "total_size_sum": 152
    },
    {
      "qualifier": "PASSWD",
      "vlen": 36,
      "row": "e333b4c5-58f1-4716-9593-b73a7ed3b30c",
      "family": "0",
      "value": "e333b4c5-58f1-4716-9593-b73a7ed3b30c",
      "timestamp": 1567081811760,
      "total_size_sum": 152
    }
  ],
  "table": {
    "name": "VVNFUg==",
    "nameAsString": "USER",
    "namespace": "ZGVmYXVsdA==",
    "namespaceAsString": "default",
    "qualifier": "VVNFUg==",
    "qualifierAsString": "USER",
    "systemTable": false,
    "nameWithNamespaceInclAsString": "default:USER"
  }
}edit heap size: 344
position: 674
,{"sequence":27378,"region":"019c5fa7b9981b70019ac8822453df8f","actions":[{"qualifier":"NAME","vlen":36,"row":"1f6c6d0d-e7c1-4029-a591-460f2743ef6f","family":"0","value":"1f6c6d0d-e7c1-4029-a591-460f2743ef6f","timestamp":1567081811760,"total_size_sum":152},{"qualifier":"PASSWD","vlen":36,"row":"1f6c6d0d-e7c1-4029-a591-460f2743ef6f","family":"0","value":"1f6c6d0d-e7c1-4029-a591-460f2743ef6f","timestamp":1567081811760,"total_size_sum":152},{"qualifier":"AGE","vlen":4,"row":"1f6c6d0d-e7c1-4029-a591-460f2743ef6f","family":"0","value":"\\x00\\x00\\x1C\\x90","timestamp":1567081811760,"total_size_sum":112}],"table":{"name":"VVNFUg==","nameAsString":"USER","namespace":"ZGVmYXVsdA==","namespaceAsString":"default","qualifier":"VVNFUg==","qualifierAsString":"USER","systemTable":false,"nameWithNamespaceInclAsString":"default:USER"}}edit heap size: 456
position: 1000
```

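- Besides data changes to ordinary tables, the WAL also records events of HBase itself, such as FLUSH and COMPACTION.
- While such HBase events run, the sequence numbers are not strictly consecutive; some numbers may be skipped.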
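A small sketch of scanning decoded entries for both observations above. It assumes, as in HBase's WALEdit, that event markers such as FLUSH and COMPACTION are stored under the column family METAFAMILY; the Entry type and the sequence numbers in main are hypothetical sample data, not taken from a real log.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch: tell marker entries from data entries and report sequence gaps.
class WalScanSketch {
    // Assumption: marker cells (FLUSH, COMPACTION, ...) use this family, as in HBase's WALEdit.
    static final String META_FAMILY = "METAFAMILY";

    record Entry(long sequence, String family) {}

    static boolean isMarker(Entry e) {
        return META_FAMILY.equals(e.family());
    }

    // Describes every place where the next sequence id is not previous + 1.
    static List<String> findGaps(List<Entry> entries) {
        List<String> gaps = new ArrayList<>();
        for (int i = 1; i < entries.size(); i++) {
            long prev = entries.get(i - 1).sequence();
            long cur = entries.get(i).sequence();
            if (cur > prev + 1) gaps.add(prev + " -> " + cur);
        }
        return gaps;
    }

    public static void main(String[] args) {
        List<Entry> entries = List.of(
            new Entry(27376, "0"), new Entry(27377, "0"),
            new Entry(27380, META_FAMILY), new Entry(27381, "0"));
        long markers = entries.stream().filter(WalScanSketch::isMarker).count();
        System.out.println("markers=" + markers + ", gaps=" + findGaps(entries));
    }
}
```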

Below is the log of the same region after a flush:

WAL write flow (FSHLog)

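Before analyzing the WAL write flow, let's briefly look at how HBase executes put/delete operations. The core class is HRegion.java: a put or delete first locates the region the data belongs to and then calls the corresponding HRegion method. Taking put as an example: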

put method

The put method is the entry point; the actual logic lives in doBatchMutate.

```java
@Override
public void put(Put put) throws IOException {
  checkReadOnly(); // fail if the region is read-only
  // Do a rough check that we have resources to accept a write. The check is
  // 'rough' in that between the resource check and the call to obtain a
  // read lock, resources may run out. For now, the thought is that this
  // will be extremely rare; we'll deal with it when it happens.
  checkResources();
  startRegionOperation(Operation.PUT);
  try {
    // All edits for the given row (across all column families) must happen atomically.
    doBatchMutate(put);
  } finally {
    closeRegionOperation(Operation.PUT);
  }
}
```

doMiniBatchMutate

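doMiniBatchMutate shows the complete flow of a put, which breaks down into the following steps:

- Acquire the locks (the row locks, then the read lock of updatesLock)
- Take the write timestamp only after the locks are held
- Build the WALEdit
- Append the WALEdits to the WAL and sync them to disk
- Write to the MemStore
- Complete the MiniBatchOperations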
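The ordering of these steps is the important part: the edit reaches the WAL and is synced before it is applied to the MemStore, so a crash after the sync can be recovered by replaying the WAL. The class below is a heavily simplified, hypothetical sketch of that order only; one ReentrantLock stands in for the per-row locks, a List stands in for the WAL file, and MVCC, coprocessor hooks and real disk syncing are left out.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.TreeMap;
import java.util.concurrent.locks.ReentrantLock;
import java.util.concurrent.locks.ReentrantReadWriteLock;

// Hypothetical, heavily simplified region: shows only the ORDER of the put steps.
class MiniBatchSketch {
    private final ReentrantReadWriteLock updatesLock = new ReentrantReadWriteLock();
    private final ReentrantLock rowLock = new ReentrantLock(); // stands in for per-row locks
    final List<String> wal = new ArrayList<>();                // stands in for the WAL file
    final TreeMap<String, String> memStore = new TreeMap<>();  // stands in for the MemStore
    final List<String> trace = new ArrayList<>();              // records the step order

    void put(String row, String value) {
        rowLock.lock();                                // STEP 1: row lock ...
        updatesLock.readLock().lock();                 //         ... then the shared updates lock
        try {
            long now = System.currentTimeMillis();     // STEP 2: timestamp taken after locking
            String edit = row + "=" + value + "@" + now; // STEP 3: build the edit
            wal.add(edit);                             // STEP 4: append (sync is only simulated)
            trace.add("wal-append+sync");
            memStore.put(row, value);                  // STEP 5: write the MemStore
            trace.add("memstore");
            trace.add("complete");                     // STEP 6: complete the mini-batch
        } finally {
            updatesLock.readLock().unlock();
            rowLock.unlock();
        }
    }
}
```

Calling new MiniBatchSketch().put("row1", "v1") leaves one entry in wal, one in memStore, and the trace in WAL-first order.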

```java
/**
 * Called to do a piece of the batch that came in to {@link #batchMutate(Mutation[], long, long)}
 * In here we also handle replay of edits on region recover.
 * @return Change in size brought about by applying <code>batchOp</code>
 */
private void doMiniBatchMutate(BatchOperation<?> batchOp) throws IOException {
  boolean success = false;
  WALEdit walEdit = null;
  WriteEntry writeEntry = null;
  boolean locked = false;
  // We try to set up a batch in the range [batchOp.nextIndexToProcess,lastIndexExclusive)
  MiniBatchOperationInProgress<Mutation> miniBatchOp = null;
  /** Keep track of the locks we hold so we can release them in finally clause */
  List<RowLock> acquiredRowLocks = Lists.newArrayListWithCapacity(batchOp.size());
  try {
    // STEP 1. Try to acquire as many locks as we can and build mini-batch of operations with
    // locked rows
    // Acquire the row locks (HBase implements each row lock on top of a read/write lock)
    miniBatchOp = batchOp.lockRowsAndBuildMiniBatch(acquiredRowLocks);

    // We've now grabbed as many mutations off the list as we can
    // Ensure we acquire at least one.
    if (miniBatchOp.getReadyToWriteCount() <= 0) {
      // Nothing to put/delete -- an exception in the above such as NoSuchColumnFamily?
      return;
    }

    // Take the read lock of updatesLock. [Why a read lock here? Still to be investigated.]
    lock(this.updatesLock.readLock(), miniBatchOp.getReadyToWriteCount());
    locked = true;

    // STEP 2. Update mini batch of all operations in progress with LATEST_TIMESTAMP timestamp
    // We should record the timestamp only after we have acquired the rowLock,
    // otherwise, newer puts/deletes are not guaranteed to have a newer timestamp
    long now = EnvironmentEdgeManager.currentTime();
    batchOp.prepareMiniBatchOperations(miniBatchOp, now, acquiredRowLocks);

    // STEP 3. Build WAL edit
    List<Pair<NonceKey, WALEdit>> walEdits = batchOp.buildWALEdits(miniBatchOp);

    // STEP 4. Append the WALEdits to WAL and sync.
    for (Iterator<Pair<NonceKey, WALEdit>> it = walEdits.iterator(); it.hasNext();) {
      Pair<NonceKey, WALEdit> nonceKeyWALEditPair = it.next();
      walEdit = nonceKeyWALEditPair.getSecond();
      NonceKey nonceKey = nonceKeyWALEditPair.getFirst();
      if (walEdit != null && !walEdit.isEmpty()) {
        writeEntry = doWALAppend(walEdit, batchOp.durability, batchOp.getClusterIds(), now,
            nonceKey.getNonceGroup(), nonceKey.getNonce(), batchOp.getOrigLogSeqNum());
      }
      // Complete mvcc for all but last writeEntry (for replay case)
      if (it.hasNext() && writeEntry != null) {
        mvcc.complete(writeEntry);
        writeEntry = null;
      }
    }

    // STEP 5. Write back to memStore
    // NOTE: writeEntry can be null here
    writeEntry = batchOp.writeMiniBatchOperationsToMemStore(miniBatchOp, writeEntry);

    // STEP 6. Complete MiniBatchOperations: If required calls postBatchMutate() CP hook and
    // complete mvcc for last writeEntry
    batchOp.completeMiniBatchOperations(miniBatchOp, writeEntry);
    writeEntry = null;
    success = true;
  } finally {
    // Call complete rather than completeAndWait because we probably had error if walKey != null
    if (writeEntry != null) mvcc.complete(writeEntry);
    if (locked) {
      this.updatesLock.readLock().unlock();
    }
    releaseRowLocks(acquiredRowLocks);
    final int finalLastIndexExclusive =
        miniBatchOp != null ? miniBatchOp.getLastIndexExclusive() : batchOp.size();
    final boolean finalSuccess = success;
    batchOp.visitBatchOperations(true, finalLastIndexExclusive, (int i) -> {
      batchOp.retCodeDetails[i] =
          finalSuccess ? OperationStatus.SUCCESS : OperationStatus.FAILURE;
      return true;
    });
    batchOp.doPostOpCleanupForMiniBatch(miniBatchOp, walEdit, finalSuccess);
    batchOp.nextIndexToProcess = finalLastIndexExclusive;
  }
}
```

Here we focus on steps 3 and 4.

AsyncFSWAL and FSHLog are the core classes for writing the WAL.

![image](https://img-blog.csdnimg.cn/20191030182647887.png?x-oss-process=image/watermark,type_ZmFuZ3poZW5naGVpdGk,shadow_10,text_aHR0cHM6Ly9pdDAwNy5ibG9nLmNzZG4ubmV0,size_16,color_FFFFFF,t_70)

The core flow is as follows, with HRegion's put/delete methods as the entry point:

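Writes are this fast largely because the Disruptor framework is used. The Disruptor is an excellent high-performance queue developed by LMAX, and it also won Oracle's Duke's Choice Award. It is widely used, including but not limited to HBase, Apache Storm, Camel and Log4j2.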

WAL entries are written through the wal object held by HRegion.

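Source of org.apache.hadoop.hbase.regionserver.wal.FSHLog#append (the disruptor field belongs to FSHLog; stampSequenceIdAndPublishToRingBuffer is inherited from AbstractFSWAL):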

```java
public long append(final RegionInfo hri, final WALKeyImpl key, final WALEdit edits,
    final boolean inMemstore) throws IOException {
  return stampSequenceIdAndPublishToRingBuffer(hri, key, edits, inMemstore,
      disruptor.getRingBuffer());
}

protected final long stampSequenceIdAndPublishToRingBuffer(RegionInfo hri, WALKeyImpl key,
    WALEdit edits, boolean inMemstore, RingBuffer<RingBufferTruck> ringBuffer)
    throws IOException {
  if (this.closed) {
    throw new IOException(
        "Cannot append; log is closed, regionName = " + hri.getRegionNameAsString());
  }
  MutableLong txidHolder = new MutableLong();
  MultiVersionConcurrencyControl.WriteEntry we = key.getMvcc().begin(() -> {
    txidHolder.setValue(ringBuffer.next()); // the ring buffer hands out the sequence number
  });
  long txid = txidHolder.longValue(); // txid is that same ring buffer sequence
  try (TraceScope scope = TraceUtil.createTrace(implClassName + ".append")) {
    FSWALEntry entry = new FSWALEntry(txid, key, edits, hri, inMemstore); // wrap the edit
    entry.stampRegionSequenceId(we);
    ringBuffer.get(txid).load(entry); // load the entry into the claimed ring buffer slot
  } finally {
    ringBuffer.publish(txid); // publish the slot so the consumer can process it
  }
  return txid;
}
```
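Both append above and sync below follow the Disruptor's claim, load, publish pattern: next() claims a slot whose sequence doubles as the txid, the slot is filled, and publish() makes it visible to the consumer. The toy below mirrors that pattern in a deliberately single-threaded form. ToyRingBuffer and its methods are hypothetical, and this is not how the LMAX Disruptor implements concurrency: it assumes in-order publication and has no memory barriers.

```java
import java.util.ArrayList;
import java.util.List;

// Toy, single-threaded ring buffer illustrating claim -> load -> publish.
// Not the LMAX Disruptor implementation; no concurrency control at all.
class ToyRingBuffer<T> {
    private final Object[] slots;
    private long nextToClaim = 0;   // next sequence handed out by next()
    private long published = -1;    // highest sequence visible to the consumer
    private long consumed = -1;     // highest sequence already consumed

    ToyRingBuffer(int size) {
        slots = new Object[size];
    }

    // Claim a sequence (used as the txid); refuse if every slot is still unconsumed.
    long next() {
        if (nextToClaim - consumed > slots.length) throw new IllegalStateException("buffer full");
        return nextToClaim++;
    }

    // Fill the claimed slot.
    void load(long seq, T value) {
        slots[(int) (seq % slots.length)] = value;
    }

    // Make the slot visible; this toy requires sequences to be published in order.
    void publish(long seq) {
        if (seq != published + 1) throw new IllegalStateException("out-of-order publish");
        published = seq;
    }

    // Consumer side: read everything published so far, in sequence order.
    @SuppressWarnings("unchecked")
    List<T> drain() {
        List<T> out = new ArrayList<>();
        while (consumed < published) {
            consumed++;
            out.add((T) slots[(int) (consumed % slots.length)]);
        }
        return out;
    }
}
```

Usage: long txid = rb.next(); rb.load(txid, entry); rb.publish(txid); appends and sync requests share the same buffer, so the consumer sees them in txid order.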

The KeyValues mentioned earlier are actually wrapped in the WALEdit: WALEdit holds a field ArrayList<Cell> cells, and KeyValue is an implementation of Cell.

sync source code (the original calls many small helper methods; for readability they are merged into one method here):

```java
public void sync(boolean forceSync) throws IOException {
  try (TraceScope scope = TraceUtil.createTrace("FSHLog.sync")) {
    // claim a sequence number from the RingBuffer
    long sequence = this.disruptor.getRingBuffer().next();
    SyncFuture syncFuture = publishSyncOnRingBuffer(sequence, forceSync);
    // after publishing to the RingBuffer, block until the sync to disk has completed
    blockOnSync(syncFuture);
  }
}

// What sync actually publishes is a SyncFuture, which can be thought of as a "flush to disk" marker
protected SyncFuture publishSyncOnRingBuffer(long sequence, boolean forceSync) {
  // here we use ring buffer sequence as transaction id.
  SyncFuture syncFuture = getSyncFuture(sequence).setForceSync(forceSync);
  try {
    RingBufferTruck truck = this.disruptor.getRingBuffer().get(sequence);
    truck.load(syncFuture);
  } finally {
    this.disruptor.getRingBuffer().publish(sequence);
  }
  return syncFuture;
}

protected final void blockOnSync(SyncFuture syncFuture) throws IOException {
  // Now we have published the ringbuffer, halt the current thread until we get an answer back.
  try {
    if (syncFuture != null) {
      if (closed) {
        throw new IOException("WAL has been closed");
      } else {
        // woken up after RingBufferEventHandler consumes the sync event
        // and a SyncRunner releases this future
        syncFuture.get(walSyncTimeoutNs);
      }
    }
  } catch (TimeoutIOException tioe) {
    // catch clauses omitted
  }
}
```
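publishSyncOnRingBuffer and blockOnSync form a publish-then-wait handshake between the RPC handler thread and the WAL's background threads. A minimal sketch, assuming java.util.concurrent.CompletableFuture as a stand-in for HBase's SyncFuture and a LinkedBlockingQueue as a stand-in for the ring buffer; SyncHandshakeSketch is hypothetical, and its consumer completes the future where a real WAL would call hflush/hsync.

```java
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;

// Hypothetical sketch of "publish a sync request, then block until it is acknowledged".
class SyncHandshakeSketch {
    // A sync request: the txid to make durable plus the future the caller waits on.
    record SyncRequest(long txid, CompletableFuture<Long> done) {}

    private final BlockingQueue<SyncRequest> queue = new LinkedBlockingQueue<>();
    private final Thread consumer;

    SyncHandshakeSketch() {
        consumer = new Thread(() -> {
            try {
                while (true) {
                    SyncRequest req = queue.take();
                    // A real WAL would hflush/hsync the file here before acknowledging.
                    req.done().complete(req.txid());  // wake up the waiting caller
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();   // exit quietly on close()
            }
        });
        consumer.setDaemon(true);
        consumer.start();
    }

    // Publish a sync request and block until it is acknowledged or the timeout expires.
    long sync(long txid, long timeoutMs)
            throws InterruptedException, ExecutionException, TimeoutException {
        SyncRequest req = new SyncRequest(txid, new CompletableFuture<>());
        queue.put(req);
        return req.done().get(timeoutMs, TimeUnit.MILLISECONDS);
    }

    void close() {
        consumer.interrupt();
    }
}
```

As in blockOnSync, the timeout matters: if the background side stalls, the caller gets a TimeoutException instead of hanging forever.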

Core code of FSHLog.RingBufferEventHandler#onEvent:

```java
public void onEvent(final RingBufferTruck truck, final long sequence, boolean endOfBatch)
    throws Exception {
  try {
    if (truck.type() == RingBufferTruck.Type.SYNC) {
      this.syncFutures[this.syncFuturesCount.getAndIncrement()] = truck.unloadSync();
      // Collect a batch of SyncFutures for efficiency. The batch should not be too large,
      // otherwise RegionServer RPC handler threads stay blocked in SyncFuture.get() longer.
      if (this.syncFuturesCount.get() == this.syncFutures.length) {
        endOfBatch = true;
      }
    } else if (truck.type() == RingBufferTruck.Type.APPEND) {
      FSWALEntry entry = truck.unloadAppend();
      try {
        append(entry);
      } catch (Exception e) {
        //...
      }
    } else {
      //...
    }
    //.......
    // pick the next SyncRunner thread round-robin
    this.syncRunnerIndex = (this.syncRunnerIndex + 1) % this.syncRunners.length;
    try {
      // hand the batched SyncFutures to that SyncRunner's blocking queue
      this.syncRunners[this.syncRunnerIndex].offer(sequence, this.syncFutures,
          this.syncFuturesCount.get());
    } catch (Exception e) {
      //...
    }
    //...
  } catch (Throwable t) {
    //...
  }
}
```
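The batching in onEvent can be sketched on its own: sync requests accumulate in a fixed-size array, and when the Disruptor signals end of batch (or the array is full) the whole batch goes to one runner queue chosen round-robin. SyncBatcherSketch and its fields are hypothetical; in particular, the early return while a batch is still open is an assumption of this sketch standing in for the code elided above.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;

// Hypothetical sketch: collect sync requests, then hand each batch to a runner round-robin.
class SyncBatcherSketch {
    private final long[] pending;                  // txids of collected sync requests
    private int pendingCount = 0;
    private final List<BlockingQueue<long[]>> runnerQueues = new ArrayList<>();
    private int runnerIndex = 0;

    SyncBatcherSketch(int maxBatch, int runners) {
        pending = new long[maxBatch];
        for (int i = 0; i < runners; i++) runnerQueues.add(new LinkedBlockingQueue<>());
    }

    // Called once per sync event; endOfBatch mimics the Disruptor's flag.
    void onSync(long txid, boolean endOfBatch) {
        pending[pendingCount++] = txid;
        if (pendingCount == pending.length) endOfBatch = true; // batch full: force a hand-off
        if (!endOfBatch) return;                                // keep collecting
        runnerIndex = (runnerIndex + 1) % runnerQueues.size();  // round-robin choice
        runnerQueues.get(runnerIndex).offer(Arrays.copyOf(pending, pendingCount));
        pendingCount = 0;
    }

    BlockingQueue<long[]> runnerQueue(int i) {
        return runnerQueues.get(i);
    }
}
```

A larger maxBatch means fewer filesystem syncs but, as the comment in onEvent warns, longer waits for the RPC threads blocked on their futures.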

Core code of FSHLog.SyncRunner#run:

```java
public void run() {
  long currentSequence;
  while (!isInterrupted()) {
    int syncCount = 0;
    try {
      while (true) {
        takeSyncFuture = null;
        // take the next task from the syncFutures queue
        takeSyncFuture = this.syncFutures.take();
        currentSequence = this.sequence;
        long syncFutureSequence = takeSyncFuture.getTxid();
        if (syncFutureSequence > currentSequence) {
          throw new IllegalStateException("currentSequence=" + currentSequence
              + ", syncFutureSequence=" + syncFutureSequence);
        }
        // The WAL consumer thread hands over several SyncFutures at once. The SyncRunner only
        // performs the sync represented by the newest one (the highest sequence ID) and
        // skips the earlier ones.
        long currentHighestSyncedSequence = highestSyncedTxid.get();
        if (currentSequence < currentHighestSyncedSequence) {
          // Skipped futures are released right here; shouldn't they be released together at the end?
          syncCount += releaseSyncFuture(takeSyncFuture, currentHighestSyncedSequence, null);
          continue;
        }
        break;
      }
      long start = System.nanoTime();
      Throwable lastException = null;
      try {
        // implemented in ProtobufLogWriter.sync, which calls hsync or hflush
        // on the FSDataOutputStream to persist the data
        writer.sync(useHsync);
      } catch (Exception e) {
        lastException = e; // logging omitted; the error is passed on to the waiting threads
      } finally {
        // wake up the waiting threads
        syncCount += releaseSyncFuture(takeSyncFuture, currentSequence, lastException);
        syncCount += releaseSyncFutures(currentSequence, lastException);
      }
      postSync(System.nanoTime() - start, syncCount);
    } catch (Exception e) {
      //...
    }
  }
}
```
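The core idea of SyncRunner#run is group commit: one physical sync for the highest txid in a batch makes every earlier txid durable too, so all futures in the batch can be released after a single sync, and requests already covered by an earlier sync are released without touching the disk. A hypothetical sketch under those assumptions, with syncUpTo standing in for writer.sync() and CompletableFuture standing in for SyncFuture:

```java
import java.util.List;
import java.util.concurrent.CompletableFuture;

// Hypothetical sketch of group commit: one sync covers every request with txid <= synced.
class GroupCommitSketch {
    record Pending(long txid, CompletableFuture<Long> done) {}

    private long highestSyncedTxid = -1;
    int physicalSyncs = 0;                 // how many times we "hit the disk"

    // Stand-in for writer.sync(): pretend everything up to txid is now durable.
    private void syncUpTo(long txid) {
        physicalSyncs++;
        highestSyncedTxid = Math.max(highestSyncedTxid, txid);
    }

    // Sync once for the highest txid in the batch (unless already covered),
    // then release every request in the batch.
    void runBatch(List<Pending> batch) {
        long maxTxid = -1;
        for (Pending p : batch) maxTxid = Math.max(maxTxid, p.txid());
        if (maxTxid > highestSyncedTxid) syncUpTo(maxTxid);
        for (Pending p : batch) p.done().complete(highestSyncedTxid); // wake waiting callers
    }
}
```

Five requests in one batch cost one sync; a later request for an already-synced txid costs none.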

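RS shutdown or restart

When an RS shuts down, it flushes all of its data to disk and moves its WAL files to oldWALs.

After a restart, regions are regrouped into WAL groups according to the corresponding group assignment rules.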

WAL records for metadata

Operations such as creating and dropping tables also need to be parsed from the WAL and replicated.

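Is task assignment based on scanning the HDFS file list accurate?

Yes.

After RSs are added or removed, or an RS is killed with kill -9, the logs in HDFS always correspond to the actual running servers.

After an RS is stopped with kill -9, its WALs are split into other directories. During splitting, the directory is named /hbase/WALs/RSName-splitting.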