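I recently finished a big-data graduation project, and Spring Boot was one of the frameworks it used. Because the project does offline (batch) data analysis, I chose Hive as the query component (for real-time analysis you would probably need Spark or HBase instead). This post explains how to configure Hive in a Spring Boot project.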

Modify the pom file

After creating the project, open pom.xml and add the following content.

<properties>

<project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>

<maven.compiler.source>1.7</maven.compiler.source>

<maven.compiler.target>1.7</maven.compiler.target>

</properties>

<dependencies>

<!-- Web project support.

Spring Boot simplifies dependency coordinates: import a single starter and it pulls in the many jars it needs.

spring-boot-starter-web, for example, contains the jars required by Spring, Spring MVC and Spring Boot itself. -->

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter</artifactId>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-test</artifactId>

<scope>test</scope>

</dependency>

<!-- Web project support (integrates Spring MVC) -->

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-web</artifactId>

</dependency>

<!-- JSP support -->

<!--<dependency>-->

<!--<groupId>org.apache.tomcat.embed</groupId>-->

<!--<artifactId>tomcat-embed-jasper</artifactId>-->

<!--</dependency>-->

<!-- MyBatis support -->

<dependency>

<groupId>org.mybatis.spring.boot</groupId>

<artifactId>mybatis-spring-boot-starter</artifactId>

<version>1.3.2</version>

</dependency>

<!-- Common Mapper (tk.mybatis) starter -->

<dependency>

<groupId>tk.mybatis</groupId>

<artifactId>mapper-spring-boot-starter</artifactId>

<version>2.0.2</version>

</dependency>

<!-- Connection pool (Druid) starter -->

<dependency>

<groupId>com.alibaba</groupId>

<artifactId>druid-spring-boot-starter</artifactId>

<version>1.1.10</version>

</dependency>

<!-- MySQL driver -->

<dependency>

<groupId>mysql</groupId>

<artifactId>mysql-connector-java</artifactId>

<version>5.1.46</version>

</dependency>

<!-- JDBC starter -->

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-jdbc</artifactId>

</dependency>

<!-- JSTL library for use in Spring Boot -->

<dependency>

<groupId>jstl</groupId>

<artifactId>jstl</artifactId>

<version>1.2</version>

</dependency>

<dependency>

<groupId>taglibs</groupId>

<artifactId>standard</artifactId>

<version>1.1.2</version>

</dependency>

<dependency>

<groupId>com.github.pagehelper</groupId>

<artifactId>pagehelper-spring-boot-starter</artifactId>

<version>1.2.5</version>

</dependency>

<!-- Hadoop dependencies -->

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-common</artifactId>

<version>2.6.0</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-mapreduce-client-core</artifactId>

<version>2.6.0</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-mapreduce-client-common</artifactId>

<version>2.6.0</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-hdfs</artifactId>

<version>2.6.0</version>

</dependency>

<dependency>

<groupId>jdk.tools</groupId>

<artifactId>jdk.tools</artifactId>

<version>1.8</version>

<scope>system</scope>

<systemPath>${java.home}/../lib/tools.jar</systemPath>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-configuration-processor</artifactId>

<optional>true</optional>

</dependency>

<!-- Hive JDBC dependency -->

<dependency>

<groupId>org.apache.hive</groupId>

<artifactId>hive-jdbc</artifactId>

<version>1.1.0</version>

<exclusions>

<exclusion>

<groupId>org.eclipse.jetty.aggregate</groupId>

<artifactId>*</artifactId>

</exclusion>

</exclusions>

</dependency>

</dependencies>
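Once the dependencies resolve, one quick way to confirm that the two JDBC drivers named in the configuration below actually made it onto the classpath is to try loading them. The following is a minimal sketch under that assumption; `ClasspathCheck` is a hypothetical helper, not part of the original project.

```java
// Hypothetical helper (not part of the original project): reports whether a class
// can be loaded from the current classpath, without running its static initializers.
final class ClasspathCheck {

    private ClasspathCheck() {
    }

    /** Returns true if the named class can be loaded by this class's class loader. */
    static boolean isOnClasspath(String className) {
        try {
            // initialize = false: only check that the class exists
            Class.forName(className, false, ClasspathCheck.class.getClassLoader());
            return true;
        } catch (ClassNotFoundException | LinkageError e) {
            return false;
        }
    }

    public static void main(String[] args) {
        // Driver class names as they appear in application.yml
        String[] drivers = {"org.apache.hive.jdbc.HiveDriver", "com.mysql.jdbc.Driver"};
        for (String driver : drivers) {
            System.out.println(driver + " on classpath: " + isOnClasspath(driver));
        }
    }
}
```

Run it with the project's runtime classpath (for example from a test inside the project); `false` for the Hive driver suggests the `hive-jdbc` dependency did not resolve.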

Add the configuration file application.yml

Add an application.yml file to the resources directory of your parent module:

# MyBatis configuration

mybatis:

  mapperLocations: classpath:/com/springboot/sixmonth/dao/mapper/**/*.xml

# Properties for multiple data sources (only two are configured here; add more as needed)

spring:

  datasource:

    mysqlMain: # primary MySQL data source, can be used by MyBatis

      type: com.alibaba.druid.pool.DruidDataSource

      url: jdbc:mysql://127.0.0.1:3306/youtobe_bigdata?useUnicode=true&characterEncoding=utf-8&zeroDateTimeBehavior=convertToNull&allowMultiQueries=true&useSSL=true&rewriteBatchedStatements=true

      username: root

      password: root

      driver-class-name: com.mysql.jdbc.Driver

    hive: # Hive data source

      url: jdbc:hive2://192.168.100.100:10000/youtube

      type: com.alibaba.druid.pool.DruidDataSource

      username: root

      password: 123456

      driver-class-name: org.apache.hive.jdbc.HiveDriver

    commonConfig: # shared connection pool settings, applied to every data source

      initialSize: 1

      minIdle: 1

      maxIdle: 5

      maxActive: 50

      maxWait: 10000

      timeBetweenEvictionRunsMillis: 10000

      minEvictableIdleTimeMillis: 300000

      validationQuery: select 'x'

      testWhileIdle: true

      testOnBorrow: false

      testOnReturn: false

      poolPreparedStatements: true

      maxOpenPreparedStatements: 20

      filters: stat

The configuration above only defines the Hive and MySQL data sources; add or remove entries as your project requires.
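The config classes read each data source block from a `Map<String, String>` using the keys exactly as written in the yml (for example `driver-class-name`), so a misspelled key does not fail at startup; the lookup simply returns `null`. A minimal fail-fast sketch, assuming you want missing keys reported early; `DataSourceKeyCheck` and its key list are hypothetical, not part of the original project or of Spring Boot:

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical check (not in the original project) for a data source map such as
// DataSourceProperties.getHive(); keys match the ones the config classes look up.
final class DataSourceKeyCheck {

    static final List<String> REQUIRED_KEYS =
            Arrays.asList("url", "username", "password", "driver-class-name");

    private DataSourceKeyCheck() {
    }

    /** Returns the required keys that are absent from the map (a null map lacks all of them). */
    static List<String> missingKeys(Map<String, String> props) {
        List<String> missing = new ArrayList<>();
        for (String key : REQUIRED_KEYS) {
            if (props == null || props.get(key) == null) {
                missing.add(key);
            }
        }
        return missing;
    }

    public static void main(String[] args) {
        // Mirrors the hive block of application.yml, but without the password entry
        Map<String, String> hive = new HashMap<>();
        hive.put("url", "jdbc:hive2://192.168.100.100:10000/youtube");
        hive.put("username", "root");
        hive.put("driver-class-name", "org.apache.hive.jdbc.HiveDriver");
        System.out.println("missing: " + missingKeys(hive)); // prints missing: [password]
    }
}
```

Only absence is checked, not emptiness, because an empty password can be legitimate when HiveServer2 runs without authentication.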

Add the configuration classes

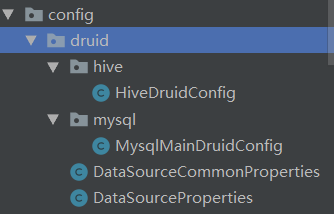

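I suggest putting these classes in the project's dao layer, i.e. the layer that connects to the database.

The directory structure is as follows: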

HiveDruidConfig

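import com.alibaba.druid.pool.DruidDataSource;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.beans.factory.annotation.Qualifier;

import org.springframework.boot.context.properties.EnableConfigurationProperties;

import org.springframework.context.annotation.Bean;

import org.springframework.context.annotation.Configuration;

import javax.sql.DataSource;

import java.sql.SQLException;

// DataSourceProperties here is the custom class defined later in this post (same package),
// not Spring Boot's org.springframework.boot.autoconfigure.jdbc.DataSourceProperties.

@Configuration

@EnableConfigurationProperties({DataSourceProperties.class, DataSourceCommonProperties.class}) // registers the properties classes as beans so their @ConfigurationProperties binding takes effect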

public class HiveDruidConfig {

private static Logger logger = LoggerFactory.getLogger(HiveDruidConfig.class);

@Autowired

private DataSourceProperties dataSourceProperties;

@Autowired

private DataSourceCommonProperties dataSourceCommonProperties;

@Bean("hiveDruidDataSource") // creates the bean instance

@Qualifier("hiveDruidDataSource") // qualifier used to select this data source at injection points

public DataSource dataSource(){

DruidDataSource datasource = new DruidDataSource();

// Data-source-specific properties

datasource.setUrl(dataSourceProperties.getHive().get("url"));

datasource.setUsername(dataSourceProperties.getHive().get("username"));

datasource.setPassword(dataSourceProperties.getHive().get("password"));

datasource.setDriverClassName(dataSourceProperties.getHive().get("driver-class-name"));

// Shared pool properties

datasource.setInitialSize(dataSourceCommonProperties.getInitialSize());

datasource.setMinIdle(dataSourceCommonProperties.getMinIdle());

datasource.setMaxActive(dataSourceCommonProperties.getMaxActive());

datasource.setMaxWait(dataSourceCommonProperties.getMaxWait());

datasource.setTimeBetweenEvictionRunsMillis(dataSourceCommonProperties.getTimeBetweenEvictionRunsMillis());

datasource.setMinEvictableIdleTimeMillis(dataSourceCommonProperties.getMinEvictableIdleTimeMillis());

datasource.setValidationQuery(dataSourceCommonProperties.getValidationQuery());

datasource.setTestWhileIdle(dataSourceCommonProperties.isTestWhileIdle());

datasource.setTestOnBorrow(dataSourceCommonProperties.isTestOnBorrow());

datasource.setTestOnReturn(dataSourceCommonProperties.isTestOnReturn());

datasource.setPoolPreparedStatements(dataSourceCommonProperties.isPoolPreparedStatements());

try {

datasource.setFilters(dataSourceCommonProperties.getFilters());

} catch (SQLException e) {

logger.error("Druid configuration initialization filter error.", e);

}

return datasource;

}

}

MysqlMainDruidConfig

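import com.alibaba.druid.pool.DruidDataSource;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.beans.factory.annotation.Qualifier;

import org.springframework.boot.context.properties.EnableConfigurationProperties;

import org.springframework.context.annotation.Bean;

import org.springframework.context.annotation.Configuration;

import org.springframework.context.annotation.Primary;

import javax.sql.DataSource;

import java.sql.SQLException;

@Configuration

@EnableConfigurationProperties({DataSourceProperties.class, DataSourceCommonProperties.class}) // registers the properties classes as beans so their @ConfigurationProperties binding takes effect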

public class MysqlMainDruidConfig {

private static Logger logger = LoggerFactory.getLogger(MysqlMainDruidConfig.class);

@Autowired

private DataSourceProperties dataSourceProperties;

@Autowired

private DataSourceCommonProperties dataSourceCommonProperties;

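@Primary // marks this as the primary data source (only one bean can be primary); MyBatis uses the primary data source by default

@Bean("mysqlDruidDataSource") // creates the bean instance

@Qualifier("mysqlDruidDataSource") // qualifier used to select this data source at injection points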

public DataSource dataSource(){

DruidDataSource datasource = new DruidDataSource();

// Data-source-specific properties

datasource.setUrl(dataSourceProperties.getMysqlMain().get("url"));

datasource.setUsername(dataSourceProperties.getMysqlMain().get("username"));

datasource.setPassword(dataSourceProperties.getMysqlMain().get("password"));

datasource.setDriverClassName(dataSourceProperties.getMysqlMain().get("driver-class-name"));

// Shared pool properties

datasource.setInitialSize(dataSourceCommonProperties.getInitialSize());

datasource.setMinIdle(dataSourceCommonProperties.getMinIdle());

datasource.setMaxActive(dataSourceCommonProperties.getMaxActive());

datasource.setMaxWait(dataSourceCommonProperties.getMaxWait());

datasource.setTimeBetweenEvictionRunsMillis(dataSourceCommonProperties.getTimeBetweenEvictionRunsMillis());

datasource.setMinEvictableIdleTimeMillis(dataSourceCommonProperties.getMinEvictableIdleTimeMillis());

datasource.setValidationQuery(dataSourceCommonProperties.getValidationQuery());

datasource.setTestWhileIdle(dataSourceCommonProperties.isTestWhileIdle());

datasource.setTestOnBorrow(dataSourceCommonProperties.isTestOnBorrow());

datasource.setTestOnReturn(dataSourceCommonProperties.isTestOnReturn());

datasource.setPoolPreparedStatements(dataSourceCommonProperties.isPoolPreparedStatements());

try {

datasource.setFilters(dataSourceCommonProperties.getFilters());

} catch (SQLException e) {

logger.error("Druid configuration initialization filter error.", e);

}

return datasource;

}

}
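Both config classes copy the same `commonConfig` values into their pools. Note that `DataSourceCommonProperties` defaults `initialSize` to 10, so if the yml omitted `initialSize` while setting a small `maxActive`, the sizes would contradict each other. The sketch below is a hypothetical check of our own (not Druid's built-in validation) that could run before the values are applied:

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical sanity check (our own, not Druid's validation) for the shared
// commonConfig pool sizes; parameter names follow DataSourceCommonProperties.
final class PoolSettingsCheck {

    private PoolSettingsCheck() {
    }

    /** Returns a list of problems; an empty list means the sizes are consistent. */
    static List<String> problems(int initialSize, int minIdle, int maxActive) {
        List<String> problems = new ArrayList<>();
        if (maxActive <= 0) {
            problems.add("maxActive must be positive");
        }
        if (minIdle > maxActive) {
            problems.add("minIdle must not exceed maxActive");
        }
        if (initialSize > maxActive) {
            problems.add("initialSize must not exceed maxActive");
        }
        return problems;
    }

    public static void main(String[] args) {
        // Values from the commonConfig block of application.yml
        System.out.println(problems(1, 1, 50)); // prints []
        // initialSize left at the class default of 10 with a small pool
        System.out.println(problems(10, 1, 5)); // prints [initialSize must not exceed maxActive]
    }
}
```

One option is to call it at the top of each `dataSource()` method and throw an `IllegalStateException` when the list is not empty, so a bad configuration stops startup with a readable message.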

DataSourceCommonProperties

import org.springframework.boot.context.properties.ConfigurationProperties;

@ConfigurationProperties(prefix = "spring.datasource.commonconfig", ignoreUnknownFields = false)

public class DataSourceCommonProperties {

// final static String DS = "spring.datasource.commonConfig";

private int initialSize = 10;

private int minIdle;

private int maxIdle;

private int maxActive;

private int maxWait;

private int timeBetweenEvictionRunsMillis;

private int minEvictableIdleTimeMillis;

private String validationQuery;

private boolean testWhileIdle;

private boolean testOnBorrow;

private boolean testOnReturn;

private boolean poolPreparedStatements;

private int maxOpenPreparedStatements;

private String filters;

private String mapperLocations;

private String typeAliasPackage;

// Getters and setters (Spring Boot binds the properties through the setters)

//

// public static String getDS() {

// return DS;

// }

public int getInitialSize() {

return initialSize;

}

public void setInitialSize(int initialSize) {

this.initialSize = initialSize;

}

public int getMinIdle() {

return minIdle;

}

public void setMinIdle(int minIdle) {

this.minIdle = minIdle;

}

public int getMaxIdle() {

return maxIdle;

}

public void setMaxIdle(int maxIdle) {

this.maxIdle = maxIdle;

}

public int getMaxActive() {

return maxActive;

}

public void setMaxActive(int maxActive) {

this.maxActive = maxActive;

}

public int getMaxWait() {

return maxWait;

}

public void setMaxWait(int maxWait) {

this.maxWait = maxWait;

}

public int getTimeBetweenEvictionRunsMillis() {

return timeBetweenEvictionRunsMillis;

}

public void setTimeBetweenEvictionRunsMillis(int timeBetweenEvictionRunsMillis) {

this.timeBetweenEvictionRunsMillis = timeBetweenEvictionRunsMillis;

}

public int getMinEvictableIdleTimeMillis() {

return minEvictableIdleTimeMillis;

}

public void setMinEvictableIdleTimeMillis(int minEvictableIdleTimeMillis) {

this.minEvictableIdleTimeMillis = minEvictableIdleTimeMillis;

}

public String getValidationQuery() {

return validationQuery;

}

public void setValidationQuery(String validationQuery) {

this.validationQuery = validationQuery;

}

public boolean isTestWhileIdle() {

return testWhileIdle;

}

public void setTestWhileIdle(boolean testWhileIdle) {

this.testWhileIdle = testWhileIdle;

}

public boolean isTestOnBorrow() {

return testOnBorrow;

}

public void setTestOnBorrow(boolean testOnBorrow) {

this.testOnBorrow = testOnBorrow;

}

public boolean isTestOnReturn() {

return testOnReturn;

}

public void setTestOnReturn(boolean testOnReturn) {

this.testOnReturn = testOnReturn;

}

public boolean isPoolPreparedStatements() {

return poolPreparedStatements;

}

public void setPoolPreparedStatements(boolean poolPreparedStatements) {

this.poolPreparedStatements = poolPreparedStatements;

}

public int getMaxOpenPreparedStatements() {

return maxOpenPreparedStatements;

}

public void setMaxOpenPreparedStatements(int maxOpenPreparedStatements) {

this.maxOpenPreparedStatements = maxOpenPreparedStatements;

}

public String getFilters() {

return filters;

}

public void setFilters(String filters) {

this.filters = filters;

}

public String getMapperLocations() {

return mapperLocations;

}

public void setMapperLocations(String mapperLocations) {

this.mapperLocations = mapperLocations;

}

public String getTypeAliasPackage() {

return typeAliasPackage;

}

public void setTypeAliasPackage(String typeAliasPackage) {

this.typeAliasPackage = typeAliasPackage;

}

}

DataSourceProperties

import org.springframework.boot.context.properties.ConfigurationProperties;

import java.util.Map;

@ConfigurationProperties(prefix = DataSourceProperties.DS, ignoreUnknownFields = false)

public class DataSourceProperties {

final static String DS = "spring.datasource";

private Map<String,String> mysqlMain;

private Map<String,String> hive;

private Map<String,String> commonConfig;

// Getters and setters (Spring Boot binds the properties through the setters)

public static String getDS() {

return DS;

}

public Map<String, String> getMysqlMain() {

return mysqlMain;

}

public void setMysqlMain(Map<String, String> mysqlMain) {

this.mysqlMain = mysqlMain;

}

public Map<String, String> getHive() {

return hive;

}

public void setHive(Map<String, String> hive) {

this.hive = hive;

}

public Map<String, String> getCommonConfig() {

return commonConfig;

}

public void setCommonConfig(Map<String, String> commonConfig) {

this.commonConfig = commonConfig;

}

}

Wrap the Hive query code

Create a class, for example TestHiveDao, with a method that takes an SQL (HQL) statement as a String and returns the query results as a list.

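import java.sql.Connection;

import java.sql.ResultSet;

import java.sql.SQLException;

import java.sql.Statement;

import java.util.ArrayList;

import java.util.List;

import javax.sql.DataSource;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.beans.factory.annotation.Qualifier;

import org.springframework.stereotype.Repository;

@Repository // registers TestHiveDao as a Spring bean so the data source below can be injected

public class TestHiveDao {

// The Hive connection pool defined in HiveDruidConfig

@Autowired

@Qualifier("hiveDruidDataSource")

private DataSource druidDataSource;

/**

* Generic helper: runs the given SQL (HQL) statement on Hive and returns the result set

* as a list, one tab-separated string per row.

* @param sql the SQL statement

* @return the rows of the result set

* @throws SQLException if obtaining a connection or running the query fails

*/

public List<String> splicer(String sql) throws SQLException {

// Holds the final result

List<String> list = new ArrayList<>();

// try-with-resources closes the result set and statement and returns the connection to the pool

try (Connection connection = druidDataSource.getConnection();

Statement statement = connection.createStatement();

ResultSet res = statement.executeQuery(sql)) {

// Number of columns in the result

int count = res.getMetaData().getColumnCount();

System.out.println("count:" + count);

while (res.next()) {

// One row of data, with the fields separated by tabs

StringBuilder row = new StringBuilder();

for (int i = 1; i <= count; i++) {

if (i > 1) {

row.append('\t');

}

row.append(res.getString(i));

}

// Add the row to the result list

list.add(row.toString());

}

}

return list;

}

}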
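The per-row logic in `splicer()` can be pulled out into a pure function, which makes it testable without a Hive connection. A minimal sketch; `RowFormat.joinRow` is a hypothetical name, and in the DAO the list would be filled from `res.getString(i)` for `i` from 1 to `count`:

```java
import java.util.Arrays;
import java.util.List;

// Hypothetical pure version (not in the original project) of the row formatting
// done inside splicer(): column values joined by tab characters.
final class RowFormat {

    private RowFormat() {
    }

    /** Joins one row's column values with tabs; a SQL NULL (Java null) is written as "null". */
    static String joinRow(List<String> columns) {
        StringBuilder sb = new StringBuilder();
        for (int i = 0; i < columns.size(); i++) {
            if (i > 0) {
                sb.append('\t');
            }
            sb.append(columns.get(i)); // StringBuilder.append(null) appends "null"
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        System.out.println(joinRow(Arrays.asList("id", "views", "rate")));
    }
}
```

Writing `"null"` for SQL NULL matches what the string concatenation in the original version produced; map it to another placeholder if downstream code needs one.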

Whenever we want to run an HQL query through Hive, we simply call this method and get the results we need.

The returned result looks like the console output below.

Pass in an HQL statement as a string, and the returned list contains one element per row of the query result. Pretty convenient, right?
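Because each returned element is one row with its columns joined by tabs, a caller can split it back into columns. A minimal sketch, assuming no column value itself contains a tab; `RowSplit` is a hypothetical helper, not part of the original project:

```java
// Hypothetical helper (not in the original project): splits one line returned by
// splicer() back into its column values.
final class RowSplit {

    private RowSplit() {
    }

    /** Splits a tab-separated row; limit -1 keeps trailing empty columns. */
    static String[] columns(String line) {
        return line.split("\t", -1);
    }

    public static void main(String[] args) {
        String[] cols = columns("a\tb\t");
        System.out.println(cols.length); // prints 3
    }
}
```

The `-1` limit matters: with the default `split("\t")`, a row whose last column is empty would lose that column and come back one element short.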

That's all for this post. If you found it useful, please leave a like, and if you're interested in big data technology, feel free to follow 小菌~ (✪ω✪)