Paper Title: Deep Learning for Single Image Super-Resolution: A Brief Review

I. Sections

Section 2: Background concepts

Section 3: Efficient neural network architectures for SISR

Section 4: Different applications of SR

II. Background

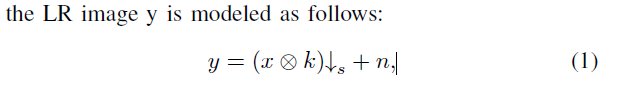

1.

2. Interpolation-based SISR methods, such as bicubic interpolation [10] and Lanczos resampling [11]

3. Learning-based SISR methods:

3.1. The Markov random field (MRF) [16] approach was first adopted by Freeman et al. to exploit the abundant real-world images to synthesize visually pleasing image textures.

3.2. Neighbor embedding methods [17], proposed by Chang et al., took advantage of the similar local geometry between LR and HR to restore HR image patches.

3.3. Inspired by sparse signal recovery theory [18], researchers applied sparse coding methods [19], [20], [21], [22], [23], [24] to SISR problems.

3.4. More recently, random forests [25] have also been used to improve reconstruction performance.

3.5. Meanwhile, many works combined the merits of reconstruction-based methods with learning-based approaches to further reduce the artifacts introduced by external training examples [26], [27], [28], [29].

4. Deep learning:

artificial neural network (ANN) [32] -> convolutional neural network (CNN) [35] -> recurrent neural network (RNN) [36] -> pretraining the DNN with a restricted Boltzmann machine (RBM) -> deep Boltzmann machine (DBM) [42], variational autoencoder (VAE) [43], [44], and generative adversarial nets (GAN) [45]

III. DEEP ARCHITECTURES FOR SISR

This section discusses the efficient architectures proposed for SISR in recent years.

A. Benchmark of Deep Architecture for SISR

- SRCNN: a three-layer CNN, where the filter sizes of the layers are 64 × 1 × 9 × 9, 32 × 64 × 5 × 5, and 1 × 32 × 5 × 5.

Loss function: mean squared error (MSE).

- Problems:

① The first question: can we design CNN architectures that take the LR image directly as input to address these problems?

② How can we design such models of greater complexity?
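The SRCNN filter sizes and the MSE loss noted above can be checked with a few lines of plain Python. This is only a sketch: it assumes each filter size is ordered as output channels × input channels × height × width (the channel counts chain as 1 -> 64 -> 32 -> 1 under that reading), and it assumes one bias per output channel, which the notes do not state. The helper names are made up for illustration.

```python
# Filter shapes as listed in the notes, read as
# (out_channels, in_channels, kernel_height, kernel_width) -- an assumed ordering.
SRCNN_FILTERS = [(64, 1, 9, 9), (32, 64, 5, 5), (1, 32, 5, 5)]


def channels_chain_ok(filters):
    """Check that each layer's input channels equal the previous layer's outputs."""
    return all(prev[0] == nxt[1] for prev, nxt in zip(filters, filters[1:]))


def count_weights(filters):
    """Number of convolution weights, excluding biases."""
    return sum(o * i * h * w for o, i, h, w in filters)


def count_biases(filters):
    """One bias per output channel (an assumption, not stated in the notes)."""
    return sum(o for o, _, _, _ in filters)


def mse(pred, target):
    """Mean squared error between two equally sized flat pixel sequences."""
    if len(pred) != len(target):
        raise ValueError("pred and target must have the same length")
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)


if __name__ == "__main__":
    print("channels chain:", channels_chain_ok(SRCNN_FILTERS))
    print("weights:", count_weights(SRCNN_FILTERS))
    print("biases:", count_biases(SRCNN_FILTERS))
    print("mse:", mse([0.0, 1.0], [0.0, 3.0]))
```

Under these assumptions the network is very small, which helps explain why the second question asks about models of greater complexity.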

B. State-of-the-Art Deep SISR Networks

Learning Effective Upsampling with CNN:

- Upsampling operation: deconvolution [50] or transposed convolution [51]

Given the upsampling factor, the deconvolution layer is composed of an arbitrary interpolation operator (usually nearest-neighbor interpolation, chosen for simplicity) followed by a convolution operator with a stride of 1, as shown in Fig. 3. (In short: nearest-neighbor interpolation, then multiplication by the convolution kernel.)
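The two-step description above (interpolate, then convolve with stride 1) can be sketched in plain Python on nested lists. This illustrates the description in these notes, not any particular framework's transposed-convolution layer. The function names, the zero padding at the borders, and the restriction to odd kernel sizes are assumptions made for the example.

```python
def nearest_upsample(img, factor):
    """Nearest-neighbor interpolation: repeat every pixel `factor` times on both axes."""
    out = []
    for row in img:
        wide = [v for v in row for _ in range(factor)]
        out.extend(list(wide) for _ in range(factor))
    return out


def conv2d_same(img, kernel):
    """Stride-1 2D cross-correlation with zero padding (odd kernel sizes assumed)."""
    kh, kw = len(kernel), len(kernel[0])
    ph, pw = kh // 2, kw // 2
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0.0
            for i in range(kh):
                for j in range(kw):
                    yy, xx = y + i - ph, x + j - pw
                    # Pixels outside the image count as zero.
                    if 0 <= yy < h and 0 <= xx < w:
                        acc += img[yy][xx] * kernel[i][j]
            out[y][x] = acc
    return out


def upsample_layer(img, kernel, factor):
    """Interpolation followed by a stride-1 convolution, as described in the notes."""
    return conv2d_same(nearest_upsample(img, factor), kernel)


if __name__ == "__main__":
    lr = [[1, 2], [3, 4]]
    identity = [[0, 0, 0], [0, 1, 0], [0, 0, 0]]
    for row in upsample_layer(lr, identity, 2):
        print(row)
```

In a trained network the kernel would be learned; the identity kernel here only makes the interpolation step visible in the output.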