Deploying Enterprise-Grade Highly Available Kubernetes with Kubeadm (for Alibaba Cloud ECS)

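1. Preparation

Introduction

There are many ways to install Kubernetes. This tutorial follows the official documentation and practice from running business workloads on an enterprise cloud. Every component was chosen after repeated testing for its stability:

- CentOS 7.6 or later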

- Kubernetes 1.16.0

- Docker 18.09.7

- flannel 3.3

- nginx-ingress 1.5.3

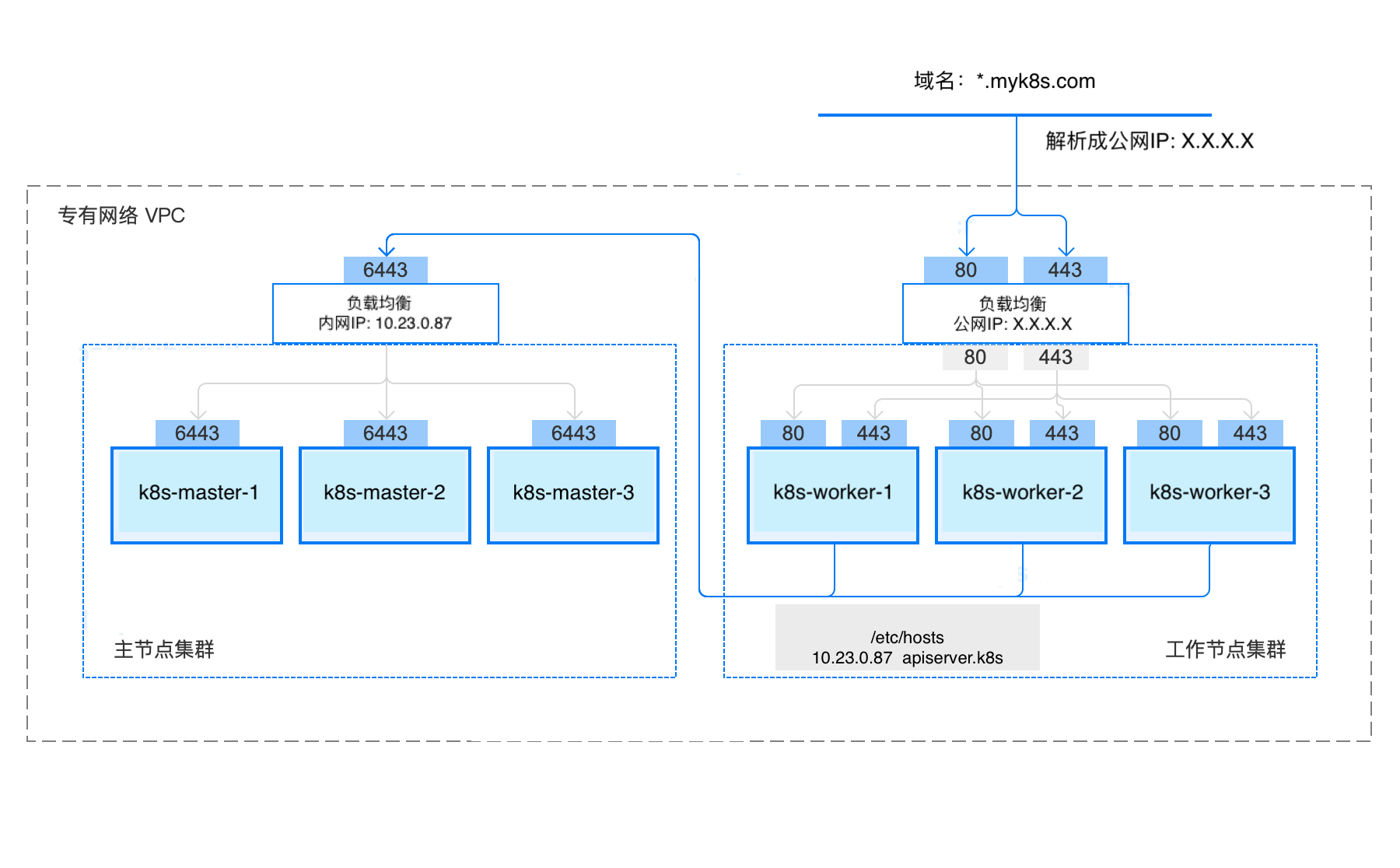

- 三个 master 组成主节点集群,通过内网SLB实现负载均衡

- 多个 worker 组成工作节点集群,通过外网SLB实现负载均衡

拓扑图

Host configuration

| Instance name | IP address | Notes |
|---|---|---|
| k8s-master-1 | 10.23.0.69 | 4 vCPU, 8 GB RAM, 100 GB disk |
| k8s-master-2 | 10.23.0.79 | 4 vCPU, 8 GB RAM, 100 GB disk |
| k8s-master-3 | 10.23.0.81 | 4 vCPU, 8 GB RAM, 100 GB disk |
| Internal SLB | 10.23.0.87 | Assigned automatically |

System environment configuration

Disable the swap partition and firewalld, and enable time synchronization (on every node)
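The original lists this step without commands. The following is a minimal sketch for CentOS 7. It assumes that swap is configured in /etc/fstab and that chrony is used for time synchronization (the document does not name a tool). `disable_swap_in_fstab` and `prepare_node` are hypothetical helpers:

```shell
#!/usr/bin/env bash
# Hypothetical helpers for the swap/firewalld/time-sync step (CentOS 7 assumed).

# Comment out active swap entries in an fstab-style file so swap stays off after a reboot.
disable_swap_in_fstab() {
  local fstab="${1:-/etc/fstab}"
  # Prefix '#' to every uncommented line that has "swap" as a whitespace-separated field.
  # Already-commented lines do not match, so running it twice is harmless.
  sed -i -E '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$fstab"
}

# Apply the whole step on the current node (must run as root).
prepare_node() {
  swapoff -a                         # kubelet refuses to start while swap is on (default --fail-swap-on)
  disable_swap_in_fstab /etc/fstab   # keep swap off after reboot
  systemctl stop firewalld && systemctl disable firewalld
  yum install -y chrony              # assumption: chrony as the time-sync daemon
  systemctl enable chronyd && systemctl start chronyd
}
```

Run `prepare_node` as root on every node. The fstab helper takes a path argument, so you can try it on a copy of the file first.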

Set the hostname (on each node, using that node's own name; k8s-master-1 is shown)

```shell
hostnamectl set-hostname k8s-master-1
```

Disable SELinux

```shell
sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config && setenforce 0
```

Add the cluster hosts to /etc/hosts

```
10.23.0.69 k8s-master-1
10.23.0.79 k8s-master-2
10.23.0.81 k8s-master-3
10.23.0.63 k8s-worker-1
```
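Appending with `echo ... >> /etc/hosts` leaves duplicate lines if a step is run twice. The following is an idempotent alternative, shown as a sketch. `add_host_entry` is a hypothetical helper, and it takes the file path as an argument so you can try it on a scratch file:

```shell
#!/usr/bin/env bash
# Append "IP NAME" to a hosts file unless NAME is already mapped on a non-comment line.
# It does not change an existing mapping that points NAME at a different IP.
add_host_entry() {
  local ip="$1" name="$2" hosts="${3:-/etc/hosts}"
  # awk exits 0 if NAME appears as a hostname field (field 2 onward) on an uncommented line.
  if awk -v n="$name" '!/^[ \t]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 } END { exit !found }' "$hosts"; then
    return 0
  fi
  printf '%s %s\n' "$ip" "$name" >> "$hosts"
}
```

For example, `add_host_entry 10.23.0.69 k8s-master-1` adds the first line of the table. On the masters, `add_host_entry 127.0.0.1 apiserver.k8s` can replace the plain `echo` used later.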

Load the IPVS kernel modules

```shell
cat > /etc/sysconfig/modules/ipvs.modules <<'EOF'
#!/bin/bash
modprobe -- ip_vs
modprobe -- ip_vs_rr
modprobe -- ip_vs_wrr
modprobe -- ip_vs_sh
modprobe -- nf_conntrack_ipv4
EOF
```

Run the script and confirm that the modules are loaded

```shell
chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep -e ip_vs -e nf_conntrack_ipv4
```
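The module list above targets the CentOS 7 kernel (3.10). In upstream Linux 4.19, nf_conntrack_ipv4 was merged into nf_conntrack, so `modprobe nf_conntrack_ipv4` fails on newer kernels. The following is a hedged sketch that picks the module name from the kernel release. `conntrack_module` is a hypothetical helper. Distribution kernels that backport the merge would need the check adjusted, and which ones do so is not covered here:

```shell
#!/usr/bin/env bash
# Print the conntrack module name for a kernel release (defaults to the running kernel).
# Upstream 4.19 merged nf_conntrack_ipv4 into nf_conntrack.
conntrack_module() {
  local release="${1:-$(uname -r)}"
  local base="${release%%-*}"   # "3.10.0-957.el7.x86_64" -> "3.10.0"
  # sort -V lists the smaller version first; if that is 4.19, then base >= 4.19.
  if [ "$(printf '%s\n' "4.19" "$base" | sort -V | head -n 1)" = "4.19" ]; then
    echo nf_conntrack
  else
    echo nf_conntrack_ipv4
  fi
}
```

In the modules file, `modprobe -- "$(conntrack_module)"` would then replace the hard-coded last line.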

Create the SLB for the API server

Listener port: 6443. Backend servers: all master nodes (enable SOURCE_ADDRESS session persistence, i.e. by client source IP).

2. Install services

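Install Docker

Install Docker with the official install script through the Aliyun mirror, then start it. The introduction targets Docker 18.09.7, but the script installs the current stable release, so check the installed version with `docker version`:

```shell
curl -fsSL https://get.docker.com | bash -s docker --mirror Aliyun
systemctl enable docker && systemctl start docker
```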

Configure /etc/docker/daemon.json

```shell
cat > /etc/docker/daemon.json <<'EOF'
{
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m"
  },
  "storage-driver": "overlay2",
  "storage-opts": [
    "overlay2.override_kernel_check=true"
  ],
  "registry-mirrors": ["https://uyah70su.mirror.aliyuncs.com"]
}
EOF
```
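kubelet and Docker should use the same cgroup driver. With the daemon.json above, that driver is systemd, and kubeadm 1.16 prints a preflight warning when Docker reports cgroupfs. The following small check runs after Docker has been restarted and uses the standard `CgroupDriver` field of `docker info --format`. `cgroup_driver_is_systemd` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Succeed when Docker's cgroup driver is systemd.
# Pass a driver name to test the logic without Docker; otherwise docker info is queried.
cgroup_driver_is_systemd() {
  local driver="${1:-$(docker info --format '{{.CgroupDriver}}' 2>/dev/null)}"
  [ "$driver" = "systemd" ]
}
```

For example: `cgroup_driver_is_systemd || echo "Docker is not using the systemd cgroup driver"`.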

Reload and restart Docker

```shell
systemctl daemon-reload && systemctl restart docker
```

Install kubeadm, kubelet and kubectl

Configure the Aliyun Kubernetes yum repository

```shell
cat > /etc/yum.repos.d/kubernetes.repo <<'EOF'
[kubernetes]
name=Kubernetes Repo
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=0
EOF
```

Install the matching versions

```shell
yum install -y kubelet-1.16.0-0 kubeadm-1.16.0-0 kubectl-1.16.0-0 --disableexcludes=kubernetes
```

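Enable kubelet at boot. Do not start it yet: it keeps failing until kubeadm has initialized the node, and kubeadm starts it automatically.

```shell
systemctl enable kubelet.service
```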

3. Initialize the first master node

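Add a hosts entry. On the masters, apiserver.k8s (the controlPlaneEndpoint) resolves to 127.0.0.1, so each master talks to its own kube-apiserver. The workers later point the same name at the internal SLB instead. A common reason for this on Alibaba Cloud is that a backend server of a layer-4 SLB cannot reach the SLB address it sits behind.

```shell
echo "127.0.0.1 apiserver.k8s" >> /etc/hosts
```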

Create the initialization configuration file

```shell
cat > kubeadm-config.yaml <<'EOF'
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: v1.16.0
imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers
controlPlaneEndpoint: "apiserver.k8s:6443"
networking:
  serviceSubnet: "179.10.0.0/16"
  podSubnet: "179.20.0.0/16"
  dnsDomain: "cluster.local"
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
featureGates:
  SupportIPVSProxyMode: true
mode: ipvs
EOF
```
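serviceSubnet, podSubnet and the VPC subnet of the ECS hosts must not overlap, and flannel's `Network` must equal podSubnet. The following is a hedged bash sketch of the overlap check for IPv4 CIDRs. `ip2int` and `cidrs_overlap` are hypothetical helpers. The 10.23.0.0/24 host subnet used in the example is an assumption, because the document only lists host addresses. Also note that 179.0.0.0/8 is public address space, not RFC 1918 private space, so traffic from the cluster to real Internet hosts inside these ranges may be misrouted:

```shell
#!/usr/bin/env bash
# Convert a dotted IPv4 address (no leading zeros) to a 32-bit integer.
ip2int() {
  local IFS=.
  local a b c d
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Succeed (exit 0) when two IPv4 CIDR blocks share at least one address.
# Two blocks overlap exactly when they agree on the bits of the shorter prefix.
cidrs_overlap() {
  local net1="${1%/*}" len1="${1#*/}" net2="${2%/*}" len2="${2#*/}"
  local len=$(( len1 < len2 ? len1 : len2 ))
  local mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  (( ( $(ip2int "$net1") & mask ) == ( $(ip2int "$net2") & mask ) ))
}
```

For this document's values, `cidrs_overlap 179.10.0.0/16 179.20.0.0/16 && echo overlap` prints nothing. Repeat the check for each pair, including the host subnet.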

Pull the images needed for initialization

```shell
kubeadm config images pull --config kubeadm-config.yaml
```

Run the initialization

```shell
kubeadm init --config=kubeadm-config.yaml --upload-certs
```

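Initialization has succeeded when output like the following appears. The first `kubeadm join` command adds further master (control-plane) nodes, and the second adds worker nodes.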

```
Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

  mkdir -p $HOME/.kube
  sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
  sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.
Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
  https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of the control-plane node running the following command on each as root:

  kubeadm join apiserver.k8s:6443 --token jjo28g.q573caofz5i9ef02 \
    --discovery-token-ca-cert-hash sha256:7b5a619a9270ec7c5843679c4bf1021ac4da5e61a3118a298c7c04e66d26e66a \
    --control-plane --certificate-key 81e3f41062e3a039c31339f25d7dac4a4f7b8bc8adbc278014919ed4615bede3

Please note that the certificate-key gives access to cluster sensitive data, keep it secret!
As a safeguard, uploaded-certs will be deleted in two hours; If necessary, you can use
"kubeadm init phase upload-certs --upload-certs" to reload certs afterward.

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join apiserver.k8s:6443 --token jjo28g.q573caofz5i9ef02 \
    --discovery-token-ca-cert-hash sha256:7b5a619a9270ec7c5843679c4bf1021ac4da5e61a3118a298c7c04e66d26e66a
```
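The token in these join commands is valid for 24 hours by default, and the uploaded certificates are deleted after two hours. Masters joined later therefore need fresh values. `kubeadm token create --print-join-command` only prints the worker form of the command. The following is a hedged sketch that appends the control-plane flags, taking the certificate key from the last line printed by `kubeadm init phase upload-certs --upload-certs`; check that this output layout holds on your version. `control_plane_join_cmd` is a hypothetical helper, run as root on an existing master:

```shell
#!/usr/bin/env bash
# Build a control-plane join command from a worker join command and a certificate key.
# Arguments are optional; when omitted, fresh values are requested from kubeadm.
control_plane_join_cmd() {
  local join="${1:-$(kubeadm token create --print-join-command)}"
  local key="${2:-$(kubeadm init phase upload-certs --upload-certs | tail -n 1)}"
  # Strip trailing whitespace before appending the control-plane flags.
  join="${join%"${join##*[![:space:]]}"}"
  printf '%s --control-plane --certificate-key %s\n' "$join" "$key"
}
```

Paste the printed command on the new master. Because it carries the certificate key, treat it as a secret.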

Follow the prompt:

```shell
mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config
```

Check the pods (CoreDNS stays Pending until a network plugin is installed)

```
[root@k8s-master-1 ~]# kubectl get pod -n kube-system -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
coredns-58cc8c89f4-45br7 0/1 Pending 0 7m12s <none> <none> <none> <none>
coredns-58cc8c89f4-8b7fv 0/1 Pending 0 7m12s <none> <none> <none> <none>
etcd-k8s-master-1 1/1 Running 0 6m11s 10.23.0.69 k8s-master-1 <none> <none>
kube-apiserver-k8s-master-1 1/1 Running 0 6m24s 10.23.0.69 k8s-master-1 <none> <none>
kube-controller-manager-k8s-master-1 1/1 Running 0 6m25s 10.23.0.69 k8s-master-1 <none> <none>
kube-proxy-rw96m 1/1 Running 0 7m12s 10.23.0.69 k8s-master-1 <none> <none>
kube-scheduler-k8s-master-1 1/1 Running 0 6m17s 10.23.0.69 k8s-master-1 <none> <none>
```

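Install a CNI network plugin so that CoreDNS can start (flannel is used here)

Download the manifest

```shell
wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml
```

In kube-flannel.yml, change the network in net-conf.json so that it matches podSubnet:

```
"Network": "179.20.0.0/16",
```

Create the flannel network

```shell
kubectl apply -f kube-flannel.yml
```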
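The `Network` edit can also be scripted; run it before `kubectl apply`. The stock kube-flannel.yml ships with `"Network": "10.244.0.0/16"` in its net-conf.json. The following is a sketch using sed. `set_flannel_network` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Replace the value of "Network" in a flannel manifest with the given CIDR.
# '#' is used as the sed delimiter because the CIDR contains '/'.
set_flannel_network() {
  local manifest="$1" cidr="$2"
  sed -i -E "s#(\"Network\": *\")[^\"]*\"#\1${cidr}\"#" "$manifest"
}
```

Usage: `set_flannel_network kube-flannel.yml 179.20.0.0/16`, then `grep '"Network"' kube-flannel.yml` to confirm the change.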

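4. Initialize the second and third master nodes

Run the following on master2 and master3.

Add a hosts entry

```shell
echo "127.0.0.1 apiserver.k8s" >> /etc/hosts
```

Run the master join command. If the token has expired, regenerate the command with `kubeadm token create --print-join-command`. That command prints the worker form, so append `--control-plane --certificate-key <key>` and take a fresh key from `kubeadm init phase upload-certs --upload-certs` (the uploaded certificates are deleted after two hours).

```shell
kubeadm join apiserver.k8s:6443 --token jjo28g.q573caofz5i9ef02 \
    --discovery-token-ca-cert-hash sha256:7b5a619a9270ec7c5843679c4bf1021ac4da5e61a3118a298c7c04e66d26e66a \
    --control-plane --certificate-key 81e3f41062e3a039c31339f25d7dac4a4f7b8bc8adbc278014919ed4615bede3
```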

```
This node has joined the cluster and a new control plane instance was created:

* Certificate signing request was sent to apiserver and approval was received.
* The Kubelet was informed of the new secure connection details.
* Control plane (master) label and taint were applied to the new node.
* The Kubernetes control plane instances scaled up.
* A new etcd member was added to the local/stacked etcd cluster.

To start administering your cluster from this node, you need to run the following as a regular user:

  mkdir -p $HOME/.kube
  sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
  sudo chown $(id -u):$(id -g) $HOME/.kube/config

Run 'kubectl get nodes' to see this node join the cluster.
```

Follow the prompt above:

```shell
mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config
```

Check the cluster status

```
[root@k8s-master-2 ~]# kubectl get nodes
NAME STATUS ROLES AGE VERSION
k8s-master-1 Ready master 64m v1.16.0
k8s-master-2 Ready master 54m v1.16.0
k8s-master-3 Ready master 50m v1.16.0
```

Check the pods

```
[root@k8s-master-2 ~]# kubectl get pod -A
NAMESPACE NAME READY STATUS RESTARTS AGE
kube-system coredns-67c766df46-qzgt2 1/1 Running 0 72m
kube-system coredns-67c766df46-zq76c 1/1 Running 0 72m
kube-system etcd-k8s-master-1 1/1 Running 0 71m
kube-system etcd-k8s-master-2 1/1 Running 0 62m
kube-system etcd-k8s-master-3 1/1 Running 0 58m
kube-system kube-apiserver-k8s-master-1 1/1 Running 0 71m
kube-system kube-apiserver-k8s-master-2 1/1 Running 0 62m
kube-system kube-apiserver-k8s-master-3 1/1 Running 0 58m
kube-system kube-controller-manager-k8s-master-1 1/1 Running 1 71m
kube-system kube-controller-manager-k8s-master-2 1/1 Running 0 62m
kube-system kube-controller-manager-k8s-master-3 1/1 Running 0 58m
kube-system kube-flannel-ds-amd64-754lv 1/1 Running 2 62m
kube-system kube-flannel-ds-amd64-9x5bk 1/1 Running 0 58m
kube-system kube-flannel-ds-amd64-cscmt 1/1 Running 0 69m
kube-system kube-proxy-8j4sk 1/1 Running 0 58m
kube-system kube-proxy-ct46p 1/1 Running 0 62m
kube-system kube-proxy-rdjrg 1/1 Running 0 72m
kube-system kube-scheduler-k8s-master-1 1/1 Running 1 71m
kube-system kube-scheduler-k8s-master-2 1/1 Running 0 62m
kube-system kube-scheduler-k8s-master-3 1/1 Running 0 58m
```

Check the etcd cluster status

```
[root@k8s-master-2 ~]# kubectl -n kube-system exec etcd-k8s-master-1 -- etcdctl \
    --endpoints=https://10.23.0.69:2379 \
    --ca-file=/etc/kubernetes/pki/etcd/ca.crt \
    --cert-file=/etc/kubernetes/pki/etcd/server.crt \
    --key-file=/etc/kubernetes/pki/etcd/server.key \
    cluster-health
member 87decc97a1526c9 is healthy: got healthy result from https://10.23.0.81:2379
member 689b586b03109caa is healthy: got healthy result from https://10.23.0.79:2379
member 74607e593fdaa944 is healthy: got healthy result from https://10.23.0.69:2379
```
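The v2-style flags (`--ca-file`, `cluster-health`) work here because the etcd 3.3 release used by Kubernetes 1.16 ships an etcdctl that defaults to the v2 API. To script this check, you can count the healthy-member lines and compare the result with the expected member count (three here). `count_healthy_members` is a hypothetical helper that reads that output on stdin:

```shell
#!/usr/bin/env bash
# Count "member ... is healthy: ..." lines in etcdctl cluster-health output read from stdin.
# grep -c prints 0 and exits 1 when nothing matches; "|| true" keeps the exit status 0.
count_healthy_members() {
  grep -c ' is healthy: ' || true
}
```

For example, pipe the `kubectl ... etcdctl ... cluster-health` command above into `count_healthy_members` and expect `3`.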

Verify IPVS (if it did not take effect during init, change it manually)

Check the kube-proxy log. Near the top, it should print `Using ipvs Proxier`:

```
[root@k8s-master-1 ~]# kubectl -n kube-system logs -f kube-proxy-h782c
I0210 02:43:15.059064 1 node.go:135] Successfully retrieved node IP: 10.23.0.79
I0210 02:43:15.059101 1 server_others.go:176] Using ipvs Proxier.
W0210 02:43:15.059254 1 proxier.go:420] IPVS scheduler not specified, use rr by default
I0210 02:43:15.059447 1 server.go:529] Version: v1.16.0
```
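The log check can be scripted, and the manual fix is only a ConfigMap edit. The following is a sketch. `kube_proxy_mode` is a hypothetical helper that reads a kube-proxy log on stdin. The fix in the comments assumes the kubeadm-created `kube-proxy` ConfigMap and the `k8s-app=kube-proxy` pod label:

```shell
#!/usr/bin/env bash
# Print "ipvs" if the kube-proxy log on stdin shows the IPVS proxier, otherwise "not-ipvs".
kube_proxy_mode() {
  if grep -q 'Using ipvs Proxier'; then
    echo ipvs
  else
    echo not-ipvs
  fi
}

# Example (replace the pod name with one from your cluster):
#   kubectl -n kube-system logs kube-proxy-h782c | kube_proxy_mode
# If it prints not-ipvs, set mode: "ipvs" in the ConfigMap and recreate the pods:
#   kubectl -n kube-system edit configmap kube-proxy
#   kubectl -n kube-system delete pod -l k8s-app=kube-proxy
```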

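5. Initialize the worker nodes

Add a hosts entry (on the workers, apiserver.k8s points to the internal SLB)

```shell
echo "10.23.0.87 apiserver.k8s" >> /etc/hosts
```

Run the worker join command. If the token has expired, regenerate the command with `kubeadm token create --print-join-command`.

```shell
kubeadm join apiserver.k8s:6443 --token uxafy2.8ri0m2jibivycllh --discovery-token-ca-cert-hash sha256:7b5a619a9270ec7c5843679c4bf1021ac4da5e61a3118a298c7c04e66d26e66a
```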

Copy the kubeconfig from a master to the worker. Run these commands on the master; without them, kubectl cannot be used on the worker:

```shell
ssh k8s-worker-1 mkdir -p /root/.kube
scp $HOME/.kube/config k8s-worker-1:/root/.kube/
ssh k8s-worker-1 'chown $(id -u):$(id -g) /root/.kube/config'
```

Check the node status

```
[root@k8s-worker-1 ~]# kubectl get nodes
NAME STATUS ROLES AGE VERSION
k8s-master-1 Ready master 42h v1.16.0
k8s-master-2 Ready master 42h v1.16.0
k8s-master-3 Ready master 42h v1.16.0
k8s-worker-1 Ready <none> 6m11s v1.16.0
```

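6. Ingress Controller

On a master node:

```shell
kubectl apply -f https://kuboard.cn/install-script/v1.16.2/nginx-ingress.yaml
```

Create a public SLB for ingress. Listener ports: 80 and 443. Backend servers: all worker nodes (enable SOURCE_ADDRESS session persistence).

Configure DNS: resolve the domain test.myk8s.com to the public SLB address.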

7. Test a cluster application (Tomcat as an example)

Create the Deployment, Service and Ingress

```shell
kubectl apply -f deployment/deployment-tomcat.yaml
```

deployment/deployment-tomcat.yaml:

```yaml
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: deployment-tomcat
spec:
  selector:
    matchLabels:
      app: tomcat
  replicas: 2
  template:
    metadata:
      labels:
        app: tomcat
    spec:
      containers:
        - name: tomcat
          image: rightctrl/tomcat
          imagePullPolicy: Always
          ports:
            - containerPort: 8080
---
kind: Service
apiVersion: v1
metadata:
  name: service-tomcat
spec:
  selector:
    app: tomcat
  ports:
    - protocol: TCP
      port: 8080
      targetPort: 8080
---
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: ingress-tomcat
spec:
  backend:
    serviceName: service-tomcat
    servicePort: 8080
```

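Create the host-based Ingress

```shell
kubectl apply -f ingress/ingress-tomcat.yaml
```

ingress/ingress-tomcat.yaml:

```yaml
kind: Ingress
apiVersion: extensions/v1beta1
metadata:
  name: ingress-tomcat
spec:
  rules:
    - host: test.myk8s.com
      http:
        paths:
          - path:
            backend:
              serviceName: service-tomcat
              servicePort: 8080
```

Both files define an Ingress named ingress-tomcat, so applying this file replaces the default-backend version from deployment-tomcat.yaml with the host rule. This is why the `kubectl describe` output below reflects only the host rule.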

Check whether the Ingress was created

```
[root@k8s-master-1 ~]# kubectl describe ingresses
Name:             ingress-tomcat
Namespace:        default
Address:
Default backend:  default-http-backend:80 (<none>)
Rules:
  Host            Path  Backends
  ----            ----  --------
  test.myk8s.com
                        service-tomcat:8080 (179.20.3.7:8080,179.20.3.8:8080)
Annotations:
  kubectl.kubernetes.io/last-applied-configuration: {"apiVersion":"extensions/v1beta1","kind":"Ingress","metadata":{"annotations":{},"name":"ingress-tomcat","namespace":"default"},"spec":{"rules":[{"host":"test.myk8s.com","http":{"paths":[{"backend":{"serviceName":"service-tomcat","servicePort":8080},"path":null}]}}]}}
Events:  <none>
```

Access the domain name
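Before DNS is in place, you can exercise the Ingress rule by sending the request straight to the public SLB with the expected Host header. The following is a sketch. `<public-SLB-IP>` is a placeholder, and `status_from_head` is a hypothetical helper that extracts the status code from `curl -I` output:

```shell
#!/usr/bin/env bash
# Print the HTTP status code from the first line of a response head read from stdin,
# e.g. "HTTP/1.1 200 OK" -> 200. The trailing CR of CRLF line endings is removed first.
status_from_head() {
  awk 'NR == 1 { sub(/\r$/, ""); print $2; exit }'
}

# Example:
#   curl -sI -H 'Host: test.myk8s.com' http://<public-SLB-IP>/ | status_from_head
```

Once the DNS record resolves, `curl -sI test.myk8s.com | status_from_head` should print `200`, matching the responses in the next section.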

8. Verify cluster high availability

Shut down master1. Node status can still be queried, and the service remains available.

```
[root@k8s-master-3 ~]# kubectl get node
NAME STATUS ROLES AGE VERSION
k8s-master-1 NotReady master 3d15h v1.16.0
k8s-master-2 Ready master 3d15h v1.16.0
k8s-master-3 Ready master 3d15h v1.16.0
k8s-worker-1 Ready <none> 44h v1.16.0

[root@k8s-master-3 ~]# curl -I test.myk8s.com
HTTP/1.1 200 OK
Date: Wed, 12 Feb 2020 03:17:31 GMT
Content-Type: text/html;charset=UTF-8
Connection: keep-alive
Vary: Accept-Encoding
Set-Cookie: SERVERID=668f1fc010a1560f1a6962e4c40b0c6a|1581477451|1581477451;Path=/
```

Then shut down master2 as well. Querying node status now fails because etcd has lost quorum (only one of three members is left), but the service remains available.

```
[root@k8s-master-3 ~]# kubectl get node
Error from server: etcdserver: request timed out

[root@k8s-master-3 ~]# curl -I test.myk8s.com
HTTP/1.1 200 OK
Date: Wed, 12 Feb 2020 03:18:59 GMT
Content-Type: text/html;charset=UTF-8
Connection: keep-alive
Vary: Accept-Encoding
Set-Cookie: SERVERID=668f1fc010a1560f1a6962e4c40b0c6a|1581477539|1581477539;Path=/
```
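The two results above follow from etcd's majority quorum. An etcd cluster of n voting members needs floor(n/2) + 1 of them to make progress, so it tolerates n - (floor(n/2) + 1) failures. With three stacked members, losing master1 leaves two (still a quorum). Losing master2 as well leaves one, so requests that need etcd time out. Meanwhile, the pods already running on the worker keep serving traffic through the ingress. The following sketch shows this arithmetic; `etcd_quorum` and `etcd_fault_tolerance` are hypothetical helpers:

```shell
#!/usr/bin/env bash
# Members required for quorum in an etcd cluster of n voting members: floor(n/2) + 1.
etcd_quorum() {
  local n="$1"
  echo $(( n / 2 + 1 ))
}

# Members that can fail while a quorum is still reachable.
etcd_fault_tolerance() {
  local n="$1"
  echo $(( n - (n / 2 + 1) ))
}
```

For example, `etcd_fault_tolerance 4` gives the same result as `etcd_fault_tolerance 3` (one failure). This is why control-plane counts are usually odd: tolerating two failed masters would take five.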