https://en.wikipedia.org/wiki/List_of_S%26P_500_companies

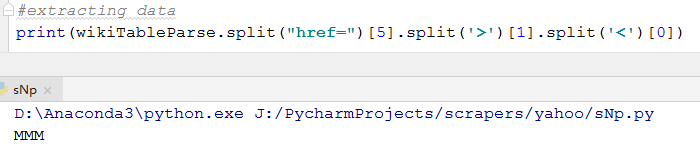

Using the split function to extract the content we need:

# get everything before '0001555280'
# the CIK is unique, so the last company's CIK marks the end of the table
wikiFirstParse = wikiResponse.text.split('0001555280')[0]
# get everything after the third '500 component stocks' (index 3 = 4th piece)
wikiTableParse = wikiFirstParse.split('500 component stocks')[3]
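To make the index arithmetic concrete: str.split(sep) returns the pieces between separators, so [0] is everything before the first occurrence and [3] is the text that follows the third occurrence (up to a fourth one, if any). A toy sketch on a made-up string, not the real Wikipedia HTML:

```python
# Made-up miniature "page": the marker text appears three times before the table,
# and a CIK-like number follows the table.
page = ("intro 500 component stocks nav 500 component stocks "
        "caption 500 component stocks <table>ROWS</table> 0001555280 footer")

before_cik = page.split('0001555280')[0]          # everything before the CIK marker
parts = before_cik.split('500 component stocks')  # 3 occurrences -> 4 pieces
table_part = parts[3]                             # the piece after the THIRD occurrence

print(len(parts))    # 4
print(table_part)    # ' <table>ROWS</table> '
```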

Finding a rule: the symbol 'MMM' is in the 5th hyperlink, and the following symbols seemed to appear every fourth link after that (5 -> 9 -> 13 -> ...).

But some symbols do not follow that pattern (for example, 'AEE' is not four links after the Bristol entry), so we have to find another method.

I found that every hyperlink whose URL contains "nyse" or "nasdaq" holds a ticker symbol as the text of its HTML 'a' tag.
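A toy sketch of that rule on a made-up fragment (the markup and URLs only imitate the Wikipedia table; tickers_from_links is a hypothetical helper). Unlike the loop below, it starts at the first link rather than position 5, and it tests only the href value instead of the whole chunk, which is a slight tightening of the original check:

```python
# Made-up fragment in the spirit of the Wikipedia table; the real markup may differ.
sample = (
    '<tr><td><a rel="nofollow" class="external text" '
    'href="https://www.nyse.com/quote/XNYS:MMM">MMM</a></td>'
    '<td><a href="/wiki/3M">3M Company</a></td></tr>'
    '<tr><td><a rel="nofollow" class="external text" '
    'href="https://www.nasdaq.com/symbol/aapl">AAPL</a></td>'
    '<td><a href="/wiki/Apple_Inc.">Apple Inc.</a></td></tr>'
)

def tickers_from_links(html):
    """Return the text of every <a> whose href mentions nyse or nasdaq."""
    tickers = []
    for chunk in html.split('href=')[1:]:       # one chunk per hyperlink
        url = chunk.split('>')[0]                # the href value up to the end of the tag
        if 'nyse' in url or 'nasdaq' in url:
            # the link text sits between the first '>' and the next '<'
            tickers.append(chunk.split('>')[1].split('<')[0])
    return tickers

print(tickers_from_links(sample))  # ['MMM', 'AAPL']
```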

import requests

url = "https://finance.yahoo.com/quote/AAPL?p=AAPL&.tsrc=fin-srch"

# Get more ticker symbols from Wikipedia
wikiURL = "https://en.wikipedia.org/wiki/List_of_S%26P_500_companies"
companySymbolList = {"Company": []}

wikiResponse = requests.get(wikiURL)
response = requests.get(url)

# extracting
# get everything before '0001555280'
# the CIK is unique, so the last company's CIK marks the end of the table
wikiFirstParse = wikiResponse.text.split('0001555280')[0]
# get everything after the third '500 component stocks'
wikiTableParse = wikiFirstParse.split('500 component stocks')[3]
hyperLinkSplitWiki = wikiTableParse.split('href=')

# get data
#print(wikiTableParse.split("href=")[5])  # 5 -> 9 -> 13 -> ...
#print(wikiTableParse.split("href=")[5].split('>')[1].split('<')[0])
for position in range(5, len(hyperLinkSplitWiki)):
    link = hyperLinkSplitWiki[position]
    # links to NYSE or NASDAQ quote pages carry the ticker as their text
    if 'nyse' in link or 'nasdaq' in link:
        tempData = link.split('>')[1].split('<')[0]
        companySymbolList['Company'].append(tempData)

print(companySymbolList)

# parse the AAPL quote page
htmlText = response.text
indicatorDict = {"Previous Close": [],
                 "Open": [],
                 "Bid": [],
                 "Ask": [],
                 "Day's Range": [],
                 "52 Week Range": [],
                 "Volume": [],
                 "Avg. Volume": [],
                 "Market Cap": [],
                 "Beta": [],
                 "PE Ratio (TTM)": [],
                 "EPS (TTM)": [],
                 "Earnings Date": [],
                 "Dividend & Yield": [],
                 "Ex-Dividend Date": [],
                 "1y Target Est": []}

# labels whose value sits directly in the <td> rather than in an inner <span>
specialSplitDict = {"Day's Range": "Day's Range",
                    "52 Week Range": "52 Week Range",
                    "Dividend & Yield": "Dividend & Yield"}

for indicator in indicatorDict:
    if indicator not in specialSplitDict:
        # value follows the second '">' after the label: <td ...><span ...>value</span>
        splitList = htmlText.split(indicator)
        secondSplitList = splitList[1].split("\">")[2]
        data = secondSplitList.split("</")[0]
    else:  # replaced
        # value follows the first '">' after the label: <td ...>value</td>
        splitList = htmlText.split(specialSplitDict[indicator])
        secondSplitList = splitList[1].split("\">")[1]
        data = secondSplitList.split("</td")[0]
    indicatorDict[indicator].append(data)

#print(indicatorDict)

Output of print(companySymbolList):

{'Company': ['MMM', 'ABT', 'ABBV', 'ABMD', 'ACN', 'ATVI', 'ADBE', 'AMD', 'AAP', 'AES', 'AMG', 'AFL', 'A', 'APD', 'AKAM', 'ALK', 'ALB', 'ARE', 'ALXN', 'ALGN', 'ALLE', 'AGN', 'ADS', 'LNT', 'ALL', 'GOOGL', 'GOOG', 'MO', 'AMZN', 'AMCR', 'AEE', 'AAL', 'AEP', 'AXP', 'AIG', 'AMT', 'AWK', 'AMP', 'ABC', 'AME', 'AMGN', 'APH', 'APC', 'ADI', 'ANSS', 'ANTM', 'AON', 'AOS', 'APA', 'AIV', 'AAPL', 'AMAT', 'APTV', 'ADM', 'ARNC', 'ANET', 'AJG', 'AIZ', 'ATO', 'T', 'ADSK', 'ADP', 'AZO', 'AVB', 'AVY', 'BHGE', 'BLL', 'BAC', 'BK', 'BAX', 'BBT', 'BDX', 'BRK.B', 'BBY', 'BIIB', 'BLK', 'HRB', 'BA', 'BKNG', 'BWA', 'BXP', 'BSX', 'BMY', 'AVGO', 'BR', 'BF.B', 'CHRW', 'COG', 'CDNS', 'CPB', 'COF', 'CPRI', 'CAH', 'KMX', 'CCL', 'CAT', 'CBRE', 'CBS', 'CE', 'CELG', 'CNC', 'CNP', 'CTL', 'CERN', 'CF', 'SCHW', 'CHTR', 'CVX', 'CMG', 'CB', 'CHD', 'CI', 'XEC', 'CINF', 'CTAS', 'CSCO', 'C', 'CFG', 'CTXS', 'CLX', 'CME', 'CMS', 'KO', 'CTSH', 'CL', 'CMCSA', 'CMA', 'CAG', 'CXO', 'COP', 'ED', 'STZ', 'COO', 'CPRT', 'GLW', 'CTVA', 'COST', 'COTY', 'CCI', 'CSX', 'CMI', 'CVS', 'DHI', 'DHR', 'DRI', 'DVA', 'DE', 'DAL', 'XRAY', 'DVN', 'FANG', 'DLR', 'DFS', 'DISCA', 'DISCK', 'DISH', 'DG', 'DLTR', 'D', 'DOV', 'DOW', 'DTE', 'DUK', 'DRE', 'DD', 'DXC', 'ETFC', 'EMN', 'ETN', 'EBAY', 'ECL', 'EIX', 'EW', 'EA', 'EMR', 'ETR', 'EOG', 'EFX', 'EQIX', 'EQR', 'ESS', 'EL', 'EVRG', 'ES', 'RE', 'EXC', 'EXPE', 'EXPD', 'EXR', 'XOM', 'FFIV', 'FB', 'FAST', 'FRT', 'FDX', 'FIS', 'FITB', 'FE', 'FRC', 'FISV', 'FLT', 'FLIR', 'FLS', 'FMC', 'FL', 'F', 'FTNT', 'FTV', 'FBHS', 'FOXA', 'FOX', 'BEN', 'FCX', 'GPS', 'GRMN', 'IT', 'GD', 'GE', 'GIS', 'GM', 'GPC', 'GILD', 'GPN', 'GS', 'GWW', 'HAL', 'HBI', 'HOG', 'HIG', 'HAS', 'HCA', 'HCP', 'HP', 'HSIC', 'HSY', 'HES', 'HPE', 'HLT', 'HFC', 'HOLX', 'HD', 'HON', 'HRL', 'HST', 'HPQ', 'HUM', 'HBAN', 'HII', 'IDXX', 'INFO', 'ITW', 'ILMN', 'IR', 'INTC', 'ICE', 'IBM', 'INCY', 'IP', 'IPG', 'IFF', 'INTU', 'ISRG', 'IVZ', 'IPGP', 'IQV', 'IRM', 'JKHY', 'JEC', 'JBHT', 'JEF', 'SJM', 'JNJ', 'JCI', 'JPM', 
'JNPR', 'KSU', 'K', 'KEY', 'KEYS', 'KMB', 'KIM', 'KMI', 'KLAC', 'KSS', 'KHC', 'KR', 'LB', 'LHX', 'LH', 'LRCX', 'LW', 'LEG', 'LEN', 'LLY', 'LNC', 'LIN', 'LKQ', 'LMT', 'L', 'LOW', 'LYB', 'MTB', 'MAC', 'M', 'MRO', 'MPC', 'MKTX', 'MAR', 'MMC', 'MLM', 'MAS', 'MA', 'MKC', 'MXIM', 'MCD', 'MCK', 'MDT', 'MRK', 'MET', 'MTD', 'MGM', 'MCHP', 'MU', 'MSFT', 'MAA', 'MHK', 'TAP', 'MDLZ', 'MNST', 'MCO', 'MS', 'MOS', 'MSI', 'MSCI', 'MYL', 'NDAQ', 'NOV', 'NKTR', 'NTAP', 'NFLX', 'NWL', 'NEM', 'NWSA', 'NWS', 'NEE', 'NLSN', 'NKE', 'NI', 'NBL', 'JWN', 'NSC', 'NTRS', 'NOC', 'NCLH', 'NRG', 'NUE', 'NVDA', 'ORLY', 'OXY', 'OMC', 'OKE', 'ORCL', 'PCAR', 'PKG', 'PH', 'PAYX', 'PYPL', 'PNR', 'PBCT', 'PEP', 'PKI', 'PRGO', 'PFE', 'PM', 'PSX', 'PNW', 'PXD', 'PNC', 'PPG', 'PPL', 'PFG', 'PG', 'PGR', 'PLD', 'PRU', 'PEG', 'PSA', 'PHM', 'PVH', 'QRVO', 'PWR', 'QCOM', 'DGX', 'RL', 'RJF', 'RTN', 'O', 'RHT', 'REG', 'REGN', 'RF', 'RSG', 'RMD', 'RHI', 'ROK', 'ROL', 'ROP', 'ROST', 'RCL', 'CRM', 'SBAC', 'SLB', 'STX', 'SEE', 'SRE', 'SHW', 'SPG', 'SWKS', 'SLG', 'SNA', 'SO', 'LUV', 'SPGI', 'SWK', 'SBUX', 'STT', 'SYK', 'STI', 'SIVB', 'SYMC', 'SYF', 'SNPS', 'SYY', 'TROW', 'TTWO', 'TPR', 'TGT', 'TEL', 'FTI', 'TFX', 'TXN', 'TXT', 'TMO', 'TIF', 'TWTR', 'TJX', 'TMK', 'TSS', 'TSCO', 'TDG', 'TRV', 'TRIP', 'TSN', 'UDR', 'ULTA', 'USB', 'UAA', 'UA', 'UNP', 'UAL', 'UNH', 'UPS', 'URI', 'UTX', 'UHS', 'UNM', 'VFC', 'VLO', 'VAR', 'VTR', 'VRSN', 'VRSK', 'VZ', 'VRTX', 'VIAB', 'V', 'VNO', 'VMC', 'WAB', 'WMT', 'WBA', 'DIS', 'WM', 'WAT', 'WEC', 'WCG', 'WFC', 'WELL', 'WDC', 'WU', 'WRK', 'WY', 'WHR', 'WMB', 'WLTW', 'WYNN', 'XEL', 'XRX', 'XLNX', 'XYL', 'YUM', 'ZBH', 'ZION', 'ZTS']}
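Why [2] for most labels but [1] for the special ones? A sketch on made-up markup shaped the way those indices assume (the numbers and attributes are invented; the real Yahoo Finance page is much larger and has changed over time). extract is a hypothetical helper that mirrors the split chain in the code above:

```python
# Made-up markup: a label cell, then a value cell. "Previous Close" has its value
# inside a <span>; "Day's Range" has its value directly inside the <td>.
row_html = (
    '<td class="C"><span data-reactid="1">Previous Close</span></td>'
    '<td class="T" data-test="PREV_CLOSE-value"><span data-reactid="2">201.75</span></td>'
    '<td class="C"><span data-reactid="3">Day\'s Range</span></td>'
    '<td class="T" data-test="DAYS_RANGE-value">200.37 - 203.73</td>'
)

def extract(html, label, value_in_span=True):
    """Mirror the split chain: the value follows the 2nd '">' (span) or the 1st '">' (td)."""
    after_label = html.split(label)[1]                    # text right after the label
    if value_in_span:
        # skip the <td ...> and <span ...> tags, stop at the next closing tag
        return after_label.split('">')[2].split('</')[0]
    # only the <td ...> tag stands between the label and the value
    return after_label.split('">')[1].split('</td')[0]

print(extract(row_html, "Previous Close"))                    # 201.75
print(extract(row_html, "Day's Range", value_in_span=False))  # 200.37 - 203.73
```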

#####################################################################################################

"""

@author: LlQ

@contact:[email protected]

@file:sNp.py

@time: 7/7/2019 3:42 PM

"""

import requests

#Get More Ticker Symbols from WiKi

wikiURL = "https://en.wikipedia.org/wiki/List_of_S%26P_500_companies"

wikiResponse = requests.get(wikiURL)

companySymbolList = []

#extracting

#get every thing before '0001555280'

#CIK is unique so we can use last one of them to split a table

wikiFirstParse = wikiResponse.text.split('0001555280')[0]

#get every thing after the second '500 component stocks'

wikiTableParse = wikiFirstParse.split('500 component stocks')[3]

hyperLinkSplitWiki = wikiTableParse.split('href=')

#get data

#print(wikiTableParse.split("href=")[5]) # 5->9 ->13 ->...

#print(wikiTableParse.split("href=")[5].split('>')[1].split('<')[0])

for position in range(5,len(hyperLinkSplitWiki)):

#tempData=wikiTableParse.split("href=")[position] #.split('>')[1].split('<')[0]

if 'nyse' in hyperLinkSplitWiki[position] :

tempData = hyperLinkSplitWiki[position].split('>')[1].split('<')[0]

companySymbolList.append(tempData)

if 'nasdaq' in hyperLinkSplitWiki[position]:

tempData = hyperLinkSplitWiki[position].split('>')[1].split('<')[0]

companySymbolList.append(tempData)

#url = "https://finance.yahoo.com/quote/AAPL?p=AAPL&.tsrc=fin-srch"

for tickerSymbol in companySymbolList:

url = "https://finance.yahoo.com/quote/" + tickerSymbol + "?p=" + tickerSymbol + "&.tsrc=fin-srch"

response = requests.get(url)

htmlText = response.text

indicatorDict = {"Previous Close":[],

"Open":[],

"Bid":[],

"Ask":[],

"Day's Range":[],

"52 Week Range":[],

"Volume":[],

"Avg. Volume":[],

"Market Cap":[],

"Beta":[],

"PE Ratio (TTM)":[],

"EPS (TTM)":[],

"Earnings Date":[],

"Dividend & Yield":[],

"Ex-Dividend Date":[],

"1y Target Est":[]

}

specialSplitDict={"Day's Range":"Day's Range",

"52 Week Range": "52 Week Range",

"Dividend & Yield":"Dividend & Yield"}

for indicator in indicatorDict:

if indicator not in specialSplitDict:

splitList = htmlText.split(indicator)

secondSplitList = splitList[1].split("\">")[2]

data = secondSplitList.split("</")[0]

else: #replaced

splitList = htmlText.split(specialSplitDict[indicator])

secondSplitList = splitList[1].split("\">")[1]

data = secondSplitList.split("</td")[0]

indicatorDict[indicator].append(data)

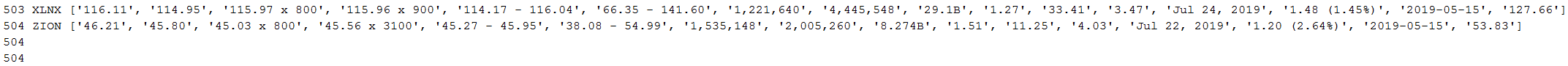

print(tickerSymbol,": ", indicatorDict)

"list index out of range" since the BF.S was not normal stock and has different quotes summary. And I don't want it, so I can fill its data with ‘N/A’’

for indicator in indicatorDict:
    try:
        if indicator not in specialSplitDict:
            splitList = htmlText.split(indicator)
            secondSplitList = splitList[1].split("\">")[2]
            data = secondSplitList.split("</")[0]
        else:  # replaced
            splitList = htmlText.split(specialSplitDict[indicator])
            secondSplitList = splitList[1].split("\">")[1]
            data = secondSplitList.split("</td")[0]
        indicatorDict[indicator].append(data)
    except IndexError:  # label or expected tags missing on this page
        indicatorDict[indicator].append('N/A')

print(tickerSymbol, ": ", indicatorDict)
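The per-ticker quote URL is built by string concatenation. As a sketch, the standard library's urllib.parse.urlencode builds the same URL while escaping the query values; quote_url is a hypothetical helper name, and this does not change what Yahoo returns for a ticker like BF.B:

```python
from urllib.parse import urlencode

def quote_url(ticker):
    """Build the same quote URL as the concatenation in the loop, with an escaped query."""
    query = urlencode({'p': ticker, '.tsrc': 'fin-srch'})  # dict order is kept
    return 'https://finance.yahoo.com/quote/' + ticker + '?' + query

print(quote_url('BF.B'))  # https://finance.yahoo.com/quote/BF.B?p=BF.B&.tsrc=fin-srch
```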

##############################################all codes###########################################

# You may find that some of the code differs from the previous version, because the website changed its data table structure.

################################################################################################

#!/usr/bin/python

#encoding:utf-8

"""

@author: LlQ

@contact:[email protected]

@file:sNp.py

@time: 7/7/2019 3:42 PM

"""

import requests

import pandas as pd

#Get More Ticker Symbols from WiKi

wikiURL = "https://en.wikipedia.org/wiki/List_of_S%26P_500_companies"

wikiResponse = requests.get(wikiURL)

companySymbolDict = {"Ticker":[]}

#extracting

#get every thing before '0001555280'

#CIK is unique so we can use last one of them to split a table

wikiFirstParse = wikiResponse.text.split('0001555280')[0]

#get every thing after the second '500 component stocks'

wikiTableParse = wikiFirstParse.split('500 component stocks')[3]

hyperLinkSplitWiki = wikiTableParse.split('href=')

#get data

#print(wikiTableParse.split("href=")[5]) # 5->9 ->13 ->...

#print(wikiTableParse.split("href=")[5].split('>')[1].split('<')[0])

for position in range(5,len(hyperLinkSplitWiki)):

#tempData=wikiTableParse.split("href=")[position] #.split('>')[1].split('<')[0]

if 'nyse' in hyperLinkSplitWiki[position] :

tempData = hyperLinkSplitWiki[position].split('>')[1].split('<')[0]

companySymbolDict["Ticker"].append(tempData)

if 'nasdaq' in hyperLinkSplitWiki[position]:

tempData = hyperLinkSplitWiki[position].split('>')[1].split('<')[0]

companySymbolDict["Ticker"].append(tempData)

indicatorDict={"Previous Close":[],

"Open":[],

"Bid":[],

"Ask":[],

"Day's Range":[],

"52 Week Range":[],

"Volume":[],

"Avg. Volume":[],

"Market Cap":[],

"Beta":[],

"PE Ratio (TTM)":[],

"EPS (TTM)":[],

"Earnings Date":[],

"Dividend & Yield":[],

"Ex-Dividend Date":[],

"1y Target Est":[]}

specialSplitDict={"Day's Range":"Day's Range",

"52 Week Range":"52 Week Range",

"Dividend & Yield":"Dividend & Yield",

"Bid":"Bid",

"Ask":"Ask"}

#url = "https://finance.yahoo.com/quote/AAPL?p=AAPL&.tsrc=fin-srch"

for tickerSymbol in companySymbolDict["Ticker"]:

url = "https://finance.yahoo.com/quote/" + tickerSymbol + "?p=" + tickerSymbol + "&.tsrc=fin-srch"

response = requests.get(url)

htmlText = response.text

for indicator in indicatorDict:

try:

if indicator not in specialSplitDict:

splitList = htmlText.split(indicator)

secondSplitList = splitList[1].split("\">")[2]

data = secondSplitList.split("</")[0]

else: #replaced

splitList = htmlText.split(specialSplitDict[indicator])

secondSplitList = splitList[1].split("\">")[1]

data = secondSplitList.split("</td")[0]

indicatorDict[indicator].append(data)

except:

indicatorDict[indicator].append('N/A')

#print(tickerSymbol,": ", indicatorDict)

companySymbolDict.update(indicatorDict) #same number of rows

print(len(companySymbolDict["Ticker"])) #504

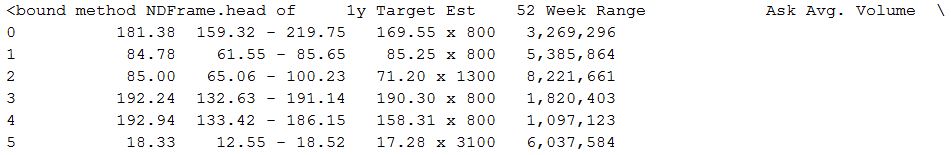

df = pd.DataFrame(companySymbolDict)

print(df.head())

Output screenshots: Jupyter Notebook and PyCharm.

#####################################################################################################

XPath Version

#####################################################################################################

#!/usr/bin/python
#encoding:utf-8
"""
@author: LlQ
@contact:[email protected]
@file:sNp500XPath.py
@time: 7/8/2019 1:27 AM
"""
import requests
import pandas as pd
from lxml import etree

# Get more ticker symbols from Wikipedia
wikiURL = "https://en.wikipedia.org/wiki/List_of_S%26P_500_companies"
wikiResponse = requests.get(wikiURL)
html = wikiResponse.content.decode('utf-8')
#print(html)

companySymbolDict = {"Ticker": []}  # later: pd.DataFrame(companySymbolDict)

selector = etree.HTML(html)
# ticker links that point to NYSE quote pages
tickerSymbolList = selector.xpath('//table[@id="constituents"]/tbody/tr/td/a[contains(@href, "nyse")]/text()')
companySymbolDict["Ticker"].extend(tickerSymbolList)
# ticker links that point to NASDAQ quote pages
tickerSymbolList = selector.xpath('//table[@id="constituents"]/tbody/tr/td/a[contains(@href, "nasdaq")]/text()')
companySymbolDict["Ticker"].extend(tickerSymbolList)

print(companySymbolDict)
print(len(companySymbolDict["Ticker"]))

Output:

D:\Anaconda3\python.exe J:/PycharmProjects/scrapers/yahoo/sNp500XPath.py

{'Ticker': ['MMM', 'ABT', 'ABBV', 'ACN', 'AAP', 'AES', 'AMG', 'AFL', 'A', 'APD', 'ALK', 'ALB', 'ARE', 'ALLE', 'AGN', 'ADS', 'LNT', 'ALL', 'MO', 'AMCR', 'AEE', 'AEP', 'AXP', 'AIG', 'AMT', 'AWK', 'AMP', 'ABC', 'AME', 'APH', 'APC', 'ANTM', 'AON', 'AOS', 'APA', 'AIV', 'APTV', 'ADM', 'ARNC', 'ANET', 'AJG', 'AIZ', 'ATO', 'T', 'AZO', 'AVB', 'AVY', 'BHGE', 'BLL', 'BAC', 'BK', 'BAX', 'BBT', 'BDX', 'BRK.B', 'BBY', 'BLK', 'HRB', 'BA', 'BWA', 'BXP', 'BSX', 'BMY', 'BR', 'BF.B', 'COG', 'CPB', 'COF', 'CPRI', 'CAH', 'KMX', 'CCL', 'CAT', 'CBRE', 'CBS', 'CE', 'CNC', 'CNP', 'CTL', 'CF', 'SCHW', 'CVX', 'CMG', 'CB', 'CHD', 'CI', 'XEC', 'C', 'CFG', 'CLX', 'CMS', 'KO', 'CL', 'CMA', 'CAG', 'CXO', 'COP', 'ED', 'STZ', 'COO', 'GLW', 'CTVA', 'COTY', 'CCI', 'CMI', 'CVS', 'DHI', 'DHR', 'DRI', 'DVA', 'DE', 'DAL', 'DVN', 'DLR', 'DFS', 'DG', 'D', 'DOV', 'DOW', 'DTE', 'DUK', 'DRE', 'DD', 'DXC', 'EMN', 'ETN', 'ECL', 'EIX', 'EW', 'EMR', 'ETR', 'EOG', 'EFX', 'EQR', 'ESS', 'EL', 'EVRG', 'ES', 'RE', 'EXC', 'EXR', 'XOM', 'FRT', 'FDX', 'FIS', 'FE', 'FRC', 'FLT', 'FLS', 'FMC', 'FL', 'F', 'FTV', 'FBHS', 'BEN', 'FCX', 'GPS', 'IT', 'GD', 'GE', 'GIS', 'GM', 'GPC', 'GPN', 'GS', 'GWW', 'HAL', 'HBI', 'HOG', 'HIG', 'HCA', 'HCP', 'HP', 'HSY', 'HES', 'HPE', 'HLT', 'HFC', 'HD', 'HON', 'HRL', 'HST', 'HPQ', 'HUM', 'HII', 'ITW', 'IR', 'ICE', 'IBM', 'IP', 'IPG', 'IFF', 'IVZ', 'IQV', 'IRM', 'JEC', 'JEF', 'SJM', 'JNJ', 'JCI', 'JPM', 'JNPR', 'KSU', 'K', 'KEY', 'KEYS', 'KMB', 'KIM', 'KMI', 'KSS', 'KR', 'LB', 'LHX', 'LH', 'LW', 'LEG', 'LEN', 'LLY', 'LNC', 'LIN', 'LMT', 'L', 'LOW', 'LYB', 'MTB', 'MAC', 'M', 'MRO', 'MPC', 'MMC', 'MLM', 'MAS', 'MA', 'MKC', 'MCD', 'MCK', 'MDT', 'MRK', 'MET', 'MTD', 'MGM', 'MAA', 'MHK', 'TAP', 'MCO', 'MS', 'MOS', 'MSI', 'MSCI', 'NOV', 'NEM', 'NEE', 'NLSN', 'NKE', 'NI', 'NBL', 'JWN', 'NSC', 'NOC', 'NCLH', 'NRG', 'NUE', 'OXY', 'OMC', 'OKE', 'ORCL', 'PKG', 'PH', 'PNR', 'PKI', 'PRGO', 'PFE', 'PM', 'PSX', 'PNW', 'PXD', 'PNC', 'PPG', 'PPL', 'PFG', 'PG', 'PGR', 'PLD', 'PRU', 'PEG', 'PSA', 'PHM', 'PVH', 
'PWR', 'DGX', 'RL', 'RJF', 'RTN', 'O', 'RHT', 'REG', 'RF', 'RSG', 'RMD', 'RHI', 'ROK', 'ROL', 'ROP', 'RCL', 'CRM', 'SLB', 'SEE', 'SRE', 'SHW', 'SPG', 'SLG', 'SNA', 'SO', 'LUV', 'SPGI', 'SWK', 'STT', 'SYK', 'STI', 'SYF', 'SYY', 'TPR', 'TGT', 'TEL', 'FTI', 'TFX', 'TXT', 'TMO', 'TIF', 'TWTR', 'TJX', 'TMK', 'TSS', 'TDG', 'TRV', 'TSN', 'UDR', 'USB', 'UAA', 'UA', 'UNP', 'UAL', 'UNH', 'UPS', 'URI', 'UTX', 'UHS', 'UNM', 'VFC', 'VLO', 'VAR', 'VTR', 'VZ', 'V', 'VNO', 'VMC', 'WAB', 'WMT', 'DIS', 'WM', 'WAT', 'WEC', 'WCG', 'WFC', 'WELL', 'WU', 'WRK', 'WY', 'WHR', 'WMB', 'XEL', 'XRX', 'XYL', 'YUM', 'ZBH', 'ZTS', 'ABMD', 'ATVI', 'ADBE', 'AMD', 'AKAM', 'ALXN', 'ALGN', 'GOOGL', 'GOOG', 'AMZN', 'AAL', 'AMGN', 'ADI', 'ANSS', 'AAPL', 'AMAT', 'ADSK', 'ADP', 'BIIB', 'BKNG', 'AVGO', 'CHRW', 'CDNS', 'CELG', 'CERN', 'CHTR', 'CINF', 'CTAS', 'CSCO', 'CTXS', 'CME', 'CTSH', 'CMCSA', 'CPRT', 'COST', 'CSX', 'XRAY', 'FANG', 'DISCA', 'DISCK', 'DISH', 'DLTR', 'ETFC', 'EBAY', 'EA', 'EQIX', 'EXPE', 'EXPD', 'FFIV', 'FB', 'FAST', 'FITB', 'FISV', 'FLIR', 'FTNT', 'FOXA', 'FOX', 'GRMN', 'GILD', 'HAS', 'HSIC', 'HOLX', 'HBAN', 'IDXX', 'INFO', 'ILMN', 'INTC', 'INCY', 'INTU', 'ISRG', 'IPGP', 'JKHY', 'JBHT', 'KLAC', 'KHC', 'LRCX', 'LKQ', 'MKTX', 'MAR', 'MXIM', 'MCHP', 'MU', 'MSFT', 'MDLZ', 'MNST', 'MYL', 'NDAQ', 'NKTR', 'NTAP', 'NFLX', 'NWL', 'NWSA', 'NWS', 'NTRS', 'NVDA', 'ORLY', 'PCAR', 'PAYX', 'PYPL', 'PBCT', 'PEP', 'QRVO', 'QCOM', 'REGN', 'ROST', 'SBAC', 'STX', 'SWKS', 'SBUX', 'SIVB', 'SYMC', 'SNPS', 'TROW', 'TTWO', 'TXN', 'TSCO', 'TRIP', 'ULTA', 'VRSN', 'VRSK', 'VRTX', 'VIAB', 'WBA', 'WDC', 'WLTW', 'WYNN', 'XLNX', 'ZION']}

504

Process finished with exit code 0
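The output lists all NYSE tickers first and then all NASDAQ ones, because the two XPath queries run one after the other. If table order matters, a single query joined with "or" returns matches in document order. A sketch on a made-up three-row table (only the structure matches the XPath above; the URLs are illustrative):

```python
from lxml import etree

# Made-up three-row table with the same id/tbody/tr/td/a structure as above.
html = '''<table id="constituents"><tbody>
<tr><td><a href="https://www.nyse.com/quote/XNYS:MMM">MMM</a></td></tr>
<tr><td><a href="https://www.nasdaq.com/symbol/abmd">ABMD</a></td></tr>
<tr><td><a href="https://www.nyse.com/quote/XNYS:ABT">ABT</a></td></tr>
</tbody></table>'''
selector = etree.HTML(html)

base = '//table[@id="constituents"]/tbody/tr/td/a'

# Two queries: every NYSE match, then every NASDAQ match
two_queries = (selector.xpath(base + '[contains(@href, "nyse")]/text()')
               + selector.xpath(base + '[contains(@href, "nasdaq")]/text()'))

# One query with "or": matches come back in table order
one_query = selector.xpath(base + '[contains(@href, "nyse") or contains(@href, "nasdaq")]/text()')

print(two_queries)
print(one_query)
```

Here two_queries holds MMM, ABT, ABMD, while one_query keeps the table order MMM, ABMD, ABT. The total count is the same either way.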

#####################################################################################################

I want to save the ticker symbols (the list in the dict {key: []}) and the quote summaries (the lists in the dict {key: []}) in one DataFrame, so all the lists must have the same length.
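A quick sketch of what happens otherwise: pandas refuses to build a DataFrame from a dict whose lists differ in length. The values below are placeholders, and column_lengths is a hypothetical helper for spotting the mismatch:

```python
import pandas as pd

tickers = ['MMM', 'ABT', 'ABBV']
prev_close = ['v1', 'v2']   # placeholder values: one ticker produced nothing

def column_lengths(columns):
    """Map each column name to its length so a mismatch is easy to spot."""
    return {name: len(values) for name, values in columns.items()}

columns = {'Ticker': tickers, 'Previous Close': prev_close}
print(column_lengths(columns))  # {'Ticker': 3, 'Previous Close': 2}

try:
    pd.DataFrame(columns)
    built = True
except ValueError as err:       # raised for dict columns of different lengths
    built = False
    print('ValueError:', err)
```

Padding with 'N/A' inside the per-ticker loop, rather than once at the end, is what keeps each value on its own ticker's row; padding a whole column afterwards would fix the length but could shift values onto the wrong tickers.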

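Problem 1: "Volume" and "Avg. Volume". A label test like span[contains(text(),"Volume")] matches both labels, so "Volume" gets two values for a single ticker.

Solution:

Match the label exactly with span[text()="{0}"] instead of span[contains(text(),"{0}")].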

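Problem 2: two earnings dates. For some tickers the "Earnings Date" row holds two dates, so the query returns two values for one ticker.

Solution: delete the extra value so that every list keeps exactly one value per ticker:

del(indicatorDict[indicator][-1])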
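Deleting the last item keeps only the first date. If you would rather keep both, one alternative (not the approach used in the code below) is to collapse whatever the query returns into a single string before appending it, so each list still grows by exactly one item per ticker. to_cell is a hypothetical helper, and the dates are placeholders:

```python
def to_cell(found, fill='N/A', joiner=' - '):
    """Collapse an XPath result list into exactly one cell value."""
    values = [s.strip() for s in found if s.strip()]  # drop whitespace-only text nodes
    if not values:
        return fill                                   # nothing found: keep the row with 'N/A'
    return joiner.join(values)                        # one or more values: one string

print(to_cell([]))              # N/A
print(to_cell(['D1']))          # D1
print(to_cell(['D1 ', ' D2']))  # D1 - D2
```

Wired into the loop, this would be a single indicatorDict[indicator].append(to_cell(data)) in place of the extend, pad and delete steps (an assumption about how you might adapt it).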

#######################################Request + Xpath All Codes######################################

#!/usr/bin/python
#encoding:utf-8
"""
@author: LlQ
@contact:[email protected]
@file:sNp500XPath.py
@time: 7/8/2019 1:27 AM
"""
import requests
import pandas as pd
from lxml import etree

# Get more ticker symbols from Wikipedia
wikiURL = "https://en.wikipedia.org/wiki/List_of_S%26P_500_companies"
wikiResponse = requests.get(wikiURL)
html = wikiResponse.content.decode('utf-8')
#print(html)

companySymbolDict = {"Ticker": []}  # later: pd.DataFrame(companySymbolDict)

selector = etree.HTML(html)
tickerSymbolList = selector.xpath('//table[@id="constituents"]/tbody/tr/td/a[contains(@href, "nyse")]/text()')
companySymbolDict["Ticker"].extend(tickerSymbolList)
tickerSymbolList = selector.xpath('//table[@id="constituents"]/tbody/tr/td/a[contains(@href, "nasdaq")]/text()')
companySymbolDict["Ticker"].extend(tickerSymbolList)
# print(companySymbolDict)
# print(len(companySymbolDict["Ticker"]))

indicatorDict = {"Previous Close": [],
                 "Open": [],
                 "Bid": [],
                 "Ask": [],
                 "Day's Range": [],
                 "52 Week Range": [],
                 "Volume": [],
                 "Avg. Volume": [],  # note: space after the dot
                 "Market Cap": [],
                 "Beta (3Y Monthly)": [],
                 "PE Ratio (TTM)": [],
                 "EPS (TTM)": [],
                 "Earnings Date": [],
                 "Forward Dividend & Yield": [],
                 "Ex-Dividend Date": [],
                 "1y Target Est": []}

# labels whose value sits directly in the second <td> (no inner <span>)
specialSplitDict = {"Day's Range": "Day's Range",
                    "52 Week Range": "52 Week Range",
                    "Forward Dividend & Yield": "Forward Dividend & Yield",
                    #"Bid": "Bid",  # sometimes these values are missing, which
                    #"Ask": "Ask",  # changes the page structure a little
                    }

counter = 0  # tickers processed so far = expected length of every indicator list
#url = "https://finance.yahoo.com/quote/AAPL?p=AAPL&.tsrc=fin-srch"
for tickerSymbol in companySymbolDict["Ticker"]:
    url = "https://finance.yahoo.com/quote/" + tickerSymbol + "?p=" + tickerSymbol + "&.tsrc=fin-srch"
    #https://finance.yahoo.com/quote/BF.B?p=BF.B&.tsrc=fin-srch
    req = requests.get(url)
    html = req.content.decode('utf-8', 'ignore')
    selector = etree.HTML(html)

    dataList = []  # this ticker's row, used for printing
    counter = counter + 1
    i = 0          # indicators handled so far for this ticker
    for indicator in indicatorDict:
        i = i + 1
        try:
            if indicator not in specialSplitDict:  # was: span[contains(text(),"{0}")]
                data = selector.xpath('//div[@id="quote-summary"]//span[text()='
                                      '"{0}"]/../../td[2]/span/text()'.format(indicator))
            else:  # was: span[contains(text(),"%s")]
                data = selector.xpath('//div[@id="quote-summary"]//span[text()='
                                      '"%s"]/../../td[2]/text()' % indicator)  # without replaced
            indicatorDict[indicator].extend(data)
            dataList.extend(data)
        except Exception:
            indicatorDict[indicator].append('N/A')
            dataList.append('N/A')

        # no value found: pad with 'N/A'
        if len(dataList) < i:
            dataList.append('N/A')
        if len(indicatorDict[indicator]) < counter:
            indicatorDict[indicator].append('N/A')
        # more than one value found (e.g. two earnings dates): drop the extras
        while len(dataList) > i:
            del dataList[-1]
        while len(indicatorDict[indicator]) > counter:
            del indicatorDict[indicator][-1]

    print(counter, tickerSymbol, dataList)

companySymbolDict.update(indicatorDict)
#print(companySymbolDict)
print(len(companySymbolDict['Ticker']))      # 504
print(len(indicatorDict["Previous Close"]))  # 504

df = pd.DataFrame(companySymbolDict)
print(df.head())

(Screenshot: part of the output.)
