本文作为学习笔记参考用:

【1】批量抓取代理ip:

找到第三方ip代理的网站,进行分析,并批量抓取,抓取程序放到Proxies_spider.py中,如下所示:

import re

import requests

from retrying import retry

class proxies_spider:

def __init__(self):

self.url_temp = "https://www.freeip.top/?page={}&protocol=https"

self.headers = {

"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/79.0.3945.130 Safari/537.36"

}

self.ip_lists = []

def clear_data(self, res):

mr = re.findall("<td>(.*?)</td>", res)

# print(mr)

i = 0

while True:

if i >= len(mr):

break

self.ip_lists.append(mr[i] + ":" + mr[i + 1])

i += 11

@retry(stop_max_attempt_number=3)

def get_request(self, url):

print("*" * 25)

res = requests.get(url, headers=self.headers, timeout=3).content.decode("utf-8")

return res

def run(self):

# urls = "https://www.freeip.top/?page=1&protocol=https"

# 构造url

num = 1

while True:

url = self.url_temp.format(num)

try:

res = self.get_request(url)

except Exception as e:

print(e)

# 清洗数据,把数据存入列表

self.clear_data(res)

if len(self.ip_lists) % 15 != 0:

break

num += 1

print(len(self.ip_lists))

return self.ip_lists

【2】对代理ip进行验证,并将数据存入到 Proxies.txt中,如下所示:

import requests

from DouBan_Sprider import Proxies_spider

from retrying import retry

import json

class Proxies_vertify:

def __init__(self):

self.url = "https://www.baidu.com"

self.headers = {

"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/79.0.3945.130 Safari/537.36"

}

self.end_ip_lists = []

@retry(stop_max_attempt_number=2)

def request_get(self, ip):

requests.get(self.url, headers=self.headers, proxies=ip, timeout=2)

def run(self):

# 读取数据,测验是否完整

proxies_spider = Proxies_spider.proxies_spider()

ip_list = proxies_spider.run()

for ip in ip_list:

try:

ips = {"https": "https://{}".format(ip)}

self.request_get(ips)

self.end_ip_lists.append(ip)

print("*" * 20)

print(ip)

except Exception as e:

print(e)

print(self.end_ip_lists)

with open("Proxies.txt", "a") as f:

f.write(json.dumps(self.end_ip_lists) + "\n")

return self.end_ip_lists

【3】抓取豆瓣网站影视信息,如下所示:

import requests

import json

from DouBan_Sprider import Proxies_vertify

from retrying import retry

class DouBan_spider:

def __init__(self):

# 初始url

self.url_list_temp = "https://movie.douban.com/j/search_subjects?type=tv&tag=%E7%83%AD%E9%97%A8&sort=recommend&page_start={}"

self.headers = {

"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/79.0.3945.130 Safari/537.36"

}

@retry(stop_max_attempt_number=2)

def get_request(self, url, proxies):

res_json = requests.get(url, headers=self.headers, proxies=proxies, timeout=3, verify=False)

assert res_json.status_code == 200

return res_json.content.decode("utf-8")

def write_data(self, res_list):

with open("douban_sprider.txt", "a", encoding="gbk") as f:

for data in res_list:

rate = data["rate"]

title = data["title"]

url = data["url"]

cover = data["cover"]

f.write("电视剧名称:" + title + ", 影评:" + rate + ", 播放地址:" + url + ", Img_url: " + cover + "\n")

def run(self):

# 下一页变量 num+20

num = 0

count = 0

Vertify = Proxies_vertify.Proxies_vertify()

ip_list = Vertify.run()

while True:

url = self.url_list_temp.format(num)

proxies = {"https": "https://{}".format(ip_list[count])}

count = (count + 1) % len(ip_list)

# proxies = {"https": "https://68.183.235.141:8080"}

try:

res_json = self.get_request(url, proxies)

res_list = json.loads(res_json)["subjects"]

while len(res_list) == 0:

break

# 把数据写入 douban_sprider.txt

self.write_data(res_list)

print("第 " + str(int(num / 20) + 1) + "页")

num += 20

except Exception as e:

print(e)

if __name__ == '__main__':

douban_spider = DouBan_spider()

douban_spider.run()

一次性抓取到的并验证响应时间足够快的代理ip:

["103.28.121.58:3128", "47.99.65.77:3128", "51.254.167.223:3128", "47.100.120.52:3128", "165.227.84.45:3128", "51.158.68.133:8811", "68.183.232.63:3128", "43.255.228.150:3128", "116.196.85.166:3128", "116.196.85.166:3128", "51.158.68.26:8811", "163.172.189.32:8811", "51.158.113.142:8811", "51.158.106.54:8811", "159.203.8.223:3128", "218.60.8.99:3129", "210.22.5.117:3128", "51.158.98.121:8811", "163.172.136.226:8811", "163.172.152.52:8811", "103.28.121.58:80", "128.199.250.116:3128", "136.243.47.220:3128", "89.37.122.216:3129", "210.26.49.88:3128", "51.158.123.35:8811", "128.199.251.126:3128", "51.158.99.51:8811", "180.210.201.57:3130", "178.128.50.214:8080", "138.197.5.192:8888", "121.225.199.78:3128", "121.225.199.78:3128", "138.197.5.192:8888", "163.172.147.94:8811", "118.70.144.77:3128", "128.199.205.90:3128", "167.71.95.30:3128", "51.158.68.68:8811", "47.104.234.32:3128", "120.234.63.196:3128", "122.70.148.66:808", "116.196.85.150:3128", "128.199.227.242:3128", "167.172.135.255:8080", "68.183.123.186:8080", "14.207.26.94:8080", "14.207.124.80:8080", "51.158.108.135:8811", "51.158.111.229:8811", "159.203.44.177:3128", "209.97.174.166:8080", "52.80.58.248:3128"]

也可抓取多次,一次验证,写入文件,抓取网站的时候循环使用列表中的代理ip。

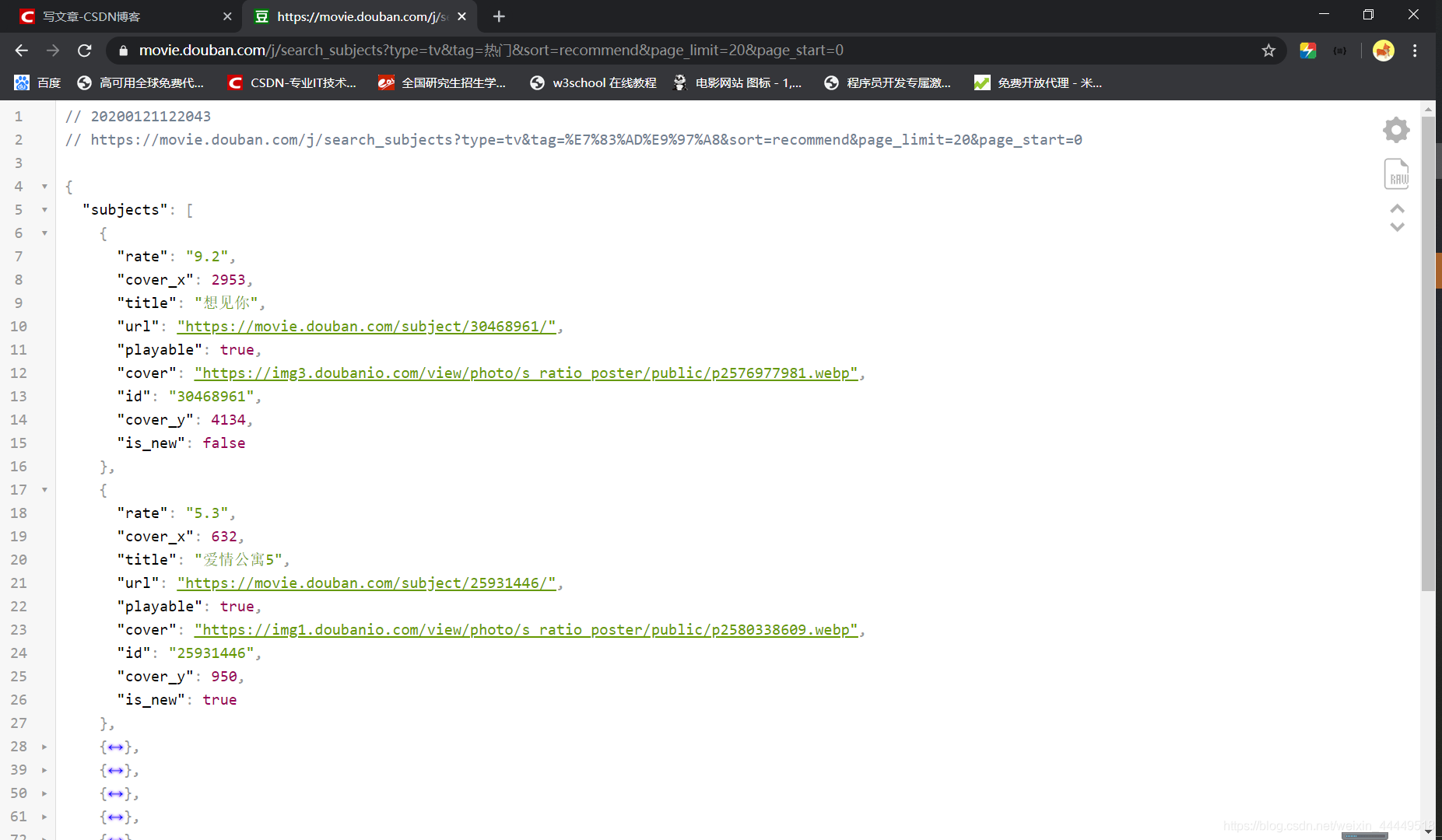

期间用到的Json数据分析的工具为:Chrome的插件——> JSON Viewer

如下图所示分析效果: