Platform: CentOS 7.4 (x86_64)

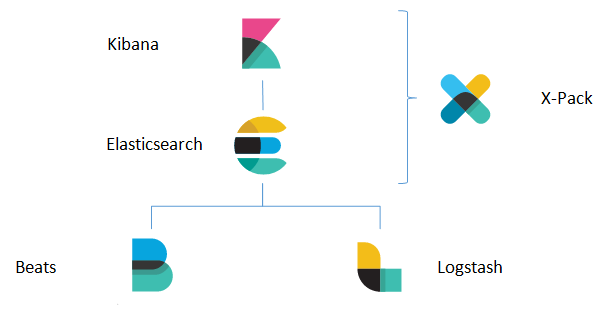

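ELK consists of three components: Elasticsearch, Logstash, and Kibana.

Elasticsearch is an open-source distributed search engine. Its features include distributed operation, automatic node discovery, automatic index sharding, index replication, a RESTful API, multiple data sources, and automatic search load balancing.

Logstash is a fully open-source tool that collects and parses logs, then forwards the filtered data to Elasticsearch.

Kibana is a free, open-source tool that provides a friendly web interface on top of Logstash and Elasticsearch for aggregating, analyzing, and searching important log data.

Beats are open-source, lightweight data shippers for simple, well-defined shipping scenarios. They are installed on the hosts where data is collected and can send it to either Logstash or Elasticsearch.

X-Pack is an extension plugin for Elasticsearch that covers user-based security, cluster monitoring and alerting, report export, and graph exploration. It must be installed separately on the Elasticsearch and Kibana nodes, and it is a paid product.

The stack is commonly used to collect, organize, and display logs produced by applications.

If you want to get hands-on with ELK quickly, this article should help.

The simplest ELK architecture is: filebeat --> elasticsearch --> kibana

Install elasticsearch and kibana on the ELK server, and install filebeat on every other server that produces logs.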

1. Installing Elasticsearch

yum install -y java-1.8.0-openjdk

wget https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-6.0.1.rpm

rpm -ivh elasticsearch-6.0.1.rpm

vi /etc/elasticsearch/elasticsearch.yml

cluster.name: my-app

node.name: elk-1

path.data: /var/lib/elasticsearch

path.logs: /var/log/elasticsearch

network.host: 0.0.0.0

http.port: 9200

systemctl daemon-reload

systemctl enable elasticsearch

systemctl restart elasticsearch

systemctl status elasticsearch

Open http://10.31.44.167:9200 in a browser. If the response ends with the tagline "You Know, for Search", Elasticsearch started successfully.
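The same check can be scripted instead of done in a browser. This is a minimal sketch, assuming `curl` is installed; the helper name `has_es_tagline` is hypothetical and only looks for the tagline in whatever response is piped into it.

```shell
#!/bin/sh
# Hypothetical helper: succeeds if the JSON on stdin contains the
# Elasticsearch tagline "You Know, for Search".
has_es_tagline() {
    grep -q '"tagline"[[:space:]]*:[[:space:]]*"You Know, for Search"'
}

# Example usage against the server from this article (not run here):
#   curl -s http://10.31.44.167:9200 | has_es_tagline && echo "elasticsearch is up"
```

Reading from stdin keeps the helper independent of the server address, so it can also be pointed at a saved response.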

2. Installing Kibana

wget https://artifacts.elastic.co/downloads/kibana/kibana-6.0.1-x86_64.rpm

rpm -ivh kibana-6.0.1-x86_64.rpm

vi /etc/kibana/kibana.yml

server.port: 5601

server.host: "0.0.0.0"

elasticsearch.url: "http://localhost:9200"

kibana.index: ".kibana"

systemctl enable kibana

systemctl start kibana

systemctl status kibana

3. Installing Filebeat on the client

curl -L -O https://artifacts.elastic.co/downloads/beats/filebeat/filebeat-6.0.1-x86_64.rpm

rpm -ivh filebeat-6.0.1-x86_64.rpm

vi /etc/filebeat/filebeat.yml

filebeat.prospectors:

- type: log

  enabled: true

  paths:

    - /var/log/nginx/*.log

filebeat.config.modules:

  path: ${path.config}/modules.d/*.yml

  reload.enabled: false

setup.template.settings:

  index.number_of_shards: 3

tags: [webA]

setup.kibana:

  host: "10.31.44.167:5601"

output.elasticsearch:

  hosts: ["10.31.44.167:9200"]

systemctl enable filebeat

systemctl start filebeat

systemctl status filebeat

Kibana management URL: http://10.31.44.167:5601

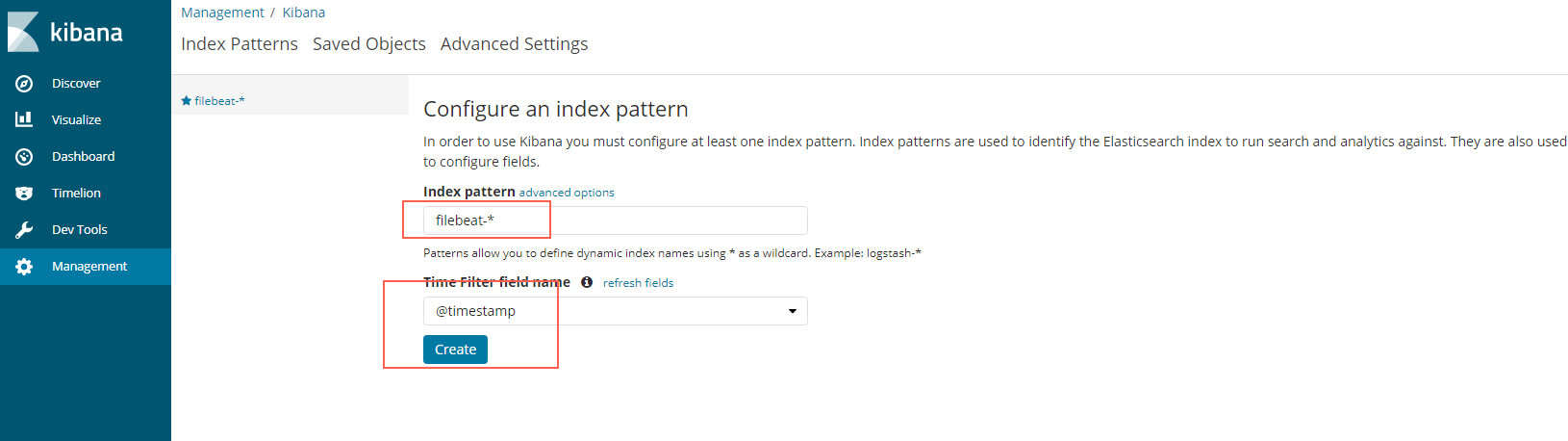

As shown in the screenshot, change the default index pattern logstash-* to filebeat-* and click Create.

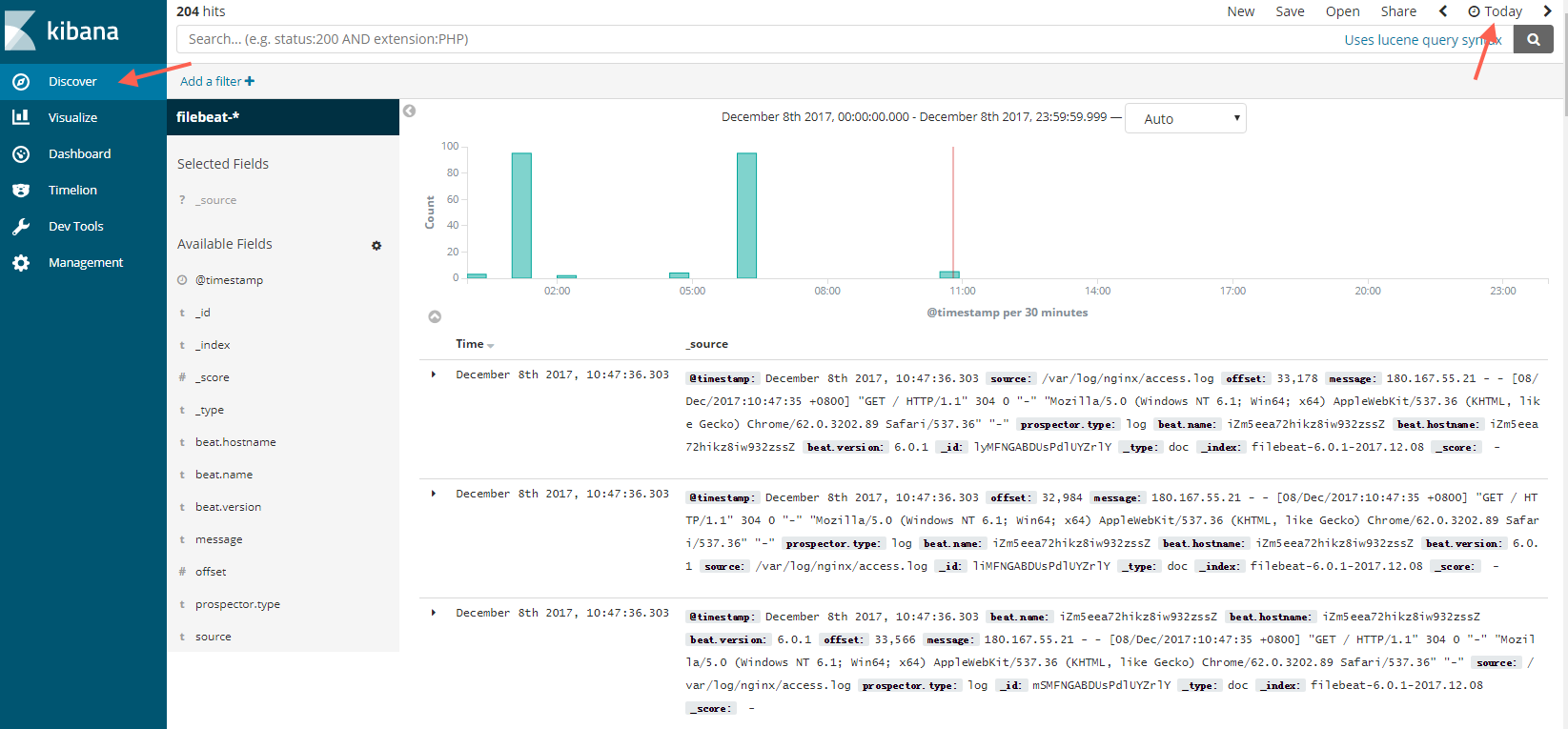

Select "Discover" in the left sidebar to view the collected logs.
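If nothing shows up in Discover, it helps to confirm that Filebeat has actually created indices in Elasticsearch. The sketch below assumes the default column layout of the `_cat/indices` API, where the index name is the third column; the helper name `indices_with_prefix` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: reads `_cat/indices` output on stdin and prints the
# names of the indices that start with the given prefix ($1).
indices_with_prefix() {
    awk -v p="$1" 'index($3, p) == 1 { print $3 }'
}

# Example usage against the server from this article (not run here):
#   curl -s 'http://10.31.44.167:9200/_cat/indices' | indices_with_prefix filebeat-
```

An empty result suggests that Filebeat has not shipped any events yet, so its service status and log are the next things to check.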

The complete ELK pipeline is: filebeat --> logstash --> elasticsearch --> kibana

Filebeat configuration

filebeat.prospectors:

- type: log

  enabled: true

  paths:

    - /var/log/nginx/*.log

filebeat.config.modules:

  path: ${path.config}/modules.d/*.yml

  reload.enabled: false

setup.template.settings:

  index.number_of_shards: 3

tags: [webA]

output.logstash:

  hosts: ["10.31.44.167:5044"]

Logstash configuration

input {

  beats {

    port => 5044

  }

}

output {

  elasticsearch {

    hosts => ["10.31.44.167:9200"]

    index => "test"

  }

}

Then, under Index Patterns in Kibana, add the index name configured in Logstash (test in this example). Once the corresponding log entries appear, the pipeline works.

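For security, the traffic between filebeat and logstash can be encrypted as follows:

Use openssl to generate a self-signed certificate in the current directory on both the filebeat host and the logstash host (on the logstash host, use logstash.key and logstash.crt as the file names). The -x509 option makes openssl write a certificate rather than a certificate signing request:

openssl req -x509 -subj '/CN=10.31.44.167/' -days $((100*365)) -batch -nodes -newkey rsa:2048 -keyout ./filebeat.key -out ./filebeat.crt

Move each generated certificate to /etc/pki/tls/certs/ and each private key to /etc/pki/tls/private/.

Finally, exchange the .crt files: copy filebeat.crt to the logstash host and logstash.crt to the filebeat host.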
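The certificate generation can be wrapped in a small function so that the same command serves both hosts. This is a sketch only: the function name `make_self_signed`, its arguments, and the `filebeat-host` placeholder are hypothetical. Depending on the Filebeat version, connecting to Logstash by IP address may also require that IP in the certificate's subjectAltName, which is not covered here.

```shell
#!/bin/sh
# Hypothetical helper: create a self-signed certificate and private key.
#   $1 = base file name (e.g. filebeat or logstash)
#   $2 = value for the certificate CN
#   $3 = output directory
make_self_signed() {
    # -x509 makes openssl emit a certificate instead of a signing request.
    openssl req -x509 -subj "/CN=$2/" -days $((100*365)) -batch -nodes \
        -newkey rsa:2048 -keyout "$3/$1.key" -out "$3/$1.crt" 2>/dev/null
}

# Example usage (not run here); "filebeat-host" is a placeholder:
#   make_self_signed filebeat filebeat-host .     # on the filebeat host
#   make_self_signed logstash 10.31.44.167 .      # on the logstash host
```

Only the .crt files need to be copied between hosts; each .key file stays on the machine that generated it.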

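Filebeat configuration (the ssl block goes under output.logstash; Filebeat 6.x uses ssl and key where older releases used tls and certificate_key)

ssl:

  ## Self-signed certificate of the Logstash server.

  certificate_authorities: ["/etc/pki/tls/certs/logstash.crt"]

  certificate: "/etc/pki/tls/certs/filebeat.crt"

  key: "/etc/pki/tls/private/filebeat.key"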

Logstash configuration

input {

  beats {

    port => 5044

    ssl => true

    ssl_certificate_authorities => ["/etc/pki/tls/certs/filebeat.crt"]

    ssl_certificate => "/etc/pki/tls/certs/logstash.crt"

    ssl_key => "/etc/pki/tls/private/logstash.key"

    ssl_verify_mode => "force_peer"

  }

}
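Because certificates and keys are copied between two hosts, a mismatched pair is an easy mistake to make. Before restarting the services, a quick check like the following can confirm that a certificate and a private key belong together. It is a sketch assuming RSA keys, as generated earlier; the helper name `cert_matches_key` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: succeeds if the certificate ($1) and the RSA private
# key ($2) share the same modulus, i.e. they form a matching pair.
cert_matches_key() {
    cert_mod=$(openssl x509 -noout -modulus -in "$1" 2>/dev/null) || return 1
    key_mod=$(openssl rsa -noout -modulus -in "$2" 2>/dev/null) || return 1
    [ -n "$cert_mod" ] && [ "$cert_mod" = "$key_mod" ]
}

# Example usage on the logstash host (not run here):
#   cert_matches_key /etc/pki/tls/certs/logstash.crt /etc/pki/tls/private/logstash.key \
#       && echo "logstash certificate and key match"
```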